Wave-Mamba: Wavelet State Space Model for Ultra-High-Definition Low-Light Image Enhancement (ACMMM2024)

This is the official implementation of Wave-Mamba: Wavelet State Space Model for Ultra-High-Definition Low-Light Image Enhancement (ACM MM 2024).

Wenbin Zou*, Hongxia Gao ✉️, Weipeng Yang, and Tongtong Liu

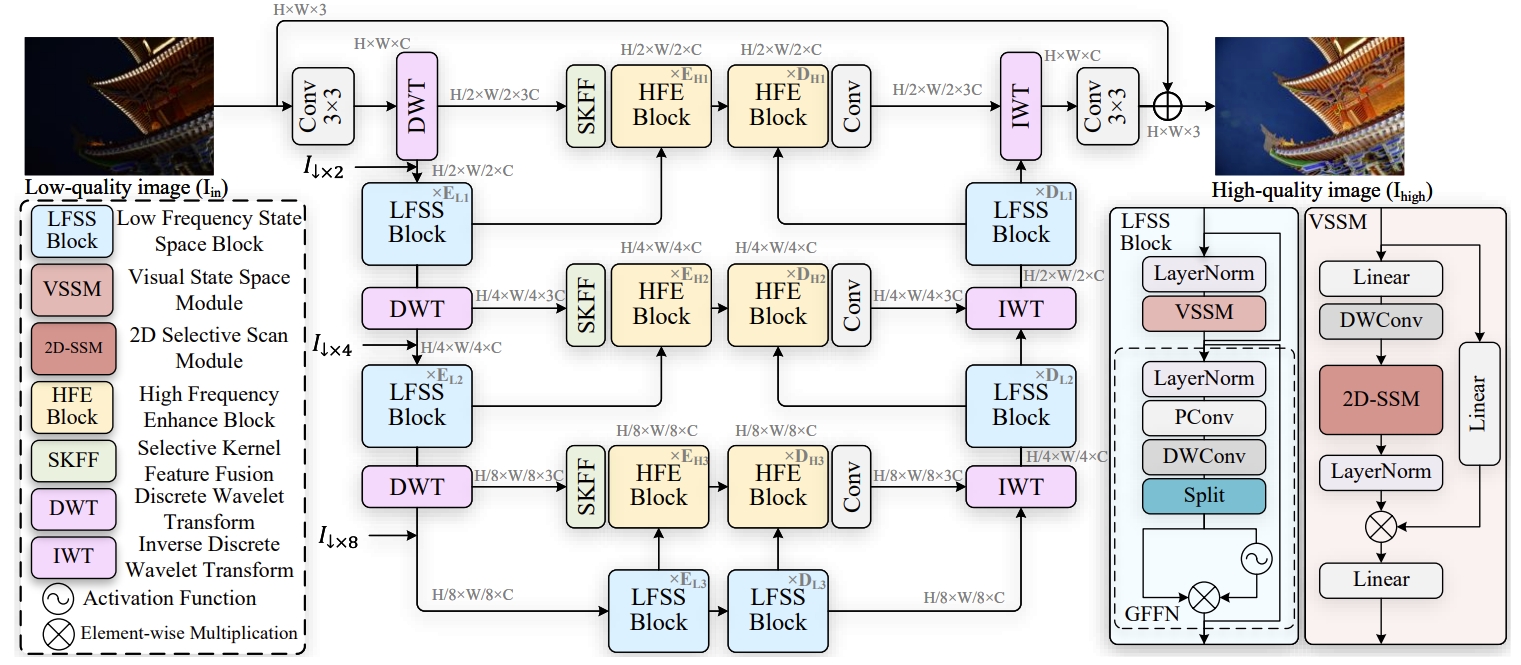

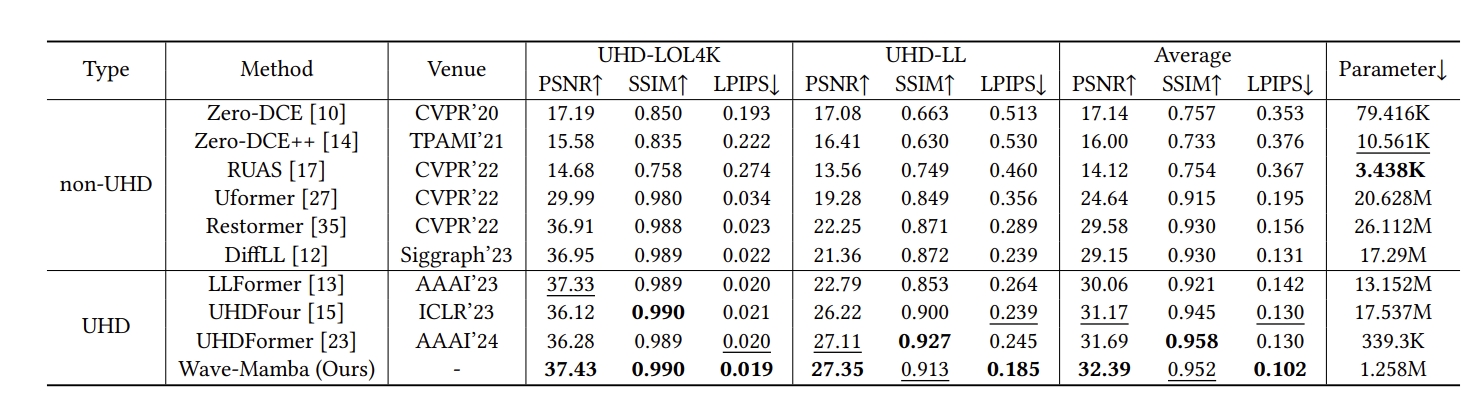

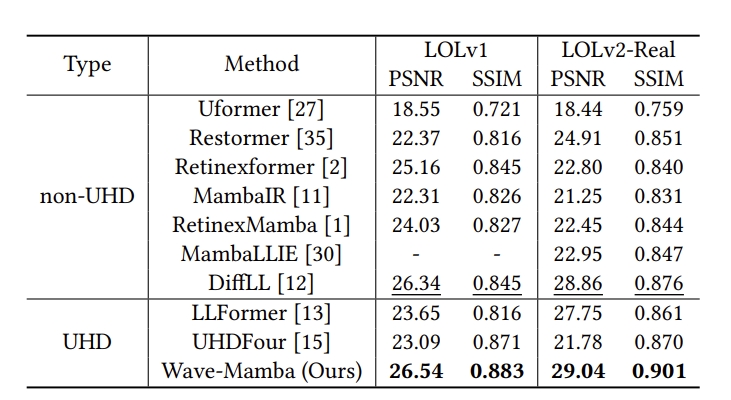

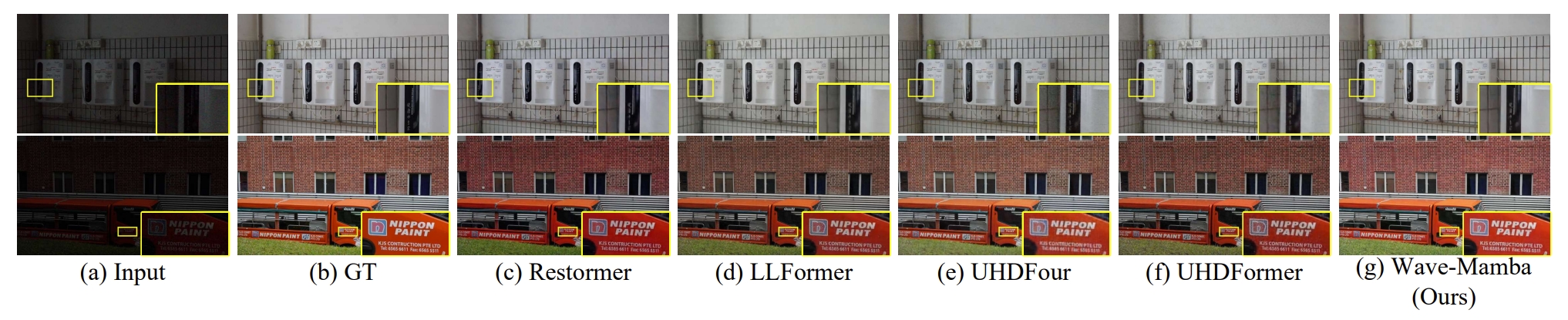

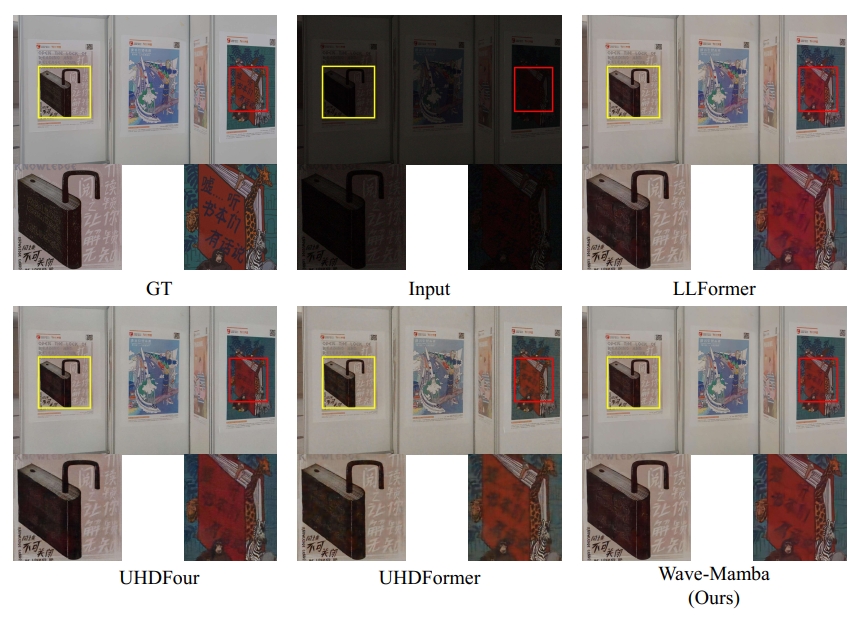

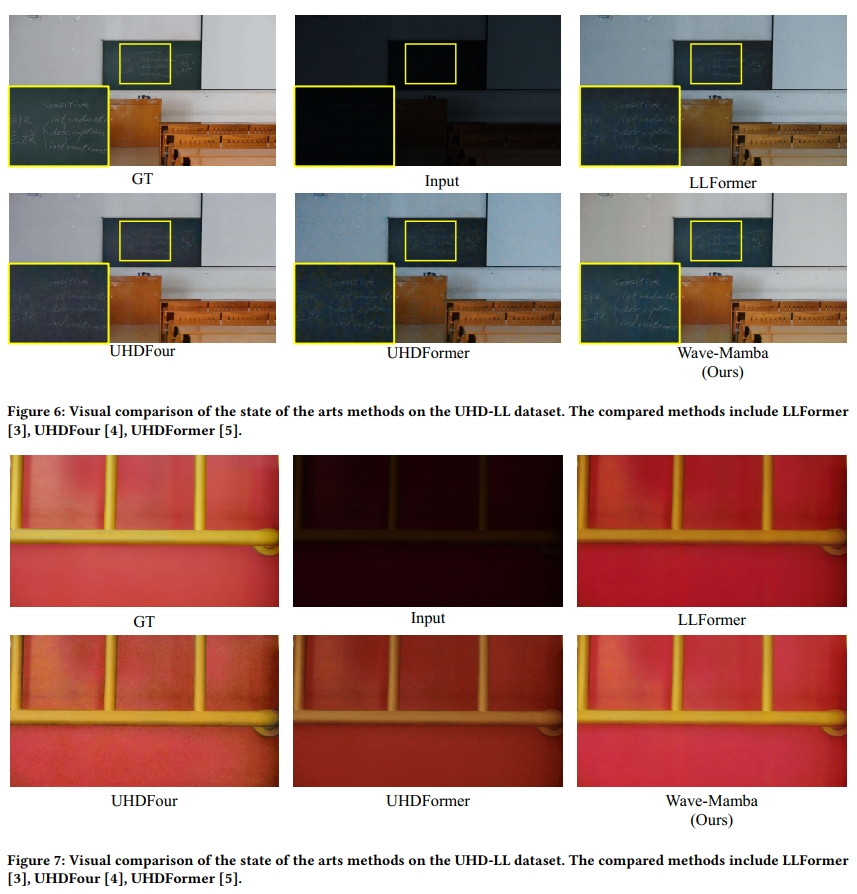

Abstract: Ultra-high-definition (UHD) technology has attracted widespread attention for its exceptional visual quality, but it also poses new challenges for low-light image enhancement (LLIE). Because UHD images are computationally expensive to process, existing UHD LLIE methods rely on high-magnification downsampling to reduce computational cost, which in turn causes information loss. The wavelet transform, by contrast, allows downsampling without loss of information and also separates image content from noise. This keeps state space models (SSMs) from being disrupted by noise when modeling long sequences, so their long-sequence modeling capability can be fully exploited. On this basis, we propose Wave-Mamba, a novel approach built on two key insights from the wavelet domain: 1) most of an image's content information resides in the low-frequency component, with less in the high-frequency component; 2) the high-frequency component has minimal influence on the outcome of low-light enhancement. Specifically, to efficiently model global content information in UHD images, we propose a low-frequency state space block (LFSSBlock), which modifies SSMs to focus on restoring the information in the low-frequency sub-bands. We further propose a high-frequency enhance block (HFEBlock) for the high-frequency sub-bands, which uses the enhanced low-frequency information to correct the high-frequency information and effectively restore accurate high-frequency details. Comprehensive evaluations show that our method significantly outperforms current leading techniques while maintaining a more streamlined architecture.
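The claim that wavelet downsampling loses no information can be checked on a toy example. Below is a minimal pure-Python sketch of a single-level orthonormal 2D Haar transform. The Haar wavelet is assumed purely for illustration; this README does not say which wavelet or DWT implementation the released code uses, and `haar_dwt2`, `haar_idwt2` and `energy` are illustrative names, not functions from the repository. Each 2x2 block is mapped to one low-frequency (LL) coefficient and three detail coefficients, so every sub-band has half the input's height and width, yet the inverse transform recovers the input exactly.

```python
def haar_dwt2(img):
    """Single-level orthonormal 2D Haar DWT of a 2D list with even height and width.

    Illustrative sketch (Haar assumed). Returns (ll, d1, d2, d3): the
    low-frequency sub-band and three detail sub-bands, each half the input's
    height and width. Detail-band names (LH/HL/HH) differ between libraries,
    so they are simply numbered here.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    ll, d1, d2, d3 = [], [], [], []
    for i in range(0, h, 2):
        r_ll, r_d1, r_d2, r_d3 = [], [], [], []
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]          # top row of the 2x2 block
            c, d = img[i + 1][j], img[i + 1][j + 1]  # bottom row of the 2x2 block
            r_ll.append((a + b + c + d) / 2)  # scaled block average (low frequency)
            r_d1.append((a + b - c - d) / 2)  # top minus bottom
            r_d2.append((a - b + c - d) / 2)  # left minus right
            r_d3.append((a - b - c + d) / 2)  # diagonal difference
        ll.append(r_ll)
        d1.append(r_d1)
        d2.append(r_d2)
        d3.append(r_d3)
    return ll, d1, d2, d3


def haar_idwt2(ll, d1, d2, d3):
    """Inverse of haar_dwt2: rebuild the full-resolution image from four sub-bands."""
    h2, w2 = len(ll), len(ll[0])
    img = [[0.0] * (2 * w2) for _ in range(2 * h2)]
    for i in range(h2):
        for j in range(w2):
            s, x1, x2, x3 = ll[i][j], d1[i][j], d2[i][j], d3[i][j]
            img[2 * i][2 * j] = (s + x1 + x2 + x3) / 2
            img[2 * i][2 * j + 1] = (s + x1 - x2 - x3) / 2
            img[2 * i + 1][2 * j] = (s - x1 + x2 - x3) / 2
            img[2 * i + 1][2 * j + 1] = (s - x1 - x2 + x3) / 2
    return img


def energy(x):
    """Sum of squared values; preserved by an orthonormal transform."""
    return sum(v * v for row in x for v in row)


if __name__ == "__main__":
    # Toy 4x4 "image": a smooth ramp, chosen only for illustration.
    img = [[float(r + c) for c in range(4)] for r in range(4)]
    bands = haar_dwt2(img)
    rec = haar_idwt2(*bands)
    max_err = max(abs(rec[i][j] - img[i][j]) for i in range(4) for j in range(4))
    total = sum(energy(b) for b in bands)
    print("LL size:", len(bands[0]), "x", len(bands[0][0]))
    print("max reconstruction error:", max_err)
    print("share of energy in LL:", energy(bands[0]) / total)
```

On this smooth ramp, 176 of the 184 units of energy (about 96%) land in the LL sub-band, which loosely echoes the first insight above; real images, and whatever wavelet the model actually uses, will behave differently.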

- Testing Code & Checkpoint

- Model.py

- Train.py

- Ubuntu >= 22.04

- CUDA >= 11.8

- PyTorch >= 2.0.1

- Other required packages in `requirements.txt`

```shell
cd WaveMamba

# create a new anaconda env
conda create -n wavemamba python=3.8
conda activate wavemamba

# install python dependencies
pip3 install -r requirements.txt
python setup.py develop
```
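After installation, a small version check can compare the environment against the PyTorch and CUDA requirements listed above. This is a sketch: `parse_version` and `at_least` are hypothetical helpers, not part of the repository, and the check only inspects PyTorch's reported version and the CUDA version PyTorch was built with (`torch.version.cuda`), not the system CUDA toolkit or the OS version.

```python
def parse_version(v):
    """Turn a version string like '2.0.1+cu118' or '2.1.0rc1' into (2, 0, 1) / (2, 1, 0).

    Local tags after '+' are dropped, and each dot-separated piece keeps only
    its leading digits (hypothetical helper, simplified on purpose).
    """
    parts = []
    for piece in v.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def at_least(found, required):
    """True if version `found` >= `required`, padding the shorter one with zeros."""
    f, r = parse_version(found), parse_version(required)
    n = max(len(f), len(r))
    f += (0,) * (n - len(f))
    r += (0,) * (n - len(r))
    return f >= r


def main():
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
        return
    ok = at_least(torch.__version__, "2.0.1")
    print(f"PyTorch {torch.__version__}: {'ok' if ok else 'older than 2.0.1'}")
    cuda = torch.version.cuda  # None for CPU-only builds
    if cuda is None:
        print("PyTorch was built without CUDA")
    else:
        print(f"built with CUDA {cuda}: {'ok' if at_least(cuda, '11.8') else 'older than 11.8'}")
    print("CUDA device available:", torch.cuda.is_available())


if __name__ == "__main__":
    main()
```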

Datasets: [LOL, UHDLL, UHDLOL4K](https://drive.google.com/drive/folders/1ifB1g7fRCPqPTKyq366lzd_zDkA2j297?usp=sharing)

To test, run:

```shell
bash test.sh
# or
python inference_wavemamba_psnr_ssim.py
```
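The script name suggests that `inference_wavemamba_psnr_ssim.py` reports PSNR and SSIM. The settings it uses (data range, colour space, border cropping) are not given in this README, so the following is only a minimal sketch of the standard PSNR definition, PSNR = 10 * log10(MAX^2 / MSE), for single-channel images stored as nested lists. `psnr` is an illustrative function, not the repository's implementation, and SSIM is not covered.

```python
import math


def psnr(pred, target, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2D images.

    max_val is the largest possible pixel value (255 for 8-bit, 1.0 for
    images scaled to [0, 1]). Identical images give infinite PSNR.
    """
    flat_p = [v for row in pred for v in row]
    flat_t = [v for row in target for v in row]
    if len(flat_p) != len(flat_t):
        raise ValueError("images must have the same number of pixels")
    mse = sum((p - t) ** 2 for p, t in zip(flat_p, flat_t)) / len(flat_p)
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_val ** 2 / mse)


if __name__ == "__main__":
    # Images in [0, 1] with a uniform error of 0.1: MSE = 0.01, so PSNR is about 20 dB.
    print(psnr([[0.1, 0.6]], [[0.0, 0.5]], max_val=1.0))
```

Because PSNR depends on `max_val` and on any cropping or colour conversion applied first, numbers from this sketch are not directly comparable with the script's reported values.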

To train, run:

```shell
bash train.sh
# or, launching two processes on GPUs 0 and 1:
CUDA_VISIBLE_DEVICES=0,1 python -m torch.distributed.launch --nproc_per_node=2 --master_port=4324 basicsr/train.py -opt options/train_wavemaba_uhdll.yml --launcher pytorch
```
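The distributed command above starts one process per GPU listed in `CUDA_VISIBLE_DEVICES`, so `--nproc_per_node` is normally kept equal to the number of listed GPUs. A hypothetical helper (not part of the repository) that builds the same command for an arbitrary GPU list might look like this; it only assembles and prints the command, it does not run training.

```python
import shlex


def build_train_command(gpu_ids, opt_path, master_port=4324):
    """Return (env, argv) for distributed training on the given GPU ids.

    Hypothetical helper: mirrors the README command, with --nproc_per_node
    set to the number of GPUs so one process is started per visible GPU.
    """
    if not gpu_ids:
        raise ValueError("at least one GPU id is required")
    env = {"CUDA_VISIBLE_DEVICES": ",".join(str(g) for g in gpu_ids)}
    argv = [
        "python", "-m", "torch.distributed.launch",
        f"--nproc_per_node={len(gpu_ids)}",
        f"--master_port={master_port}",
        "basicsr/train.py", "-opt", opt_path,
        "--launcher", "pytorch",
    ]
    return env, argv


def to_shell(env, argv):
    """Render environment variables and argv as a single shell command line."""
    prefix = " ".join(f"{k}={shlex.quote(v)}" for k, v in env.items())
    return f"{prefix} {shlex.join(argv)}"


if __name__ == "__main__":
    # Hypothetical 4-GPU setup; prints the command instead of running it.
    env, argv = build_train_command([0, 1, 2, 3], "options/train_wavemaba_uhdll.yml")
    print(to_shell(env, argv))
```

To launch from Python instead of a shell, the returned values could be passed to `subprocess.run(argv, env={**os.environ, **env}, check=True)`.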

```bibtex
@inproceedings{zou2024wavemamba,
  title={Wave-Mamba: Wavelet State Space Model for Ultra-High-Definition Low-Light Image Enhancement},
  author={Wenbin Zou and Hongxia Gao and Weipeng Yang and Tongtong Liu},
  booktitle={ACM Multimedia 2024},
  year={2024},
  url={https://openreview.net/forum?id=oQahsz6vWe}
}
```

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

This project is based on BasicSR.