Code: https://github.com/AmbiqAI/neuralSPOT

Documentation: https://ambiqai.github.io/neuralSPOT

NeuralSPOT is a full-featured AI SDK and toolkit optimized for Ambiq's Apollo family of ultra-low-power SoCs. It is open-source, real-time, and OS-agnostic. It was designed with TensorFlow Lite for Microcontrollers in mind, but works with any AI runtime.

NeuralSPOT is designed to help embedded AI developers in three important ways:

- Initial development and fine-tuning of their AI model: neuralSPOT offers tools to rapidly characterize the performance and size of a TFLite model on Ambiq processors.

- Rapid AI feature prototyping: neuralSPOT's library of easy-to-use drivers, feature extractors, helper functions, and communication mechanisms accelerates the development of stand-alone AI feature applications, so the model can be tested in real-world situations with real-world data and latencies.

- AI model library export: once an AI model has been developed and refined via prototyping, neuralSPOT allows one-click deployment of a static library implementing the AI model, suitable for linking into larger embedded applications.

NeuralSPOT wraps an AI-centric API around AmbiqSuite SDK (Ambiq's hardware abstraction layer) to ease common tasks such as sensing, computing features from the sensor data, performance profiling, and controlling Ambiq's many on-board peripherals.

NOTE: for detailed compatibility notes, see the features document.

- Hardware

- Ambiq EVB: at least one of Apollo4 Plus, Apollo4 Blue Plus, Apollo4 Lite, Apollo4 Blue Lite, or Apollo3 Blue Plus (https://ambiq.com/apollo3-blue-plus/).

- Energy Measurement (optional): Joulescope JS110 or JS220 (only needed for automated model energy measurements)

- Software

- Segger J-Link 7.88+

- Compilers: at least one of...

- GNU Make

- Python 3.11+
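Part of the software list above can be checked before building. The following is a minimal sketch and not part of neuralSPOT: the helper name `missing_prereqs` is hypothetical, and it only covers the Python 3.11+ and GNU Make requirements. It does not verify the J-Link version or the compiler.

```python
import shutil
import sys


def missing_prereqs(version_info=sys.version_info, which=shutil.which):
    """Return a list of problems found; an empty list means both checks passed.

    Hypothetical helper: checks only Python 3.11+ and that `make` is on PATH.
    """
    major_minor = tuple(version_info[:2])
    problems = []
    if major_minor < (3, 11):
        problems.append("Python 3.11+ required, found %d.%d" % major_minor)
    if which("make") is None:
        problems.append("make not found on PATH")
    return problems
```

Calling `missing_prereqs()` with no arguments inspects the running interpreter and the current PATH; the parameters exist so the checks can be exercised without changing the environment.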

NeuralSPOT makes it easy to build and deploy your first AI model on Ambiq's EVBs. Before deploying, connect an Ambiq EVB (the following example defaults to Apollo4 Plus).

git clone git@github.com:AmbiqAI/neuralSPOT.git

cd neuralSPOT

make clean

make -j # makes everything

make deploy # flashes the default example to EVB

make view # connects to the J-Link SWO interface to show printf output from EVB

The make system is highly configurable and flexible - see our makefile options document for more details.

See our Windows application note for Windows-specific considerations.

NeuralSPOT includes tools to automatically analyze, build, characterize, and package TFLite models. Autodeploy makes use of RPC, so it needs both USB ports to be connected (one for J-Link and one for RPC).

Two caveats:

- Autodeploy for EVBs without a second USB port requires special attention - see the Apollo4 Lite section of this document.

- RPC uses one of the PC's serial ports - these are named differently depending on the OS. The following example defaults to Linux/Mac; see this document for finding the serial port, and add --tty <your port> to use that instead.
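As a rough illustration of how serial port names differ by OS, the sketch below lists likely device paths. It is not part of neuralSPOT: the helper name `candidate_ports` is hypothetical, and the glob patterns are common naming conventions for USB serial devices on Linux and macOS, assumed here for illustration. The port your EVB actually uses may differ. Windows ports (COMx) are not files that can be globbed, so the sketch returns an empty list there.

```python
import glob
import platform

# Assumed naming conventions for USB serial devices; your EVB's port may differ.
PORT_PATTERNS = {
    "Linux": ["/dev/ttyACM*", "/dev/ttyUSB*"],
    "Darwin": ["/dev/tty.usbmodem*", "/dev/tty.usbserial*"],
}


def candidate_ports(system=None, lister=glob.glob):
    """Return sorted candidate serial device paths for the given OS name."""
    system = system or platform.system()
    ports = []
    for pattern in PORT_PATTERNS.get(system, []):
        ports.extend(lister(pattern))
    return sorted(ports)
```

If more than one candidate is returned, unplugging the RPC USB cable and listing again is a simple way to see which entry disappears.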

cd .../neuralSPOT # neuralSPOT's root directory

python setup.py install

cd tools

python -m ns_autodeploy --tflite-filename=mymodel.tflite --model-name mymodel # add --tty COMx for Windows

This one invocation will:

- Analyze the specified TFLite model file to determine needed ops, input and output tensors, etc.

- Convert the TFLite model into a C file, wrap it in a baseline neuralSPOT application, and flash it to an EVB.

- Perform an initial characterization over USB using RPC and use the results to fine-tune the application's memory allocation, then flash the fine-tuned version.

- Run invoke() both locally and on the EVB with the same input data, compare the results, and profile the performance of invoke() on the EVB.

- Create a static library (with headers) containing the model, TFLM, and a minimal wrapper to ease integration into applications.

- Create a simple AmbiqSuite example suitable for copying into AmbiqSuite's example directory.

Autodeploy is highly configurable and also capable of automatically measuring the power used by inference (if a Joulescope is available) - see the reference guide and application note for more details.
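When autodeploy is driven from a script rather than typed by hand, the invocation above can be assembled as an argument list. This is a minimal sketch: the helper name `autodeploy_command` is hypothetical, and it uses only the three flags shown in this README (`--tflite-filename`, `--model-name`, `--tty`). Other options are covered in the reference guide.

```python
import sys


def autodeploy_command(tflite_filename, model_name, tty=None, python=sys.executable):
    """Build the argument list for the ns_autodeploy invocation shown above."""
    cmd = [
        python, "-m", "ns_autodeploy",
        f"--tflite-filename={tflite_filename}",
        "--model-name", model_name,
    ]
    if tty is not None:
        # --tty selects the serial port used for RPC, e.g. COMx on Windows.
        cmd += ["--tty", tty]
    return cmd
```

The resulting list can be passed to `subprocess.run(cmd, cwd=tools_dir, check=True)`, where `tools_dir` is the path to the `tools` directory of your neuralSPOT checkout, matching the `cd tools` step above.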

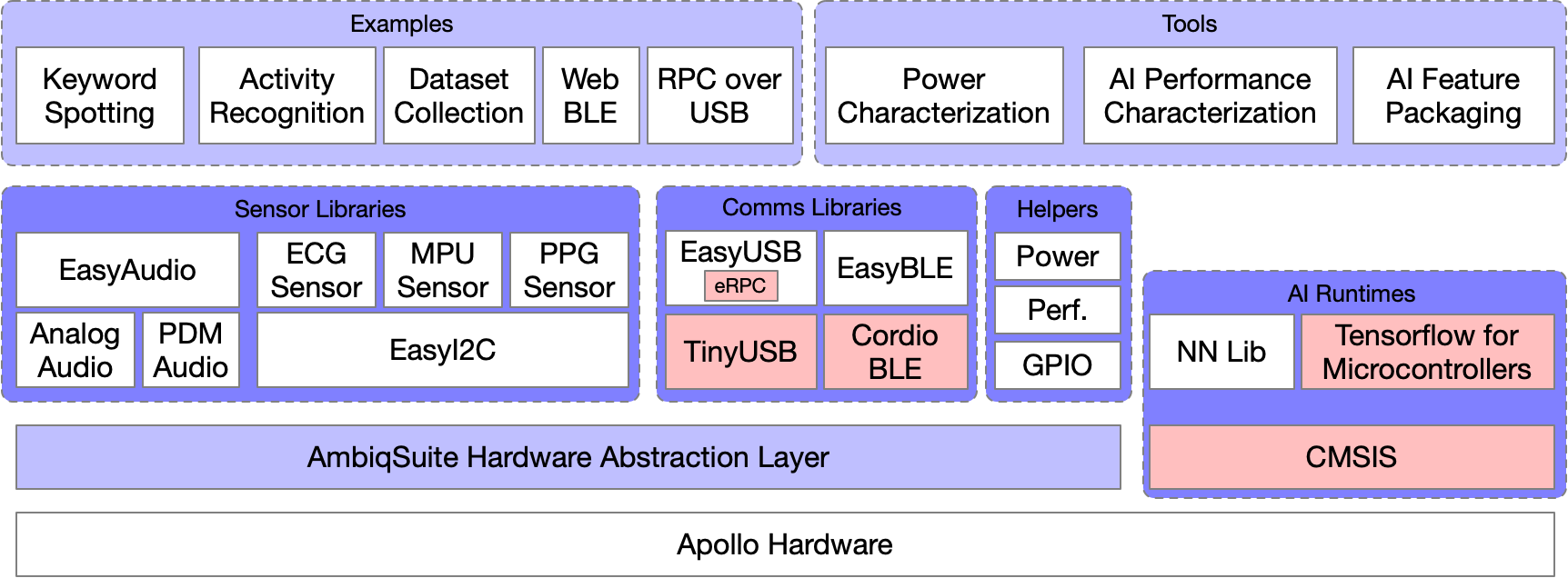

NeuralSPOT consists of the neuralspot libraries, required external components, tools, and examples.

The directory structure reflects the code structure:

/neuralspot - contains all code for NeuralSPOT libraries

/neuralspot # Sensor, communications, and helper libraries

/extern # External dependencies, including TF and AmbiqSuite

/examples # Example applications, each of which can be compiled to a deployable binary

/projects # Examples of how to integrate external projects such as EdgeImpulse models

/make # Makefile helpers, including neuralspot-config.mk and local_overrides.mk

/tools # AutoDeploy and RPC python-based tools

/tests # Simple compatibility tests

/docs # introductory documents, guides, and release notes