Rofunc: The Full Process Python Package for Robot Learning from Demonstration and Robot Manipulation

Repository address: https://github.com/Skylark0924/Rofunc

Documentation: https://rofunc.readthedocs.io/

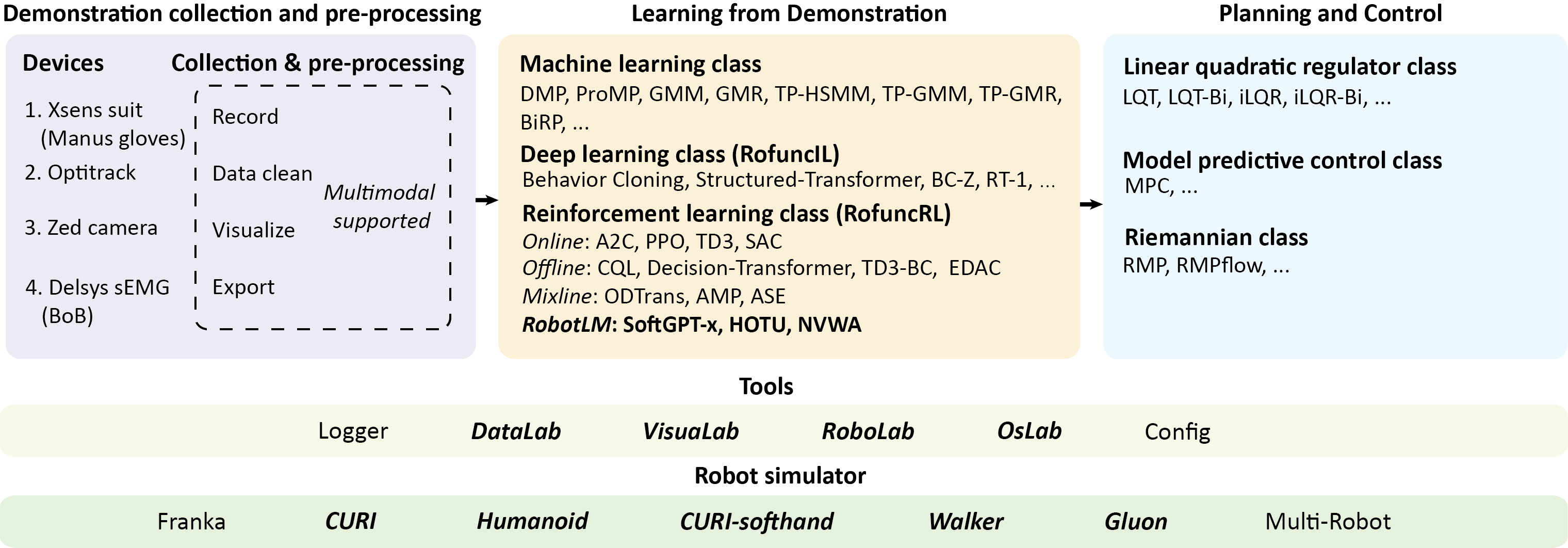

Rofunc focuses on Imitation Learning (IL), Reinforcement Learning (RL), and Learning from Demonstration (LfD) for (humanoid) robot manipulation. It provides convenient Python functions for demonstration collection, data pre-processing, LfD algorithms, planning, and control. It also provides robot simulators based on IsaacGym and OmniIsaacGym for evaluation. The package aims to advance the field by building a full-process toolkit and validation platform that simplifies and standardizes demonstration data collection, processing, learning, and deployment on robots.
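To make the "full-process" idea concrete, here is a minimal, standard-library-only sketch of the stages named above: collecting demonstrations, pre-processing them to a common length, and learning a reference trajectory. It does not use Rofunc's API. The function names `resample` and `learn_mean_trajectory` are hypothetical, the demonstrations are synthetic, and a plain per-step average stands in for the LfD algorithms the package actually provides.

```python
from statistics import fmean


def resample(traj, n):
    """Pre-processing: linearly resample a 1-D trajectory to n points."""
    if n < 2:
        raise ValueError("n must be at least 2")
    if len(traj) == 1:
        return [traj[0]] * n
    out = []
    for i in range(n):
        # Position of the i-th output sample on the input index axis.
        pos = i * (len(traj) - 1) / (n - 1)
        lo = int(pos)
        hi = min(lo + 1, len(traj) - 1)
        frac = pos - lo
        out.append(traj[lo] * (1 - frac) + traj[hi] * frac)
    return out


def learn_mean_trajectory(demos, n=5):
    """Learning (stand-in): average the time-aligned demonstrations."""
    aligned = [resample(d, n) for d in demos]
    return [fmean(step) for step in zip(*aligned)]


# Collection (synthetic): three demonstrations of the same motion,
# recorded with different numbers of samples.
demos = [
    [0.0, 1.0, 2.0],
    [0.0, 0.5, 1.0, 1.5, 2.0],
    [0.0, 2.0],
]

# The learned reference would then be handed to a planner or controller.
reference = learn_mean_trajectory(demos, n=5)
print(reference)
```

In a real workflow each stage would be replaced by the corresponding Rofunc module described in the documentation; the sketch only shows how the stages hand data to one another.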


- [2024-01-24] 🚀 CURI Synergy-based Softhand grasping tasks can now be trained with RofuncRL.
- [2023-12-24] 🚀 Dexterous hand (Shadow Hand, Allegro Hand, qbSofthand) tasks can now be trained with RofuncRL.
- [2023-12-03] 🖼️ Segment-Anything (SAM) is supported in an interactive mode; see the examples in Visualab (segment anything, segment with prompt).
- [2023-10-31] 🚀 RofuncRL, a modular, easy-to-use Reinforcement Learning sub-package designed for robot learning tasks, is released. It has been tested with simulators such as OpenAIGym, IsaacGym, and OmniIsaacGym (see the example gallery), and with differentiable simulators such as PlasticineLab and DiffCloth.
- ...
- For more update news, please refer to the changelog.

Please refer to the installation guide.
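After following the installation guide, a quick way to confirm that the package is visible to your Python environment is to query its installed version. The sketch below uses only the standard library. It assumes the distribution is registered under the name `rofunc`; the helper name `installed_version` is hypothetical.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# "rofunc" is the assumed distribution name.
version = installed_version("rofunc")
if version is None:
    print("rofunc is not installed; see the installation guide.")
else:
    print(f"rofunc {version} is installed.")
```

This only checks that the package metadata is present. It does not verify simulator dependencies such as IsaacGym or OmniIsaacGym, which the installation guide covers.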

To give you a quick overview of the Rofunc pipeline, we provide an example of learning to play Taichi from human demonstration. You can find it in the Quick start section of the documentation.

The available functions and plans can be found as follows.

Note: ✅ Achieved, 🔃 Reformatting, ⛔ TODO

RofuncRL is one of the most important sub-packages of Rofunc. It is a modular, easy-to-use Reinforcement Learning sub-package designed for robot learning tasks. It has been tested with simulators such as OpenAIGym, IsaacGym, and OmniIsaacGym (see the example gallery), and with differentiable simulators such as PlasticineLab and DiffCloth. Here is a list of robot tasks trained by RofuncRL:

Note

You can customize your own project based on RofuncRL by following the RofuncRL customize tutorial.

We also provide a RofuncRL-based repository template that generates your own repository, following the RofuncRL structure, with one click.

For more details, please check the documentation for RofuncRL.

The following table lists all supported tasks.

| Tasks | Animation | Performance | ModelZoo |
|---|---|---|---|
| Ant | | ✅ | |
| Cartpole | | | |
| Franka Cabinet | | ✅ | |
| Franka CubeStack | | | |
| CURI Cabinet | | ✅ | |
| CURI CabinetImage | | | |
| CURI CabinetBimanual | | | |
| CURIQbSoftHand SynergyGrasp | | ✅ | |
| Humanoid | | ✅ | |
| HumanoidAMP Backflip | | ✅ | |
| HumanoidAMP Walk | ✅ | | |
| HumanoidAMP Run | | ✅ | |
| HumanoidAMP Dance | | ✅ | |
| HumanoidAMP Hop | | ✅ | |
| HumanoidASE GetupSwordShield | | ✅ | |
| HumanoidASE PerturbSwordShield | | ✅ | |
| HumanoidASE HeadingSwordShield | | ✅ | |
| HumanoidASE LocationSwordShield | | ✅ | |
| HumanoidASE ReachSwordShield | ✅ | | |
| HumanoidASE StrikeSwordShield | | ✅ | |
| BiShadowHand BlockStack | | ✅ | |
| BiShadowHand BottleCap | | ✅ | |
| BiShadowHand CatchAbreast | | ✅ | |
| BiShadowHand CatchOver2Underarm | | ✅ | |
| BiShadowHand CatchUnderarm | | ✅ | |
| BiShadowHand DoorOpenInward | | ✅ | |
| BiShadowHand DoorOpenOutward | | ✅ | |
| BiShadowHand DoorCloseInward | | ✅ | |
| BiShadowHand DoorCloseOutward | | ✅ | |
| BiShadowHand GraspAndPlace | | ✅ | |
| BiShadowHand LiftUnderarm | | ✅ | |
| BiShadowHand HandOver | | ✅ | |
| BiShadowHand Pen | | ✅ | |
| BiShadowHand PointCloud | | | |
| BiShadowHand PushBlock | | ✅ | |
| BiShadowHand ReOrientation | | ✅ | |
| BiShadowHand Scissors | | ✅ | |
| BiShadowHand SwingCup | | ✅ | |
| BiShadowHand Switch | | ✅ | |
| BiShadowHand TwoCatchUnderarm | | ✅ | |

If you use rofunc in a scientific publication, we would appreciate citations to the following paper:

@software{liu2023rofunc,
  title = {Rofunc: The Full Process Python Package for Robot Learning from Demonstration and Robot Manipulation},
  author = {Liu, Junjia and Li, Chenzui and Delehelle, Donatien and Li, Zhihao and Chen, Fei},
  year = {2023},
  publisher = {Zenodo},
  doi = {10.5281/zenodo.10016946},
  url = {https://doi.org/10.5281/zenodo.10016946},
  dimensions = {true},
  google_scholar_id = {0EnyYjriUFMC},
}

- Robot cooking with stir-fry: Bimanual non-prehensile manipulation of semi-fluid objects (IEEE RA-L 2022 | Code)

@article{liu2022robot,
  title={Robot cooking with stir-fry: Bimanual non-prehensile manipulation of semi-fluid objects},
  author={Liu, Junjia and Chen, Yiting and Dong, Zhipeng and Wang, Shixiong and Calinon, Sylvain and Li, Miao and Chen, Fei},
  journal={IEEE Robotics and Automation Letters},
  volume={7},
  number={2},
  pages={5159--5166},
  year={2022},
  publisher={IEEE}
}

- SoftGPT: Learn Goal-oriented Soft Object Manipulation Skills by Generative Pre-trained Heterogeneous Graph Transformer (IROS 2023 | Code coming soon)

@inproceedings{liu2023softgpt,
  title={Softgpt: Learn goal-oriented soft object manipulation skills by generative pre-trained heterogeneous graph transformer},
  author={Liu, Junjia and Li, Zhihao and Lin, Wanyu and Calinon, Sylvain and Tan, Kay Chen and Chen, Fei},
  booktitle={2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  pages={4920--4925},
  year={2023},
  organization={IEEE}
}

- BiRP: Learning Robot Generalized Bimanual Coordination using Relative Parameterization Method on Human Demonstration (IEEE CDC 2023 | Code)

@article{liu2023birp,
  title={BiRP: Learning Robot Generalized Bimanual Coordination using Relative Parameterization Method on Human Demonstration},
  author={Liu, Junjia and Sim, Hengyi and Li, Chenzui and Chen, Fei},
  journal={arXiv preprint arXiv:2307.05933},
  year={2023}
}

Rofunc is developed and maintained by the CLOVER Lab (Collaborative and Versatile Robots Laboratory), CUHK.

We would like to acknowledge the following projects: