arXiv | Primary contact: Yitian Zhang

```bibtex
@article{zhang2023frame,
  title={Frame Flexible Network},
  author={Zhang, Yitian and Bai, Yue and Liu, Chang and Wang, Huan and Li, Sheng and Fu, Yun},
  journal={arXiv preprint arXiv:2303.14817},
  year={2023}
}
```

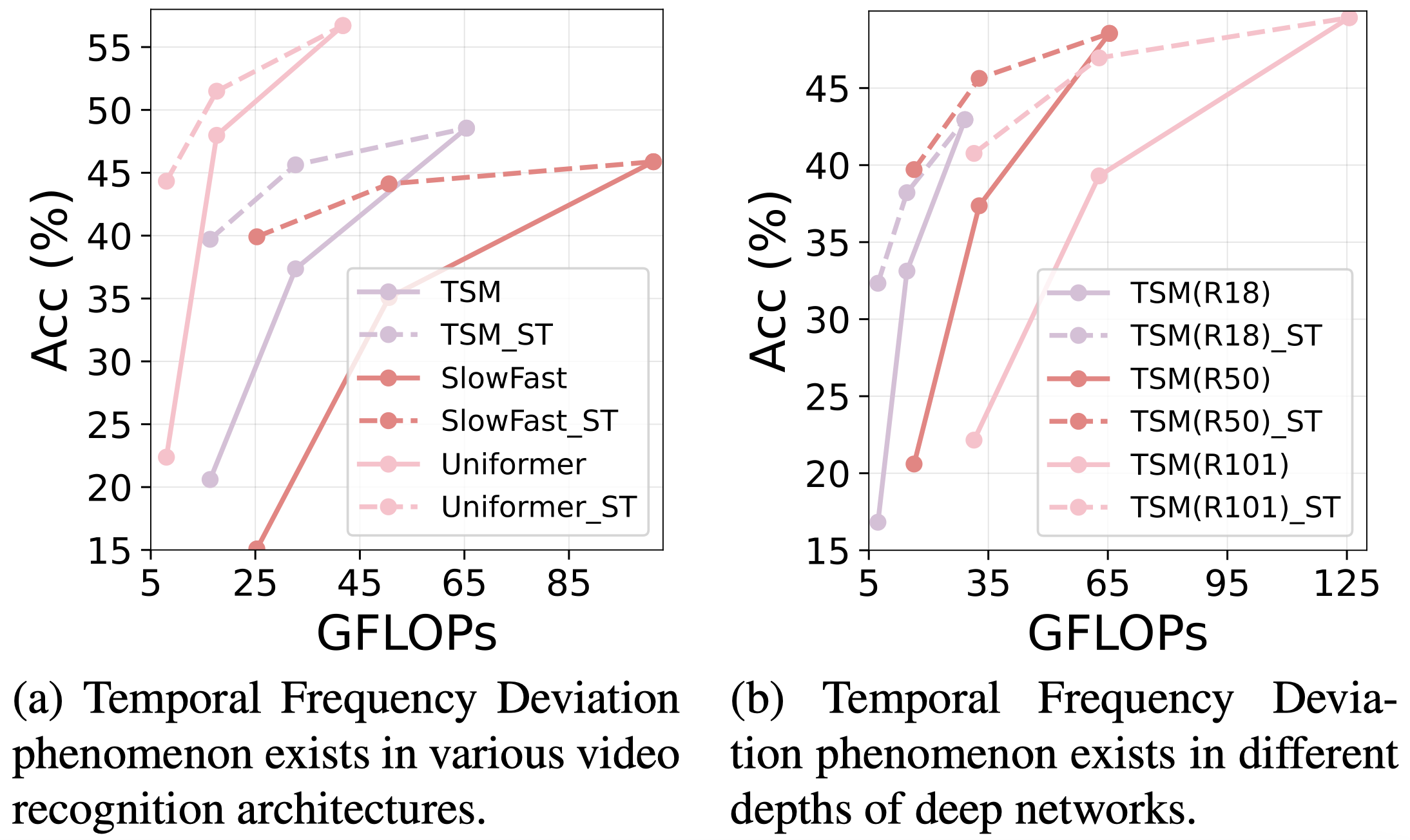

Motivation: Existing video recognition algorithms use separate training pipelines for inputs with different numbers of frames, which requires repetitive training and multiplies storage costs.

Observation: If a model is evaluated at frame numbers that were not used in training, its performance drops significantly (see the figure above). We summarize this as the Temporal Frequency Deviation phenomenon.

Solution: We propose a general framework, named Frame Flexible Network (FFN), which not only enables a single model to be evaluated at different numbers of frames to adjust its computation, but also significantly reduces the memory cost of storing multiple models.

Strengths:

- One-shot Training
- Obvious Performance Gain
- Significant Parameter Saving
- Flexible Computation Adjustment
- Easy Installation and Strong Compatibility

Please follow the instructions of TSM to prepare the Something-Something V1/V2, Kinetics400, and HMDB51 datasets.
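The preparation itself follows TSM. As a minimal sketch, assuming the TSM-style annotation list format (one video per line: frame folder, number of extracted frames, class index, separated by spaces), such a list could be parsed as below. `VideoRecord` and `parse_list_file` are hypothetical names used only for illustration, not this repo's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VideoRecord:
    path: str        # directory holding the extracted frames of one video
    num_frames: int  # number of frames extracted into that directory
    label: int       # integer class index


def parse_list_file(lines: List[str]) -> List[VideoRecord]:
    """Parse annotation lines, assuming '<frame_folder> <num_frames> <label>'."""
    records = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        # rsplit keeps folder names containing spaces intact;
        # the last two fields are always the numeric ones.
        path, num_frames, label = line.rsplit(" ", 2)
        records.append(VideoRecord(path, int(num_frames), int(label)))
    return records
```

Using `rsplit` with a limit of 2 is a small design choice: only the two trailing numeric fields are split off, so the folder path is never truncated.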

FFN is a general framework that can be easily applied to existing methods for stronger performance and higher flexibility during inference. Currently, FFN supports the following methods: 2D networks (TSM, TEA), 3D networks (SlowFast), and Transformer networks (Uniformer). Please feel free to contact us if you would like to contribute implementations for other methods.

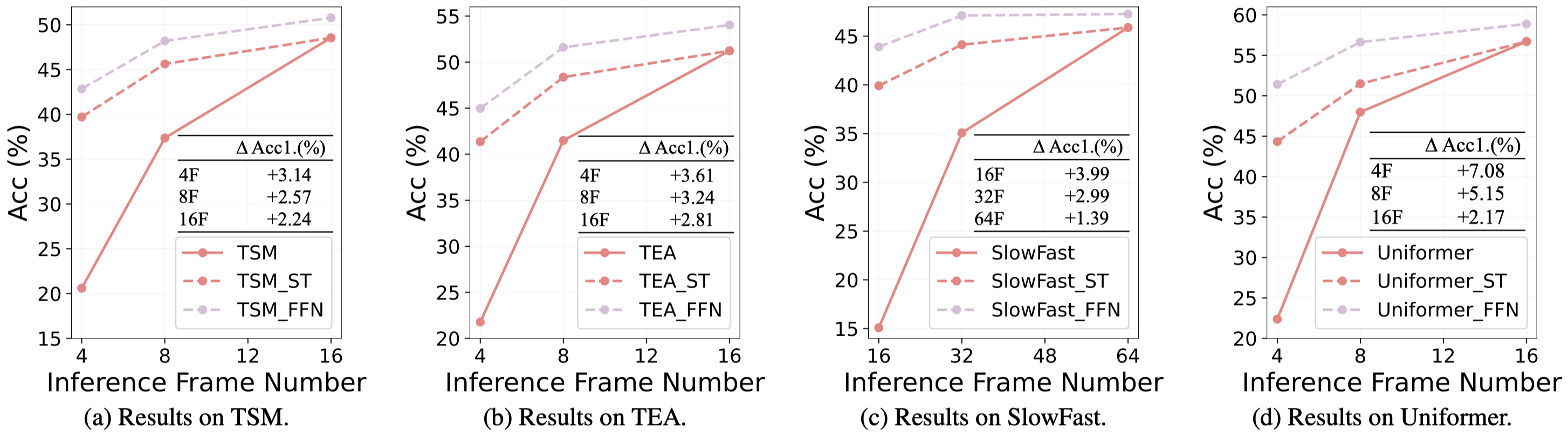

- Validation Across Architectures

On Something-Something V1, FFN clearly outperforms Separated Training (ST) at all frame numbers across different architectures, while using significantly fewer parameters.

Here we provide the pretrained models on all these architectures:

| Model | Acc1. (…) | Acc1. (…) | Acc1. (…) | Weight |
|---|---|---|---|---|
| TSM | 20.60% | 37.36% | 48.55% | link |
| TSM-ST | 39.71% | 45.63% | 48.55% | - |
| TSM-FFN | 42.85% | 48.20% | 50.79% | link |
| TEA | 21.78% | 41.49% | 51.23% | link |
| TEA-ST | 41.36% | 48.37% | 51.23% | - |
| TEA-FFN | 44.97% | 51.61% | 54.04% | link |
| SlowFast | 15.08% | 35.08% | 45.88% | link |
| SlowFast-ST | 39.91% | 44.12% | 45.88% | - |
| SlowFast-FFN | 43.90% | 47.11% | 47.27% | link |
| Uniformer | 22.38% | 47.98% | 56.71% | link |
| Uniformer-ST | 44.33% | 51.49% | 56.71% | - |
| Uniformer-FFN | 51.41% | 56.64% | 58.88% | link |
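The per-column gains can be read directly off the Something-Something V1 table. The short script below recomputes FFN minus ST (in percentage points) for every architecture, together with how far each single model falls below its own rightmost-column accuracy. That the rightmost column is the single model's training setting is an assumption inferred from the matching ST values, not something the table states. Column order follows the table from left to right.

```python
# Top-1 accuracies (%) copied from the Something-Something V1 table,
# in the table's column order (left to right).
SSV1 = {
    "TSM":       {"base": [20.60, 37.36, 48.55], "ST": [39.71, 45.63, 48.55], "FFN": [42.85, 48.20, 50.79]},
    "TEA":       {"base": [21.78, 41.49, 51.23], "ST": [41.36, 48.37, 51.23], "FFN": [44.97, 51.61, 54.04]},
    "SlowFast":  {"base": [15.08, 35.08, 45.88], "ST": [39.91, 44.12, 45.88], "FFN": [43.90, 47.11, 47.27]},
    "Uniformer": {"base": [22.38, 47.98, 56.71], "ST": [44.33, 51.49, 56.71], "FFN": [51.41, 56.64, 58.88]},
}


def diff(a, b):
    """Element-wise a - b in percentage points, rounded to two decimals."""
    return [round(x - y, 2) for x, y in zip(a, b)]


def summarize(table):
    results = {}
    for arch, rows in table.items():
        results[arch] = {
            # Gain of one FFN model over the separately trained ST models.
            "ffn_vs_st": diff(rows["FFN"], rows["ST"]),
            # Single-model accuracy relative to its rightmost column.
            "drop": diff(rows["base"], [rows["base"][-1]] * len(rows["base"])),
        }
    return results


results = summarize(SSV1)
for arch, r in results.items():
    print(f"{arch:<10} FFN-ST: {r['ffn_vs_st']}  single-model drop: {r['drop']}")
```

For TSM this gives gains of 3.14, 2.57 and 2.24 points over ST, and a drop of 27.95 points for the single model in the leftmost column, which quantifies the Temporal Frequency Deviation described above.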

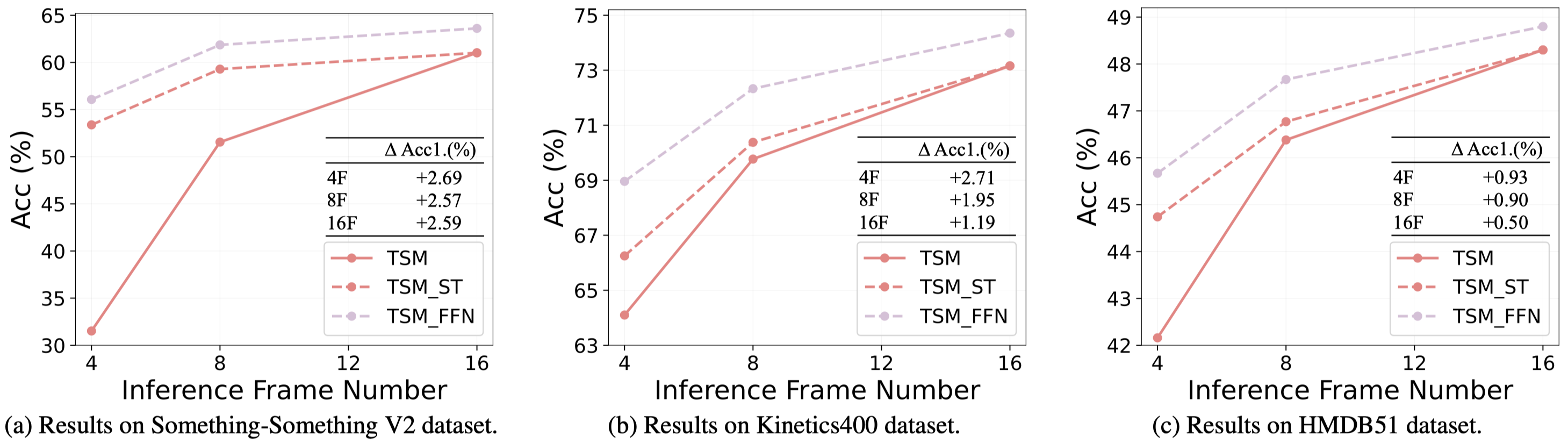

- Validation Across Datasets

FFN also clearly outperforms Separated Training (ST) at all frame numbers on other datasets, again with significantly fewer parameters.

Here we provide the pretrained models on Something-Something V2:

| Model | Parameters | Acc1. (…) | Acc1. (…) | Acc1. (…) | Weight |
|---|---|---|---|---|---|
| TSM | 25.6M | 31.52% | 51.55% | 61.02% | link |
| TSM-ST | 25.6x3M | 53.38% | 59.29% | 61.02% | - |
| TSM-FFN | 25.7M | 56.07% | 61.86% | 63.61% | link |

and Kinetics400:

| Model | Parameters | Acc1. (…) | Acc1. (…) | Acc1. (…) | Weight |
|---|---|---|---|---|---|
| TSM | 25.6M | 64.10% | 69.77% | 73.16% | link |
| TSM-ST | 25.6x3M | 66.25% | 70.38% | 73.16% | - |
| TSM-FFN | 25.7M | 68.96% | 72.33% | 74.35% | link |
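The Parameters column of the Something-Something V2 and Kinetics400 tables can be turned into a storage comparison with a few lines of arithmetic. This sketch assumes, as the ST parameter entry indicates, that ST keeps one full 25.6M-parameter TSM per frame setting (three in total), while FFN keeps a single 25.7M-parameter model. `storage_comparison` is a hypothetical helper for illustration.

```python
def storage_comparison(single_params_m, num_settings, ffn_params_m):
    """Return (ST total params, FFN saving vs. ST, FFN overhead vs. one model)."""
    st_total = single_params_m * num_settings      # one full model per frame setting
    saving = 1 - ffn_params_m / st_total           # fraction of ST storage avoided
    overhead = ffn_params_m / single_params_m - 1  # extra params vs. a single model
    return st_total, saving, overhead


st_total, saving, overhead = storage_comparison(25.6, 3, 25.7)
print(f"ST: {st_total:.1f}M params, FFN: 25.7M "
      f"({saving:.1%} fewer than ST, {overhead:.1%} more than one TSM)")
```

Under these assumptions FFN stores about 66.5% fewer parameters than the three ST models, at an overhead of roughly 0.4% over a single TSM.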

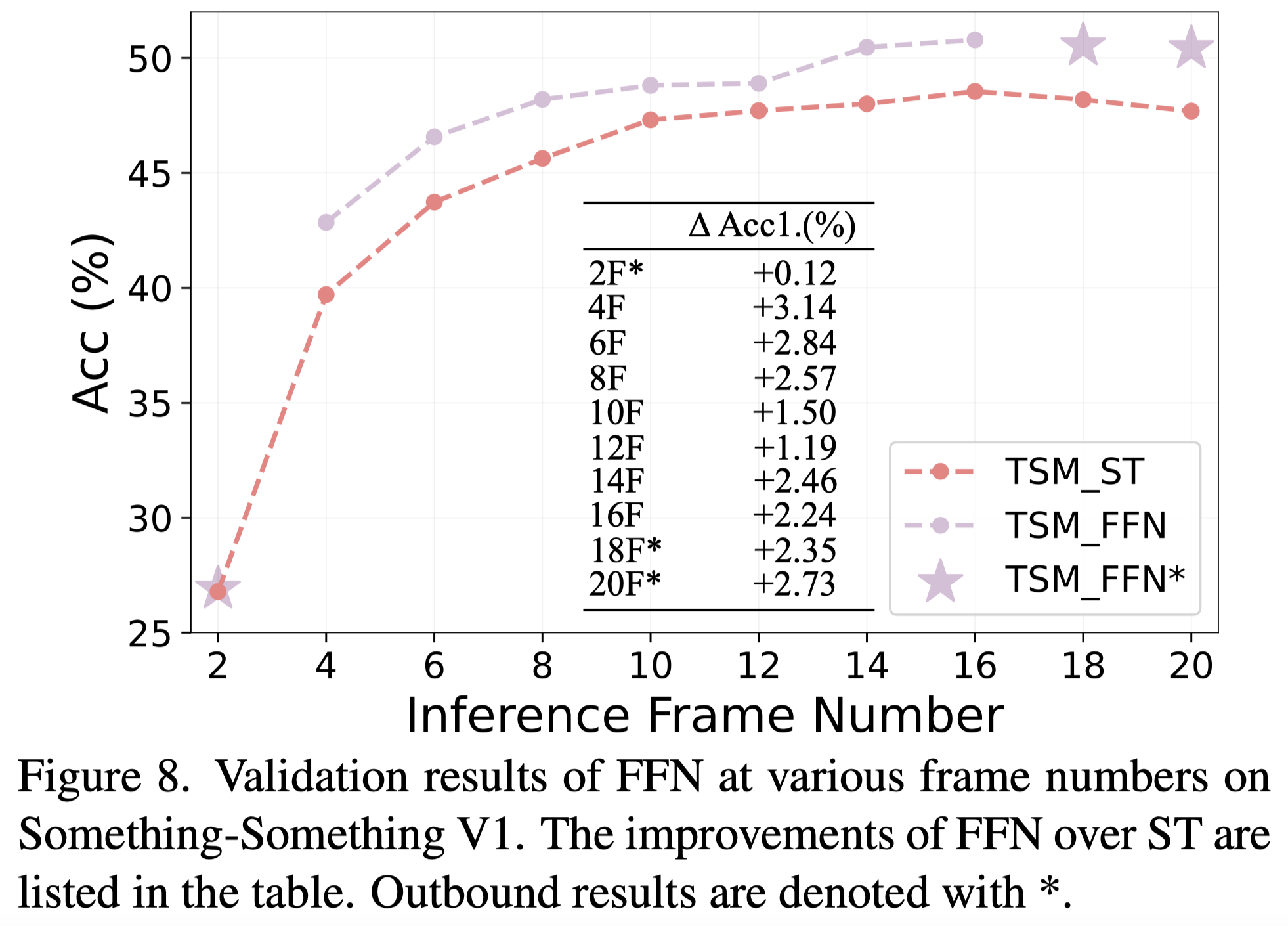

- Inference At Any Frame

FFN can be evaluated at any number of frames and outperforms Separated Training (ST) even at frame numbers that were not used during training.
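Evaluating at an arbitrary number of frames only requires subsampling each video to that many frames. The sketch below shows one common way to do this, center-of-segment sampling similar in spirit to the test-time sampling in TSN/TSM-style codebases: the video is split into equal-length segments and the middle frame of each is taken. The function name is hypothetical, and this is not necessarily the exact sampler used in this repo.

```python
from typing import List


def center_segment_indices(num_video_frames: int, num_segments: int) -> List[int]:
    """Pick one frame index from the center of each of `num_segments` equal segments."""
    if num_video_frames <= 0 or num_segments <= 0:
        raise ValueError("both counts must be positive")
    tick = num_video_frames / num_segments  # segment length, may be fractional
    # The last index is at most tick * (num_segments - 0.5) < num_video_frames,
    # so every index is valid; short videos simply repeat frames.
    return [int(tick / 2 + tick * i) for i in range(num_segments)]
```

For a 48-frame video, `center_segment_indices(48, 4)` gives `[6, 18, 30, 42]`, and the same call with 6 segments gives `[4, 12, 20, 28, 36, 44]`, so any frame count can be requested at test time.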

We provide a comprehensive codebase for video recognition that contains implementations of 2D, 3D, and Transformer networks. Please see each folder for its specific documentation.

Our codebase is heavily built upon TSM, SlowFast, and Uniformer. We gratefully thank the authors for their wonderful work. The README format is heavily based on the GitHub repos of my colleagues Huan Wang, Xu Ma, and Yizhou Wang. Great thanks to them! We also greatly thank the anonymous CVPR'23 reviewers for their constructive comments, which helped us improve the paper.