In this project, we would like to compare the performance of some embarassingly simple algorithms to solve a classification problem based on the MNIST database.

The abstract aim of the program is to write a function:

result = classify(image)

that takes as input a small grey scale image of a hand-written digit (from the MNIST database), and returns the digit corresponding to the content of the image.

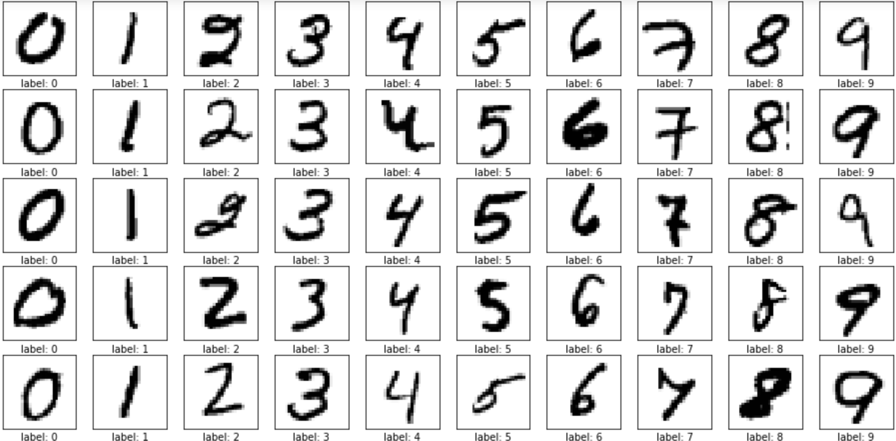

An example of the images we'll be working on is the following:

Some background on the MNIST database (from wikipedia):

From Wikipedia, the free encyclopedia

The MNIST database (Modified National Institute of Standards and Technology database) is a large database of handwritten digits that is commonly used for training various image processing systems. The database is also widely used for training and testing in the field of machine learning. It was created by "re-mixing" the samples from NIST's original datasets. The creators felt that since NIST's training dataset was taken from American Census Bureau employees, while the testing dataset was taken from American high school students, it was not well-suited for machine learning experiments. Furthermore, the black and white images from NIST were normalized to fit into a 28x28 pixel bounding box and anti-aliased, which introduced grayscale levels.

The MNIST database contains 60,000 training images and 10,000 testing images. Half of the training set and half of the test set were taken from NIST's training dataset, while the other half of the training set and the other half of the test set were taken from NIST's testing dataset. There have been a number of scientific papers on attempts to achieve the lowest error rate; one paper, using a hierarchical system of convolutional neural networks, manages to get an error rate on the MNIST database of 0.23%. The original creators of the database keep a list of some of the methods tested on it. In their original paper, they use a support vector machine to get an error rate of 0.8%. An extended dataset similar to MNIST called EMNIST has been published in 2017, which contains 240,000 training images, and 40,000 testing images of handwritten digits and characters.

We start by defining the distance between two images. Ideally, a distance function between two images is zero when the images are the same, and greater than zero when the images are different.

The bigger the distance, the more different the images should be. Ideally, the distance between an image of the number 9 should be closer to an image of the number 8 than to an image of the number 1 (the digits 9 and 8, as images, differ by the fact that the first has one closed loop, while the second has two closed loops, while the digit 1 is mostly a straight line). Two different images representing the same number should be even closer (i.e., the distance function should return a "small" number).

Given a distance and a training set of images for which we know everything, the simplest algorithm we can think of to classify an image z, is the following: given a set of train images (x_train) for which we know the digit they represent (y_train), measure the distance between z and all images in x_train, and classify the image z to represent the same digit of the image that is closest to z in x_train:

Parameters of the algorithm:

x_trainy_train- a distance function

dist

Input of the function

z

Output of the function

digit

where

def classify(z):

all_distances = array([dist(x, z) for x in x_train])

digit = y_train[argmin(all_distances)]

return digit

We will experiment with different distances, and we will try to improve the algorithm above in a step by step fashon.

Each image in the MNIST dataset represents a hand written digit, in the form of a matrix of 28x28 values between zero and one, representing gray scale values (zero = white, one = black).

We use an array of 60.000x28x28 floating point values to collect all training images, and an array of 60.000 digits containing the (correct) value of the training digits (between 0 and 9 inclusive).

The testing images are instead collected into two arrays of size 10.000x28x28 and 10.0000 respectively.