This is the official repository for the ICLR 2023 paper Learning to Segment from Noisy Annotations: A Spatial Correction Approach. The paper proposes a Markov model for simulating segmentation label noise and a Spatial Correction method to combat such noise.

Our code has been tested on Ubuntu 20.04.5 LTS with CUDA 11.4 (Driver Version 470.161.03), Python 3.9.7, and PyTorch v1.10.2. Other requirements can be installed with:

```bash
pip install -r requirements.txt
```

The proposed method is compared with state-of-the-art (SOTA) methods in a wide range of noisy settings, including both synthetic and real-world noise.

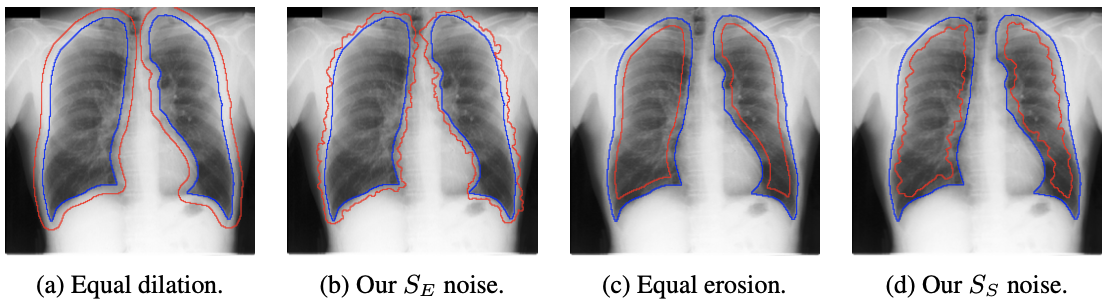

Previous works used random dilation and/or erosion to simulate noisy annotations, whereas we propose a Markov process to model such noise. The two types of noise are compared below:

| Comparison of different types of noise. Blue lines are true segmentation boundaries; red lines are noisy boundaries (after removing random flipping noise). |
|---|
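To make the idea of Markov boundary noise concrete, here is a minimal, illustrative sketch using NumPy. It is not the generator in noise_generator.py and does not reproduce Definition 1 of the paper: the function and parameter names (markov_boundary_noise, n_steps, p_expand, p_flip) are hypothetical, and it only handles 2D binary masks with a 4-neighbourhood. What it does show is the Markov property: each step depends only on the current mask, which either grows or shrinks, with every pixel on the relevant boundary flipping independently.

```python
import numpy as np


def touches(mask):
    """True where at least one 4-neighbour is True (pixels outside the image count as False)."""
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    return p[:-2, 1:-1] | p[2:, 1:-1] | p[1:-1, :-2] | p[1:-1, 2:]


def markov_boundary_noise(label, n_steps, p_expand, p_flip, seed=None):
    """Toy Markov boundary noise for a 2D binary mask (hypothetical, not Definition 1).

    At every step the next mask depends only on the current one: with probability
    p_expand the mask grows, otherwise it shrinks, and each pixel on the relevant
    boundary is flipped independently with probability p_flip.
    """
    rng = np.random.default_rng(seed)
    noisy = np.asarray(label).astype(bool)  # astype returns a copy
    for _ in range(n_steps):
        flip = rng.random(noisy.shape) < p_flip
        if rng.random() < p_expand:
            # Outer boundary: background pixels touching the foreground.
            noisy = noisy | (~noisy & touches(noisy) & flip)
        else:
            # Inner boundary: foreground pixels touching the background.
            noisy = noisy & ~(noisy & touches(~noisy) & flip)
    return noisy.astype(np.uint8)
```

In this sketch, noise accumulates along the contour over many small steps instead of being applied as a single uniform shift, which is the qualitative contrast with one-shot dilation or erosion.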

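The Markov noise generation follows Definition 1 in our paper. Four parameters control this process; they correspond to the --T, --theta1, --theta2, and --theta3 arguments in the settings table below. To generate Markov noise, run: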

```bash
python noise_generator.py --gts_root your/gt/root/ --save_root your/save/root/
```

To generate random dilation and erosion noise, add --noisetype DE to the above command.
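For comparison, random dilation/erosion (DE) noise can be sketched as a single morphological operation with a random size. This is an assumption-laden illustration, not the DE code in noise_generator.py: the name dilate_erode_noise is hypothetical, the 50/50 choice between dilation and erosion is assumed, and reading the --range pair (e.g. [7,9]) as an inclusive range of 4-neighbourhood iterations is a guess.

```python
import numpy as np


def _touches(mask):
    """True where at least one 4-neighbour is True (pixels outside the image count as False)."""
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    return p[:-2, 1:-1] | p[2:, 1:-1] | p[1:-1, :-2] | p[1:-1, 2:]


def dilate_erode_noise(label, radius_range, seed=None):
    """Randomly dilate or erode a 2D binary mask by a radius drawn from radius_range (inclusive)."""
    rng = np.random.default_rng(seed)
    lo, hi = radius_range
    radius = int(rng.integers(lo, hi + 1))  # integers() excludes the upper bound, hence +1
    dilate = rng.random() < 0.5             # assumed: dilation and erosion equally likely
    mask = np.asarray(label).astype(bool)
    for _ in range(radius):
        if dilate:
            mask = mask | _touches(mask)
        else:
            # Erosion; image borders are not treated as background.
            mask = mask & ~_touches(~mask)
    return mask.astype(np.uint8)
```

Unlike the step-wise Markov process, this shifts the whole boundary by the same amount in one direction.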

Other arguments are described in detail inside the function and can be set accordingly. To generate the noisy labels used in Table 1 of the paper, use the following settings.

| ID | Dataset | --is3D | --noisetype | --range | --T | --theta1 | --theta2 | --theta3 |
|---|---|---|---|---|---|---|---|---|
| J1 | JSRT | False | Markov | -- | Lung: 180, Heart: 180, Clavicle: 100 | 0.8 | Lung: 0.05, Heart: 0.05, Clavicle: 0.02 | 0.2 |
| J2 | JSRT | False | Markov | -- | Lung: 180, Heart: 180, Clavicle: 100 | 0.2 | Lung: 0.05, Heart: 0.05, Clavicle: 0.02 | 0.2 |
| J3 | JSRT | False | DE | Lung: [7,9], Heart: [7,9], Clavicle: [3,5] | -- | -- | -- | -- |
| B1 | Brats2020 | True | Markov | -- | 80 | 0.7 | 0.05 | 0 |
| B2 | Brats2020 | True | Markov | -- | 80 | 0.3 | 0.05 | 0 |
| B3 | Brats2020 | True | DE | [3,5] | -- | -- | -- | -- |
| I1 | ISIC2017 | False | Markov | -- | 200 | 0.2 | 0.05 | 0.2 |
| I2 | ISIC2017 | False | Markov | -- | 200 | 0.8 | 0.05 | 0.2 |
| I3 | ISIC2017 | False | DE | [7,9] | -- | -- | -- | -- |
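The single-class Markov rows of this table can be turned into command lines mechanically. The helper below is a hypothetical convenience, not part of the repository: it assumes every flag takes its value as a separate string argument (including --is3D True/False and --noisetype Markov), which may not match how noise_generator.py parses them. The multi-class JSRT rows are left out because the per-class value format is not documented here.

```python
import shlex

# Single-class Markov settings, transcribed from the table above.
# Hypothetical: assumes each flag is followed by its value as a plain string.
MARKOV_SETTINGS = {
    "B1": {"--is3D": "True", "--T": "80", "--theta1": "0.7", "--theta2": "0.05", "--theta3": "0"},
    "B2": {"--is3D": "True", "--T": "80", "--theta1": "0.3", "--theta2": "0.05", "--theta3": "0"},
    "I1": {"--is3D": "False", "--T": "200", "--theta1": "0.2", "--theta2": "0.05", "--theta3": "0.2"},
    "I2": {"--is3D": "False", "--T": "200", "--theta1": "0.8", "--theta2": "0.05", "--theta3": "0.2"},
}


def build_command(setting_id, gts_root, save_root):
    """Return the argument list for noise_generator.py for one table setting."""
    args = ["python", "noise_generator.py",
            "--gts_root", gts_root, "--save_root", save_root,
            "--noisetype", "Markov"]
    for flag, value in MARKOV_SETTINGS[setting_id].items():
        args.extend([flag, value])
    return args


if __name__ == "__main__":
    # Print a shell-ready command for setting I1.
    print(shlex.join(build_command("I1", "your/gt/root/", "your/save/root/")))
```

Returning an argument list rather than a string keeps it usable with subprocess.run without extra quoting.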

We include the Cityscapes and LIDC-IDRI datasets for real-world label noise settings; details can be found in the paper. Our selection of the Cityscapes dataset will be available for download soon.

We provide the JSRT dataset with noisy setting J1 in ./Datasets and a model trained with the proposed Spatial Correction method in ./trained. If you use the JSRT dataset in your work, please cite its original publications. More trained models will be uploaded later.

To test the provided model, run python test.py. To test other models, change the paths in test.py accordingly and run the same command.

Set the relevant dataset paths in config.ini, or organize your data folder to match them. Each dataset has its own section headed by [dataset_name]. Example commands and parameters for training a model on each dataset are provided in run.sh.
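Since config.ini is an INI file with one section per dataset, its sections can be inspected with Python's standard configparser module. The layout below is only an assumption: the option names train_img_root and train_label_root and the paths are hypothetical placeholders, not the keys this repository actually uses, so check the existing sections in config.ini before copying them.

```python
import configparser

# Hypothetical config.ini content; real option names in this repository may differ.
EXAMPLE_INI = """
[JSRT]
train_img_root = /path/to/jsrt/images
train_label_root = /path/to/jsrt/noisy_labels

[your_dataset]
train_img_root = /path/to/your/images
train_label_root = /path/to/your/labels
"""


def dataset_paths(ini_text, dataset_name):
    """Return the options of one dataset section as a plain dict."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    # Section names are case-sensitive; a missing section raises KeyError.
    return dict(parser[dataset_name])
```

For a real file, parser.read("config.ini") replaces read_string.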

Preparing Dataset and Code. To train on your own dataset:

1. Add a new section to config.ini headed by [your_dataset], with path arguments similar to those in the other sections.
2. Create your own dataset class in utils/dataset.py, or modify an existing one to fit the structure of your dataset.
3. Depending on the modality and classes of your dataset, you may need to modify the code in utils/training.py, utils/evaluation.py, and utils/LabelClean.py. Only the relevant parts (mostly some if/else conditions) need to change, to match the dimensionality (follow 'Brats2020' for 3D data, or 'JSRT'/'ISIC2017' for 2D data) and the classes (follow 'JSRT' for multiple classes, or 'ISIC2017'/'Brats2020' for a single class).
4. Finally, use an appropriate segmentation network. You may put your network definition file in the net folder.
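As a starting point for step 2, a map-style dataset only needs __len__ and __getitem__, which is the protocol PyTorch's DataLoader relies on. The class below is a hypothetical sketch, not the interface of the classes in utils/dataset.py: it assumes images and (noisy) labels are stored as .npy files with matching names in two folders, and that a leading channel axis is wanted.

```python
import os

import numpy as np


class MyNoisyDataset:
    """Hypothetical map-style dataset pairing images with (noisy) masks stored as .npy files."""

    def __init__(self, img_root, label_root):
        self.img_root = img_root
        self.label_root = label_root
        # Assumes an image and its label share the same file name.
        self.names = sorted(f for f in os.listdir(img_root) if f.endswith(".npy"))

    def __len__(self):
        return len(self.names)

    def __getitem__(self, idx):
        name = self.names[idx]
        image = np.load(os.path.join(self.img_root, name)).astype(np.float32)
        label = np.load(os.path.join(self.label_root, name)).astype(np.int64)
        # Add a channel axis: (H, W) -> (1, H, W) for 2D, (D, H, W) -> (1, D, H, W) for 3D.
        return image[np.newaxis], label
```

Returning NumPy arrays keeps the sketch framework-agnostic; DataLoader's default collate function converts them to tensors.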

Tuning Parameters. Apart from the number of epochs (-e), batch size (-b), and learning rate (-lr), three other parameters control the cleaning of noisy labels. The first is the hyper-parameter -s. Start with -s 1, which works in most cases, then try scaling it down to -s 0.8 or -s 0.4 to find the best performance. The other two are -le and --max_iter: -le sets how often label correction runs (every -le epochs, after which training starts over), and --max_iter sets the maximum number of iterations for the whole process.
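The interplay of -le and --max_iter can be pictured as an outer loop. The sketch below is a hypothetical reading of the description, not the code in utils/training.py or utils/LabelClean.py: train_fn and correct_fn are placeholder callables, and whether "starting over" means re-initializing the network or only resetting the schedule is an assumption.

```python
def label_correction_loop(labels, train_fn, correct_fn, le, max_iter, s=1.0):
    """Hypothetical outer loop: train for `le` epochs, correct labels, repeat up to `max_iter` times."""
    model = None
    for _ in range(max_iter):
        # Training starts over on the current, partially corrected labels.
        model = train_fn(labels, epochs=le)
        # Label correction controlled by the hyper-parameter s (the -s flag).
        labels = correct_fn(model, labels, s)
    return model, labels
```

Tuning -s then amounts to running this loop for s in (1.0, 0.8, 0.4) and keeping the best-performing run.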

If you find this code helpful, please consider citing:

```bibtex
@inproceedings{yao2023spatialcorrection,
  title={Learning to Segment from Noisy Annotations: A Spatial Correction Approach},
  author={Yao, Jiachen and Zhang, Yikai and Zheng, Songzhu and Goswami, Mayank and Prasanna, Prateek and Chen, Chao},
  booktitle={International Conference on Learning Representations},
  year={2023}
}
```