- Propose neural dual quaternion blend skinning (NeuDBS) to replace linear blend skinning (LBS) as the deformation model; NeuDBS resolves the skin-collapsing artifacts.

- Introduce a texture filtering approach for texture rendering that effectively minimizes the impact of noisy colors outside target deformable objects.

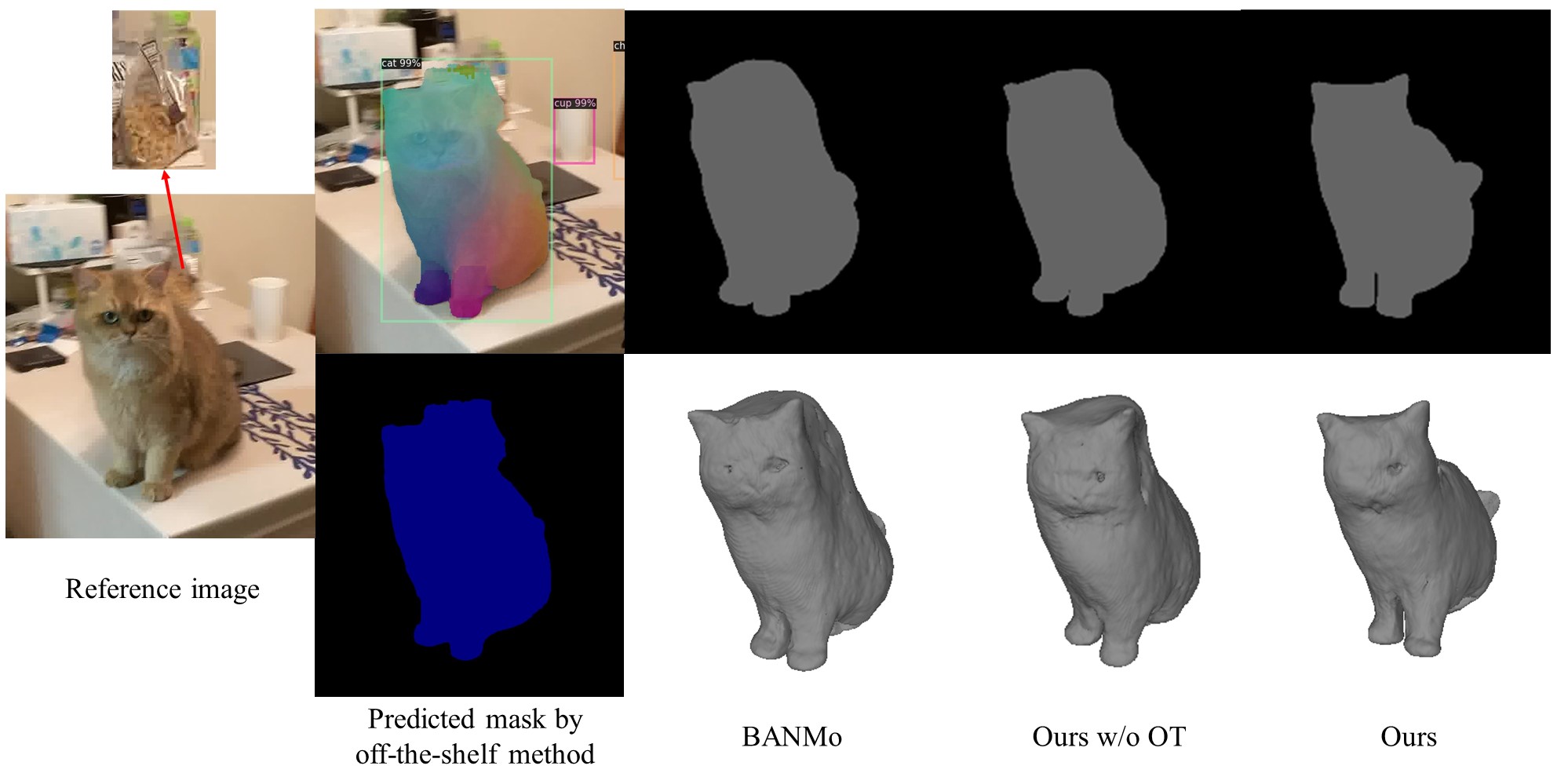

- Formulate 2D-3D matching as an optimal transport problem, which helps refine poor segmentation obtained from an off-the-shelf method and predict a consistent 3D shape.

- [2023/04/18] Our paper is available on arXiv now.

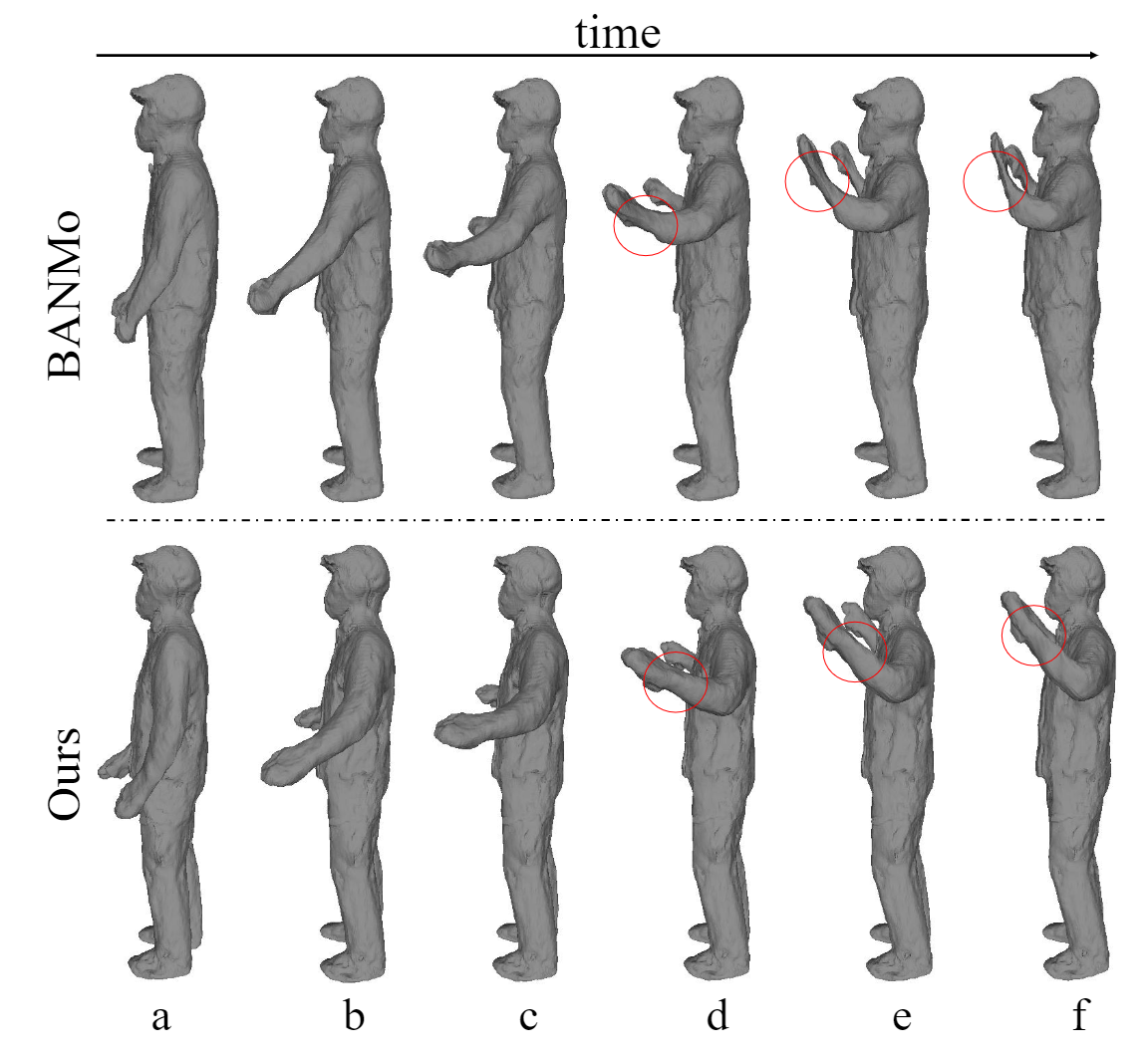

We compare the reconstruction results of MoDA and BANMo. The skin-collapsing artifacts of BANMo are marked with red circles. Please refer to our Project page for more reconstruction results.

Comparison.of.BANMo.and.MoDA.mp4

BANMo shows more obvious skin-collapsing artifacts for motion with large rotations; our method resolves these artifacts with the proposed NeuDBS.
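To illustrate why large rotations cause skin collapse under linear blending, here is a minimal sketch that compares classical linear blend skinning with classical dual quaternion blending on a single point. It is not the NeuDBS implementation from this repository: the two-bone setup, the fixed weights, and all function names (`lbs`, `dqb`, `dist_to_x_axis`, and so on) are assumptions chosen for illustration, and no neural components are involved.

```python
import math

def qmul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def qconj(q):
    return (q[0], -q[1], -q[2], -q[3])

def rotate(q, p):
    """Rotate 3D point p by unit quaternion q."""
    return qmul(qmul(q, (0.0, *p)), qconj(q))[1:]

def axis_angle_quat(axis, angle):
    s = math.sin(angle / 2)
    return (math.cos(angle / 2), axis[0] * s, axis[1] * s, axis[2] * s)

def lbs(point, bones, weights):
    """Linear blend skinning: weighted average of the rigidly transformed points."""
    out = [0.0, 0.0, 0.0]
    for (q, t), w in zip(bones, weights):
        rp = rotate(q, point)
        for k in range(3):
            out[k] += w * (rp[k] + t[k])
    return tuple(out)

def dqb(point, bones, weights):
    """Dual quaternion blending: blend dual quaternions, normalize, then transform."""
    ref = bones[0][0]
    real, dual = [0.0] * 4, [0.0] * 4
    for (q, t), w in zip(bones, weights):
        qd = tuple(0.5 * c for c in qmul((0.0, *t), q))  # dual part encodes translation
        # Flip sign so all rotations lie in the same hemisphere as the first bone.
        sign = 1.0 if sum(a * b for a, b in zip(q, ref)) >= 0 else -1.0
        for k in range(4):
            real[k] += sign * w * q[k]
            dual[k] += sign * w * qd[k]
    norm = math.sqrt(sum(c * c for c in real))
    real = tuple(c / norm for c in real)
    dual = tuple(c / norm for c in dual)
    t = tuple(2.0 * c for c in qmul(dual, qconj(real))[1:])
    rp = rotate(real, point)
    return tuple(rp[k] + t[k] for k in range(3))

def dist_to_x_axis(p):
    return math.hypot(p[1], p[2])

# Hypothetical setup: one bone at rest, one twisted 150 degrees about the x axis.
identity = (1.0, 0.0, 0.0, 0.0)
twist = axis_angle_quat((1.0, 0.0, 0.0), math.radians(150))
bones = [(identity, (0.0, 0.0, 0.0)), (twist, (0.0, 0.0, 0.0))]
weights = (0.5, 0.5)
point = (0.0, 1.0, 0.0)  # a skin point one unit away from the twist axis

print("LBS radius:", dist_to_x_axis(lbs(point, bones, weights)))
print("DQB radius:", dist_to_x_axis(dqb(point, bones, weights)))
```

In this toy case the linearly blended point moves toward the twist axis (its distance shrinks from 1 to cos 75°, about 0.26), which is the collapse effect, while the dual quaternion blend keeps the point at distance 1 because the blended transform is still rigid.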

By registering 2D pixels across different frames with optimal transport, we can refine poor segmentation and predict a consistent 3D shape of the cat.

We show the effectiveness of the texture filtering approach by adding it to both MoDA and BANMo.

texture.filtering.mp4

We compare the motion re-targeting results of MoDA and BANMo.

Motion.re-targeting.mp4

@article{song2023moda,
  title={MoDA: Modeling Deformable 3D Objects from Casual Videos},
  author={Song, Chaoyue and Chen, Tianyi and Chen, Yiwen and Wei, Jiacheng and Foo, Chuan Sheng and Liu, Fayao and Lin, Guosheng},
  journal={arXiv preprint arXiv:2304.08279},
  year={2023}
}

We thank BANMo for their code and data.