The purpose of this repository is to showcase a series of handpicked confidence measures for stereo matching. All the confidence measures implemented here are also described in "On the confidence of stereo matching in a deep-learning era: a quantitative evaluation" by M. Poggi et al. There are no plans to add learned confidence measures, as these are generally more complicated and would require more than a single notebook to implement. To keep conditions consistent across all confidence measures, every example uses the same stereo matching algorithm, SGM, and the same example, Teddy from the Middlebury dataset.

Brief results of the confidence measures implemented thus far are shown below. See the respective notebooks for further details.

- Requirements: A single cost volume.

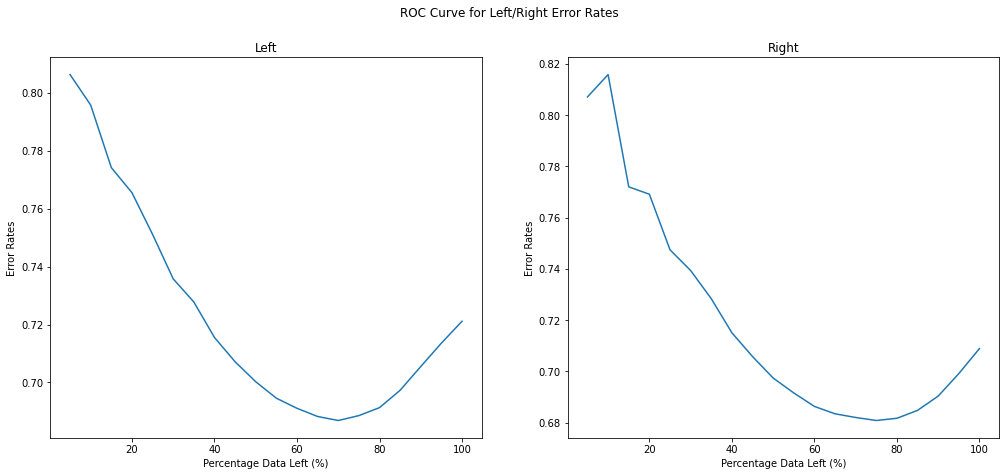

- AUC Left Score: 0.685.

- AUC Right Score: 0.681.

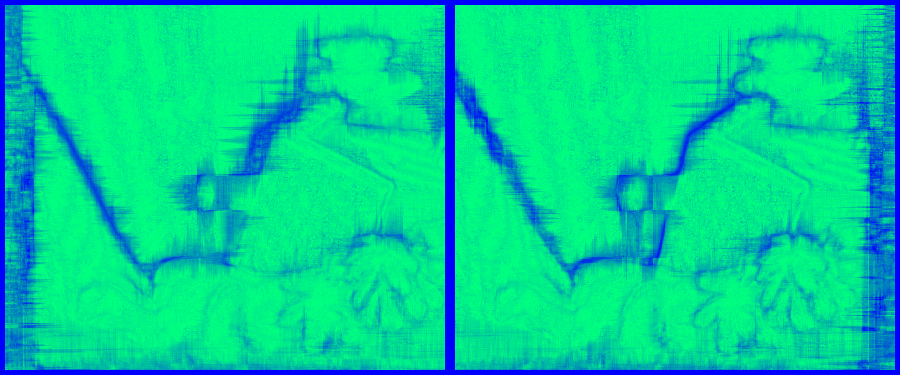

- Confidence Map:

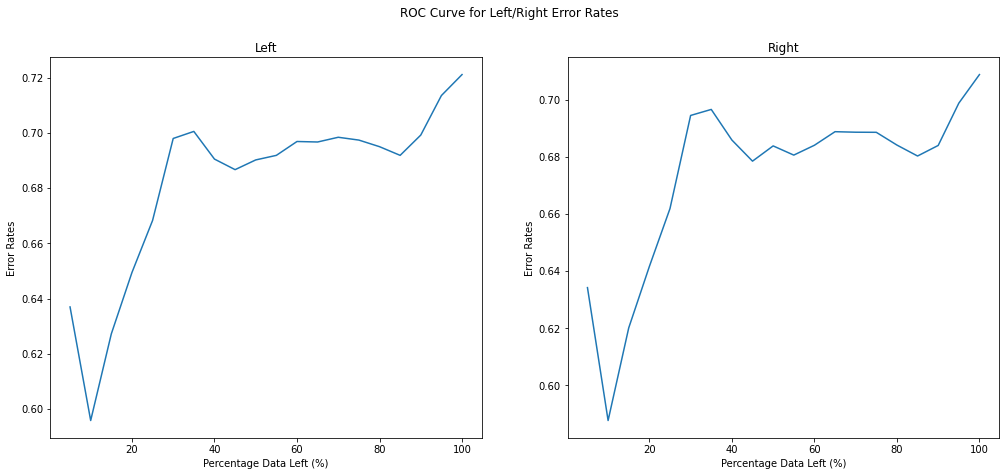

- ROC Curve:

- Requirements: A single cost volume.

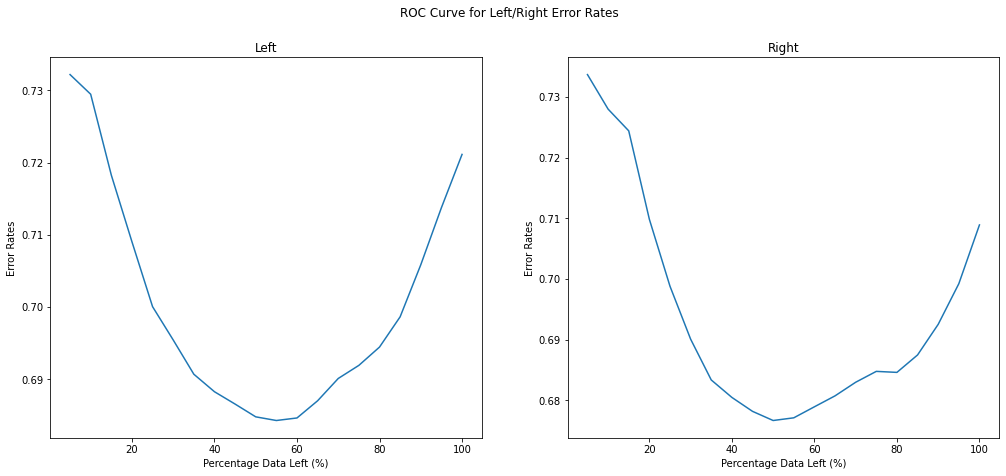

- AUC Left Score: 0.664.

- AUC Right Score: 0.658.

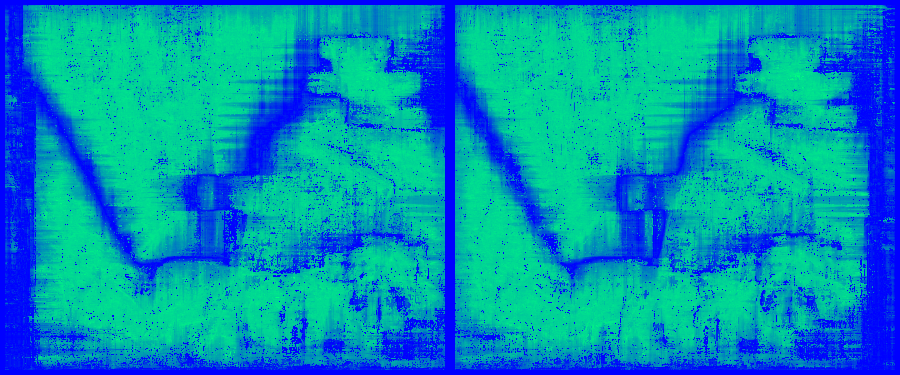

- Confidence Map:

- ROC Curve:

None Yet.

- Requirements: Left and right disparity maps.

- AUC Left Score: 0.648.

- AUC Right Score: 0.640.

- Confidence Map:

- ROC Curve:

None Yet.

None Yet.

None Yet.

None Yet.

- Matching Score Measure (MSM) by G. Egnal, M. Mintz, and R. P. Wildes.

- Maximum Margin (MM) by M. Poggi, F. Tosi, and S. Mattoccia.

- Left-Right Consistency (LRC) by G. Egnal and R. P. Wildes.
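
The three measures listed above can be sketched in a few lines of NumPy. This is a minimal illustration and not the code from the notebooks: the function names, the `(height, width, disparities)` cost-volume layout, and the clipping of out-of-range matches to the image border are all assumptions. MM is usually defined on the second-smallest local minimum of the cost curve; to stay short, the sketch substitutes the plain second-smallest cost (the "naive" margin), so its values may differ from the notebook's.

```python
import numpy as np


def msm_confidence(cost_volume):
    """MSM sketch: negated minimum matching cost per pixel.

    cost_volume has the assumed shape (height, width, disparities).
    A lower minimum cost gives a higher confidence.
    """
    return -cost_volume.min(axis=2)


def naive_margin_confidence(cost_volume):
    """Naive margin sketch: second-smallest cost minus smallest cost.

    This is a simplification of MM, which uses the second-smallest
    local minimum instead of the second-smallest cost overall.
    """
    # After partitioning at index 1, positions 0 and 1 along the
    # disparity axis hold the smallest and second-smallest costs.
    two_smallest = np.partition(cost_volume, 1, axis=2)[:, :, :2]
    return two_smallest[:, :, 1] - two_smallest[:, :, 0]


def lrc_confidence(disp_left, disp_right):
    """LRC sketch: negated absolute left-right disparity difference.

    A left pixel (y, x) with disparity d is compared with the right
    pixel (y, x - d). Matches that fall outside the image are clipped
    to the border (an assumption made for this sketch).
    """
    height, width = disp_left.shape
    rows = np.arange(height)[:, np.newaxis]
    cols = np.arange(width)[np.newaxis, :]
    target = np.clip(cols - np.rint(disp_left).astype(int), 0, width - 1)
    return -np.abs(disp_left - disp_right[rows, target])


# Tiny made-up inputs, only to show the expected shapes.
example_costs = np.array([[[4.0, 1.0, 3.0], [2.0, 2.0, 5.0]]])
example_left = np.array([[0.0, 1.0, 1.0, 2.0]])
example_right = np.array([[1.0, 1.0, 2.0, 2.0]])

msm = msm_confidence(example_costs)
margin = naive_margin_confidence(example_costs)
lrc = lrc_confidence(example_left, example_right)
```

All three functions return one value per pixel, where a larger value means a more trustworthy disparity. MSM and the margin need only a single cost volume, while LRC needs both the left and right disparity maps, which matches the requirements listed for each measure.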