Code Repository of the EPFL SIE Master Project, Spring Semester 2018.

The goal of this project is to perform error detection and novelty detection in Convolutional Neural Networks (CNNs) using Density Forests. Applications to the MNIST dataset and a dataset for semantic segmentation of land cover classes in Zurich are visualized in Code/ and Zurich/.

The package can be installed with pip:

pip install density_forest

Density trees maximize Gaussianity at each split level. In 2D this might look as follows:

A density forest is a collection of density trees each trained on a random subset of all data.
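One way to make "maximizing Gaussianity" concrete is the unsupervised information gain common in the density forest literature: a split is good when Gaussians fitted to the two children have lower differential entropy than a single Gaussian fitted to the parent. The sketch below illustrates that idea with NumPy only. It is not taken from this package, and the names `gaussian_entropy` and `split_gain` are hypothetical.

```python
import numpy as np

def gaussian_entropy(X):
    """Differential entropy of a Gaussian fitted to the rows of X ([N, D])."""
    d = X.shape[1]
    cov = np.atleast_2d(np.cov(X, rowvar=False))
    _, logdet = np.linalg.slogdet(cov)  # log-determinant of the covariance
    return 0.5 * (d * np.log(2 * np.pi * np.e) + logdet)

def split_gain(X, dim, threshold):
    """Entropy reduction obtained by splitting X at `threshold` along `dim`.

    Assumes both sides contain enough points to estimate a covariance.
    """
    left = X[X[:, dim] < threshold]
    right = X[X[:, dim] >= threshold]
    n = len(X)
    children = (len(left) / n) * gaussian_entropy(left) \
             + (len(right) / n) * gaussian_entropy(right)
    return gaussian_entropy(X) - children

# Toy data: two well-separated 2D clusters centered at x = -5 and x = +5
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=(-5.0, 0.0), scale=1.0, size=(200, 2)),
    rng.normal(loc=(5.0, 0.0), scale=1.0, size=(200, 2)),
])

gain_between = split_gain(X, dim=0, threshold=0.0)  # cut between the clusters
gain_inside = split_gain(X, dim=0, threshold=5.0)   # cut through one cluster
```

On this toy data the cut between the two clusters yields the larger gain, which is the behaviour a density tree looks for when choosing a split.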

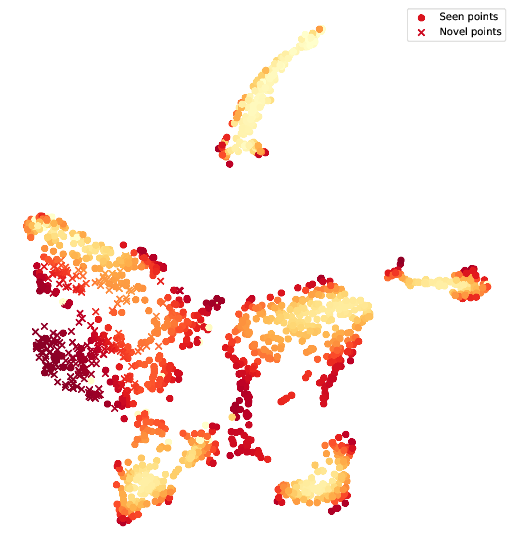

The above example shows the t-SNE of the pre-softmax activations of a network trained for semantic segmentation of the Zurich dataset, leaving out one class during training. Density trees were trained on bootstrap samples of all classes but the unseen one.

The confidence of each data point in the test set is calculated as the average Gaussian likelihood that it comes from the leaf node clusters.

Darker points represent regions of lower certainty and crosses represent activations of unseen classes.
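The averaging step can be sketched in a deliberately simplified form. In the sketch below each "tree" is reduced to a single leaf, that is, one Gaussian fitted to a bootstrap sample, and the confidence of a point is the mean Gaussian likelihood over all such models. This is an illustration under that assumption, not the package's implementation. The function names and the `subsample_pct` argument are hypothetical.

```python
import numpy as np

def gaussian_pdf(X, mean, cov):
    """Multivariate Gaussian density evaluated at each row of X ([N, D])."""
    d = X.shape[1]
    diff = X - mean
    inv = np.linalg.inv(cov)
    maha = np.einsum('ij,jk,ik->i', diff, inv, diff)  # squared Mahalanobis distance
    _, logdet = np.linalg.slogdet(cov)
    return np.exp(-0.5 * (maha + logdet + d * np.log(2 * np.pi)))

def fit_bootstrap_gaussians(X, n_trees, subsample_pct, rng):
    """Fit one Gaussian per bootstrap sample (a stand-in for a one-leaf tree)."""
    n = len(X)
    m = max(2, int(subsample_pct * n))
    models = []
    for _ in range(n_trees):
        idx = rng.choice(n, size=m, replace=True)  # sample with replacement
        sample = X[idx]
        models.append((sample.mean(axis=0), np.cov(sample, rowvar=False)))
    return models

def confidence(X, models):
    """Average Gaussian likelihood of each row of X over all fitted models."""
    return np.mean([gaussian_pdf(X, mu, cov) for mu, cov in models], axis=0)

rng = np.random.default_rng(0)
X_seen = rng.normal(0.0, 1.0, size=(500, 2))    # stand-in for seen-class activations
X_test = np.array([[0.0, 0.0], [6.0, 6.0]])     # one typical point, one far away

models = fit_bootstrap_gaussians(X_seen, n_trees=10, subsample_pct=0.5, rng=rng)
conf = confidence(X_test, models)
```

The point far from the training data receives a much lower confidence than the point at the center, which corresponds to the darker points in the figure.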

Suppose you have your training data X_train and test data X_test, in [N, D] with N data points in D dimensions:

from density_forest.density_forest import DensityForest

clf_df = DensityForest(**params) # create new class instance, put hyperparameters here

clf_df.fit(X_train) # fit to a training set

conf = clf_df.decision_function(X_test) # get confidence values for test set

outliers = clf_df.predict(X_test) # predict whether a point is an outlier (-1 for outliers, 1 for inliers)

Hyperparameters are documented in the docstring. To find the optimal hyperparameters, see the section below.

To find the optimal hyperparameters, use the ParameterSearch class from helpers.cross_validator, which supports cross-validation and hyperparameter search.

from helpers.cross_validator import ParameterSearch

# define hyperparameters to test

tuned_params = [{'max_depth':[2, 3, 4], 'n_trees': [10, 20]}] # optionally add non-default arguments as single-element arrays

default_params = [{'verbose':0, ...}] # other default parameters

# do parameter search

ps = ParameterSearch(DensityForest, tuned_params, X_train, X_train_all, y_true_tr, f_scorer, n_iter=2, verbosity=0, n_jobs=1, default_params=default_params)

ps.fit()

# get model with the best parameters, as above

clf_df = DensityForest(**ps.best_params, **default_params) # create new class instance with best hyperparameters

... # continue as above

Check the docstrings for more detailed documentation of the ParameterSearch class.
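To illustrate what a search over a grid like `tuned_params` involves, here is a minimal, generic grid search in plain Python. It is not the ParameterSearch class and makes no claim about its internals. The helpers `expand_grid` and `grid_search` and the scoring function `toy_score` are hypothetical; in practice the score would come from fitting and evaluating a model.

```python
from itertools import product

def expand_grid(tuned_params):
    """Expand a list of {name: [values]} grids into individual parameter dicts."""
    combos = []
    for grid in tuned_params:
        keys = sorted(grid)
        for values in product(*(grid[k] for k in keys)):
            combos.append(dict(zip(keys, values)))
    return combos

def grid_search(tuned_params, score_fn):
    """Return the parameter combination with the highest score, and that score."""
    best_params, best_score = None, float('-inf')
    for params in expand_grid(tuned_params):
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

tuned_params = [{'max_depth': [2, 3, 4], 'n_trees': [10, 20]}]

def toy_score(params):
    # Hypothetical score: prefers max_depth == 3 and slightly prefers more trees
    return -abs(params['max_depth'] - 3) + 0.01 * params['n_trees']

best_params, best_score = grid_search(tuned_params, toy_score)
```

The grid above expands to six combinations, and the best one can then be unpacked into a constructor with `**best_params`.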

All libraries for density forests, helper libraries for semantic segmentation and for baselines.

Package for implementation of Decision Trees, Random Forests, Density Trees and Density Forests

- create_data.py: functions for generating labelled and unlabelled data
- decision_tree.py: data structure for decision tree nodes
- decision_tree_create.py: functions for generating decision trees
- decision_tree_traverse.py: functions for traversing a decision tree and predicting labels
- density_forest.py: functions for creating density forests
- density_tree.py: data structure for density tree nodes
- density_tree_create.py: functions for generating a density tree
- density_tree_traverse.py: functions for descending a density tree and retrieving its cluster parameters
- helper.py: various helper functions
- random_forests.py: functions for creating random forests

General helpers library for semantic segmentation

- data_augment.py: custom data augmentation methods applied to both the image and the ground truth
- data_loader.py: PyTorch data loader for the Zurich dataset
- helpers.py: functions for importing, cropping and padding images, and other related image transformations
- parameter_search.py: functions for finding optimal hyperparameters for Density Forest, OC-SVM and GMM (explained above)
- plots.py: generic plotter functions for labelled and unlabelled 2D and 3D plots, used for t-SNE and PCA plots

Helper functions for confidence estimation baselines MSR, margin, entropy and MC-Dropout
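Three of these baselines can be computed directly from softmax outputs. The sketch below uses their usual definitions: MSR as the maximum softmax response, margin as the difference between the two largest class probabilities, and entropy negated so that higher values mean higher confidence. The function names are hypothetical and the repository's helpers may differ in detail. MC-Dropout is omitted because it requires repeated stochastic forward passes through the network.

```python
import numpy as np

def msr(probs):
    """Maximum softmax response: the highest class probability per sample."""
    return probs.max(axis=1)

def margin(probs):
    """Difference between the largest and second-largest class probabilities."""
    top2 = np.sort(probs, axis=1)[:, -2:]  # two largest values, ascending
    return top2[:, 1] - top2[:, 0]

def neg_entropy(probs, eps=1e-12):
    """Negative Shannon entropy, so that higher values mean higher confidence."""
    return np.sum(probs * np.log(probs + eps), axis=1)

# Softmax outputs in [N, C]: a confident prediction and an ambiguous one
probs = np.array([
    [0.98, 0.01, 0.01],
    [0.40, 0.35, 0.25],
])

conf_msr = msr(probs)
conf_margin = margin(probs)
conf_entropy = neg_entropy(probs)
```

All three scores rank the first sample as more confident than the second.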

Helper functions for Keras

- helpers.py: get activations
- callbacks.py: callbacks to be evaluated after each epoch
- unet.py: UNET model for training of network on Zurich dataset

Visualizations of basic decision tree and density tree

- Decision Forest.ipynb: Decision Trees and Random Forest on randomly generated labelled data
- Density Forest.ipynb: Density Trees on randomly generated unlabelled data

- MNIST Novelty Detection.ipynb: Training of a CNN leaving out one class, baselines and DF for novelty detection
- MNIST Error Detection.ipynb: Training of a CNN, baselines and DF for error detection

- Zurich Dataset Novelty Detection.ipynb: Training of CNN, baselines and DF for novelty detection
- Zurich Dataset Error Detection.ipynb: Training of CNN, baselines and DF for error detection

- Prof. Devis Tuia, University of Wageningen

- Diego Marcos González, University of Wageningen

- Prof. François Golay, EPFL

Cyril Wendl, 2018