Sentiment Analysis of tweets written in under-resourced South Slavic languages (Serbian, Bosnian and Croatian) using the pretrained multilingual RoBERTa-based model XLM-R on 2 different datasets.

Sentiment analysis is performed on the 2 datasets separately (I decided not to join them because I wanted to compare my results with similar work on each dataset):

- CLARIN.SI - Twitter sentiment for 15 European languages:

The dataset can be found here. It consists of tweet IDs, which can be used to extract the tweets through the Twitter API, and the corresponding labels (positive, negative or neutral). From this dataset, only Serbian, Croatian and Bosnian tweets were used.

Note that this dataset is 5 years old, so a large number of its tweets can no longer be extracted because they have been deleted. I managed to extract only 27439 of the 193827 tweets.

Similar work on the same dataset can be found here. They achieved 55.9% accuracy on the full dataset of 193827 Serbian, Bosnian and Croatian tweets.

- doiSerbia:

This dataset was collected by Ljajić Adela and Marović Ulfeta, and their work on the same problem can be found here. They achieved 69.693% accuracy. The dataset is balanced and consists of only 1152 labeled tweets, written in Serbian. The labels are 0 = positive, 2 = neutral and 4 = negative. The text of the tweets is not provided and has to be extracted through the Twitter API using the tweet IDs.
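Because the two datasets encode sentiment differently (strings in CLARIN.SI, the codes 0/2/4 in doiSerbia), a classifier trained with cross-entropy needs them mapped onto contiguous class indices. The sketch below is one way to do that; the target order (0 = negative, 1 = neutral, 2 = positive) and the helper name `encode_label` are hypothetical choices, not taken from the original code.

```python
# Hypothetical target indices: 0 = negative, 1 = neutral, 2 = positive.
CLARIN_LABELS = {"negative": 0, "neutral": 1, "positive": 2}
# doiSerbia codes as described above: 0 = positive, 2 = neutral, 4 = negative.
DOISERBIA_LABELS = {4: 0, 2: 1, 0: 2}


def encode_label(label, dataset):
    """Return the class index for a raw label from the given dataset."""
    if dataset == "clarin":
        # Normalize case and surrounding whitespace before the lookup.
        return CLARIN_LABELS[str(label).strip().lower()]
    if dataset == "doiserbia":
        # Codes may be read from CSV as strings, so cast to int first.
        return DOISERBIA_LABELS[int(label)]
    raise ValueError(f"unknown dataset: {dataset}")
```

With one shared index space, the same linear classifier head and loss can be used for both datasets.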

Since the datasets contain only tweet IDs and not the text of the tweets, the text has to be extracted through the Twitter API using the tweepy package.

You first need to create a developer account for the Twitter API. After you file a request, you will need to wait a few days for approval. When your request is approved, download the API key, API secret key, access token and access token secret. My keys are stored in a keys.txt file, which is not provided for privacy and security reasons.
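A minimal sketch of the extraction step, assuming tweepy v4 (`tweepy.OAuth1UserHandler`, `API.lookup_statuses`; in tweepy v3 the lookup method was called `statuses_lookup`) and a developer account that still has access to the v1.1 status lookup endpoint. The layout of keys.txt (the four credentials, one per line, in the order listed above) and the helper names `read_keys`, `make_api`, `batched` and `fetch_texts` are hypothetical. The lookup endpoint accepts up to 100 IDs per request, and deleted tweets are simply absent from its response.

```python
from itertools import islice


def read_keys(path="keys.txt"):
    """Read API key, API secret key, access token and access token secret,
    one per line (this file layout is an assumption)."""
    with open(path, encoding="utf-8") as f:
        keys = [line.strip() for line in f if line.strip()]
    if len(keys) != 4:
        raise ValueError(f"expected 4 keys in {path}, found {len(keys)}")
    return keys


def make_api(path="keys.txt"):
    """Build an authenticated tweepy v4 client. tweepy is imported lazily
    so the helpers below can be used without it installed."""
    import tweepy

    api_key, api_secret, access_token, access_secret = read_keys(path)
    auth = tweepy.OAuth1UserHandler(api_key, api_secret, access_token, access_secret)
    # Sleep instead of failing when the rate limit is hit.
    return tweepy.API(auth, wait_on_rate_limit=True)


def batched(iterable, size):
    """Yield consecutive lists of at most `size` items."""
    it = iter(iterable)
    while batch := list(islice(it, size)):
        yield batch


def fetch_texts(api, tweet_ids, batch_size=100):
    """Map tweet ID -> full tweet text. Deleted or protected tweets are not
    returned by the lookup, so they are missing from the result."""
    texts = {}
    for batch in batched(tweet_ids, batch_size):
        # tweet_mode="extended" returns the untruncated text in `full_text`.
        for status in api.lookup_statuses(batch, tweet_mode="extended"):
            texts[status.id] = status.full_text
    return texts
```

Usage would be `texts = fetch_texts(make_api(), ids)`, after which the texts can be joined back onto the label table by ID; rows whose ID is missing become the NaN rows handled in preprocessing.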

- (CLARIN.SI only) There are many NaN rows because many of the tweets have been deleted; these rows are dropped.

- (CLARIN.SI only) Because some tweets were annotated multiple times by the same annotator, there can be duplicated rows. First, among duplicated tweets that have the same HandLabel, all rows but one are dropped. After that, all remaining duplicated tweets are dropped, since their HandLabels differ and we do not know which one is correct. Dropping all duplicates at once, without looking at the HandLabel, would have been wrong, because it would throw away the highest-quality data (the tweets that were given the same label multiple times).

- All tweets are converted to lowercase.

- All links are removed, since they do not contain any relevant information for this task. The '[video]' and '{link}' strings are removed as well, because Twitter sometimes converts links to these keywords.

- Many tweets are retweets, which means they contain 'RT @tweet_user'. Since 'RT @' is of no use, it is replaced by '@' ('@' is kept as an indicator of tweet_user, because usernames are removed in the next step).

- All usernames are removed. Usernames are words that start with '@'.

- Dealing with hashtags: the hashtag symbol '#' is removed, but the words that follow it are kept, since they usually contain a lot of useful information (they are often a compressed representation of the tweet). Since those words are often joined with the '_' character, this character is converted to a blank space ' '.

- Finally, the datasets are shuffled randomly and split into train, validation and test sets (80%, 10% and 10%).
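The preprocessing steps above can be sketched with pandas and regular expressions as follows. This is an illustration under assumptions, not the original code: the column names `TweetID` and `text` (only `HandLabel` appears in the text above), the regexes, the helper names and the random seed are all hypothetical.

```python
import re

import pandas as pd

URL_RE = re.compile(r"https?://\S+|www\.\S+")
USER_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#(\w+)")


def drop_missing_and_duplicates(df, id_col="TweetID", label_col="HandLabel", text_col="text"):
    """CLARIN.SI only: drop deleted tweets, then resolve duplicate annotations."""
    df = df.dropna(subset=[text_col])
    # Keep one row per (tweet, label) pair: identical repeated labels survive once.
    df = df.drop_duplicates(subset=[id_col, label_col])
    # Any tweet still duplicated has conflicting labels, so drop all of its rows.
    return df.drop_duplicates(subset=[id_col], keep=False)


def clean_tweet(text):
    """Apply the text-cleaning steps in the order listed above."""
    text = text.lower()
    text = URL_RE.sub(" ", text)
    text = text.replace("[video]", " ").replace("{link}", " ")
    text = re.sub(r"\brt @", "@", text)  # retweet marker -> plain '@' mention
    text = USER_RE.sub(" ", text)  # remove usernames
    # '#dobar_dan' -> 'dobar dan': drop '#', turn '_' into spaces
    text = HASHTAG_RE.sub(lambda m: m.group(1).replace("_", " "), text)
    return " ".join(text.split())  # collapse leftover whitespace


def shuffle_and_split(df, seed=42):
    """Shuffle rows, then split 80% / 10% / 10% into train / val / test."""
    df = df.sample(frac=1.0, random_state=seed).reset_index(drop=True)
    n_train, n_val = int(0.8 * len(df)), int(0.1 * len(df))
    return df.iloc[:n_train], df.iloc[n_train:n_train + n_val], df.iloc[n_train + n_val:]
```

For example, `clean_tweet("RT @user_1 Odličan #dobar_dan http://t.co/abc [video]")` reduces the tweet to `"odličan dobar dan"`. Python's `\w` matches Unicode letters, so Latin-script (č, ć, š, ž, đ) and Cyrillic hashtags are kept intact.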

Figure panels: CLARIN.SI | doiSerbia

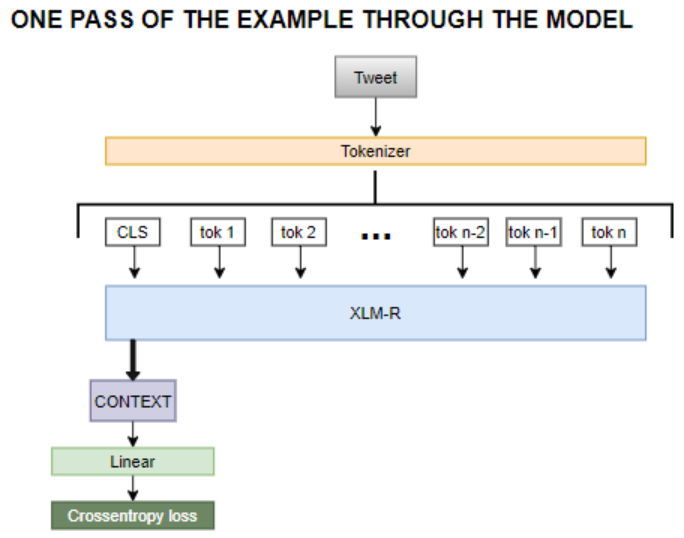

Since the datasets are relatively small, the pretrained multilingual RoBERTa-based language model XLM-R is fine-tuned for this task. The XLM-RoBERTa SentencePiece tokenizer is used to tokenize the tweets.

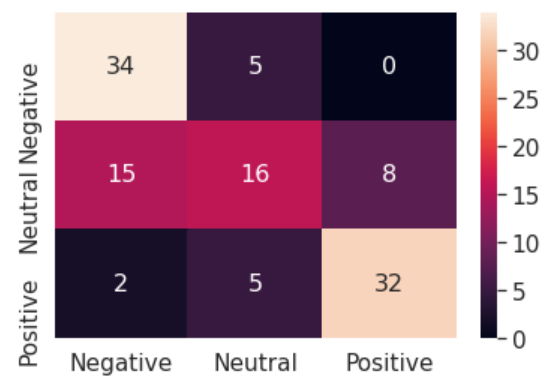
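A minimal sketch of such a model with Hugging Face `transformers` and PyTorch: the XLM-R encoder followed by a linear classifier. Using the hidden state of the first (`<s>`) token as the tweet representation, the checkpoint name `xlm-roberta-base`, `max_length=128` and the names `XLMRClassifier`, `build_model_and_tokenizer` and `tokenize` are assumptions for illustration, not details taken from this project.

```python
import torch
from torch import nn
from transformers import AutoModel, AutoTokenizer


class XLMRClassifier(nn.Module):
    """Pretrained encoder + randomly initialized linear classification head."""

    def __init__(self, encoder, num_labels=3):
        super().__init__()
        self.encoder = encoder
        self.classifier = nn.Linear(encoder.config.hidden_size, num_labels)

    def forward(self, input_ids, attention_mask=None):
        hidden = self.encoder(input_ids=input_ids, attention_mask=attention_mask).last_hidden_state
        # Assumption: classify from the first (<s>) token's hidden state.
        return self.classifier(hidden[:, 0])


def build_model_and_tokenizer(name="xlm-roberta-base", num_labels=3):
    """Download the SentencePiece tokenizer and encoder (name is hypothetical)."""
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = XLMRClassifier(AutoModel.from_pretrained(name), num_labels)
    return model, tokenizer


def tokenize(tokenizer, texts, max_length=128):
    """Pad/truncate a batch of cleaned tweets into PyTorch tensors."""
    return tokenizer(texts, padding=True, truncation=True,
                     max_length=max_length, return_tensors="pt")
```

A forward pass is then `batch = tokenize(tokenizer, tweets)` followed by `logits = model(batch["input_ids"], batch["attention_mask"])`, giving one row of 3 class scores per tweet.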

| | CLARIN.SI (my result) | CLARIN.SI (similar work) | doiSerbia (my result) | doiSerbia (similar work) |
|---|---|---|---|---|
| accuracy | 63% | 55.9% | 74% | 69.693% |
| data size (tweets) | 27439 | 193827 | 1152 | 7663 |

Since the language model is pretrained while the linear classifier has no 'knowledge' (it starts with random weights), the language model is frozen at the start of training and only the classifier is trained, with a large learning rate, for a few epochs. After that, the language model is unfrozen and the complete model is trained with a small learning rate, because we do not want the language model to quickly 'forget' what it already 'knows' (which can easily lead to heavy overfitting). To make learning more stable, a linear learning-rate scheduler with warmup is used in both the frozen and the fine-tuning regime. The AdamW optimizer is used as well; it is a variant of Adam with decoupled weight decay, i.e. the weight-decay term is applied directly to the weights instead of being added to the gradient, so it does not enter the momentum estimates (you can find an explanation here). This is important because the models are trained with relatively large weight decay.
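The two-stage schedule can be sketched in PyTorch as below. It assumes the model keeps its pretrained part in a `model.encoder` attribute (a hypothetical layout); the helper names are also hypothetical. The scheduler is written with `LambdaLR` and has the same shape as `transformers.get_linear_schedule_with_warmup`: the learning rate rises linearly from 0 over the warmup steps, then decays linearly to 0 at the last step. `torch.optim.AdamW` implements the decoupled weight decay described above.

```python
from torch.optim import AdamW
from torch.optim.lr_scheduler import LambdaLR


def set_encoder_trainable(model, trainable):
    """Freeze (False) or unfreeze (True) the pretrained language model."""
    for p in model.encoder.parameters():
        p.requires_grad = trainable


def linear_warmup_schedule(optimizer, warmup_steps, total_steps):
    """Linear warmup from 0 to the base LR, then linear decay to 0."""
    def factor(step):
        if step < warmup_steps:
            return step / max(1, warmup_steps)
        return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
    return LambdaLR(optimizer, factor)


def make_stage(model, lr, weight_decay, warmup_steps, total_steps):
    """AdamW + scheduler over the currently trainable parameters only."""
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = AdamW(params, lr=lr, weight_decay=weight_decay)
    return optimizer, linear_warmup_schedule(optimizer, warmup_steps, total_steps)
```

Stage 1 calls `set_encoder_trainable(model, False)` and `make_stage(...)` with a large learning rate, so only the classifier is optimized; stage 2 calls `set_encoder_trainable(model, True)` and builds a fresh optimizer and scheduler with a small learning rate. In both stages, `optimizer.step()` is followed by `scheduler.step()` once per batch. Rebuilding the optimizer per stage also gives the newly unfrozen encoder weights fresh AdamW moment estimates.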

Figure panels: CLARIN.SI | doiSerbia

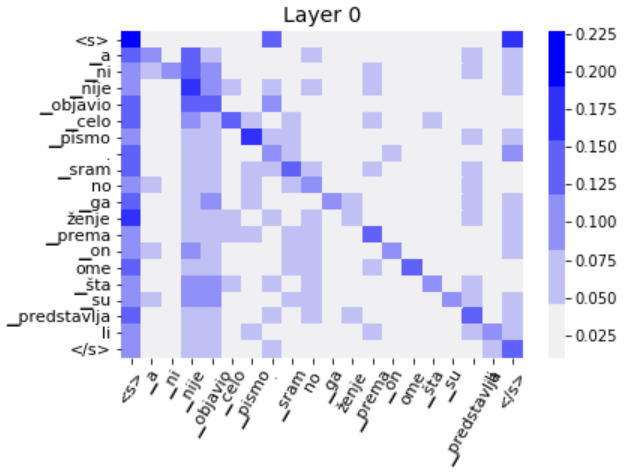

Self-attention matrix for the first layer (matrices for the other layers are available in checkpoints/dataset2/best/attentions):

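Tweet: A ni nije objavio celo pismo. Sramno gaženje prema onome šta su predstavljali (English: "And he didn't even publish the whole letter. A shameful trampling of what they stood for")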