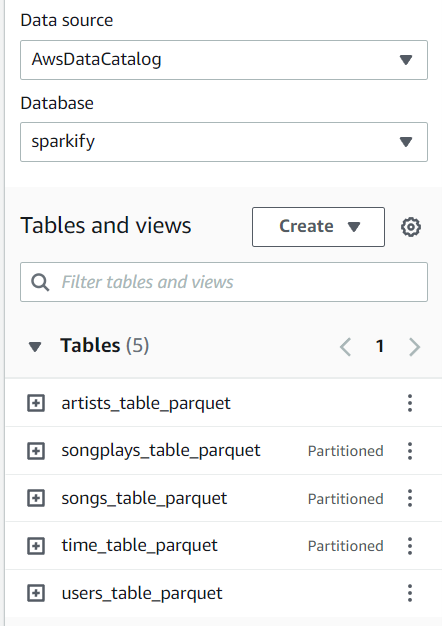

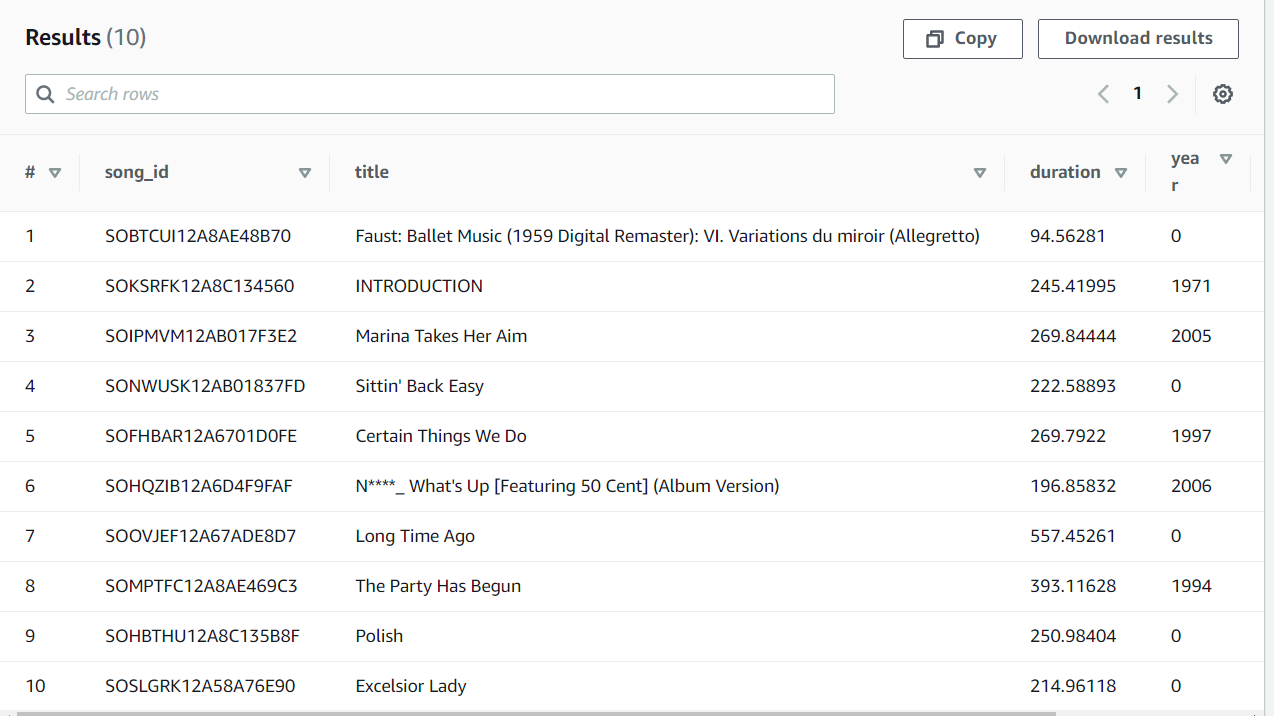

This Data Lake was created as a means to access the Sparkify data for analytical purposes. The ETL loads data into S3 in parquet format. A Glue crawler and Athena can be used to query the data.

The project has been created with the following structure:

├── README.md

├── dl_template.cfg

├── etl.py

└── iac_emrcluster.sh- dl_template.cfg: Template for the configuration file. Fill in the missing information and rename the file to dl.cfg

- etl.py: Python script that loads the data from udacity S3 into a star schema in another S3 bucket.

- iac_emrcluster.sh: Bash utility that creates and deletes an EMR Cluster (IaC).

Create the cluster

python iac_emrcluster.sh -cNote Make sure to write down the cluster id.

After a while check if the cluster is available by running the status command

python iac_emrcluster.sh -s $cluster_idThis will return a response and if the StateChangeReason is Cluster ready to run steps. the cluster is set to run the etl

The cluster can be terminated running:

python iac_emrcluster.sh -t $cluster_idwarning This will delete the cluster and all the information with it. After this point all non saved data will be lost and the creation command will be needed to recreate the cluster.**

The data is ingested and inserted into the OLAP Schema.

- songplays

- users

- songs

- artists

- time

To create the tables run ssh into the cluster

ssh -i $pem_key.pem user@urlCopy the script to the EMR cluster

scp -i $pem_key.pem etl.py user@url:/home/hadoopand run following command.

/usr/bin/spark-submit --master yarn ./etl.pyA star schema was implemented in order to make queries about the usage of the streaming app as simple as possible.

- Running the job in an EMR Cluster with appropiate roles makes the AWS credentiasl redundant. Comment those lines.

- Running the job locally does require the credentials.

A Glue Crawler may be used to get the schema from the S3 bucket. From then the AWS Console or boto3 can be used with the Athena service to query the data