Training performance dropped with the latest version

Closed this issue · 2 comments

nuomizai commented

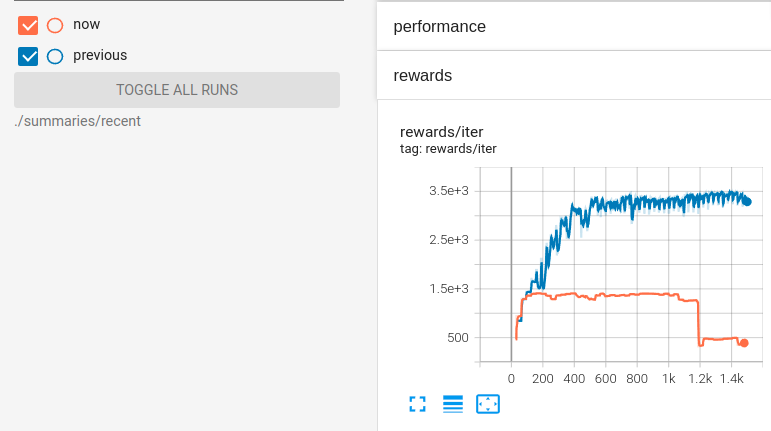

Hi, @Denys88 . I saw an appearant performance drop during training with the latest rl_games version, the reward picture is as follows (trained with the FrankaCabinet Environment in IsaacGymEnvs):

The orange line is training with the latest version and the blue one is training with the old version (v1.4.0). I found that in the latest a2c_common.py there is no self.schedule_type anymore, and the scheduler is always updated the way it used to be when self.schedule_type == 'standard'. The latest code is as follows:

    for mini_ep in range(0, self.mini_epochs_num):
        ep_kls = []
        for i in range(len(self.dataset)):
            a_loss, c_loss, entropy, kl, last_lr, lr_mul, cmu, csigma, b_loss = self.train_actor_critic(self.dataset[i])
            a_losses.append(a_loss)
            c_losses.append(c_loss)
            ep_kls.append(kl)
            entropies.append(entropy)
            if self.bounds_loss_coef is not None:
                b_losses.append(b_loss)

            self.dataset.update_mu_sigma(cmu, csigma)

        av_kls = torch_ext.mean_list(ep_kls)
        if self.multi_gpu:
            dist.all_reduce(av_kls, op=dist.ReduceOp.SUM)
            av_kls /= self.rank_size

        self.last_lr, self.entropy_coef = self.scheduler.update(self.last_lr, self.entropy_coef, self.epoch_num, 0, av_kls.item())
        self.update_lr(self.last_lr)

When I changed the code as follows:

    for mini_ep in range(0, self.mini_epochs_num):
        ep_kls = []
        for i in range(len(self.dataset)):
            a_loss, c_loss, entropy, kl, last_lr, lr_mul, cmu, csigma, b_loss = self.train_actor_critic(self.dataset[i])
            a_losses.append(a_loss)
            c_losses.append(c_loss)
            ep_kls.append(kl)
            entropies.append(entropy)
            if self.bounds_loss_coef is not None:
                b_losses.append(b_loss)

            self.dataset.update_mu_sigma(cmu, csigma)

            if self.multi_gpu:
                dist.all_reduce(av_kls, op=dist.ReduceOp.SUM)
                av_kls /= self.rank_size

            self.last_lr, self.entropy_coef = self.scheduler.update(self.last_lr, self.entropy_coef, self.epoch_num, 0, av_kls.item())
            self.update_lr(self.last_lr)

        av_kls = torch_ext.mean_list(ep_kls)

With this change, the performance is the same as it was with the old version.

So, why did you choose to remove the self.schedule_type option? Would it be better to keep it and set the default to self.schedule_type = 'legacy', as before?
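
To make the difference between the two placements concrete, here is a minimal toy sketch. It is not the rl_games code: `adaptive_lr`, `run_schedule`, the threshold values, and the KL numbers are all assumptions made up for illustration. It only shows that calling a KL-adaptive rule once per minibatch changes the learning rate far more often than calling it once per mini-epoch on the averaged KL.

```python
def adaptive_lr(lr, kl, kl_threshold=0.008, min_lr=1e-6, max_lr=1e-2):
    """Hypothetical KL-adaptive rule: shrink lr on high KL, grow it on low KL."""
    if kl > 2.0 * kl_threshold:
        lr = max(lr / 1.5, min_lr)
    if kl < 0.5 * kl_threshold:
        lr = min(lr * 1.5, max_lr)
    return lr


def run_schedule(kls_per_mini_epoch, lr, per_minibatch):
    """Apply the rule per minibatch or once per mini-epoch on the mean KL.

    Returns the final learning rate and the number of scheduler calls.
    """
    calls = 0
    for ep_kls in kls_per_mini_epoch:
        if per_minibatch:
            # Update after every minibatch, using that minibatch's KL.
            for kl in ep_kls:
                lr = adaptive_lr(lr, kl)
                calls += 1
        else:
            # Update once per mini-epoch, using the mean KL over its minibatches.
            av_kl = sum(ep_kls) / len(ep_kls)
            lr = adaptive_lr(lr, av_kl)
            calls += 1
    return lr, calls


# Made-up data: 2 mini-epochs of 4 minibatches each, all with low KL.
toy_kls = [[0.001] * 4 for _ in range(2)]

lr_per_minibatch, calls_per_minibatch = run_schedule(toy_kls, 3e-4, per_minibatch=True)
lr_per_mini_epoch, calls_per_mini_epoch = run_schedule(toy_kls, 3e-4, per_minibatch=False)

print("per minibatch: ", lr_per_minibatch, calls_per_minibatch)
print("per mini-epoch:", lr_per_mini_epoch, calls_per_mini_epoch)
```

With the same KL values, the per-minibatch variant makes four times as many scheduler calls here, so the learning rate moves much further within a single epoch. That might explain why the two versions train so differently under the same config.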