- 🚀 Preprint

- Competition CodaLab Page

- Dataset paper

- Task Github Repo

- Our pretrained adapters (refer to the paper; these adapters perform best when merged):

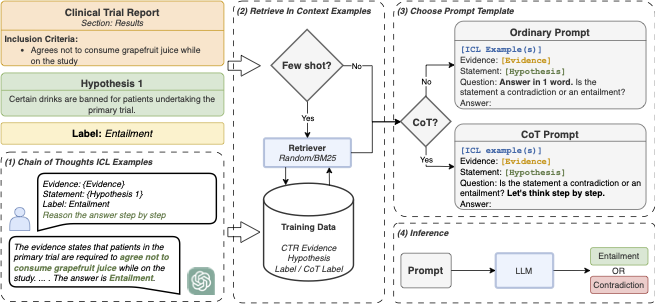

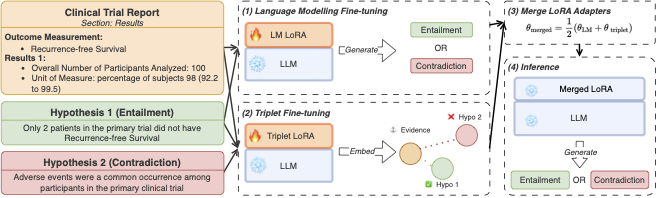

Clinical trials are conducted to assess the effectiveness and safety of new treatments. Clinical Trial Reports (CTRs) outline the methodology and findings of a clinical trial, and they are used to design and prescribe experimental treatments. Applying LLMs in critical domains such as real-world clinical trials requires further investigation, accompanied by the development of novel evaluation methodologies grounded in more systematic behavioural and causal analyses.

This second iteration is intended to ground NLI4CT in interventional and causal analyses of NLI models, enriching the original NLI4CT dataset with a novel contrast set, developed through the application of a set of interventions on the statements in the NLI4CT test set.

conda env create -f environment.yml

conda activate semeval_nli4ct

python scripts/train.py experiment=0_shot/llama2_7b_chat

python scripts/train.py experiment=0_shot/llama2_13b_chat

python scripts/train.py experiment=0_shot/mistral_7b_instruct

python scripts/train.py experiment=0_shot/mistrallite_7b

To run the GPT-4 experiment, use the scripts/notebooks/gpt4_inference.ipynb notebook. You have to set your own OPENAI_API_KEY as an environment variable.
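Before opening the notebook, it can help to confirm that the key is actually visible to Python. The snippet below is a minimal sketch, not part of this repository: `require_env` is a hypothetical helper name, and only the variable name OPENAI_API_KEY comes from the instructions above.

```python
import os


def require_env(names, environ=None):
    """Return the requested variables, raising if any is missing or empty.

    `require_env` is a hypothetical helper for illustration only; it is not
    provided by this repository.
    """
    environ = os.environ if environ is None else environ
    missing = [name for name in names if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in names}


if __name__ == "__main__":
    # OPENAI_API_KEY is the variable named in the instructions above.
    try:
        require_env(["OPENAI_API_KEY"])
        print("OPENAI_API_KEY is set")
    except RuntimeError as err:
        print(err)
```

Running this in the same shell session that launches Jupyter shows whether the notebook will see the key.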

# 1-shot CoT experiments

python scripts/train.py experiment=1_shot/mistral_7b_instruct retriever=bm25

python scripts/train.py experiment=1_shot/llama2_7b_chat retriever=bm25

python scripts/train.py experiment=1_shot/llama2_13b_chat retriever=bm25

python scripts/train.py experiment=1_shot/mistrallite_7b retriever=bm25
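The few-shot commands differ only in the shot count and the model config name, so they can be generated rather than typed one by one. The sketch below only builds and prints the command lines. `build_commands` and `MODELS` are hypothetical names introduced here, and the config names are copied from the commands in this section.

```python
import shlex

# Model config names as they appear in the commands of this section.
MODELS = ["mistral_7b_instruct", "llama2_7b_chat", "llama2_13b_chat", "mistrallite_7b"]


def build_commands(families, models=MODELS, retriever="bm25"):
    """Build one argument list per (experiment family, model) pair.

    Hypothetical helper for illustration; it does not run anything.
    """
    commands = []
    for family in families:
        for model in models:
            args = ["python", "scripts/train.py", f"experiment={family}/{model}"]
            if retriever is not None:
                args.append(f"retriever={retriever}")
            commands.append(args)
    return commands


if __name__ == "__main__":
    # Print the commands; swap print for subprocess.run(args, check=True) to execute them.
    for args in build_commands(["1_shot", "2_shot"]):
        print(shlex.join(args))
```

Passing `retriever=None` omits the retriever override, which matches the form of the zero-shot commands.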

# 2-shot CoT experiments

python scripts/train.py experiment=2_shot/mistral_7b_instruct retriever=bm25

python scripts/train.py experiment=2_shot/llama2_7b_chat retriever=bm25

python scripts/train.py experiment=2_shot/llama2_13b_chat retriever=bm25

python scripts/train.py experiment=2_shot/mistrallite_7b retriever=bm25

python scripts/train.py experiment=cot_0_shot/mistral_7b_instruct

python scripts/train.py experiment=cot_0_shot/llama2_7b_chat

python scripts/train.py experiment=cot_0_shot/llama2_13b_chat

python scripts/train.py experiment=cot_0_shot/mistrallite_7b

# Create the ChatGPT explanation

# Replace AZURE_OPENAI_KEY and AZURE_OPENAI_ENDPOINT accordingly

python scripts/generate_cot_chatgpt.py

# 1-shot CoT experiments

python scripts/train.py experiment=cot_1_shot/mistral_7b_instruct retriever=bm25

python scripts/train.py experiment=cot_1_shot/llama2_7b_chat retriever=bm25

python scripts/train.py experiment=cot_1_shot/llama2_13b_chat retriever=bm25

python scripts/train.py experiment=cot_1_shot/mistrallite_7b retriever=bm25

# 2-shot CoT experiments

python scripts/train.py experiment=cot_2_shot/mistral_7b_instruct retriever=bm25

python scripts/train.py experiment=cot_2_shot/llama2_7b_chat retriever=bm25

python scripts/train.py experiment=cot_2_shot/llama2_13b_chat retriever=bm25

python scripts/train.py experiment=cot_2_shot/mistrallite_7b retriever=bm25

python scripts/train.py experiment=fine_tune/llama2_7b_chat

python scripts/train.py experiment=fine_tune/llama2_13b_chat

python scripts/train.py experiment=fine_tune/meditron_7b

python scripts/train.py experiment=fine_tune/mistral_7b_instruct

python scripts/train.py experiment=fine_tune/mistrallite_7b

# Train the triplet loss adapter separately

python scripts/fine_tune_contrastive_learning.py experiment=fine_tune/mistrallite_7b
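For readers unfamiliar with the objective named above, the following is a minimal sketch of the standard triplet loss on plain Python vectors. It assumes Euclidean distance and a margin of 1.0 purely for illustration; the distance function, margin, and embedding source used by scripts/fine_tune_contrastive_learning.py may differ.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: max(0, d(anchor, positive) - d(anchor, negative) + margin).

    The margin and the distance are illustrative assumptions, not values
    taken from this repository.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


if __name__ == "__main__":
    # The positive is already much closer than the negative, so the loss is zero.
    print(triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0]))
    # Swapping positive and negative violates the margin and yields a positive loss.
    print(triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0]))
```

The loss reaches zero once the positive example is closer to the anchor than the negative example by at least the margin.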

python scripts/train.py experiment=pretrained_0_shot/mistrallite_7b_contrastive_common_avg

To run the pretrained_0_shot/mistrallite_7b_contrastive_common_avg experiment, you can download our pretrained LoRA adapters: