This is a Deep Reinforcement Learning solution for the Lunar Lander problem in OpenAI Gym, using a dueling network architecture and the Double DQN algorithm. It was developed in a Jupyter notebook on the Kaggle platform with a P100 GPU accelerator. The model weights are in the `model` folder, and the results, in CSV format, are in the `results` folder.
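The two ideas named above can be sketched in a few lines. This is a minimal, framework-free illustration on plain Python lists, not the notebook's code: the function names are hypothetical, `gamma=0.99` is an assumed default, and a real implementation would apply the same formulas to batched tensors. The dueling head combines a state value V(s) with per-action advantages A(s, a) using the mean-subtracted aggregation, and Double DQN lets the online network choose the next action while the target network evaluates it.

```python
from statistics import mean


def dueling_q_values(value, advantages):
    """Combine V(s) and A(s, a) into Q(s, a) with Q = V + (A - mean(A)).

    Subtracting the mean advantage keeps V and A identifiable.
    """
    avg = mean(advantages)
    return [value + a - avg for a in advantages]


def double_dqn_target(reward, done, next_q_online, next_q_target, gamma=0.99):
    """Double DQN target for one transition (hypothetical helper).

    The online network selects the greedy next action, and the target
    network supplies the value of that action.
    """
    if done:
        # Terminal transitions have no bootstrapped future value.
        return reward
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best_action]


print(dueling_q_values(1.0, [1.0, 2.0, 3.0, 2.0]))
print(double_dqn_target(1.0, False, [0.1, 0.5, 0.2, 0.0], [10.0, 4.0, 8.0, 0.0], gamma=0.5))
```

With these numbers the online network picks action 1, so the target is 1.0 + 0.5 * 4.0 = 3.0. Plain DQN would instead take the maximum of the target network's values (10.0) and produce 6.0; decoupling action selection from evaluation is what reduces this overestimation.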

Install the dependencies with `pip install swig "gym[box2d]"`.

Action Space

| Action (discrete value) | Description |
|---|---|
| 0 | No action |
| 1 | Fire left orientation engine |
| 2 | Fire main engine |
| 3 | Fire right orientation engine |

Observation Space

| Index in the observation (state) vector | Description |
|---|---|
| 0 | Lander horizontal coordinate |
| 1 | Lander vertical coordinate |
| 2 | Lander horizontal speed |
| 3 | Lander vertical speed |
| 4 | Lander angle |
| 5 | Lander angular speed |
| 6 | Bool: 1 if first leg has contact, else 0 |
| 7 | Bool: 1 if second leg has contact, else 0 |
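The action and observation tables can be turned into a small lookup helper, which is handy when logging or debugging an agent. This is a sketch that does not import Gym; the names `ACTION_NAMES`, `OBSERVATION_FIELDS`, and `describe_observation` are hypothetical, and it assumes the observation arrives as an 8-element sequence of floats in the order listed above, with leg-contact flags encoded as 0.0 or 1.0.

```python
# Action ids and descriptions, taken from the action table.
ACTION_NAMES = {
    0: "No action",
    1: "Fire left orientation engine",
    2: "Fire main engine",
    3: "Fire right orientation engine",
}

# Field names for each index of the observation vector (hypothetical labels).
OBSERVATION_FIELDS = (
    "x",
    "y",
    "vx",
    "vy",
    "angle",
    "angular_speed",
    "leg1_contact",
    "leg2_contact",
)


def describe_observation(obs):
    """Map a raw 8-value observation to a dict keyed by field name."""
    if len(obs) != len(OBSERVATION_FIELDS):
        raise ValueError(f"expected {len(OBSERVATION_FIELDS)} values, got {len(obs)}")
    named = dict(zip(OBSERVATION_FIELDS, obs))
    # Leg-contact flags are numeric 0/1; expose them as booleans.
    named["leg1_contact"] = bool(named["leg1_contact"])
    named["leg2_contact"] = bool(named["leg2_contact"])
    return named


obs = describe_observation([0.1, 1.4, 0.0, -0.5, 0.02, 0.0, 0.0, 1.0])
print(obs["y"], obs["leg2_contact"], ACTION_NAMES[2])
```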

For each step in the environment, a reward is granted. The total reward for an episode is the sum of the rewards at each step. For each step, the reward:

- is increased/decreased the closer to/further from the landing pad the lander is.

- is increased/decreased the slower/faster the lander is moving.

- is decreased the more the lander is tilted (angle not horizontal).

- is increased by 10 points for each leg that is in contact with the ground.

- is decreased by 0.03 points each frame a side engine is firing.

- is decreased by 0.3 points each frame the main engine is firing.

- is decreased by 100 points if the lander crashes.

- is increased by 100 points if the lander lands safely.
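The reward bullets above can be expressed as a per-step reward function. This is a simplified sketch, not the Gym source: the helper names `shaping` and `step_reward` are hypothetical, the 100 and 10 weights inside `shaping` are assumptions chosen for illustration, the landing pad is assumed to sit at the origin of the observation coordinates, and the firing engine is inferred from the discrete action (1 and 3 are side engines, 2 is the main engine). Distance, speed, tilt, and leg contact are scored as the change in a potential between consecutive states, which Gym's implementation also does; one consequence is that the +10 per leg is credited when contact begins rather than on every frame.

```python
import math


def shaping(state):
    """Potential of a state: higher when near the pad, slow, level, and legs down.

    The 100 and 10 weights are illustrative assumptions.
    """
    x, y, vx, vy, angle, _angular_speed, leg1, leg2 = state
    return (
        -100 * math.hypot(x, y)      # distance from the pad (assumed at the origin)
        - 100 * math.hypot(vx, vy)   # speed
        - 100 * abs(angle)           # tilt
        + 10 * leg1                  # first leg in contact
        + 10 * leg2                  # second leg in contact
    )


def step_reward(prev_state, state, action, crashed=False, landed=False):
    """Reward for one step of the discrete-action lander (sketch)."""
    # Reward moving toward a better state, penalize moving toward a worse one.
    reward = shaping(state) - shaping(prev_state)
    if action == 2:          # main engine
        reward -= 0.3
    elif action in (1, 3):   # side (orientation) engines
        reward -= 0.03
    if crashed:
        reward -= 100
    elif landed:
        reward += 100
    return reward


hover = [0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
lower = [0.0, 0.9, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(step_reward(hover, lower, action=2))
```

In the usage line, dropping from y = 1.0 to y = 0.9 while stationary earns about +10, and firing the main engine takes 0.3 off that.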

An episode is considered a solution if it scores at least 200 points. An episode ends when any of the following happens:

- The lander crashes.

- The lander gets outside of the viewport (x coordinate is greater than 1).

- The episode length exceeds 400 steps.
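The termination conditions and the 200-point threshold above can be checked with two small functions. This is a sketch with hypothetical names (`episode_finished`, `is_solved`, `MAX_EPISODE_STEPS`); it assumes the crash flag is supplied by the caller and treats leaving the viewport as the absolute value of the x coordinate exceeding 1, which is an assumption about how "x coordinate is greater than 1" applies to both edges of the screen.

```python
MAX_EPISODE_STEPS = 400  # episode-length cap stated in this README


def episode_finished(state, crashed, step):
    """Return True when any of the listed termination conditions holds."""
    # Assumption: the viewport spans -1 <= x <= 1 in observation coordinates.
    outside_viewport = abs(state[0]) > 1.0
    return crashed or outside_viewport or step > MAX_EPISODE_STEPS


def is_solved(step_rewards):
    """An episode counts as a solution if its total reward is at least 200."""
    return sum(step_rewards) >= 200


print(episode_finished([0.0] * 8, crashed=False, step=401))
print(is_solved([150.0, 60.0]))
```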

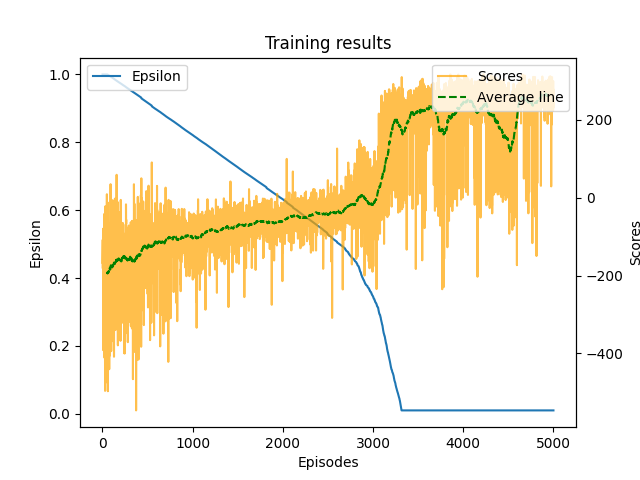

| Train | Test |
|---|---|

Note: You can experiment with various hyperparameters to achieve improved results.