[meta] HDP Declarative Programming (working draft)

fititnt opened this issue · 9 comments

Trivia:

- HDP naming:

- HDP = 'HDP Declarative Programming' is the default name.

- When in doubt (or when you or your tools can't detect the intent of use from the immediate context), this is a good way to call it. See Wikipedia for "Declarative programming" and "Recursive acronym".

- HDP = 'Humanitarian Declarative Programming' could be one way to call it when the intent of the moment is strictly humanitarian.

- The definition of humanitarian is out of scope.

The triggering motivation

Context: HXL-Data-Science-file-formats aimed to use HXL as a file format for direct input into software for data mining, machine learning and statistical analysis. Since HXL is a solution for fast sharing of humanitarian data, one problem becomes how to also make authorization to access data, and/or a minimal level of anonymization, as fast as possible (ideally in real time).

- The initial motivation for HDP was to be able to abstract "acceptable use policies" (AUP) on how data could be processed, in a way understood both by machines and by humans (think of anyone from judges able to enforce/authorize usage to local community representatives who could write rules knowing that machines could enforce them).

- The average usage scenario in this context already involves a huge lack of trust between different groups of humans.

- By no means am I claiming that implementing systems would not make mistakes (especially because I'm from @EticaAI, and we mostly advocate for avoiding misuse of A/IS), so from the start we're already planning ways to allow auditing without actually needing to access sensitive data.

- Auditing rules that could be reviewed even by people outside the country of origin, or without knowledge of the language, would make things easier,

- even if this means that a human who knows the native language but no programming could create a quick file to say what one term means

- ... and even if it means already planning ahead a way for such translation tables to be digitally signed.


- It is possible that the average usage of HDP (if it actually goes beyond proofs of concept or internal usage) may be as a way to both reference datasets (even if they are not ready to use, but could be triggered to be built) and give instructions to process them.

- To be practical, a syntax alone for abstract "acceptable use policies" (AUP), without some implementation, would not be usable. So an implementation actually is a requirement.

- At this moment (2021-03-16) it is not clear what should be heavily optimized for the HDP end user and what could be available in just a few languages (like English and Portuguese), but the idea of already trying to allow different natural languages to express references to datasets works as a benchmark.

Some drafted goals/restrictions (as of 2021-03-16):

- Both the documentation on how to write HDP and the proofs of concept that implement it are dedicated to the public domain. BSD-0 can be used as an alternative.

- No licenses or pre-authorizations to use are necessary.

- Be in the language of the user who creates the file. This means that the underlying tool should allow exchange of HDP files (which in practice describe how to find datasets or manipulate them) written, for example, in Portuguese or Russian, and others who don't understand such languages could still (with the help of HDP) convert the key terms.

- v0.7.5 already drafted this, but versions after v0.8.0 should improve the proofs of concept. At the moment the core_vocab already has the 6 UN official languages and, because this project was born via @HXL-CPLP, the Portuguese language.

- Note that special care is taken with HDP keywords and instructions that are likely to be used by people who, de facto, need to homologate how data can be used. Since the data columns may often be in the native language, one or two humans with both technical skills and a way to understand the native language may need to create a filter, label such a filter with tasks that accomplish what those users want, and then digitally sign this filter.

- The inner steps of commands delegated to underlying tools (the wonderful HXL Python library is a great example!) are not aimed at average end users. So, for the purpose of this goal, and to make localization easier (as the number of new tools to abstract could increase over time), we strictly don't grant them translations.

- It is possible that code editors already show usage tips in English for the underlying tools; at least for some languages (like Portuguese) we from HXL-CPLP may translate the help messages. Something similar could be done in other languages with volunteers.


- The syntax of HDP must be planned in such a way that it is intentionally hard for the average user to save information that would make the file itself a secret (like passwords or direct URLs to private resources).

- Users working on human rights, or in typical collaboration in the middle of urgent disasters, may share their HDP files through average end-user cloud file sharing. Since HDP files themselves may be shared across several small groups (where users only learn that a dataset exists, without requesting it), in the worst-case scenario only the data of one group is affected, so the potential damage is mitigated by default.

- In general the idea is to allow some level of indirection for things that need to be kept private while still maintaining usability.

- Be offline by default and, when applicable, be air-gapped network friendly.

- In some cases people may want to use HDP to manage files on a local network, because they need to work offline (or the files are too big), while sharing the same HDP files with others who could still use an online version.

- Since a collection of HDP files could potentially support really big projects, sooner or later those who need access to data from several other groups could fear that such a level of abstraction could lead to them being targeted. While this problem is not unique to potential HDP usage, and most documentation is likely to focus on helping those who consume sensitive data on the last mile, at least allowing the use of air-gapped networks seems reasonable.

- Please note that each case is different. "Being offline by default" doesn't mean that all resources must be downloaded (in fact, this would be against the interest of those who would like to share data with others, even trusted ones), but that command line tools or projects that reference resources outside the network need at least some explicit authorization to load them.

- "Batteries included", aka try to already offer tools that do syntax checks of HDP files.

1. If you use a code editor that supports JSON Schema, v0.7.5 already has an early version that warns about misuse. At the moment it still requires writing with the internal terms (Latin). But if the schema eventually becomes generated from the internal core_vocab, other languages would have such support too.

- The average HDP file should be optimized for the case where it needs to be printed on paper as it is, and should have ways to express complex but common items of acceptable use policy as some sort of constant (as of 2021-03-16, not sure if this is the best approach). This means the ideal maximum characters per line and typical indentation level should be carefully planned ahead. (This type of hint was based on suggestions we heard.)

- Yes, we're in 2021, but the friendlier HDP files are (especially the ones about authorization) to being saved as PDF or even on paper, the better. Even in places that allow attaching digital archives, while the authorization can be public, the attachments may require extra authorization (like being a lawyer or at least requesting the files in person).

- Ideally the end result could be concise enough to discourage large amounts of text in the files themselves (even if it means we develop something like "custom constants" that are part of the HDP specification itself, like a tag that means 'authorized with full non-anonymised access, strictly for use by Red Cross/MSF' or 'destroy any copy when no longer necessary').

- Such constants can both help to make rules concise (so, in the worst-case scenario, if people have to type again letter by letter something that is little more than a customization of well-known HDP example/template files, it is possible) and allow automatic translation.

- Other ideas do exist, but as much as possible, both the syntax of HDP files (for which it may be easier to just have translations for the core key terms) and, if necessary, the creation of constants to abstract concepts should ideally allow the exact file (either digitally signed or literally accompanied by a PDF of a judge's authorization, so the "authorization" could be a link to such a file) to be understood even outside the original country.

- Whether some custom filters need to be created, the language used is a totally new one, or the original source wrote a term wrong, the idea here is to allow a human, who accepts and digitally signs an extra HDP file, to take full responsibility for mistakes.

- Again, the idea that average HDP files do not need to point to resources or reference passwords is also perfect when things are done on paper, and the decisions (and who created the underlying rules, if they are something more specific) could be audited.

- Note that some types of auditing could be a human reading the new rule or, since filters start to have common patterns, testing the filters someone else creates against example datasets. While not as ideal as human review, as long as some example datasets for that language already exist (think, for example, of one that simulates malformed spreadsheets with personal information), they could be run against the rule proposed by the initial user. (These types of extra validation don't need to be public.)
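To make the last point a bit more concrete, here is a minimal sketch of running a proposed filter against a synthetic example dataset. Everything in it is an assumption for illustration: the rows, the column names, `proposed_filter` and `audit_filter` are hypothetical and not part of hxlm or HDP.

```python
import re

# Hypothetical rows simulating a malformed spreadsheet with personal data.
EXAMPLE_ROWS = [
    {"name": "Maria Silva", "phone": "+55 11 91234-5678", "district": "Centro"},
    {"name": "João  Souza ", "phone": "11912345678", "district": "Norte"},
]

# Assumption: the policy under review says these columns must never leave.
SENSITIVE_COLUMNS = {"name", "phone"}
# Very rough pattern for values that still look like a phone number.
PHONE_LIKE = re.compile(r"\+?\d[\d\s\-]{7,}\d")


def proposed_filter(row):
    """Hypothetical anonymization filter: drop the sensitive columns."""
    return {k: v for k, v in row.items() if k not in SENSITIVE_COLUMNS}


def audit_filter(filter_func, rows):
    """Return a list of problems found in the filtered output (empty if none)."""
    problems = []
    for index, row in enumerate(rows):
        result = filter_func(row)
        leaked = SENSITIVE_COLUMNS & set(result)
        if leaked:
            problems.append((index, "column", sorted(leaked)))
        for value in result.values():
            if PHONE_LIKE.search(str(value)):
                problems.append((index, "phone-like value", value))
    return problems
```

An empty list from `audit_filter(proposed_filter, EXAMPLE_ROWS)` would mean the rule passed this (very small) check. It does not replace a human reading the rule.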

TL;DR: we're also using testinfra for some tests

- Related: [meta] hxlm.core and automated testing: unitary tests, integration tests; continuous integration (CI), etc #12

While for hxlm.core, in particular the Htypes, it makes sense to test the functions directly, at least for hdpcli in the short term, since internals are changing, it seems reasonable to test at a higher level.

By "higher level" I mean simulating the cli interface.

While this is not as detailed, it at least has more chances of catching overall errors while allowing the internals to move faster. Another problem is that attaching too many tests to internal methods not only forces changes to things that don't matter for the end user, but the tests themselves could also take more time to write than the code they test.
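A minimal sketch of what "simulating the cli interface" could look like with only the Python standard library. The `run_cli` helper and the stand-in command are assumptions for illustration; the real tests here use testinfra, and the real hdpcli output is not shown in this thread, so the asserted text below comes from the stand-in, not from hdpcli.

```python
import subprocess
import sys


def run_cli(args, timeout=30):
    """Run a command line and return (exit code, stdout, stderr)."""
    completed = subprocess.run(
        args, capture_output=True, text=True, timeout=timeout, check=False
    )
    return completed.returncode, completed.stdout, completed.stderr


# Stand-in for the real tool, so the sketch runs anywhere. With hdpcli
# installed, the call would look like:
#   run_cli(["hdpcli", "tests/htransformare/salve-mundi.lat.hdp.yml"])
FAKE_CLI = [sys.executable, "-c", "print('descriptionem: Hello World!')"]


def test_cli_prints_description():
    code, out, _err = run_cli(FAKE_CLI)
    assert code == 0
    assert "Hello World!" in out
```

The test only looks at the exit code and the printed text, so internals can be reorganized freely as long as the command line keeps behaving the same.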

Another advantage of such top-level testing (again, not for internal parts that are intended to be reused) is that it may later be easy to run a lot of tests in batch. I mean that, in addition to the tests in this repository itself, it would be possible (either in public or for private groups who want even more assurance of compliance in whatever HDP does) to run extra tests, and we would already have a draft for this.

Top level tests could help with moving even faster

While backward compatibility is desired, as long as the HDP syntax, in the worst case, simply requires an upgrade (think of a human having to edit files, even if it is something very automatable in batch), and HDP at its very core allows this while still keeping a chain of accountability, I think this could at least give some room for serious users who may have a more localized community and may still rely on undocumented features.

In general, both having internal testing and (if at some point it becomes relevant) documenting how to do the same from another repository also helps to test the full chain of the environment.

If exchanged HDP files need to meet higher level of compliance

Even if people trust public collaboration more than their own ability to do very deep checks, there is still the idea of auditing the code used as part of agreements to allow data exchange. I personally believe that the best approach for whoever does this, in addition to the initial evaluation, is to already implement some sort of automated testing (like checking if filters that should anonymize or block something still work).

This approach seems more reasonable because without it, very soon, especially those who have to meet governmental compliance requirements would end up with outdated versions for the weirdest reasons, like because the initial work was paid human review for a period of time, and then leadership changes (or budget priorities change) and there is no one left to do even the bare minimum of checking.

Note that I am by no means saying that human auditing wouldn't be required (and, if not obvious, code released under public domain doesn't grant liability). What I'm saying is that such automation would still require humans to push buttons, so while auditing remains a requirement for continuous use, whoever pays for it should require some type of bare minimum automated test.

And what about "air gapped network"? Wouldn't this alone avoid the need to update software?

Even if we're drafting something that could be used without any access to the internet at all (which means it would not need to receive updates), no matter how paranoid the organization's threat model is, sooner or later people would need to update. If you really fear this, at least consider the possibility that a fix may be needed and, if it is not a critical fix to implement in hours, at least plan for the scenario where the fix should be implemented within one week at most.

But, again, "air gapped network" is something more specific, and whoever uses it should already know what they are doing. The point of trying to automate extra tests is that, if a country or community is exchanging data with other people from the same community, these people would still very likely use new versions, so it is still a good idea to somewhat protect others or detect any issue early.

Also, automated tests help with issues not related to security at all, and in a context that tolerates broader use of natural language instead of exact keywords, it is actually very pertinent to have them.

The hxlm.core.model.hdp.py file already has a bit over 1,000 lines of code. At least for new code, I will try to break it into smaller pieces.

Another point is that I recently discovered Python doctest (so inline comments that look like interactive Python sessions can be used to test code, instead of only the tests/ folder). This seems good for testing smaller files or things that are not functionality at a higher level.

1. Some points

1.1 Fact: it is already feasible to make every output also a valid source

If we really rushed, it would be possible to make even the output generated from Latin (the internal representation of HDP metadata) a valid source. Maybe without many more lines than already exist. But it would be a hell of a for loop!

The new point is both to break up the functionality a bit more and to find some way to add more meaning to the keywords without actually changing much of the natural language the human uses.

1.2 We may actually use ( ), [ ], < >, { }

After Ansible itself (the idea about using YAML) we're hiring someone to allow use of natural language as programming language constructs. Note that Ansible uses like 95% plain English.

The problem with HDP is that it is really, really pertinent to be equally expressive in several natural languages (see #15). We're actually using YAML as a way to have a valid base parser that allows building things over it, but we're already closer to how some languages, even ones used to teach programming logic, provide clear syntax to allow full natural language.

Scheme/Lisp, and in particular Prolog (see https://www.swi-prolog.org/), may be somewhat closer to how we decide on additional characters rather than depending only on the strings themselves.

1.2.1 Using the concept of DSLs (Domain Specific Languages) to allow controlled vocabulary

One thing we're very sure of: even if with HDP we tolerate/allow upgrading (like people using old verbs, or us just choosing bad initial terms), the most important features NEED to be controlled words.

It means that implementations over HDP need to be a combination of adding new controlled verbs (think of having translations for at least 6+1 languages, and we're just on v0.8) and, to be even more useful, software code that converts the instructions into something more useful than just exchanging human text.

Anyway, the ways to express what is a controlled action (things meant to have translations to everything) and what is something that users can extend need to be somewhat more flexible. The closest to this is the change from "hrecipes[n]recipe" to "hrecipes[n]_recipe", but even then I was not sure if users of Arabic script would put the _ on the wrong side. So I think that we would at least need _ on both sides, or use other characters like ( ) / [ ] { }.

End comments

Even if we tolerate some way to embed full Turing completeness (not just proxying commands to HXL tools, but allowing Python or other languages to run, as Ansible allows but complains about), the bigger advantage is likely to be in the restricted DSLs (Domain Specific Languages).

I think that the approach of targeting more of a DSL (at least for creating the YAML files; hdpcli could allow the full query-like thing, but not so soon) tends to make large-scale adoption easier.

But note that I'm not really assuming this would have a huge user base, only that making it less complex to teach a new person is a strong requirement. In other words: if making it more powerful, to allow conversion between natural languages, results in something that scares new people away from testing and being confident, the extra features (I suppose) may actually have less value.

The average user may actually assume that everything "is somewhat magic" (they may make comparisons with artificial intelligence or something), so the extra features and potential may not be worth it if users think they would need something new that is not intuitive just from looking at some previous YAML file.

Edit: edited links and fixed quick errors

Even if we allow use of any natural language (with, for the sake of internationalization, at least known words used), having a fixed marker for at least one term (the one that could mean the equivalent of hsilo, or maybe something more generic like meta) could simplify checks.

The idea of maybe using some printable characters like ( ), [ ], { } & < > could be a hint for which object is equivalent to the meta (or hsilo), even for an unknown language (or a simple user misspelling).

But here there is a design decision: we could either use a very generic term, like meta, and then force the user to explicitly add the language as one of the key values, or not require that the user always add the language code. In the latter case meta would not be a good hint, but something like hsilolinguam: LAT & silolinguam: ENG|SPA|LAT|POR could work (as long as, for the term silo, we explicitly try to use a different term for each language).

Note that the ideal (at least for whoever creates a document) is to be able to write using only their own script, to the point that baseline HDP could work even without Latin characters.

OMG I think I discovered one better approach: we use as hint the language name already in the exact script!

This not only solves the issue of having some term that is really unique (so it is feasible to know the language without enforcing ISO 639-3 codes by default), but also already solves the problem of detecting the script!!!

Also, to be really sure about the context, we could enforce the prefix ([ and the suffix ]) (maybe with some room to fit metadata between ([ and ]), so that a really new language could temporarily be written in another script+language), and this could be sufficient to make things work!
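A quick sketch of how such a marker could be detected. This is only an assumption of how it might work, not what hxlm does: `LANGUAGE_MARKERS`, `MARKER_PATTERN` and `detect_language` are hypothetical names, and the table only lists the three language names used in the examples of this thread.

```python
import re
import unicodedata

# Hypothetical lookup table: language name in its own script -> ISO 639-3.
LANGUAGE_MARKERS = {
    "Lingua Latina": "LAT",
    "Língua portuguesa": "POR",
    "Русский язык": "RUS",
}

# A key only counts as a language marker when wrapped in "([" and "])".
MARKER_PATTERN = re.compile(r"^\(\[(?P<name>[^\[\]]+)\]\)$")


def detect_language(key):
    """Return the ISO 639-3 code for a "([...])" key, or None if unknown."""
    # NFC normalization so "í" typed as "i" + combining accent still matches.
    normalized = unicodedata.normalize("NFC", key.strip())
    match = MARKER_PATTERN.match(normalized)
    if match is None:
        return None
    return LANGUAGE_MARKERS.get(match.group("name").strip())
```

With this, `detect_language("([Língua portuguesa])")` gives `POR`, while a plain key like `hsilo` or an unknown name inside the brackets gives `None`, which could be the trigger to ask the user for more metadata.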

The problem with script systems

While Arabic (the macrolanguage) and Chinese (the macrolanguage) can have at least 2 to 4 writing systems (so with this we know not only the language, but also the writing system, without any additional hint!), to my current knowledge Japanese [1] has several. Like, a lot.

I'm not saying that we would be able to implement this in the short term, but if HDP becomes more used, interested local communities could propose new vocabularies.

Note that we're already defining vocabularies in different files.

I think that the language identifier could enforce an array as its default value. So, in the original document, the person could put whatever they want there (for example, strings, comments in the local language, etc).

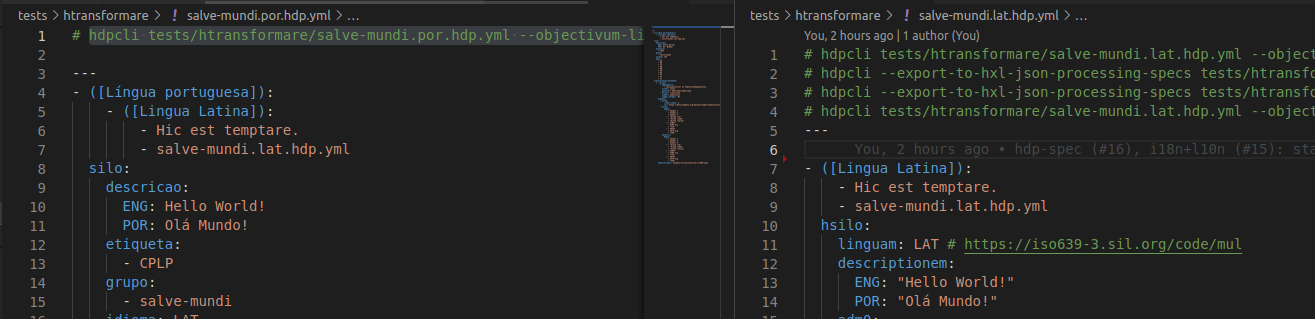

File: salve-mundi.lat.hdp.yml

- ([Lingua Latina]):

- Hic est temptare.

- salve-mundi.lat.hdp.yml

hsilo:

linguam: LAT # https://iso639-3.sil.org/code/mul

descriptionem:

ENG: "Hello World!"

POR: "Olá Mundo!"

# (...)

echo $LANGUAGE

# pt_BR:pt:en

hdpcli tests/htransformare/salve-mundi.lat.hdp.yml

# Note: since my native environment already has 'pt_BR:pt:en',

# by default `hdpcli tests/htransformare/salve-mundi.lat.hdp.yml`

# acted like `hdpcli tests/htransformare/salve-mundi.lat.hdp.yml --objectivum-linguam POR`.

# (This was implemented in this [1] commit and was the reason for the bump to v0.8.2.)
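As an illustration of the behaviour described in the comments above, here is a minimal sketch of picking a target language from a `$LANGUAGE` value like `pt_BR:pt:en`. It is not the code from the commit: `ISO_639_1_TO_3`, `preferred_language` and the `LAT` fallback are assumptions made for this example.

```python
import os

# Hypothetical, hand-written mapping from ISO 639-1 to ISO 639-3 codes.
ISO_639_1_TO_3 = {"pt": "POR", "en": "ENG", "es": "SPA", "ru": "RUS", "la": "LAT"}


def preferred_language(language_env, default="LAT"):
    """Pick the first known language from a value such as 'pt_BR:pt:en'."""
    for entry in language_env.split(":"):
        # 'pt_BR.UTF-8' -> 'pt_BR' -> 'pt'
        short = entry.split(".")[0].split("_")[0].strip().lower()
        if short in ISO_639_1_TO_3:
            return ISO_639_1_TO_3[short]
    # Assumption: fall back to the internal (Latin) representation.
    return default


# Usage: what the current environment would select.
print(preferred_language(os.environ.get("LANGUAGE", "")))
```

The first entry wins, so `pt_BR:pt:en` resolves to `POR`, which matches the `--objectivum-linguam POR` behaviour mentioned above.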

File: salve-mundi.por.hdp.yml

- ([Língua portuguesa]):

- ([Lingua Latina]):

- Hic est temptare.

- salve-mundi.lat.hdp.yml

silo:

descricao:

ENG: Hello World!

POR: Olá Mundo!

etiqueta:

- CPLP

# (...)

How the idea worked

In this concept (not explicitly implemented; I copied and pasted, but this is actually not hard), note that the reference to the previous language was copied as an array item under the new ([Língua portuguesa]).
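A rough sketch of the idea, working on one already-parsed item (a Python dict) instead of a real YAML file. The names `LAT_TO_POR`, `translate_keys` and `translate_document` are hypothetical; the two vocabulary pairs are just the ones visible in the example files above, not the real core_vocab.

```python
# Hypothetical fragment of a vocabulary: Latin (internal) term -> Portuguese.
LAT_TO_POR = {"hsilo": "silo", "descriptionem": "descricao"}


def translate_keys(node, vocab):
    """Recursively rename dictionary keys found in vocab; values are kept."""
    if isinstance(node, dict):
        return {vocab.get(k, k): translate_keys(v, vocab) for k, v in node.items()}
    if isinstance(node, list):
        return [translate_keys(item, vocab) for item in node]
    return node


def translate_document(document, vocab, source_marker, target_marker):
    """Translate keys and nest the old language marker under the new one."""
    body = {k: v for k, v in document.items() if k != source_marker}
    translated = translate_keys(body, vocab)
    # The previous marker (with whatever the author wrote there) becomes an
    # array item of the new marker, as in the salve-mundi.por.hdp.yml example.
    provenance = [{source_marker: document.get(source_marker, [])}]
    return {target_marker: provenance, **translated}
```

Keys that are not in the vocabulary (like `ENG` or `POR`, or free text from the user) pass through unchanged, which is the behaviour expected for anything outside the controlled vocabulary.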

Potential need to implement a hash (so it is possible to know if it is safe to revert)

While eventually it is very pertinent to implement a cryptographic hash (so not only would the content match, but we could also know that no user intentionally tried to fake content), we need at least some way to know if a translation needs to be reversed.

Use case: if a user translated from LAT -> POR, what about (SAME document) POR -> RUS?

If we implement a hash (as I said, even if not a cryptographically secure one) and a way for hdpcli to know that it can revert back to the original file in Latin, then even if the user no longer has the original LAT file, the POR file could be translated to RUS as if it were not in POR.
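One possible way to sketch this, under assumptions: map the controlled keys back to the internal (Latin) terms, serialize in a stable order, and hash the result. `controlled_fingerprint` and the `POR_TO_LAT` table are hypothetical and not part of hdpcli. A hash alone also does not prove who wrote the file; that would still need a digital signature.

```python
import hashlib
import json

# Hypothetical reverse vocabulary: Portuguese term -> Latin (internal) term.
POR_TO_LAT = {"silo": "hsilo", "descricao": "descriptionem"}


def controlled_fingerprint(document, to_internal):
    """Hash a document after mapping its keys back to the internal terms."""

    def normalize(node):
        if isinstance(node, dict):
            return {to_internal.get(k, k): normalize(v) for k, v in node.items()}
        if isinstance(node, list):
            return [normalize(item) for item in node]
        return node

    # sort_keys gives a stable serialization regardless of key order.
    canonical = json.dumps(normalize(document), sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

If the fingerprint of the POR file (mapped back with `POR_TO_LAT`) equals the one recorded for the LAT original, the POR file could be treated as a faithful translation and converted to RUS directly. If it differs, someone changed the content after translating.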

Limitations:

- This automation only applies to controlled vocabulary.

- It does not apply if the POR version was changed, except if the user marked the changes with some specific meaning (like using << >> tokens, which could be ignored when translating to other languages).

- Big "hsilos" would work better if broken into smaller files.

- In other words, anything (especially if later cryptographically signed) could be kept identical at the international level, but if users really need some customizations, we could allow some way to "import_file" such an hsilo, so things become easier.

- The feature of importing another hsilo is still not implemented explicitly. But especially if HDP files start to have entire functions (think of a macro using HXL data processing specs to check whether a data leak exists), this starts to become a thing to care about.

EDIT 1

Edit: here is one example without such a hash feature, LAT -> POR -> RUS. (Note that the feature from POR to other languages is still not 100% done... yet, but it will be.)

- ([Русский язык]):

- ([Língua portuguesa]):

- ([Lingua Latina]):

- Hic est temptare.

- salve-mundi.lat.hdp.yml

силосная:

группа:

- salve-mundi

описание:

ENG: Hello World!

POR: Olá Mundo!

страна:

- AO

- BR

# (...)

Without a hash or some other way for hdpcli to know, the translation markers could nest very deep, because it is not certain which content was the original.

Quick update:

Jupyter Notebooks from internals at this GitHub gist:

Since HDP itself is not meant to be used directly via the underlying libraries, this is less useful for HDP than for other ideas from EticaAI/HXL-Data-Science-file-formats, like hxlm (the part about trying to convert files in HXL format). Also, Jupyter Notebooks can hold a lot of data, and just merging them into EticaAI/HXL-Data-Science-file-formats would increase the git history a lot.

Oh boy. I think that, to simplify things, I will implement the loading of files as a recursive call.
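Just to register the idea, a minimal sketch of recursive loading with protection against circular references. It is not the hxlm implementation: `load_recursive` is a hypothetical name, the `import_file` key is borrowed from the earlier comment (not an implemented feature), and a plain dict stands in for reading and parsing YAML files.

```python
def load_recursive(name, read_file, _active=None):
    """Load a document and, depth-first, every file it imports.

    read_file is any callable that returns the parsed content for a name.
    """
    active = _active if _active is not None else []
    if name in active:
        chain = " -> ".join(active + [name])
        raise ValueError("circular import: " + chain)
    document = dict(read_file(name))
    imports = document.pop("import_file", [])
    loaded = [load_recursive(child, read_file, active + [name]) for child in imports]
    return {"name": name, "content": document, "imports": loaded}


# Stand-in for files on disk (hypothetical names and content).
FILES = {
    "a.hdp.yml": {"hsilo": {}, "import_file": ["b.hdp.yml"]},
    "b.hdp.yml": {"hsilo": {}},
}
TREE = load_recursive("a.hdp.yml", FILES.__getitem__)
```

Tracking the chain of files currently being loaded is what stops two files that import each other from recursing forever.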

I'm not finding a lot of examples using Python (or at least not with classes), so I may just create a separate file to put this sort of stuff in hxlm.core.hdp. While not as dangerous as what the Python cryptography package puts under hazmat (parts not intended for end users), I think I may reuse that name.

v0.8.5 started. At https://hdp.etica.ai/hxlm-js/ we have part of the HDP originally written in Python ported to JavaScript.

Automated transcompiled code and (if necessary) room for a desktop application

Automated transcompiled code (see ceddfa6#diff-423a7725ffc65651ef9b48047bf6f5ccbeb669bef88aaa3deaf97701bc8db885), even if it works, is not as beautiful. Also, since we're trying to make the ontologies as simple as possible to parse, it may actually not be hard to at least port the more important parts.

HDP JavaScript ports and even more sensitive content

Some parts of HDP may require (especially for potential use cases of humans centralizing a lot of work) steps that need GPG signing. For individuals who actually work with data transformation, I will assume they can deal with the command line (or at least with a combination of a code editor like VSCode that helps to write HDP files and then calls the command line). But I was already thinking about some way to provide at least simple GUIs that allow people to press buttons to know if a file is valid or not (note that, in this case, I am already considering people with content so sensitive that they cannot be, or are expected not to be, online). But considering the alternatives, it is harder to create such interfaces as GUIs. Also, even if we do things with Lisp-like dialects, those strategies also have ugly GUIs.

So, for more sensitive content, even if proofs of concept may exist in JavaScript, I would still recommend that interested people in the next years just do a port that binds the JavaScript implementations.

Web version for less sensitive content (or last-resort check if this is signed by who should be).

By design, HDP files are not meant to contain secrets (like file passwords or direct access to resources without authentication). So there are cases where people may receive HDP files without having the full setup, like installed software (or maybe they don't need it at all). In such cases, for verifying signatures where HTTPS is acceptable, I think a web version is a perfect fit.

As of 2021-04-17, the HXL Standard does not have a Racket implementation, either from code maintained by the HXL working group or from the community.

Since we will need to deal (even if in a very primitive way) with already well-formatted CSV files generated by HXL tools, we would otherwise start to create a lot of ad-hoc functions just to repeat what HXL would do.

This means we will take at least a few extra days just to make a functional draft of HXL in Racket that should at least work with our ontologies written in hxl.csv.