In this repo I will upload some of my solutions to the Farama Gymnasium reinforcement learning (RL) problems (when I have time). It would be interesting to go through the Hugging Face RL course, but as far as I can tell the leaderboard is broken, so it's not possible to get module credits. I also intend to learn a bit more about Optuna hyperparameter optimization, so I will add optimizers as well. I would be interested in PRs; I'm just learning, and PRs are good learning opportunities.

I was curious about the choice of the term Farama in Farama Foundation but I could not find any information. There is some unrelated (I assume) historical context I found interesting as an antiquarian.

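Praesidium in variis fortunis, in limine aeterno, ubi flumina sapientiae fluunt. ("A stronghold amid changing fortunes, at the eternal threshold, where the rivers of wisdom flow.")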

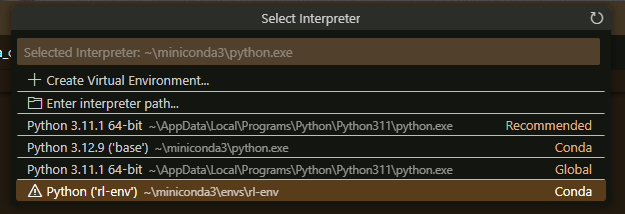

There aren't very good instructions for setting up on Windows, which is common in my experience. Big mistake: Windows is life and clearly the best developer environment, don't @ me. For people interested, I set up a virtual environment using Miniconda.

conda create -n rl-env

conda activate rl-env

pip install gymnasium[classic-control]

conda install swig

pip install gymnasium[box2d]

pip install gymnasium[toy-text]

pip install gymnasium[mujoco]

pip install gymnasium[atari]

The default Python version was fine in my case, but if you do this at some point in the future you can check it (your version of Python should be compatible with the RL problems):

(rl-env) C:\> python --version

Python 3.12.9

(rl-env) C:\> conda install python=x.xx

If you use VS Code or Cursor, you can set the interpreter to your conda virtual environment. You are good to go.
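If you want a quick sanity check that the installs above landed in the environment you think they did, something like the sketch below works. It only uses the standard library. The import names (`pygame`, `Box2D`, `mujoco`, `ale_py`) are my assumptions about what each extra installs, so edit the list if your versions differ.

```python
import importlib.util
import sys

# Import names assumed to be provided by each gymnasium extra (an assumption,
# not checked against every release; edit the list if your install differs).
ASSUMED_MODULES = {
    "gymnasium": "gymnasium",
    "classic-control": "pygame",
    "box2d": "Box2D",
    "mujoco": "mujoco",
    "atari": "ale_py",
}


def check_modules(modules):
    """Return {label: True/False} depending on whether each module can be found."""
    return {
        label: importlib.util.find_spec(name) is not None
        for label, name in modules.items()
    }


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for label, found in check_modules(ASSUMED_MODULES).items():
        print(f"{label:16s} {'ok' if found else 'missing'}")
```

Run it with the `rl-env` interpreter selected; anything reported as missing means the matching `pip install` step did not take effect in that environment.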

Description: Lunar Lander is a classic rocket trajectory optimization problem.

Information: You can get more details here.

Lunar Lander has two versions, one with discrete actions and one with continuous actions. This matters because real-world problems often require continuous actions, but networks for continuous control have a more complex design and are harder to train because the action space is infinite. For example, each component of a continuous action can take any value in the range [-1, 1].
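To make the difference concrete, here is a small sketch of what an agent hands to the environment in each version. It assumes 4 discrete actions and a 2-dimensional continuous action with each component in [-1, 1] (the sizes used by the networks described in this README). The helper names are made up for illustration and are not part of Gymnasium.

```python
import random

N_DISCRETE_ACTIONS = 4   # discrete version: one integer in {0, 1, 2, 3}
CONTINUOUS_DIM = 2       # continuous version: two floats, each in [-1, 1]
LOW, HIGH = -1.0, 1.0


def sample_discrete(rng):
    """Pick one action out of a finite set."""
    return rng.randrange(N_DISCRETE_ACTIONS)


def sample_continuous(rng):
    """Pick a real-valued vector; there are infinitely many possibilities."""
    return [rng.uniform(LOW, HIGH) for _ in range(CONTINUOUS_DIM)]


def clip_action(action):
    """Force every component back into the valid range [-1, 1]."""
    return [max(LOW, min(HIGH, a)) for a in action]


rng = random.Random(0)
print(sample_discrete(rng))       # an integer between 0 and 3
print(sample_continuous(rng))     # two floats between -1 and 1
print(clip_action([1.7, -0.3]))   # -> [1.0, -0.3]
```

A Q-network can score all 4 discrete actions in one forward pass and take the best one. With continuous actions there is no finite list to score, which is why the continuous agent needs a separate network that outputs the action directly.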

A passing score for these problems is 200 points (rookie numbers ok). At least this is the value specified for the discrete version.

Network: The network is a feed-forward MLP with an input layer of 8 units, two hidden layers containing 436 and 137 neurons (using ReLU activations), and a final output layer with 4 linear units representing Q-values.

Inputs: The network takes an 8-dimensional continuous state vector (representing the LunarLander's state) as input.

Outputs: It outputs a 4-dimensional vector of Q-values, one for each discrete action available to the agent.

Training: The training follows a Deep Q-Network (DQN) approach using an experience replay buffer with heuristic/prioritized sampling, epsilon-greedy exploration (with exponential decay), periodic cloning of the target network, and MSE loss for Q-value updates.
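The pieces of that training loop that are easiest to get wrong are the exploration schedule and the TD target, so here is a dependency-free sketch of both. This is not the code from this repo: the function names, the exact decay formula, and the constants (`eps_start`, `eps_end`, `decay_rate`, `gamma`) are placeholder assumptions, not the tuned values. `next_q_target` stands for the Q-values produced by the periodically cloned target network.

```python
import math
import random


def epsilon_at(episode, eps_start=1.0, eps_end=0.01, decay_rate=0.005):
    """Exponentially decay epsilon from eps_start toward eps_end."""
    return eps_end + (eps_start - eps_end) * math.exp(-decay_rate * episode)


def select_action(q_values, epsilon, rng):
    """Epsilon-greedy: random action with probability epsilon, else argmax Q."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_target(reward, next_q_target, done, gamma=0.99):
    """DQN target: r + gamma * max_a' Q_target(s', a'); no bootstrap at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def mse_loss(predictions, targets):
    """Mean squared error between predicted Q(s, a) values and their TD targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)


rng = random.Random(0)
q = [0.1, 0.5, -0.2, 0.3]                       # one Q-value per discrete action
print(select_action(q, epsilon=0.0, rng=rng))   # greedy choice -> 1
print(td_target(1.0, [0.0, 2.0, 1.0, -1.0], done=False, gamma=0.5))  # -> 2.0
print(mse_loss([1.0, 2.0], [1.0, 4.0]))         # -> 2.0
```

In a real run the predictions and targets come from batches sampled out of the replay buffer, and the loss is backpropagated through the online network only.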

At 1400 episodes the model has excellent performance. Optuna hyperparameter optimization is working really well here; results easily average over 260 points, with individual runs over 300.

Trial Data

Checkpoint loaded from checkpoints\1400_278.5844592518902_20250215_213044

Loaded model from episode 1400 with score 278.5844592518902

Episode 1: Reward = 286.1, Steps = 250

Episode 2: Reward = 289.6, Steps = 262

Episode 3: Reward = 240.8, Steps = 271

Episode 4: Reward = 300.5, Steps = 281

Episode 5: Reward = 265.5, Steps = 298

Episode 6: Reward = 245.1, Steps = 276

Episode 7: Reward = 245.8, Steps = 302

Episode 8: Reward = 241.0, Steps = 291

Episode 9: Reward = 298.3, Steps = 270

Episode 10: Reward = 240.1, Steps = 294

Episode 11: Reward = 267.1, Steps = 272

Episode 12: Reward = 286.6, Steps = 258

Episode 13: Reward = 230.7, Steps = 321

Episode 14: Reward = 271.1, Steps = 316

Episode 15: Reward = 283.4, Steps = 262

Episode 16: Reward = 251.4, Steps = 313

Episode 17: Reward = 246.8, Steps = 299

Episode 18: Reward = 243.2, Steps = 273

Episode 19: Reward = 265.8, Steps = 316

Episode 20: Reward = 266.8, Steps = 259

Episode 21: Reward = 268.6, Steps = 276

Episode 22: Reward = 283.6, Steps = 251

Episode 23: Reward = 257.6, Steps = 269

Episode 24: Reward = 271.9, Steps = 434

Episode 25: Reward = 290.6, Steps = 259

Episode 26: Reward = 236.1, Steps = 276

Episode 27: Reward = 269.4, Steps = 257

Episode 28: Reward = 285.4, Steps = 293

Episode 29: Reward = 256.9, Steps = 328

Episode 30: Reward = 259.5, Steps = 284

Episode 31: Reward = 249.1, Steps = 278

Episode 32: Reward = 264.5, Steps = 315

Episode 33: Reward = 268.0, Steps = 273

Episode 34: Reward = 272.6, Steps = 270

Episode 35: Reward = 276.3, Steps = 284

Episode 36: Reward = 281.3, Steps = 300

Episode 37: Reward = 251.7, Steps = 274

Episode 38: Reward = 264.6, Steps = 291

Episode 39: Reward = 250.8, Steps = 305

Episode 40: Reward = 229.0, Steps = 281

Episode 41: Reward = 260.2, Steps = 284

Episode 42: Reward = 289.1, Steps = 278

Episode 43: Reward = 223.3, Steps = 274

Episode 44: Reward = 270.0, Steps = 345

Episode 45: Reward = 271.8, Steps = 281

Episode 46: Reward = 266.9, Steps = 286

Episode 47: Reward = 254.8, Steps = 293

Episode 48: Reward = 257.3, Steps = 270

Episode 49: Reward = 259.4, Steps = 292

Episode 50: Reward = 224.0, Steps = 295

Performance Summary:

Average Reward: 262.6 ± 18.9

Average Steps: 287.6 ± 29.1

Success Rate: 100.0%

Best Episode: 300.5

Worst Episode: 223.3
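For reference, a summary like the one above can be reproduced from the per-episode rewards with a few lines of standard-library Python. This is a sketch, not the evaluation script from this repo: I am assuming an episode counts as a success when its reward is at least the 200-point passing score, and I use the population standard deviation (the original may use the sample version).

```python
import statistics

PASSING_SCORE = 200.0  # passing score quoted for the discrete version


def summarize(rewards, passing_score=PASSING_SCORE):
    """Compute the same kind of summary that the trial logs print."""
    successes = sum(r >= passing_score for r in rewards)
    return {
        "mean": statistics.fmean(rewards),
        # Population standard deviation; an assumption, see the note above.
        "std": statistics.pstdev(rewards),
        "success_rate": 100.0 * successes / len(rewards),
        "best": max(rewards),
        "worst": min(rewards),
    }


# First five evaluation episodes from the discrete trial log.
stats = summarize([286.1, 289.6, 240.8, 300.5, 265.5])
print(f"Average Reward: {stats['mean']:.1f} +/- {stats['std']:.1f}")
print(f"Success Rate: {stats['success_rate']:.1f}%")
print(f"Best Episode: {stats['best']:.1f}")
print(f"Worst Episode: {stats['worst']:.1f}")
```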

Network: An actor-critic architecture where the actor network contains two hidden layers (with 256 and 448 neurons respectively, using ReLU activations, followed by a tanh-activated output scaled by a custom ScalingLayer) and each of the twin critic networks mirrors this structure to output a single scalar Q-value.

Inputs: The actor takes an 8-dimensional state vector. Each critic takes a concatenated input of the 8-dimensional state and a 2-dimensional action (total 10 dimensions).

Outputs: The actor produces a 2-dimensional continuous action vector (after scaling to the valid action range). Each critic produces a scalar Q-value estimate.
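The ScalingLayer itself is not shown in this README, so the following is only a guess at what a tanh output followed by scaling typically does: squash each raw output into the range -1 to 1, then map it linearly onto the action bounds. `scale_action` and its `low`/`high` parameters are hypothetical names.

```python
import math


def scale_action(raw_outputs, low=-1.0, high=1.0):
    """Squash raw actor outputs with tanh, then map [-1, 1] linearly onto [low, high]."""
    half_range = (high - low) / 2.0
    midpoint = (high + low) / 2.0
    return [midpoint + half_range * math.tanh(x) for x in raw_outputs]


print(scale_action([0.0, 100.0]))               # -> [0.0, 1.0]
print(scale_action([0.0], low=0.0, high=4.0))   # -> [2.0]
```

With bounds of -1 and 1 the scaling step is the identity, so a layer like this mostly matters for environments whose action range is not already [-1, 1].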

Training: Trained using Twin Delayed Deep Deterministic Policy Gradients (TD3) with an off-policy replay buffer, delayed actor updates relative to critic updates, Gaussian noise for exploration, and soft updates of target networks.
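Here is a rough, dependency-free sketch of the TD3 ingredients named above. It is not this repo's implementation: the function names and default constants (`sigma`, `tau`, `policy_delay`, `gamma`) are placeholders, and taking the minimum of the two critics in the target is the standard TD3 recipe rather than something confirmed by this README.

```python
import random


def add_exploration_noise(action, rng, sigma=0.1, low=-1.0, high=1.0):
    """Gaussian exploration: perturb each component, then clip to the valid range."""
    return [max(low, min(high, a + rng.gauss(0.0, sigma))) for a in action]


def td3_target(reward, q1_next, q2_next, done, gamma=0.99):
    """Twin-critic target: bootstrap from the smaller of the two target critics."""
    if done:
        return reward
    return reward + gamma * min(q1_next, q2_next)


def soft_update(target_params, online_params, tau=0.005):
    """Polyak averaging: target <- tau * online + (1 - tau) * target."""
    return [tau * o + (1.0 - tau) * t for t, o in zip(target_params, online_params)]


def should_update_actor(step, policy_delay=2):
    """Delayed updates: the actor is updated once every policy_delay critic updates."""
    return step % policy_delay == 0


rng = random.Random(0)
print(add_exploration_noise([0.95, -0.2], rng))               # stays inside [-1, 1]
print(td3_target(1.0, 3.0, 2.0, done=False, gamma=0.5))       # -> 2.0
print(soft_update([0.0, 10.0], [1.0, 0.0], tau=0.5))          # -> [0.5, 5.0]
print([s for s in range(1, 7) if should_update_actor(s)])     # -> [2, 4, 6]
```

Using the smaller critic estimate counteracts Q-value overestimation, and the slow-moving targets plus delayed actor updates are there to keep the actor from chasing a critic that is still changing quickly.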

At 1400 episodes the model does enough to get a 210-240 point average score. However, stability is not as good as in the discrete version. I think the Optuna hyperparameter search was likely not well suited to the actual training conditions (I also performed a manual noise intervention during training). Still, the model does well; it likely just needs more training time (2500 episodes might make it just as good as the discrete version).

Trial Data

Checkpoint loaded from continuous_checkpoints\1400_293.4392340871299_20250216_172751

Loaded model from episode 1400 with score 293.4392340871299

Episode 1: Reward = 282.4, Steps = 187

Episode 2: Reward = 270.4, Steps = 153

Episode 3: Reward = 281.8, Steps = 188

Episode 4: Reward = 305.2, Steps = 176

Episode 5: Reward = 211.7, Steps = 387

Episode 6: Reward = 265.0, Steps = 148

Episode 7: Reward = 219.5, Steps = 192

Episode 8: Reward = 224.7, Steps = 336

Episode 9: Reward = 246.4, Steps = 154

Episode 10: Reward = 304.4, Steps = 162

Episode 11: Reward = -124.1, Steps = 218

Episode 12: Reward = 260.7, Steps = 152

Episode 13: Reward = 255.9, Steps = 171

Episode 14: Reward = 241.1, Steps = 166

Episode 15: Reward = 280.7, Steps = 175

Episode 16: Reward = 266.9, Steps = 171

Episode 17: Reward = 256.3, Steps = 160

Episode 18: Reward = 269.6, Steps = 376

Episode 19: Reward = 253.2, Steps = 482

Episode 20: Reward = 276.6, Steps = 226

Episode 21: Reward = 308.7, Steps = 321

Episode 22: Reward = 273.4, Steps = 153

Episode 23: Reward = 275.4, Steps = 290

Episode 24: Reward = 267.3, Steps = 342

Episode 25: Reward = 275.6, Steps = 193

Episode 26: Reward = 264.2, Steps = 167

Episode 27: Reward = 276.3, Steps = 217

Episode 28: Reward = 43.0, Steps = 91

Episode 29: Reward = 257.7, Steps = 154

Episode 30: Reward = 264.4, Steps = 194

Episode 31: Reward = 248.6, Steps = 155

Episode 32: Reward = 298.1, Steps = 163

Episode 33: Reward = 274.5, Steps = 159

Episode 34: Reward = 268.5, Steps = 207

Episode 35: Reward = 7.8, Steps = 205

Episode 36: Reward = 266.9, Steps = 377

Episode 37: Reward = 21.5, Steps = 1000

Episode 38: Reward = 301.4, Steps = 231

Episode 39: Reward = 281.8, Steps = 147

Episode 40: Reward = 275.0, Steps = 192

Episode 41: Reward = 218.5, Steps = 574

Episode 42: Reward = 270.7, Steps = 191

Episode 43: Reward = 272.3, Steps = 188

Episode 44: Reward = 253.2, Steps = 146

Episode 45: Reward = 235.4, Steps = 186

Episode 46: Reward = 218.7, Steps = 303

Episode 47: Reward = 226.3, Steps = 418

Episode 48: Reward = 292.2, Steps = 178

Episode 49: Reward = 266.2, Steps = 268

Episode 50: Reward = 258.9, Steps = 206

Performance Summary:

Average Reward: 242.2 ± 80.7

Average Steps: 239.9 ± 144.9

Success Rate: 92.0%

Best Episode: 308.7

Worst Episode: -124.1

Scores vary. The problem is that the lander hasn't learned to decelerate enough in some scenarios; additional training would likely resolve this.