GoogleAPICallError: None POST https://bigquery.googleapis.com/bigquery/v2/[...]/insertAll Error 413 (Request Entity Too Large)!!1

giancarloaf opened this issue · 1 comments

Error group: https://console.cloud.google.com/errors/detail/CPiFwf-QrazTEw;time=P1D?project=httparchive

These errors occur frequently when running the streaming "combined pipeline".

The pipeline currently has guards in place to ensure that data does not exceed the 10 MB quota for streaming inserts.

Following the stack trace back from one of the Error 413 (Request Entity Too Large)!!1 errors, it's not the individual rows that are the issue. Google Cloud's BigQuery library batches up a bunch of rows for streaming inserts and makes one fat HTTP request with the entire batch. The error we're getting back isn't because a single HAR was parsed into some huge page/request row; it's because there can be up to 500 rows in a single insert call to BQ, and together they can be larger than the API will allow.

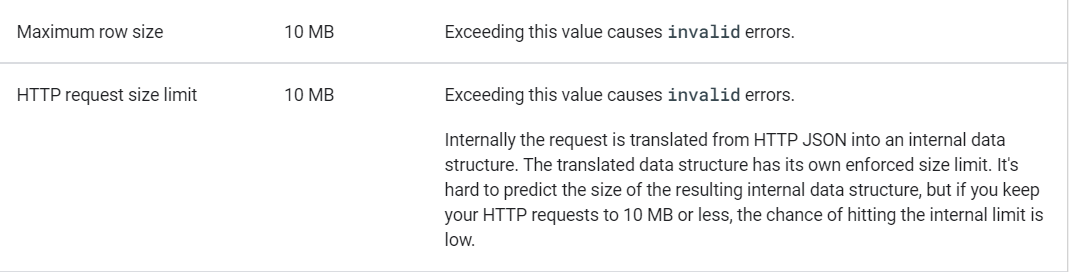

The streaming API errors are ambiguous, but I think I now understand the difference between these two quotas:

https://cloud.google.com/bigquery/quotas#streaming_inserts

Maximum row size 👍

HTTP request size limit 👎

While our row size is indeed below 10 MB, a batch of rows becomes a single request, which can exceed 10 MB 🤯
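
One possible way to guard against this is to cap batches by serialized size as well as by row count. The following is only a minimal sketch, not what the pipeline or the BigQuery library currently does: `batch_rows_by_size`, `MAX_REQUEST_BYTES`, and `MAX_ROWS_PER_BATCH` are hypothetical names, the 9 MiB threshold is an assumed safety margin below the 10 MB request limit, and the JSON length of each row is only an estimate of what it contributes to the real request body.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

# Assumed values for illustration; check the quotas page for current limits.
MAX_REQUEST_BYTES = 9 * 1024 * 1024  # headroom below the 10 MB HTTP request limit
MAX_ROWS_PER_BATCH = 500             # row cap per insert call mentioned in this issue


def batch_rows_by_size(
    rows: Iterable[Dict[str, Any]],
    max_bytes: int = MAX_REQUEST_BYTES,
    max_rows: int = MAX_ROWS_PER_BATCH,
) -> Iterator[List[Dict[str, Any]]]:
    """Yield batches whose estimated JSON size stays within max_bytes.

    A single row larger than max_bytes is still yielded, alone in its own
    batch, so the caller can decide how to handle it.
    """
    batch: List[Dict[str, Any]] = []
    batch_bytes = 0
    for row in rows:
        # Estimate this row's contribution to the request body.
        row_bytes = len(json.dumps(row).encode("utf-8"))
        would_overflow = batch_bytes + row_bytes > max_bytes
        if batch and (would_overflow or len(batch) >= max_rows):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(row)
        batch_bytes += row_bytes
    if batch:
        yield batch
```

Each yielded batch could then be sent as its own insert call (for example through `Client.insert_rows_json` in `google-cloud-bigquery`), so that no single request carries the whole set of rows. The request envelope adds some overhead on top of the row payloads, which is why the sketch leaves headroom rather than targeting the limit exactly.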