Clean up intermediary load job tables on BQ

rviscomi opened this issue · 2 comments

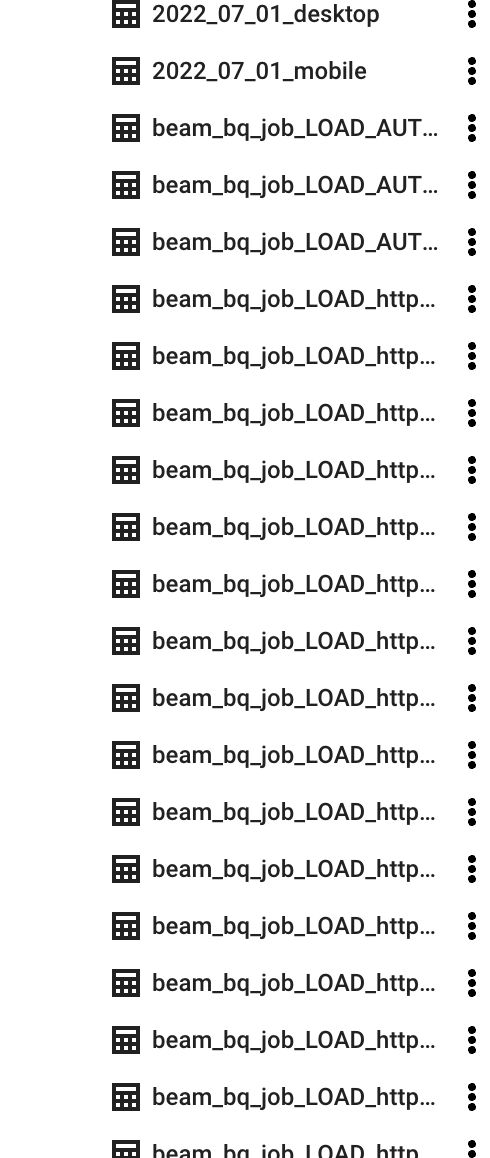

I wrote a wildcard query over the technologies dataset and found ~hundreds of tables with names like httparchive.technologies.beam_bq_job_LOAD_httparchivejulystreamingpipeline_LOAD_STEP_126_013fc2c379fe0768444c5cf87ef6c4c3_005eb94967ab4afa85b3ad236fcbd859. It seems like the httparchivejulystreamingpipeline Dataflow job failed while writing data into BQ and these intermediary tables were never cleaned up.

Any table prefixed with beam is an intermediary table and can safely be deleted. We can write a DML query to target and delete these. We should repeat this process for the other datasets that Dataflow writes into.

Will clean these up and add a note to the readme on how do do this.

Also, consider explaining how we might reuse the data instead of deleting it if this occurs during a prod run.

Created a saved query at the project level to automate temp table cleanup. Just replace the dataset name before running.

https://console.cloud.google.com/bigquery?sq=226352634162:82dad1cd1374428e8d6eaa961d286559