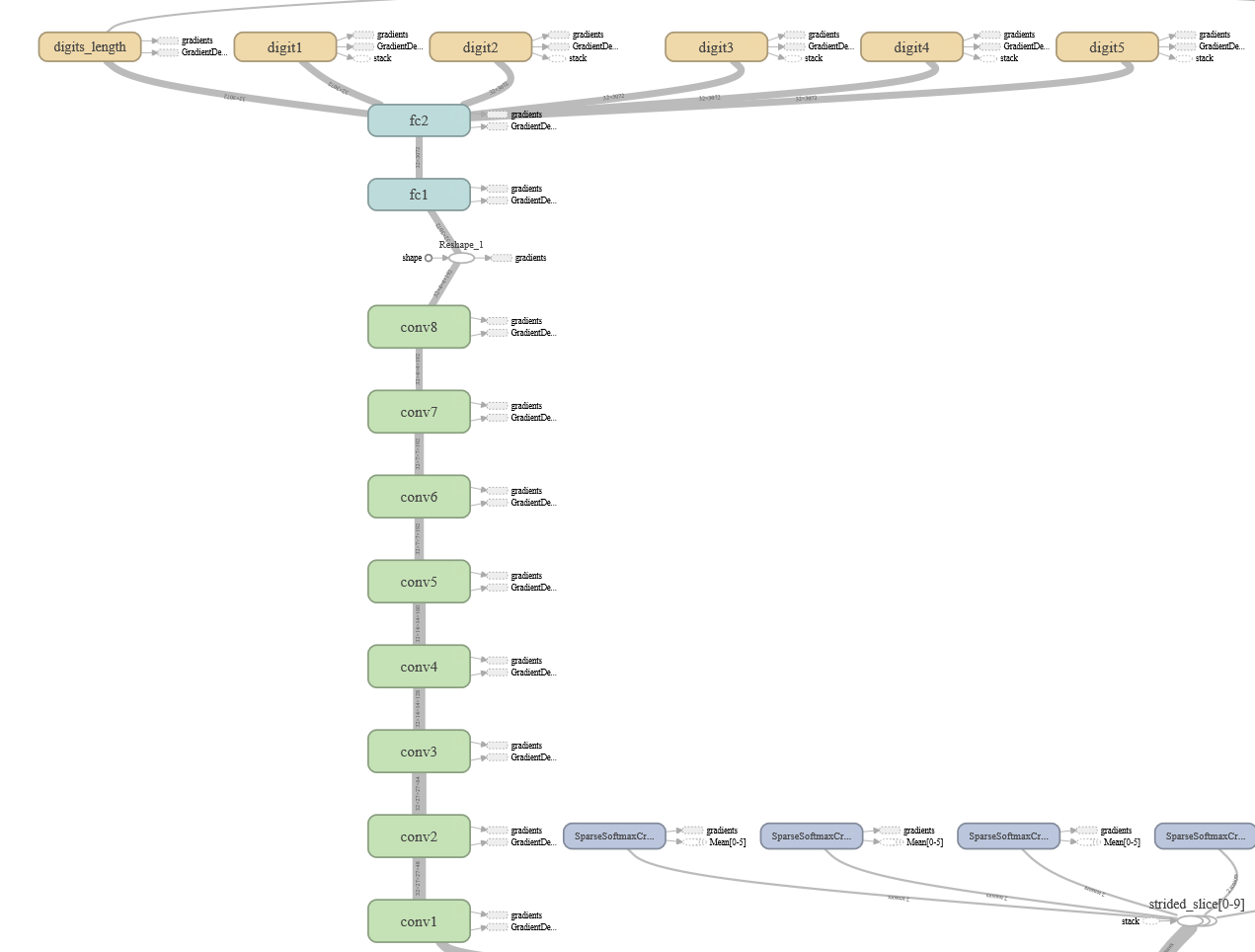

Goal: Develop a TensorFlow-based application that recognizes numbers in camera images in real time.

Source: Multi-digit Number Recognition from Street View Imagery using Deep Convolutional Neural Networks

Requirements:

- Python 3.5/Python 2.7
- TensorFlow
- h5py
  - Windows: > pip3 install h5py
  - Ubuntu: $ sudo pip3 install h5py
- Pillow, Jupyter Notebook, etc.
- Android environment: Android SDK & NDK (see https://github.com/tensorflow/tensorflow/blob/master/tensorflow/examples/android/README.md)
- Street View House Numbers dataset (SVHN, http://ufldl.stanford.edu/housenumbers/)

Clone the source code (Git Bash environment):

> git clone git@github.com:Hedlen/SVHNNumber.git
> cd NumberCamera

Download the Format 1 dataset from the SVHN link above.
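The download and extraction can also be scripted. The sketch below assumes the Format 1 archives are named train.tar.gz, test.tar.gz and extra.tar.gz under the SVHN URL given above, and that each one unpacks into a folder named after its split. The function names are hypothetical and not part of this repository.

```python
import os
import tarfile
import urllib.request

# Assumed Format 1 archive locations on the SVHN page.
SVHN_BASE_URL = "http://ufldl.stanford.edu/housenumbers/"
SPLITS = ("train", "test", "extra")


def format1_urls():
    """Return the download URL of each Format 1 archive."""
    return [SVHN_BASE_URL + split + ".tar.gz" for split in SPLITS]


def download_and_extract(data_dir="data"):
    """Download each archive (skipping ones already present) and unpack it
    under data_dir, giving data/train, data/test and data/extra."""
    os.makedirs(data_dir, exist_ok=True)
    for url in format1_urls():
        archive_path = os.path.join(data_dir, os.path.basename(url))
        if not os.path.exists(archive_path):
            urllib.request.urlretrieve(url, archive_path)
        with tarfile.open(archive_path, "r:gz") as tar:
            tar.extractall(data_dir)
```

Calling `download_and_extract()` from the repository root would then produce the data folder that the conversion scripts expect.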


Extract the archives into a data folder. The layout should look like this:

- data
  - train
    - 1.png
    - 2.png
    - ...
    - digitStruct.mat
  - test
    - 1.png
    - 2.png
    - ...
    - digitStruct.mat
  - extra
    - 1.png
    - 2.png
    - ...
    - digitStruct.mat

The bounding-box information is stored in digitStruct.mat rather than drawn on the images. Each tar.gz file contains the original images in PNG format together with a digitStruct.mat file. Because digitStruct.mat is a MATLAB v7.3 file, which is an HDF5 container, the program reads it with h5py.File.
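A minimal sketch of reading digitStruct.mat with h5py, assuming the usual layout of the SVHN file: a `digitStruct` group whose `name` and `bbox` datasets hold one object reference per image, where each bbox group carries `label`, `left`, `top`, `width` and `height`. The function names are hypothetical; the repository's converter may read the file differently.

```python
def decode_name(char_codes):
    """Turn a MATLAB string (an (n, 1) array of character codes) into str."""
    return "".join(chr(int(row[0])) for row in char_codes)


def read_attribute(f, attr):
    """Return every value of one bbox field ('label', 'left', 'top', ...).

    With a single digit MATLAB stores the value inline; with several digits
    each row holds an object reference that must be dereferenced via f[ref].
    """
    if len(attr) == 1:
        return [int(attr[0][0])]
    return [int(f[attr[i][0]][0][0]) for i in range(len(attr))]


def read_digit_struct(mat_path):
    """Yield (filename, {field: [values, ...]}) for each image in the file.

    Note: raw SVHN labels use 10 for the digit 0.
    """
    import h5py  # imported here so the helpers above work without h5py

    fields = ("label", "left", "top", "width", "height")
    with h5py.File(mat_path, "r") as f:
        names = f["digitStruct/name"]
        bboxes = f["digitStruct/bbox"]
        for i in range(len(names)):
            filename = decode_name(f[names[i][0]][()])
            group = f[bboxes[i][0]]
            yield filename, {key: read_attribute(f, group[key]) for key in fields}
```

For example, `next(read_digit_struct("data/train/digitStruct.mat"))` would return the first file name together with its box fields.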


Convert to TFRecords format

> python convert_to_tfrecords.py
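The original paper crops each image around its digits before training: it takes the smallest rectangle that contains all digit boxes, enlarges it by 30% in x and y, crops the image to it and resizes the crop to 64×64 pixels (training then uses random 54×54 crops). Whether convert_to_tfrecords.py does exactly this is an assumption; the helper below (hypothetical name) only computes the crop box.

```python
def expanded_union_box(lefts, tops, widths, heights, expand=0.3):
    """Return (left, top, width, height) of the box enclosing every digit
    box, enlarged by `expand` (30% by default) in x and y around its center."""
    x0 = min(lefts)
    y0 = min(tops)
    x1 = max(l + w for l, w in zip(lefts, widths))
    y1 = max(t + h for t, h in zip(tops, heights))
    width, height = x1 - x0, y1 - y0
    cx, cy = x0 + width / 2.0, y0 + height / 2.0
    new_w, new_h = width * (1 + expand), height * (1 + expand)
    return (int(round(cx - new_w / 2.0)), int(round(cy - new_h / 2.0)),
            int(round(new_w)), int(round(new_h)))
```

With Pillow, the result could be applied as `image.crop((left, top, left + width, top + height)).resize((64, 64))`.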

Train

> python train.py
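The paper models a house number as a length L plus a sequence of digits and trains by maximizing log P(L) + Σ log P(S_i). A common way to implement this, assumed here rather than taken from train.py, is one softmax head for the length and five heads for the digits (11 classes each, with 10 meaning empty), summing their cross-entropies. Summing over all five positions, padding included, simplifies the paper's sum over the first L digits. The 7 length classes and the function names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, target):
    """-log softmax(logits)[target], computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def sequence_loss(length_logits, digit_logits, length, digits):
    """Length cross-entropy plus one cross-entropy per digit head.

    length_logits: scores for the number of digits (e.g. 7 classes).
    digit_logits:  five lists of 11 scores (digits 0-9, 10 = empty).
    digits:        five targets, padded with 10 after the last real digit.
    """
    loss = softmax_cross_entropy(length_logits, length)
    for logits, target in zip(digit_logits, digits):
        loss += softmax_cross_entropy(logits, target)
    return loss
```

With all-zero logits every head is a uniform guess, so the loss is log 7 + 5·log 11, a handy sanity check for an untrained model.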

Resume training from the latest checkpoint if needed:

> python train.py --restore_checkpoint ./logs/train/latest.ckpt

Evaluate

> python eval.py
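A likely definition of the "correct rate" reported in this README, following the paper's sequence-level metric, is that an image counts as correct only when the predicted length and every digit position match the label. This is an assumption about eval.py; the sketch below (hypothetical function name) computes that metric from (length, digits) pairs.

```python
def sequence_accuracy(predictions, labels):
    """Fraction of images whose whole digit sequence is predicted correctly.

    Each item is a (length, digits) pair; a prediction counts only when the
    length and every digit position (including the 10 = empty padding) match.
    """
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels differ in size")
    if not labels:
        raise ValueError("labels must not be empty")
    correct = sum(1 for p, t in zip(predictions, labels) if p == t)
    return correct / float(len(labels))
```

A single wrong digit makes the whole number wrong, which is why this metric is stricter than per-digit accuracy.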

Visualize

> tensorboard --logdir=./logs

(Optional) Test the model on other images

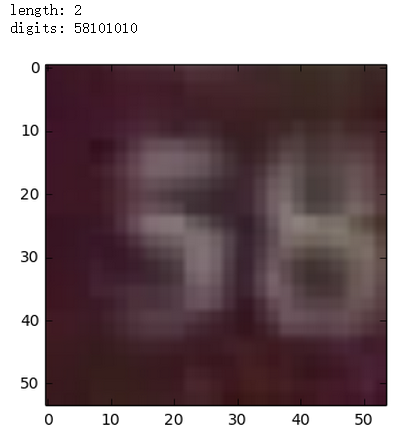

Open 'Test_sample.ipynb' or 'Test_outside_sample.ipynb' in Jupyter Notebook and run it to see the predictions.


Alternatively, use the h5py-based version. Convert the data to h5py format (make sure the data folder is under this path):

> cd NumberCamber_Based_h5py
> python convert_to_h5py.py
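Fixed-shape arrays are the natural fit for an HDF5 file, so variable-length labels have to be padded. A minimal sketch, assuming at most five digits and the README's convention that 10 means empty. Raw SVHN labels use 10 for the digit 0, so they are remapped before padding. The function name is hypothetical and may not match convert_to_h5py.py.

```python
MAX_DIGITS = 5  # assumed maximum sequence length, as in the paper
EMPTY = 10      # padding class: "no digit at this position"


def encode_label(svhn_labels):
    """Convert raw SVHN labels to (length, five padded digits).

    SVHN marks the digit 0 with label 10, so it is remapped to 0 first,
    which frees 10 to mean "empty".
    """
    digits = [0 if d == 10 else d for d in svhn_labels]
    if not 1 <= len(digits) <= MAX_DIGITS:
        raise ValueError("expected 1 to %d digits, got %d" % (MAX_DIGITS, len(digits)))
    return len(digits), digits + [EMPTY] * (MAX_DIGITS - len(digits))
```

Images with more than five digits raise an error here; a converter could instead skip them.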

(Optional) Read the h5py file and take a look at the original images: open 'read_h5py_test.ipynb' in Jupyter Notebook.

Train

> python train.py

Evaluate

> python eval.py

Visualize

> tensorboard --logdir=./logs

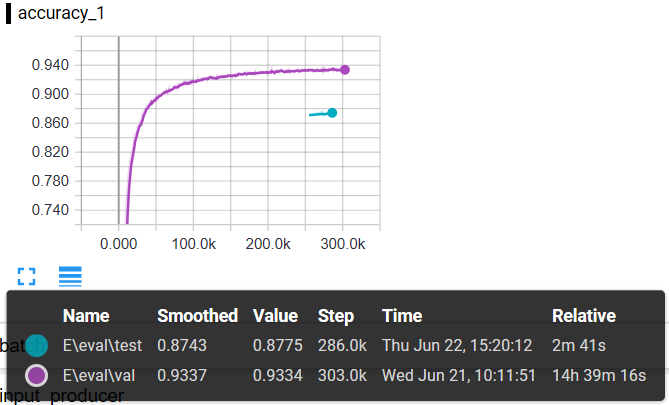

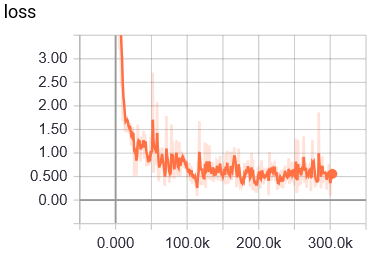

93.33% accuracy on the validation set.

87.43% accuracy on the test set. This is lower than the over-96% accuracy reported in the original paper; work on improving the model continues.

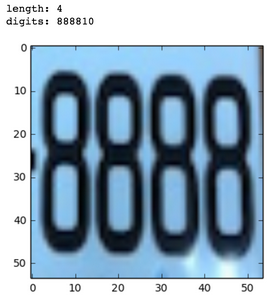

In the figure, the class 10 denotes an empty position (no digit).
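To turn a prediction like the one in the figure into a readable number, the empty slots can simply be dropped. A minimal sketch, assuming the model outputs a predicted length plus five digit classes (the function name is hypothetical):

```python
EMPTY = 10  # class index that marks "no digit here"


def decode_prediction(length, digits):
    """Keep the first `length` predicted digits and drop any EMPTY slots."""
    return "".join(str(d) for d in digits[:length] if d != EMPTY)
```

For example, a predicted length of 3 with digits `[2, 0, 7, 10, 10]` decodes to "207".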