This repository will not be updated. It will be kept available in read-only mode. For an alternative, see https://github.com/IBM/predictive-model-on-watson-ml or the on-premises Cloud Pak for Data version of this pattern: https://github.com/IBM/telco-customer-churn-on-icp4d

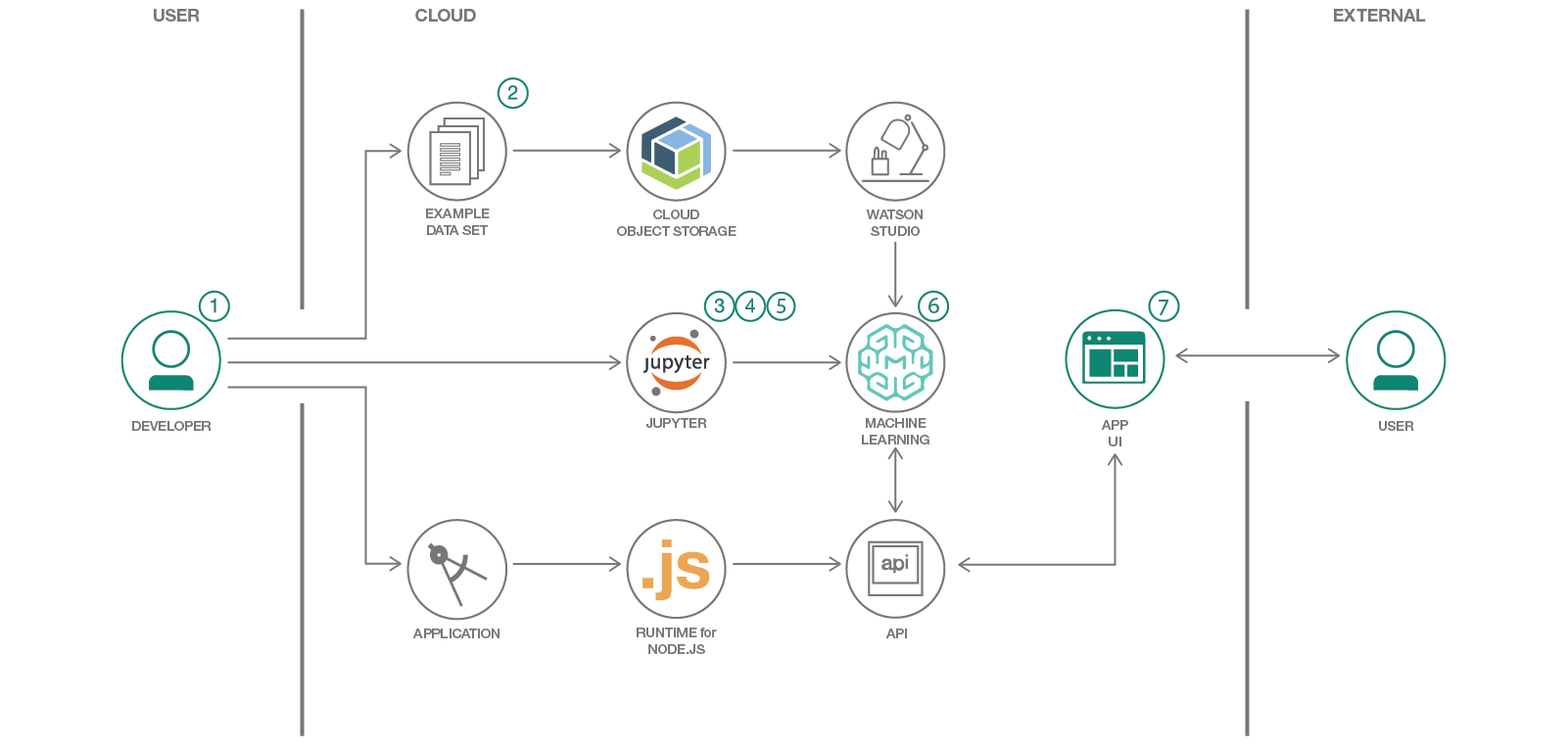

In this Code Pattern, we use IBM Watson Studio to go through the whole data science pipeline to solve a business problem and predict customer churn using a Telco customer churn dataset. Watson Studio is an interactive, collaborative, cloud-based environment where data scientists, developers, and others interested in data science can use tools (e.g., RStudio, Jupyter Notebooks, Spark, etc.) to collaborate, share, and gather insight from their data as well as build and deploy machine learning and deep learning models.

When the reader has completed this Code Pattern, they will understand how to:

- Use Jupyter Notebooks to load, visualize, and analyze data

- Run Notebooks in IBM Watson Studio

- Load data from IBM Cloud Object Storage

- Build, test and compare different machine learning models using Scikit-Learn

- Deploy a selected machine learning model to production using Watson Studio

- Create a front-end application to interface with the client and start consuming your deployed model.

- Understand the business problem.

- Load the provided notebook into the Watson Studio platform.

- Load the Telco customer churn dataset into the Jupyter Notebook.

- Describe, analyze and visualize data in the notebook.

- Preprocess the data, build machine learning models and test them.

- Deploy a selected machine learning model into production.

- Interact and consume your model using a frontend application.

- IBM Watson Studio: Analyze data using RStudio, Jupyter, and Python in a configured, collaborative environment that includes IBM value-adds, such as managed Spark.

- IBM Cloud Foundry: Deploy and run your applications without managing servers or clusters. Cloud Foundry automatically transforms source code into containers, scales them on demand, and manages user access and capacity.

- Jupyter Notebooks: An open-source web application that allows you to create and share documents that contain live code, equations, visualizations, and explanatory text.

- Pandas: An open source library providing high-performance, easy-to-use data structures and data analysis tools for the Python programming language.

- Seaborn: A Python data visualization library based on matplotlib. It provides a high-level interface for drawing attractive and informative statistical graphics.

- Scikit-Learn: Machine Learning in Python. Simple and efficient tools for data mining and data analysis.

- Watson Machine Learning Client: A Python library for working with the Watson Machine Learning service on IBM Cloud. Use it to train, test, and deploy your models as APIs for application development, and to share them with colleagues.

- NodeJS: A JavaScript runtime built on Chrome's V8 JavaScript engine, used for building full-stack JavaScript web applications.

- ExpressJS: A minimal and flexible Node.js web application framework that provides a robust set of features for web and mobile applications.

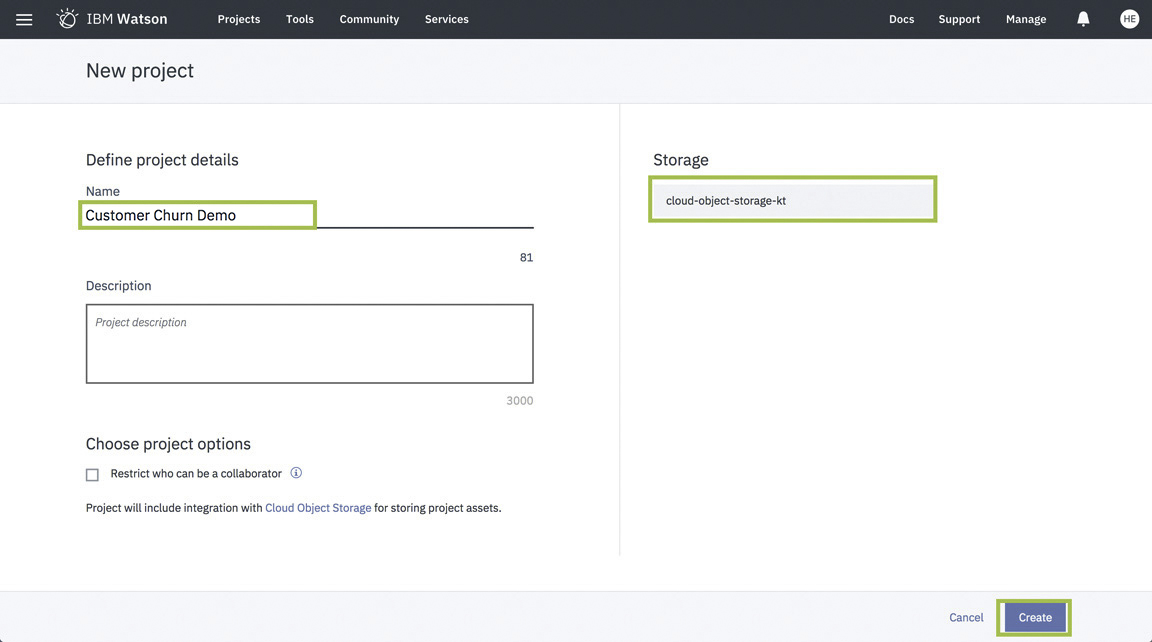

Sign up for IBM's Watson Studio. Creating a project in Watson Studio also creates a free-tier Object Storage service in your IBM Cloud account. Take note of your service names, as you will need to select them in the following steps.

Note: When creating your Object Storage service, select the Free storage type to avoid having to pay an upgrade fee.

- On Watson Studio's Welcome Page, select `New Project`.

- Choose the `Data Science` option and click `Create Project`.

- Name your project, select the Cloud Object Storage service instance, and click `Create`.

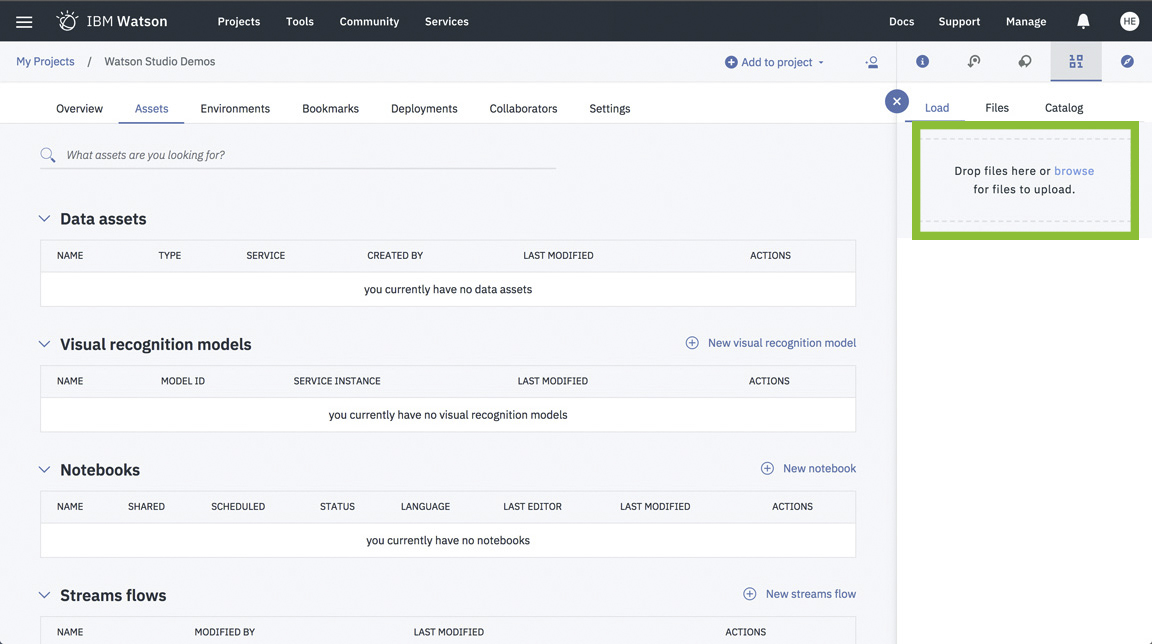

Download the dataset we will use in this pattern from the following link: https://community.watsonanalytics.com/wp-content/uploads/2015/03/WA_Fn-UseC_-Telco-Customer-Churn.csv

- Drag and drop the dataset (`csv`) file you just downloaded to Watson Studio's dashboard to upload it to Cloud Object Storage.

- Create a New Notebook.

- Import the notebook found in this repository inside the notebook folder by copying and pasting this URL in the relevant field: https://raw.githubusercontent.com/IBM/customer-churn-prediction/master/notebooks/customer-churn-prediction.ipynb

- Give a name to the notebook and select a `Python 3.5` runtime environment, then click `Create`.

To make the dataset available in the notebook, we need to refer to where it lives. Watson Studio automatically generates a connection to your Cloud Object Storage instance and gives access to your data.

- Click in the cell below `2. Loading Our Dataset`.

- Then go to the Files section to the right of the notebook and click `Insert to code` for the data you have uploaded. Choose `Insert pandas DataFrame`.
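
The cell generated by `Insert pandas DataFrame` contains your own storage credentials, so it is not reproduced here. As a rough sketch of what the resulting DataFrame looks like and which first checks are worth running, the example below reads a tiny in-memory sample instead. The three rows are made up, the column names are assumed to match the Telco dataset (only a few are shown), and `load_churn_data` is a hypothetical helper, not part of the notebook.

```python
import io

import pandas as pd

# Made-up three-row sample standing in for the uploaded CSV. The column names
# are an assumption about the Telco dataset; the real file has more columns.
SAMPLE_CSV = """customerID,gender,tenure,MonthlyCharges,TotalCharges,Churn
0001-AAAAA,Female,1,29.85,29.85,No
0002-BBBBB,Male,34,56.95,1889.5,No
0003-CCCCC,Male,0,53.85, ,Yes
"""


def load_churn_data(source):
    """Read the CSV and coerce TotalCharges to numeric (blanks become NaN)."""
    df = pd.read_csv(source)
    df["TotalCharges"] = pd.to_numeric(df["TotalCharges"], errors="coerce")
    return df


df_data = load_churn_data(io.StringIO(SAMPLE_CSV))
print(df_data.shape)                    # rows and columns
print(df_data.isna().sum())             # missing values per column
print(df_data["Churn"].value_counts())  # class balance of the target
```

With the real dataset, the same three checks (shape, missing values, class balance) give a quick picture of the data before any visualization.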

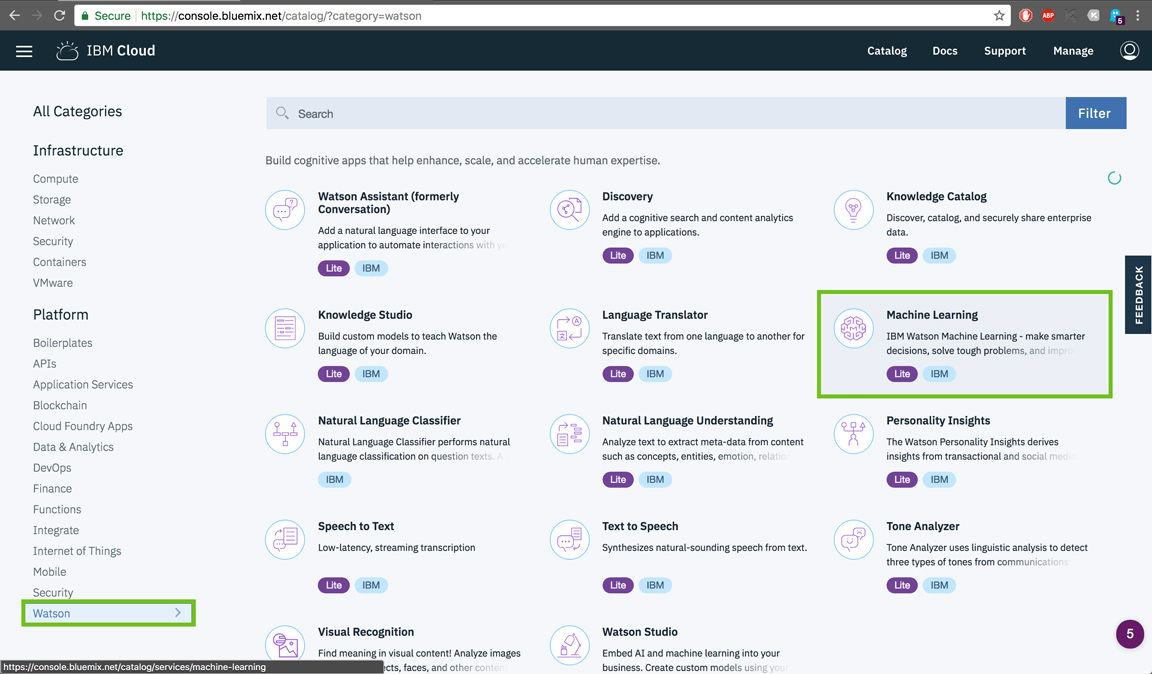

- From IBM Cloud Catalog, under the Watson category, select `Machine Learning` or use the Search bar to find `Machine Learning`.

- Keep the settings as they are and click `Create`.

- Once the service instance is created, navigate to the `Service credentials` tab on the left, view credentials and make a note of them.

Note: If you can't see any credentials available, you can create a `New credential`.

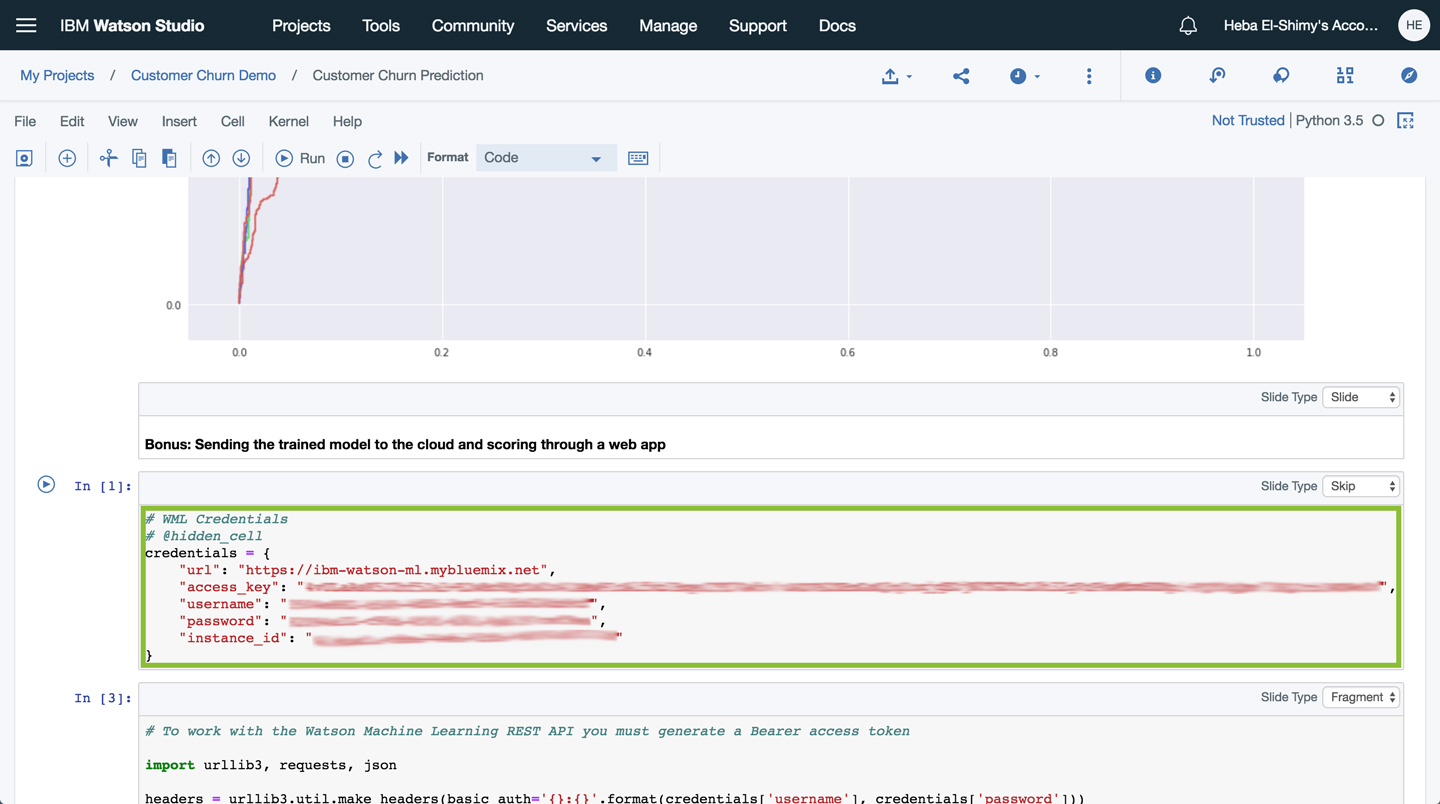

- In the notebook available with this pattern, there is a cell with the WML credentials available after `14. ROC Curve and models comparisons`. You will need to replace the code inside with your credentials.

- Keep this tab open, or copy the credentials to a file to use later if you deploy the web app.
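
One way to keep the credentials for the web app is to write them out under the variable names the frontend expects. The sketch below assumes the credentials cell holds a plain dictionary with the four fields this pattern refers to (`url`, `username`, `password`, `instance_id`); the name `wml_credentials` and the helper `credentials_to_env` are illustrative, and the values are placeholders.

```python
# Placeholder values only; paste the real ones from the Service credentials tab.
wml_credentials = {
    "url": "<your WML url>",
    "username": "<your WML username>",
    "password": "<your WML password>",
    "instance_id": "<your WML instance_id>",
}

# Credential field -> environment variable name used by the frontend app.
ENV_NAMES = {
    "username": "USERNAME",
    "password": "PASSWORD",
    "instance_id": "INSTANCE_ID",
    "url": "URL",
}


def credentials_to_env(credentials):
    """Render the credentials as KEY=VALUE lines, failing on missing fields."""
    missing = [field for field in ENV_NAMES if not credentials.get(field)]
    if missing:
        raise KeyError("missing credential fields: " + ", ".join(missing))
    return "\n".join(
        f"{ENV_NAMES[field]}={credentials[field]}" for field in ENV_NAMES
    )


print(credentials_to_env(wml_credentials))
```

If you save this output to a file, keep that file out of version control, since it contains secrets.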

The steps in the notebook walk you through understanding, analyzing, and visualizing the dataset. You will then go through preprocessing and feature engineering to make the data suitable for modeling. Finally, you will build several machine learning models and test them to compare their performance.
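
To make the build-and-compare step concrete, here is a minimal Scikit-Learn sketch. It is not the notebook's code: the two classifiers are assumptions picked for illustration, the data is synthetic (generated by `make_classification`, with an arbitrary class imbalance), and ROC AUC is used as the comparison metric because the notebook includes an ROC curve section.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced stand-in for the preprocessed churn features.
X, y = make_classification(
    n_samples=1000, n_features=10, n_informative=5,
    weights=[0.7, 0.3], random_state=42,
)

# Hold out 20% for testing, keeping the class ratio the same in both splits.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Candidate models (an illustrative choice, not the notebook's list).
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

scores = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    # Probability of the positive class, which ROC AUC needs.
    churn_probability = model.predict_proba(X_test)[:, 1]
    scores[name] = roc_auc_score(y_test, churn_probability)

# Report the candidates from best to worst.
for name, auc in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: ROC AUC = {auc:.3f}")
```

Scoring every candidate on the same held-out split keeps the comparison fair; on real churn data the ranking may differ from what the synthetic data suggests.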

Click on the following button to clone the repo for this frontend app and create a toolchain to start deploying the app from there.

- Under `IBM Cloud API Key:` choose `Create+`, and then click on `Deploy`.

To monitor the deployment, in Toolchains click on Delivery Pipeline and view the logs while the apps are being deployed.

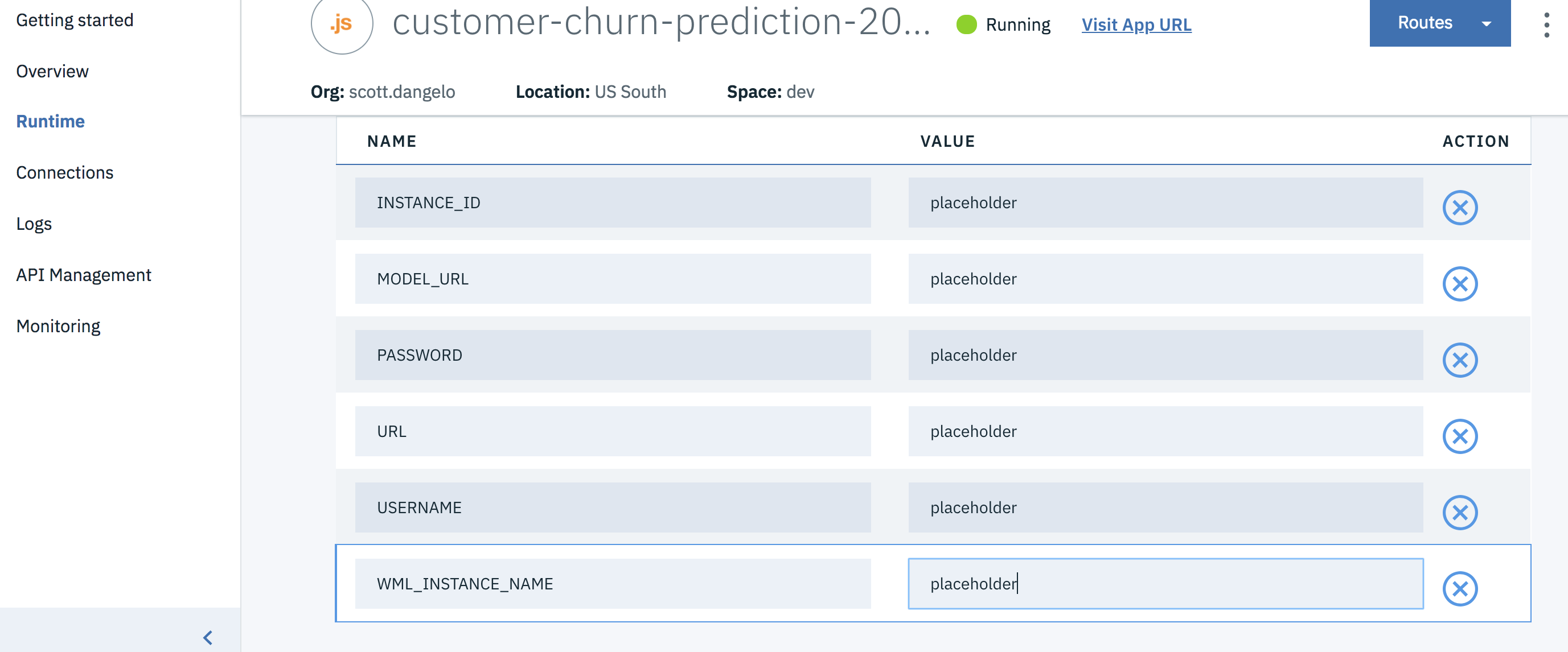

- Once the app has deployed, click on `Runtime` on the menu and navigate to the `Environment variables` tab.

- Update the 5 environment variables with the `WML_INSTANCE_NAME`, `USERNAME`, `PASSWORD`, `INSTANCE_ID`, and `URL` that you saved at the end of Create Watson Machine Learning Service instance. Add the `MODEL_URL` that you created in the Notebook as the variable `scoring_endpoint`. The app will automatically restart and be ready for use.
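
The `MODEL_URL` is the scoring endpoint the app posts customer data to. As a sketch of what such a request might look like, the example below assembles one without sending it. Several things here are assumptions rather than facts from this pattern: the `fields`/`values` payload layout, the bearer-token header, the placeholder endpoint, and the three example fields (a real request must supply every feature the model was trained on, in the same order). `build_scoring_request` is a hypothetical helper.

```python
import json
import urllib.request

# Placeholder endpoint; substitute the MODEL_URL produced by the notebook.
MODEL_URL = "https://example.invalid/scoring-endpoint"


def build_scoring_request(model_url, token, fields, rows):
    """Assemble (but do not send) a JSON scoring request."""
    for row in rows:
        if len(row) != len(fields):
            raise ValueError("each row must have one value per field")
    # Assumed payload layout: column names plus one list of values per row.
    payload = {"fields": list(fields), "values": [list(row) for row in rows]}
    return urllib.request.Request(
        model_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


request = build_scoring_request(
    MODEL_URL,
    token="<access token>",
    fields=["gender", "tenure", "MonthlyCharges"],
    rows=[["Female", 1, 29.85]],
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# Sending is left out on purpose; urllib.request.urlopen(request) would do it.
```

Checking the row length against the field list up front catches the most common mistake, a value missing from one row, before anything reaches the service.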

For developing the UI locally and testing it:

- `cd frontend/`

- Create a `.env` file in frontend folder (`frontend/.env`) to hold your credentials.

cp env.example .env

For our purposes here, our .env file will look like the following:

WML_INSTANCE_NAME=**Enter with your Watson Machine Learning service instance name**

USERNAME=**Enter your WML username found in credentials**

PASSWORD=**Enter your WML password found in credentials**

INSTANCE_ID=**Enter your WML instance_id found in credentials**

URL=**Enter your WML url found in credentials**

MODEL_URL=**Change with your model URL after deploying it to the cloud as in step 8**

- Fill in the variables in the `.env` file using the values obtained at the end of Create Watson Machine Learning Service instance. Add the `MODEL_URL` that you created in the Notebook.

- Run the application.

cd frontend/

npm install

npm start

You can view the application in any browser by navigating to http://localhost:3000. Feel free to test it out.
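
If the app starts but cannot reach the model, an incomplete `.env` file is a likely cause. The sketch below is a small, hypothetical checker (not part of the repository) that parses simple `KEY=VALUE` lines and reports which of the six variables are missing, empty, or still hold a `**...**` placeholder from `env.example`. It does not handle quoting or other features a full dotenv parser would.

```python
REQUIRED_KEYS = (
    "WML_INSTANCE_NAME", "USERNAME", "PASSWORD",
    "INSTANCE_ID", "URL", "MODEL_URL",
)


def parse_env(text):
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def unfinished_keys(values):
    """Required keys that are missing, empty, or still a **placeholder**."""
    return [
        key for key in REQUIRED_KEYS
        if not values.get(key) or values[key].startswith("**")
    ]


# Made-up partial file used to demonstrate the check.
example = """
WML_INSTANCE_NAME=my-wml-instance
USERNAME=**Enter your WML username found in credentials**
URL=https://example.invalid
"""
print(unfinished_keys(parse_env(example)))
```

To check the real file, pass its contents instead of `example`, for instance `parse_env(open("frontend/.env").read())`.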

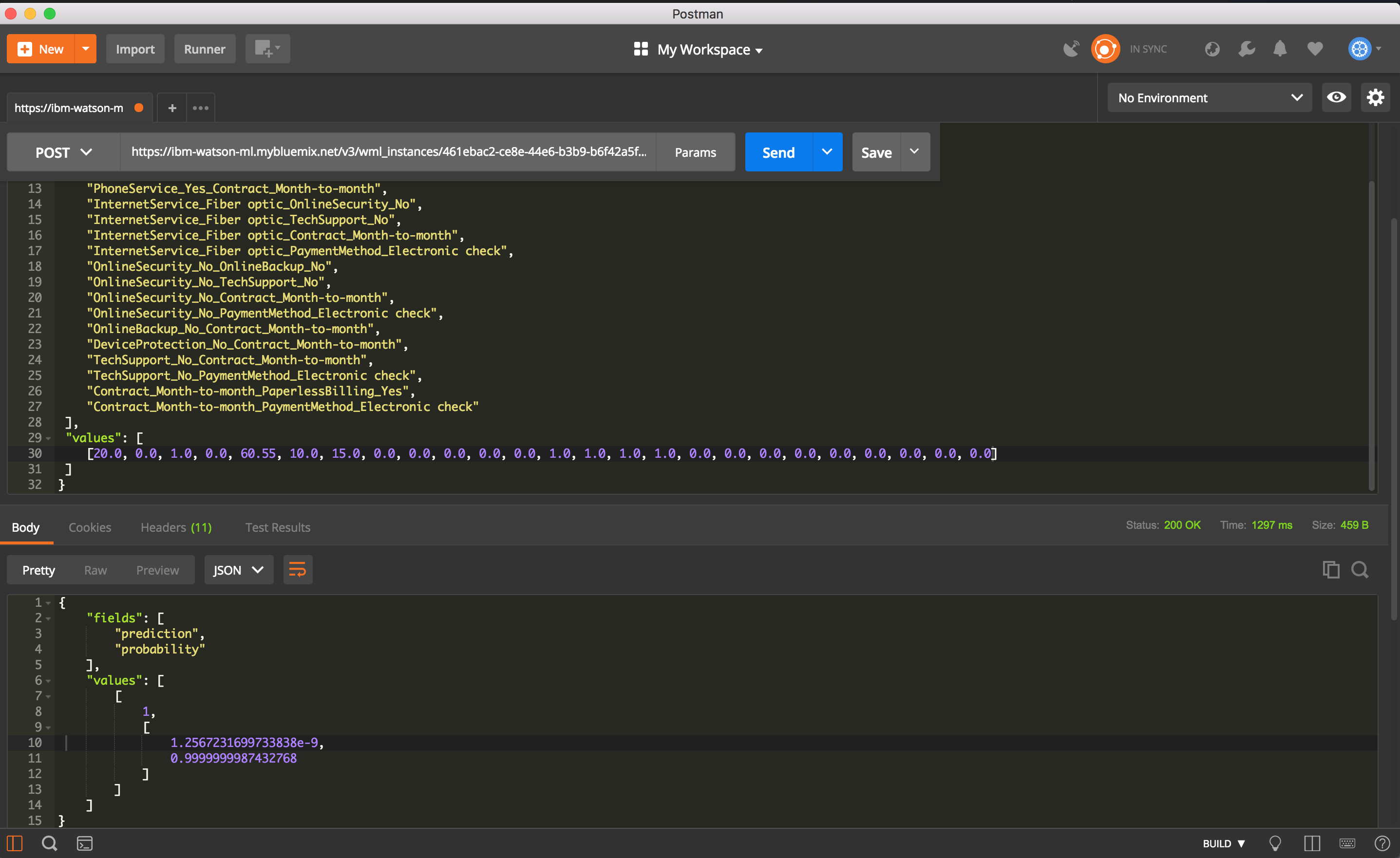

- Using Postman:

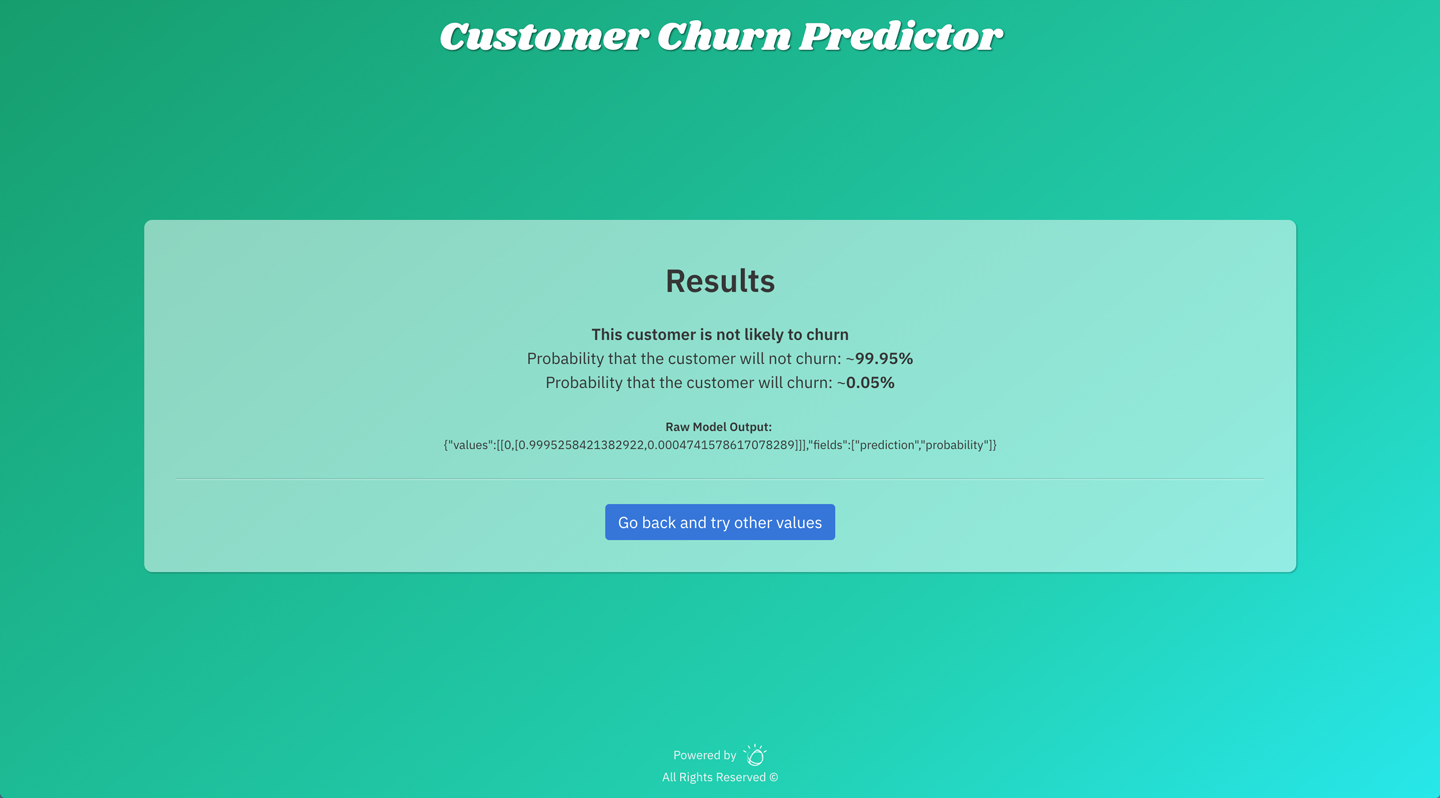

- Using the UI app:

- Artificial Intelligence Code Patterns: Enjoyed this Code Pattern? Check out our other AI Code Patterns.

- Data Analytics Code Patterns: Enjoyed this Code Pattern? Check out our other Data Analytics Code Patterns.

- AI and Data Code Pattern Playlist: Bookmark our playlist with all of our Code Pattern videos.

- With Watson: Want to take your Watson app to the next level? Looking to utilize Watson Brand assets? Join the With Watson program to leverage exclusive brand, marketing, and tech resources to amplify and accelerate your Watson embedded commercial solution.

- IBM Watson Studio: Master the art of data science with IBM's Watson Studio

This code pattern is licensed under the Apache Software License, Version 2. Separate third party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses. Contributions are subject to the Developer Certificate of Origin, Version 1.1 (DCO) and the Apache Software License, Version 2.