- See What's New

- Introduction

- Matters Needing Attention

- Current Support

- Minimum Requirements

- Models we have tested

- Getting Started

- FAQ

- Reference

- Contributors

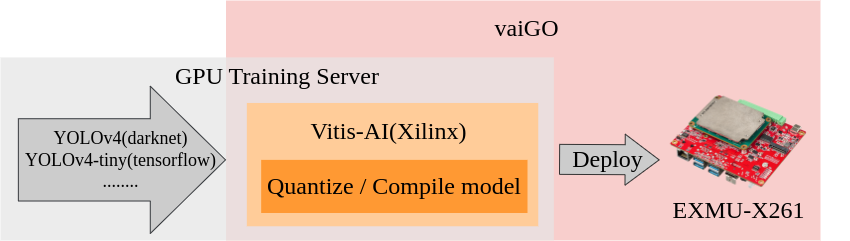

This repository is based on Xilinx Vitis-AI. It aims to provide an easier way to use Vitis-AI to convert AI models for FPGAs. In this repository, we integrated the Vitis-AI xmodel compile flow and an evaluation method into our GO.

As the following figure shows, a trained model can be quantized, compiled to an xmodel, and then deployed on an FPGA. For how to perform AI inference on an FPGA, refer to dpu-sc. For more FPGA information, see the EXMU-X261 user manual.

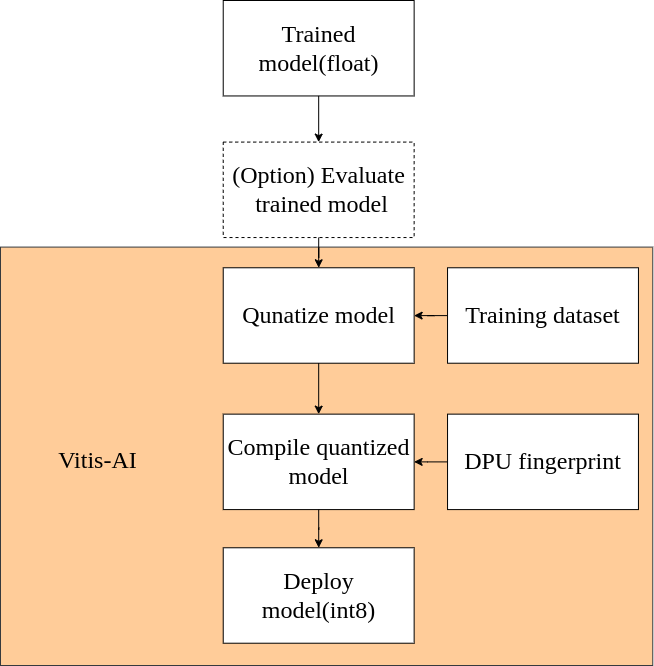

The following chart shows the Vitis-AI model conversion flow. Evaluating the model first helps catch problems that would otherwise surface during quantization or compilation. The evaluation step is optional, so you can choose whether to evaluate the model.
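The flow above can be sketched as a small driver in which evaluation is an opt-in gate in front of quantization and compilation. This is a minimal sketch under stated assumptions: `run_conversion_flow` and the three callables are hypothetical placeholders, not GO's actual API.

```python
from typing import Callable, List, Optional


def run_conversion_flow(
    quantize: Callable[[], None],
    compile_xmodel: Callable[[], None],
    evaluate: Optional[Callable[[], bool]] = None,
) -> List[str]:
    """Run quantize -> compile, optionally preceded by an evaluation gate.

    All three callables are hypothetical stand-ins for GO's real steps.
    Returns the names of the stages that were executed, in order.
    """
    executed = []  # type: List[str]
    if evaluate is not None:
        # Optional gate: stop before quantizing if evaluation finds a problem.
        if not evaluate():
            raise RuntimeError("model evaluation failed; fix the model before quantizing")
        executed.append("evaluate")
    quantize()
    executed.append("quantize")
    compile_xmodel()
    executed.append("compile")
    return executed
```

Skipping evaluation is just a matter of not passing `evaluate`; the quantize and compile stages run the same way in both cases.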

Please select the correct version before downloading Vitis-AI from GitHub. The version depends on the Vitis-AI library version on the FPGA system.

- To check the VAI version, refer to the FAQ: How to check the VAI version and fingerprint on FPGA?

In the model compilation step, the fingerprint depends on the VAI version and the channel parallelism of the DPU IP on the FPGA.

- To check the DPU IP information, refer to the FAQ: How to check the VAI version and fingerprint on FPGA?
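The Vitis-AI compilers read the target DPU from an `arch.json` file that carries this fingerprint. The sketch below is minimal and rests on a few assumptions: the quantizer produced an xmodel (for example, the PyTorch flow), and it is compiled with `vai_c_xir` inside the container. The helper names are hypothetical. The fingerprint, paths, and network name are placeholders that you must replace with the values queried on your FPGA.

```python
import json
from typing import List


def arch_json_text(fingerprint: str) -> str:
    """Return arch.json content that targets the DPU with this fingerprint."""
    int(fingerprint, 16)  # raises ValueError unless it is a hex string such as "0x..."
    return json.dumps({"fingerprint": fingerprint})


def write_arch_json(path: str, fingerprint: str) -> None:
    """Write the arch.json file passed to the compiler with -a."""
    with open(path, "w") as f:
        f.write(arch_json_text(fingerprint))


def vai_c_xir_command(xmodel: str, arch_json: str, out_dir: str, net_name: str) -> List[str]:
    """Build (but do not run) a vai_c_xir call for a quantized xmodel."""
    return ["vai_c_xir", "-x", xmodel, "-a", arch_json, "-o", out_dir, "-n", net_name]
```

Inside the container you could then run the returned list with `subprocess.run(cmd, check=True)`. If the fingerprint does not match the DPU IP on the board, the compiled xmodel will not match that DPU.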

Make sure all of your commands are executed inside the Vitis-AI container, because its OS environment differs from the host.
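Scripts can guard against accidentally running on the host. This minimal sketch relies on a common heuristic, which is an assumption and not a Vitis-AI guarantee: Docker creates a `/.dockerenv` file inside containers. The check only confirms that you are in *some* Docker container, not specifically the Vitis-AI one. The function names are hypothetical.

```python
import os

DOCKER_MARKER = "/.dockerenv"  # heuristic: Docker creates this file inside containers


def running_in_container(marker: str = DOCKER_MARKER) -> bool:
    """Best-effort check that the current process runs inside a Docker container."""
    return os.path.exists(marker)


def require_container(marker: str = DOCKER_MARKER) -> None:
    """Abort early instead of running a conversion step on the host."""
    if not running_in_container(marker):
        raise RuntimeError("run this step inside the Vitis-AI container")
```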

Note: Caffe has been deprecated since Vitis-AI 2.5 (see the Vitis-AI user guide), so GO does not support Caffe.

Supported frameworks depend on the Vitis-AI container:

- TensorFlow 1

- TensorFlow 2

- PyTorch
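Each framework runs in its own conda environment inside the container. This minimal sketch assumes the environment names used by the Vitis-AI 1.4/2.5 Docker images (`vitis-ai-tensorflow`, `vitis-ai-tensorflow2`, `vitis-ai-pytorch`). Verify them with `conda env list` in your container. The helper name is hypothetical. Caffe is rejected explicitly because GO does not support it.

```python
# Assumed conda environment names in the Vitis-AI 1.4/2.5 containers.
CONDA_ENVS = {
    "tensorflow1": "vitis-ai-tensorflow",
    "tensorflow2": "vitis-ai-tensorflow2",
    "pytorch": "vitis-ai-pytorch",
}


def conda_env_for(framework: str) -> str:
    """Map a framework name such as 'TensorFlow 2' to its container conda env."""
    key = framework.strip().lower().replace(" ", "")
    if key == "caffe":
        raise ValueError("Caffe is deprecated since Vitis-AI 2.5 and not supported by GO")
    if key not in CONDA_ENVS:
        raise ValueError("unsupported framework: " + framework)
    return CONDA_ENVS[key]
```

You would then activate the result, for example `conda activate vitis-ai-pytorch`, before quantizing.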

Supported dataset formats

Note: The dataset includes a training set and a validation set (at least 50 images each). Make sure the training dataset folder is inside the Vitis-AI folder.

- png

- jpg

- jpeg
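The dataset requirements above can be checked before quantization. This minimal sketch assumes hypothetical `train/` and `val/` subfolders under the dataset root. It recursively counts files with the supported extensions and rejects any split with fewer than 50 images.

```python
import os
from typing import Dict, Sequence

SUPPORTED_EXTS = {".png", ".jpg", ".jpeg"}
MIN_IMAGES = 50


def is_supported_image(name: str) -> bool:
    """True for .png/.jpg/.jpeg files, case-insensitive."""
    return os.path.splitext(name)[1].lower() in SUPPORTED_EXTS


def count_images(folder: str) -> int:
    """Count supported image files under folder (0 if it does not exist)."""
    total = 0
    for _root, _dirs, files in os.walk(folder):
        total += sum(1 for name in files if is_supported_image(name))
    return total


def check_dataset(root: str, splits: Sequence[str] = ("train", "val")) -> Dict[str, int]:
    """Return per-split image counts, or raise if any split is too small."""
    counts = {s: count_images(os.path.join(root, s)) for s in splits}
    too_small = [s for s, n in counts.items() if n < MIN_IMAGES]
    if too_small:
        raise ValueError("need at least %d images in: %s" % (MIN_IMAGES, ", ".join(too_small)))
    return counts
```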

Supported trained model frameworks

- TensorFlow

- PyTorch

- Darknet

- Ubuntu 18.04

- Python 3, at least 3.5

- Intel Core i5-12400

- RAM: at least 32 GB

- GPU (one of the following configurations):
  - Tesla T4 16GB or RTX2070 Super 12GB, NVIDIA driver 470.103.01, CUDA 10.x
  - RTX3080 12GB, NVIDIA driver 470.141.03, CUDA 11.x
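The Python and RAM minimums listed above can be checked with a short script. This minimal sketch works on Linux only, because it reads total RAM through `os.sysconf`, which is unavailable on Windows. It does not check the GPU, driver, or CUDA version. The 5% RAM tolerance is an assumption: the kernel usually reports slightly less than the installed 32 GB.

```python
import os
import sys

MIN_PYTHON = (3, 5)
MIN_RAM_GB = 32
RAM_TOLERANCE = 0.95  # assumption: allow for memory reserved by firmware/kernel


def python_ok(version=None) -> bool:
    """True if the given (or running) Python version is at least 3.5."""
    version = sys.version_info if version is None else version
    return tuple(version[:2]) >= MIN_PYTHON


def total_ram_gb() -> float:
    """Total physical memory in GiB (Linux: page size * number of pages)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024 ** 3


def ram_ok(ram_gb=None) -> bool:
    """True if the given (or detected) RAM meets the 32 GB minimum."""
    ram_gb = total_ram_gb() if ram_gb is None else ram_gb
    return ram_gb >= MIN_RAM_GB * RAM_TOLERANCE
```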

Note: We have verified that the following models can be quantized and compiled successfully. Other models may fail during quantization or compilation; please check the Xilinx supported operations list (see Supported Operations and APIs in the Vitis-AI user guide).

TensorFlow 1

- YOLOv3-tiny

- YOLOv4

- YOLOv4-tiny

TensorFlow 2

- YOLOv4-tiny

- CNN

- InceptionNet

PyTorch

- LPRNet

- Vitis-AI Tutorials to convert models: https://github.com/Xilinx/Vitis-AI-Tutorials

- To convert models to TensorFlow, we referred to the following repository:

  - david8862's keras-YOLOv3-model-set: https://github.com/david8862/keras-YOLOv3-model-set

- To train YOLOv4-tiny, we referred to the following repository:

  - yss9701's Ultra96-Yolov4-tiny-and-Yolo-Fastest: https://github.com/yss9701/Ultra96-Yolov4-tiny-and-Yolo-Fastest

- Vitis-AI 1.4 user guide: https://docs.xilinx.com/r/1.4.1-English/ug1414-vitis-ai

- Vitis-AI 2.5 user guide: https://docs.xilinx.com/r/2.5-English/ug1414-vitis-ai

- txt2xml: https://zhuanlan.zhihu.com/p/391137600

| Author | Email | Company |
|---|---|---|
| Allen.H | allen_huang@innodisk.com | innodisk Inc |
| Hueiru | hueiru_chen@innodisk.com | innodisk Inc |
| Wilson | wilson_yeh@innodisk.com | innodisk Inc |
| Jack | juihung_weng@innodisk.com | innodisk Inc |