Wedge Dropout drives apart Convolutional Neural Network feature maps which are too close together.

Wedge Dropout is a regularization technique for optimizing Convolutional Neural Networks. This repo contains documentation and an installable Python package for TensorFlow 2 called keras-wedge.

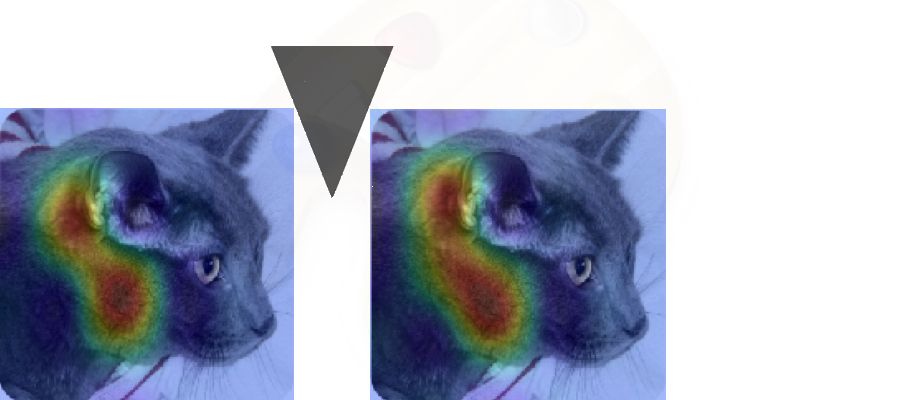

pip install git+https://github.com/LanceNorskog/keras-wedge.gitThese two feature maps were generated by a Convolutional Neural Network from a picture of a cat. As you can see, the feature maps have a lot of overlap. This is not an optimal use of feature maps- they should be independent and uncorrelated.

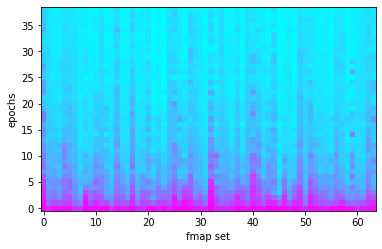

Wedge Dropout is a regularization technique which makes these feature maps less similar. Here is a heatmap showing correlation across all 64 feature maps for a very simple Convolutional Neural Network:

At the start of training, the feature maps are strongly correlated (magenta) and quickly become less correlated as training continues. Feature maps naturally become decorrelated during training; Wedge Dropout optimizes this process of decorrelation.
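The kind of correlation matrix behind such a heatmap can be sketched in a few lines of NumPy. This is a minimal illustration, not code from keras-wedge: the function name `feature_map_correlation`, the single-image `(height, width, channels)` layout, and the use of Pearson correlation are all assumptions made for the example.

```python
import numpy as np

def feature_map_correlation(fmaps):
    """Pearson correlation between every pair of feature maps.

    fmaps: array of shape (height, width, channels) for one image
    (an assumed layout). Returns a (channels, channels) matrix.
    """
    h, w, c = fmaps.shape
    flat = fmaps.reshape(h * w, c).T                 # one row per feature map
    flat = flat - flat.mean(axis=1, keepdims=True)   # center each map
    norms = np.linalg.norm(flat, axis=1, keepdims=True)
    flat = flat / np.maximum(norms, 1e-12)           # constant maps end up with zero correlation
    return flat @ flat.T

# Synthetic activations standing in for a 64-channel convolutional layer.
rng = np.random.default_rng(0)
fmaps = rng.random((8, 8, 64))
fmaps[..., 1] = 2.0 * fmaps[..., 0] + 0.5            # make map 1 a rescaled copy of map 0
corr = feature_map_correlation(fmaps)
```

Plotting `corr` with any heatmap function gives a picture of this type; here entry `corr[0, 1]` is close to 1 because map 1 is a linear function of map 0.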

Wedge Dropout implements a recent technique in convolutional network design: critiquing feature maps. Wedge Dropout analyzes random pairs of feature maps created by a CNN and applies negative feedback when the two feature maps in a pair are too strongly correlated. This has the effect of improving the CNN's performance. After all, if two feature maps describe the same feature:

- they are redundant, which means that the feature map set is not as descriptive as it could be

- they could bias the model by ascribing extra importance to that feature
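To make the idea of critiquing random pairs concrete, here is a rough NumPy sketch. It is not the keras-wedge implementation: the function name `critique_random_pairs`, the threshold value, and the choice of zeroing both maps as the form of negative feedback are assumptions made purely for illustration.

```python
import numpy as np

def critique_random_pairs(fmaps, threshold=0.5, rng=None):
    """Zero out randomly paired feature maps whose correlation exceeds threshold.

    fmaps: (height, width, channels) activations for one image.
    The threshold and the zeroing rule are illustrative assumptions,
    not the rule used by keras-wedge.
    """
    rng = np.random.default_rng() if rng is None else rng
    c = fmaps.shape[-1]
    out = fmaps.copy()
    order = rng.permutation(c)
    dropped = []
    for a, b in zip(order[0::2], order[1::2]):       # disjoint random pairs
        x = fmaps[..., a].ravel()
        y = fmaps[..., b].ravel()
        x = x - x.mean()
        y = y - y.mean()
        denom = np.linalg.norm(x) * np.linalg.norm(y)
        corr = float(x @ y / denom) if denom > 0 else 0.0
        if corr > threshold:                         # the pair is redundant
            out[..., a] = 0.0
            out[..., b] = 0.0
            dropped.append((int(a), int(b)))
    return out, dropped

# Two maps that describe the same feature: one is a rescaled copy of the other.
ramp = np.arange(16, dtype=float).reshape(4, 4)
duplicated = np.stack([ramp, 3.0 * ramp], axis=-1)
_, dropped_dup = critique_random_pairs(duplicated, rng=np.random.default_rng(0))

# Two uncorrelated maps: a vertical gradient and a horizontal gradient.
cols, rows = np.meshgrid(np.arange(4.0), np.arange(4.0))
independent = np.stack([rows, cols], axis=-1)
kept, dropped_ind = critique_random_pairs(independent, rng=np.random.default_rng(0))
```

In this sketch the duplicated pair is suppressed while the independent pair passes through unchanged. During training, suppressing redundant maps would push the network toward learning distinct features.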

See this notebook for a detailed explanation of the concept:

See this notebook for a demonstration of our analysis function in a simple CNN: