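Yifei Dong<sup>1,*</sup>, Fengyi Wu<sup>1,*</sup>, Guangyu Chen<sup>1,*</sup>, Zhi-Qi Cheng<sup>1,†</sup>, Qiyu Hu<sup>1</sup>, Yuxuan Zhou<sup>1</sup>, Jingdong Sun<sup>2</sup>, Jun-Yan He<sup>1</sup>, Qi Dai<sup>3</sup>, Alexander G Hauptmann<sup>2</sup>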

<sup>1</sup>University of Washington, <sup>2</sup>Carnegie Mellon University, <sup>3</sup>Microsoft Research

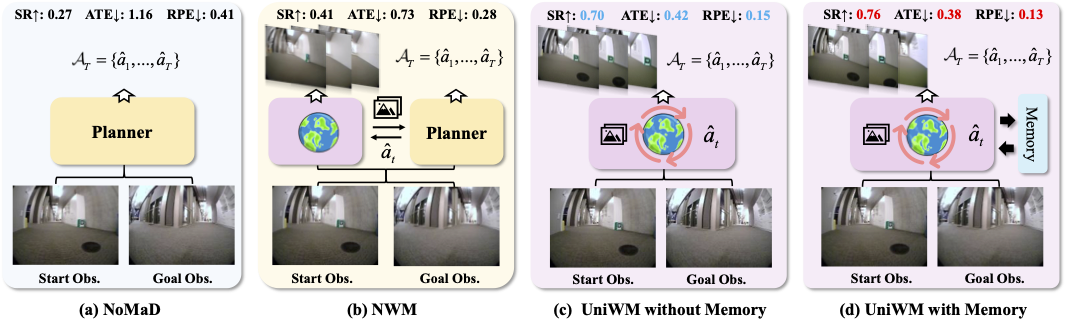

UniWM introduces a unified, memory-augmented world model paradigm that integrates egocentric visual foresight and planning within a single multimodal autoregressive backbone. Unlike modular frameworks, UniWM explicitly grounds action decisions in visually imagined outcomes, ensuring tight alignment between visualization and planning. A hierarchical memory mechanism further combines detailed short-term perceptual cues with longer-term trajectory context, enabling stable, coherent reasoning over extended horizons.
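The hierarchical memory is described here only at a high level. To illustrate the general idea of pairing fine-grained recent context with coarser long-range context, here is a minimal sketch, assuming each navigation step is summarized by a feature vector. The class `HierarchicalMemory`, its parameters `short_len` and `chunk`, and the use of mean-pooling to compress old steps are all hypothetical choices for illustration, not UniWM's actual mechanism.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Sequence


@dataclass
class HierarchicalMemory:
    """Toy two-level memory (hypothetical; NOT the UniWM implementation).

    Short-term: the last `short_len` per-step feature vectors, kept verbatim.
    Long-term: one mean-pooled summary per `chunk` steps that have aged out
    of the short-term window.
    """
    short_len: int = 4
    chunk: int = 4
    short_term: Deque[List[float]] = field(default_factory=deque)
    long_term: List[List[float]] = field(default_factory=list)
    _pending: List[List[float]] = field(default_factory=list)

    def add(self, feat: Sequence[float]) -> None:
        self.short_term.append(list(feat))
        if len(self.short_term) > self.short_len:
            # The oldest step leaves the short-term window; buffer it for summarization.
            self._pending.append(self.short_term.popleft())
            if len(self._pending) == self.chunk:
                # Compress a full chunk of evicted steps into one mean vector (assumed scheme).
                dim = len(self._pending[0])
                summary = [sum(f[i] for f in self._pending) / self.chunk for i in range(dim)]
                self.long_term.append(summary)
                self._pending.clear()

    def context(self) -> List[List[float]]:
        # Coarse long-term summaries first, then the detailed recent steps.
        return self.long_term + list(self.short_term)


mem = HierarchicalMemory(short_len=2, chunk=2)
for t in range(6):
    mem.add([float(t)])
print(mem.context())  # [[0.5], [2.5], [4.0], [5.0]]
```

With `short_len=2` and `chunk=2`, steps 4 and 5 stay in full detail while steps 0 to 3 survive only as two averaged summaries, so the context grows much more slowly than the trajectory.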

```bash
conda create -n uniwm python=3.10
conda activate uniwm
pip install torch==2.4.0
pip install -r requirements.txt --user
```

We have released a partial dataset for debugging and for demonstrating the data format. The samples are in `data_samples`.

To train the model on multiple datasets, use the following `torchrun` command. `train.py` supports multi-GPU distributed training; an example launch script is provided in `train.sh`.

```bash
torchrun --nproc_per_node={GPU_NUM_PER_NODE} train.py \
  --model anole \
  --data go_stanford,scand,sacson,recon \
  --data_dir /path/to/your/data_samples \
  --decoder_type anole \
  --image_seq_length 784 \
  --input_format anole \
  --output /path/to/save/output \
  --note {experiment_note} \
  --report_to none \
  --do_train \
  --bfloat16
```

To evaluate a trained model, use the command below. The script supports several evaluation modes, each selected with its own flag (an example is provided in `eval.sh`).

```bash
torchrun --nproc_per_node={GPU_NUM_PER_NODE} train.py \
  --model anole \
  --model_ckpt /path/to/your/checkpoint \
  --data go_stanford,scand,sacson,recon \
  --data_dir /path/to/your/data_samples \
  --decoder_type anole \
  --image_seq_length 784 \
  --input_format anole \
  --output /path/to/save/eval_results \
  --note {experiment_note} \
  --report_to none \
  --do_single_step_eval
# Choose ONE of the following evaluation flags for the last line above:
#   --do_single_step_eval
#   --do_task_level_eval
#   --do_rollout_eval
# Optional: append --use_memory_bank_inference
```

- `--do_single_step_eval`: Evaluates the model's performance on a single prediction step.
- `--do_task_level_eval`: Evaluates the model end to end on the full task across an entire trajectory. You can optionally enable the memory bank by adding the `--use_memory_bank_inference` flag; if it is omitted, evaluation runs with the memory bank disabled.
- `--do_rollout_eval`: Generates a full trajectory autoregressively (i.e., the model uses its own previous predictions and the ground-truth actions as input for the next step) and evaluates the result.
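When launching many runs (for example, sweeping datasets or evaluation modes), it can help to assemble the commands programmatically. The helper below is a hypothetical sketch, not part of this repository: `build_command`, `COMMON_FLAGS`, and `EVAL_MODES` are illustrative names. It uses only the flags shown in the commands above and enforces choosing exactly one evaluation mode.

```python
from typing import List, Optional

# Flags shared by the training and evaluation commands in this README.
COMMON_FLAGS = {
    "--model": "anole",
    "--decoder_type": "anole",
    "--image_seq_length": "784",
    "--input_format": "anole",
    "--report_to": "none",
}
# Each mode maps to a --do_<mode>_eval flag.
EVAL_MODES = {"single_step", "task_level", "rollout"}


def build_command(gpus: int, datasets: List[str], data_dir: str, output: str,
                  note: str, eval_mode: Optional[str] = None,
                  ckpt: Optional[str] = None,
                  use_memory_bank: bool = False) -> List[str]:
    """Return a torchrun argv list; eval_mode=None builds the training command."""
    cmd = ["torchrun", f"--nproc_per_node={gpus}", "train.py"]
    for flag, value in COMMON_FLAGS.items():
        cmd += [flag, value]
    cmd += ["--data", ",".join(datasets), "--data_dir", data_dir,
            "--output", output, "--note", note]
    if eval_mode is None:
        return cmd + ["--do_train", "--bfloat16"]
    if eval_mode not in EVAL_MODES:
        raise ValueError(f"eval_mode must be one of {sorted(EVAL_MODES)}")
    if ckpt is None:
        raise ValueError("evaluation needs a checkpoint (--model_ckpt)")
    cmd += ["--model_ckpt", ckpt, f"--do_{eval_mode}_eval"]
    if use_memory_bank:
        # Documented above for task-level evaluation.
        cmd.append("--use_memory_bank_inference")
    return cmd
```

For example, `subprocess.run(build_command(4, ["go_stanford", "recon"], "/path/to/your/data_samples", "/path/to/save/eval_results", "demo", eval_mode="task_level", ckpt="/path/to/your/checkpoint", use_memory_bank=True), check=True)` would launch a task-level evaluation with the memory bank enabled. Returning an argv list rather than a shell string avoids quoting issues.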
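To make the difference between rollout and single-step evaluation concrete, here is a minimal, framework-agnostic sketch. `predict_next`, `rollout`, and `single_step` are hypothetical names; the real evaluation in `train.py` operates on images and actions and may differ. Treating single-step evaluation as conditioning on the ground-truth observation is also an assumption made for this illustration.

```python
from typing import Callable, List, Sequence, TypeVar

Obs = TypeVar("Obs")
Act = TypeVar("Act")


def rollout(predict_next: Callable[[Obs, Act], Obs], first_obs: Obs,
            gt_actions: Sequence[Act]) -> List[Obs]:
    """Autoregressive rollout: each step conditions on the model's OWN previous
    prediction (not the ground-truth observation) plus the ground-truth action."""
    obs = first_obs
    predicted: List[Obs] = []
    for action in gt_actions:
        obs = predict_next(obs, action)  # feed the prediction back in
        predicted.append(obs)
    return predicted


def single_step(predict_next: Callable[[Obs, Act], Obs], gt_obs: Sequence[Obs],
                gt_actions: Sequence[Act]) -> List[Obs]:
    """Single-step baseline (assumed): every prediction starts from ground truth."""
    return [predict_next(o, a) for o, a in zip(gt_obs, gt_actions)]


# Toy 1-D "world": the true next position is pos + step, but the model is off by +1.
biased_model = lambda pos, step: pos + step + 1
print(rollout(biased_model, 0, [1, 1, 1]))                # [2, 4, 6]
print(single_step(biased_model, [0, 1, 2], [1, 1, 1]))    # [2, 3, 4]
```

Against the true positions `[1, 2, 3]`, the single-step errors stay at 1 per step while the rollout errors grow to 1, 2, 3, which is why rollout evaluation exposes compounding errors that single-step evaluation hides.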

We would like to thank ANOLE and MVOT for their publicly available codebases, which we referenced when implementing Anole training.

If you find this repository or our paper useful, please consider starring this repository and citing our paper:

```bibtex
@misc{dong2025unifiedworldmodelsmemoryaugmented,
  title={Unified World Models: Memory-Augmented Planning and Foresight for Visual Navigation},
  author={Yifei Dong and Fengyi Wu and Guangyu Chen and Zhi-Qi Cheng and Qiyu Hu and Yuxuan Zhou and Jingdong Sun and Jun-Yan He and Qi Dai and Alexander G Hauptmann},
  year={2025},
  eprint={2510.08713},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2510.08713},
}
```