Transfer learning from NVIDIA's pre-trained StyleGAN (FFHQ)

Bearwithchris opened this issue · 3 comments

Hi,

Using the pre-trained pkl file https://api.ngc.nvidia.com/v2/models/nvidia/research/stylegan2/versions/1/files/stylegan2-ffhq-256x256.pkl, I've attempted transfer learning (without augmentation) from FFHQ to CelebA-HQ.

python train.py --outdir=~/training-runs --data=~/datasets/FFHQ/GenderTrainSamples_0.025.zip --gpus=1 --workers 1 --resume=https://api.ngc.nvidia.com/v2/models/nvidia/research/stylegan2/versions/1/files/stylegan2-ffhq-256x256.pkl --aug=noaug --kimg 200

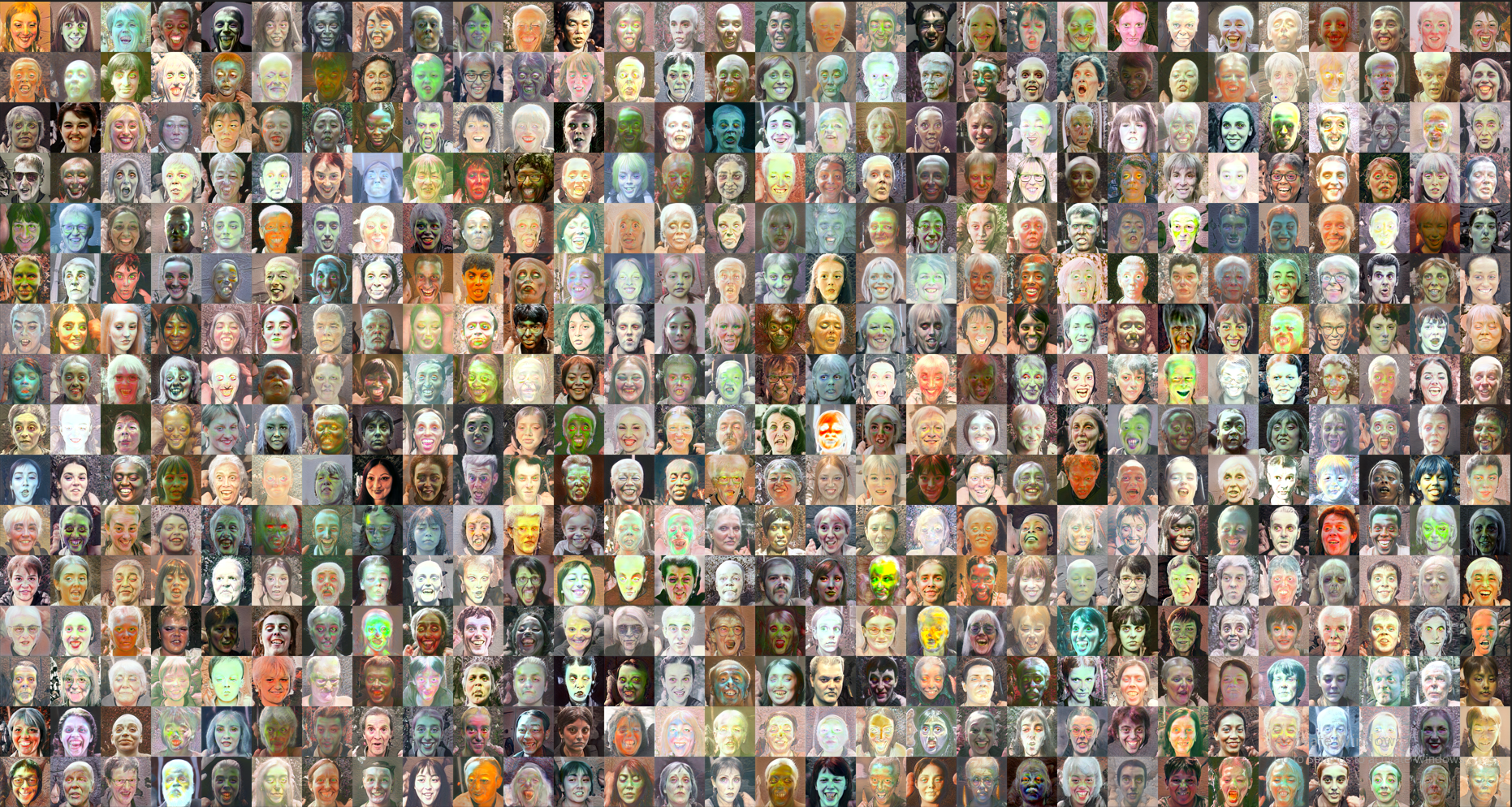

However, when I look at the initial generated images, I see this:

But when I compute the FID against the FFHQ dataset, I get FID ≈ 9.

Can anyone explain what is going on?

Hi, I faced the same problem and found that the default model configuration differs from the one the pre-trained model was trained with.

Solution

Change the configuration from --cfg=auto (default) to --cfg=paper256 for this pre-trained model.

(For other pre-trained models, use the same configuration they were trained with.)

fakes_init.png with --cfg=auto:

fakes_init.png with --cfg=paper256:

Explanation

The configuration controls the model's channel_base, the number of layers in the mapping network, and the group size of the discriminator's minibatch standard deviation layer. For instance, the mapping network has 8 layers with --cfg=paper256 but only 2 layers with --cfg=auto.

To keep the model structure identical to the pre-trained one, make sure that fmaps, map, and mbstd in the configuration match the values the model was trained with.

stylegan2-ada-pytorch/train.py, lines 154 to 161 (commit 6f160b3)

stylegan2-ada-pytorch/train.py, lines 176 to 183 (commit 6f160b3)
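To see which architecture settings actually differ at 256x256 on a single GPU (the setup in the original command), here is a small sketch that re-implements, in plain Python, the part of train.py that turns a --cfg preset into network kwargs. The preset values and the `auto` formulas are my own transcription of train.py at 6f160b3 and may not match other versions; the helper names (auto_spec, network_kwargs, mismatches) are hypothetical and not part of the repository.

```python
# Sketch only: values transcribed from stylegan2-ada-pytorch train.py (6f160b3)
# as I read it; verify against your own checkout. Helper names are hypothetical.

# Architecture-relevant fields of the 'paper256' preset.
PAPER256 = dict(mbstd=8, fmaps=0.5, map=8)


def auto_spec(res, gpus):
    """Approximate the 'auto' branch of train.py for a resolution and GPU count."""
    mb = max(min(gpus * min(4096 // res, 32), 64), gpus)  # total minibatch size
    return dict(
        mbstd=min(mb // gpus, 4),         # minibatch-stddev group size
        fmaps=1 if res >= 512 else 0.5,   # feature-map multiplier
        map=2,                            # 'auto' uses a 2-layer mapping network
    )


def network_kwargs(spec):
    """Map a preset to the kwargs that shape the G/D networks."""
    return dict(
        channel_base=int(spec["fmaps"] * 32768),
        mapping_layers=spec["map"],
        mbstd_group_size=spec["mbstd"],
    )


def mismatches(a, b):
    """Return {kwarg: (value_in_a, value_in_b)} for every kwarg that differs."""
    ka, kb = network_kwargs(a), network_kwargs(b)
    return {k: (ka[k], kb[k]) for k in ka if ka[k] != kb[k]}


if __name__ == "__main__":
    diff = mismatches(auto_spec(res=256, gpus=1), PAPER256)
    for key, (auto_val, paper_val) in diff.items():
        print(f"{key}: auto={auto_val}, paper256={paper_val}")
```

Under these assumptions, the differences are the mapping depth (2 vs. 8) and the minibatch-stddev group size (4 vs. 8), while channel_base is 16384 in both cases, so at this resolution fmaps is not the culprit.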

In addition, when loading the pre-trained model, the function copy_params_and_buffers silently ignores parameters in the pre-trained model that have no counterpart in the new model, without reporting the mismatch.

stylegan2-ada-pytorch/torch_utils/misc.py, lines 153 to 160 (commit 6f160b3)
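The silent skip is easier to see with a toy model. The sketch below mimics only the name-matching behaviour of copy_params_and_buffers (with require_all=False) on plain dicts instead of tensors, and additionally reports what was skipped. The parameter names produced by mapping_names are illustrative assumptions, and copy_matching is a hypothetical helper, not the repository's function.

```python
# Sketch: mimic the name matching of copy_params_and_buffers on plain dicts.
# Only names are modelled; real tensors and shape checks are left out.


def copy_matching(src, dst):
    """Copy values for names present in both dicts and report what was skipped."""
    for name in dst:
        if name in src:
            dst[name] = src[name]
    ignored = sorted(set(src) - set(dst))    # in the checkpoint, absent from the new model
    untouched = sorted(set(dst) - set(src))  # in the new model, left at its fresh init
    return ignored, untouched


def mapping_names(num_layers):
    """Hypothetical parameter names for a mapping network with num_layers FC layers."""
    return [f"mapping.fc{i}.{p}" for i in range(num_layers) for p in ("weight", "bias")]


if __name__ == "__main__":
    checkpoint = {n: "pretrained" for n in mapping_names(8)}  # trained with map=8
    model = {n: "random" for n in mapping_names(2)}           # built with map=2 (--cfg=auto)
    ignored, untouched = copy_matching(checkpoint, model)
    print(f"copied {len(model) - len(untouched)} tensors, silently ignored {len(ignored)}")
    print("first ignored:", ignored[:4])
```

Here only fc0 and fc1 are loaded and the 12 tensors of fc2 to fc7 are dropped, so the generator sees a w that the pre-trained synthesis network was never trained on. As I read misc.py, require_all=True only checks the opposite direction (names in the new model missing from the checkpoint), so raising when `ignored` is non-empty would be an extra check you would have to add yourself.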


@JackywithaWhiteDog Hi. Where did this checkpoint come from? I could only find a 256x256 pre-trained checkpoint in the transfer-learning folder, and its URL is different from the one in this issue.

Hi @githuboflk, sorry I didn't notice your question earlier. Like you, I used the pre-trained checkpoint provided in the README.

However, I think the checkpoint in this issue is available on the NVIDIA NGC Catalog.