|

Note

|

db-Objekts is currently in alpha. The API is subject to change and not ready for production.

Starting from release 0.4.3.-alpha, deprecated API behavior will be marked as such and become breaking from the first beta. These changes will however be minor and should not deter you from trying the software in its current state.

At present, it supports H2, MariaDB, MySQL and PostrgreSQL.

A beta is planned for early 2023 and a first stable release later that year. Roadmap

|

db-Objekts is a Kotlin library to query relational databases. It uses generated metadata objects in a DSL that produces type-safe and fluent queries while the engine takes care of join syntax and type conversions. The project was conceived by Jasper Sprengers, a software developer living in the Netherlands. Contact

In short, db-Objekts lets you do things like this:

transaction.select(Employee, Address, EmployeeAddress.kind, Country.name)

.where(Employee.id.eq(empId)).asList().forEach {

(emp, add, addType, country) ->

println("${emp.name}'s $addType address is ${add.street} ${add.postcode}, $country")

//Example: John's HOME address is Zuidhoek 80 3082TR, Nederland

}Or get the names of all employees who have more than one certificate:

val row: Tuple2<String, Long> = transaction.select(Employee.name, Certificate.name.count())

.having(Aggregate.gt(1))

.asList()db-Objekts utilizes the power and convenience of your IDE’s auto-complete as much as possible.

So, how is this different from other offerings, notably object relational mapping (ORM)?

db-Objekts centers around queries and static metadata, not stateful managed entities. ORMs abstract away tables and foreign keys. This works fine at the level of individual entities, but makes batch performance at scale hard. They are neither lightweight nor easy to master in-depth. Queries in db-Objekts always result in a single SQL statement and are easier to debug.

Other DSL alternatives target similar use cases, so the similarities seem obvious at first glance. I want to stress the design decisions that drove this project because I believe the details matter.

-

CRUD queries follow the same fluent syntax. Your IDE’s autocomplete helps you every step of the way.

-

Metadata objects are auto-generated from the db. No manual maintenance is required.

-

Made for Kotlin: all query parameters and results are type-safe and null-safe. In the world of databases, null is significant.

-

Gentle learning curve when your needs are simple, and highly customizable if you want it.

In striking a balance between feature-completeness and usability, db-Objekts always favors the latter. It aims to cover 80% of query needs with 20% of the complexity. It does not emulate all you can do in native SQL, although it facilitates native queries as well.

Feel free to start with the xref:_getting_started if you can’t wait to try it out, or take a few minutes for a quick overview of the major features.

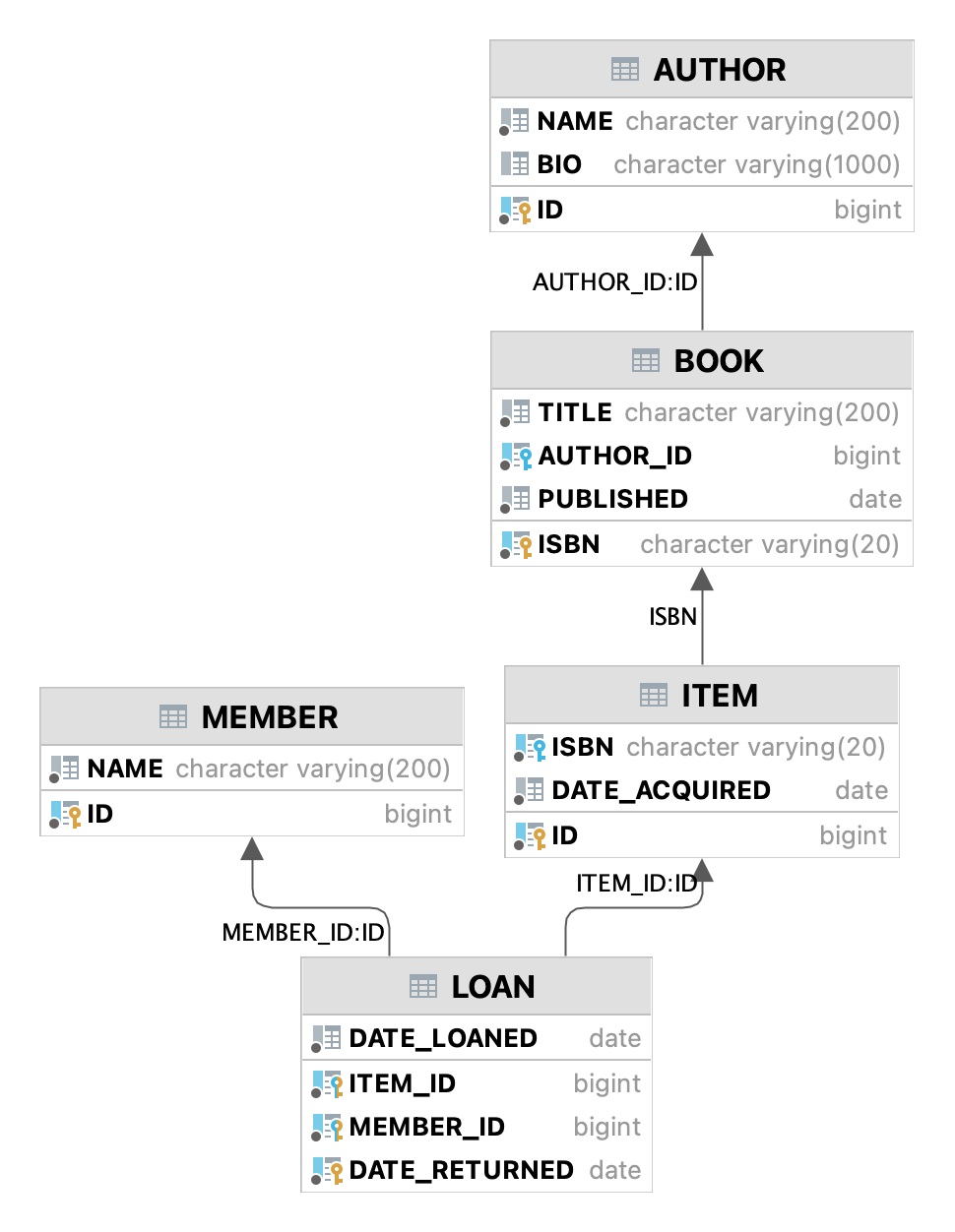

We have a simple in-memory H2 database that models a lending library with five tables:

The first step is to create our metadata objects. You do this at the outset and whenever the db structure changes.

val generator = CodeGenerator()

.withDataSource(datasource = H2DB.dataSource)

generator.outputConfigurer()

.basePackageForSources("com.acme.dbobjekts")

.outputDirectoryForGeneratedSources(Paths.get("src/gen/kotlin")

.toAbsolutePath().toString())

generator.generateSourceFiles()This bare-bones setup produces a package com.acme.dbobjekts in the gen source folder. We now have Book, Author, Loan,Item and Member source files, which correspond to the tables. The CatalogDefinition object ties the schema(s) and tables together.

Per application, you also configure a TransactionManager, which takes a javax.sql.DataSource and the CatalogDefinition that was just generated. We use an in-memory H2 database.

val dataSource = HikariDataSourceFactory.create("jdbc:h2:mem:test","sa",null)

val transactionManager = TransactionManager.builder()

.withCatalog(CatalogDefinition)

.withDataSource(dataSource).build()The TransactionManager hands out Transaction instances and manages their life cycle. These wrap a short-lived javax.sql.Connection. You use the following syntax to get a Transaction reference.

val resultOfQuery = tm.newTransaction { tr->

//execute your query/queries here

}We’ll stick to tm for TransactionManager and tr for Transaction throughout this documentation.

The signature of newTransaction is fun <T> newTransaction(function: (Transaction) → T): T. The invoke operator does the same, so to select the isbn column from all books you can also write:

val books: List<String> = tm { it.select(Book.isbn).asList() }That was your first query. Now let’s add an author, book title and member.

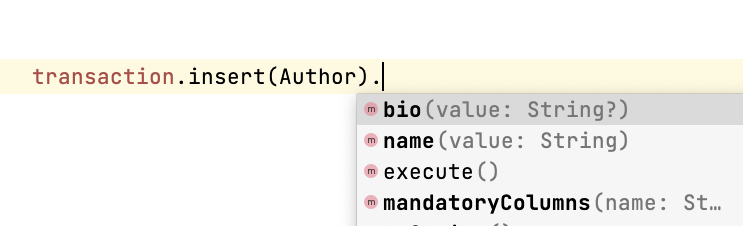

// Author has an auto-generated primary key, which is returned by execute()

val orwell: Long = tr.insert(Author)

.mandatoryColumns("George Orwell").execute()

// The book key (isbn) id is not auto-generated. In this case execute() returns 1.

tr.insert(Book)

.mandatoryColumns("ISBN-1984", "Nineteen-eighty Four", orwell, LocalDate.of(1948,1,1))

.execute()

val john = tr.insert(Member)

.mandatoryColumns("John").execute()-

The

mandatoryColumns(..)call is a convenience method to supply values for the non-nullable columns (except auto-generated primary keys, which are always read-only). They are available as distinct setter methods on the builder. -

When the table in question has an auto-generated id, it is returned as a

Long.

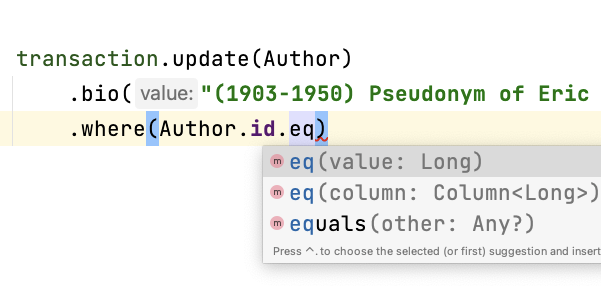

Let’s update the Orwell record with an author bio. Notice the use of the where clause. Common sql operator symbols (=,<,>,!=) have textual counterparts eq, lt, ne, etc:

tr.update(Author)

.bio("(1903-1950) Pseudonym of Eric Blair. Influential writer of novels, essays and journalism.")

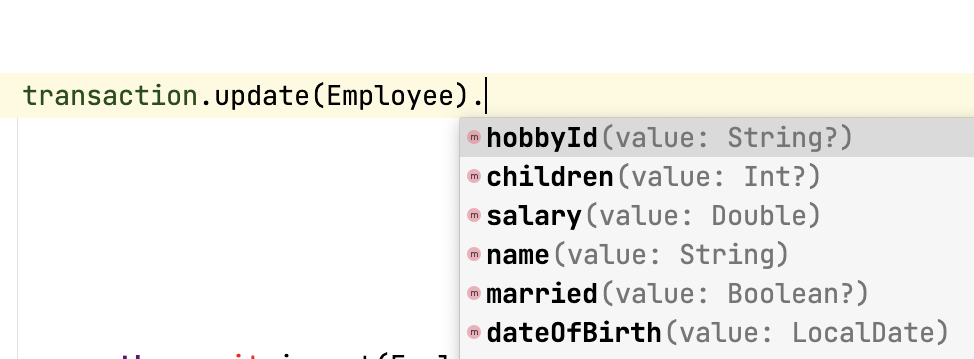

.where(Author.id.eq(orwell))Notice the power of autocomplete. You don’t need to memorize which columns are in a table, which Kotlin types they take and whether they may be null. It’s right in front of you.

Add a physical copy of the book and a loan record.

// copy was acquired in 1990

val itemId = tr.insert(Item)

.mandatoryColumns("ISBN-1984", LocalDate.of(1990,5,5))

.execute()

//John takes out the copy of 1984

tr.insert(Loan).mandatoryColumns(memberId = john,

itemId = itemId,

dateLoaned = LocalDate.now()).execute()We want a list of all titles and their authors. This is what a select query in db-Objekts looks like:

val bookAuthors: List<Tuple2<String, AuthorRow>> =

tr.select(Book.title, Author).asList()Note that there’s no from clause. If db-Objekts can figure out the foreign key links from the columns provided in select(..), you can omit it. You can confgure left and right outer joins manually.

asList() terminates the statement and returns a list of type-safe tuples that correspond to the number and types of the columns provided.

You can supply individual columns or an entire table in the select clause (similar to book.* in native sql). Each Table subclass has a stateful, immutable data class (AuthorRow in this case) which contains the values of a single row. Later we will use these same row objects for updates and inserts.

Let’s take it up a notch. This query involves all five tables and returns List<Tuple5<LocalDate, Long, String, String, String>>. Since all Tuple* classes are data classes, you can deconstruct them into a more readable output

// the type returned is List<Tuple5<LocalDate, Long, String, String, String>>

tr.select(Loan.dateLoaned, Item.id, Book.title, Author.name, Member.name).asList()

.forEach { (dateLoaned, item, book, author, member) ->

println("Item $item of $book by $author loaned to $member on $dateLoaned")

//"Item 1 of Nineteen-eighty Four by George Orwell loaned to John on 2022-12-23"

}Native SQL queries are also possible, using the same convenient type-safe tuples:

val (id, name, salary, married, children, hobby) =

tr.sql(

"select e.id,e.name,e.salary,e.married, e.children, h.NAME from core.employee e left join hr.HOBBY h on h.ID = e.HOBBY_ID where e.name = ?",

"John"

).withResultTypes()

.long()//refers to employee.id

.string()//refers to employee.name

.double()//refers to employee.salary

.booleanNil()//refers to employee.married

.intNil()//refers to employee.children

.stringNil()//refers to hobby.name, possibly null because it's an outer join

.first()This concludes our bird’s eye view of db-Objekts. Check out QueryOverviewComponentTest to get you going.

There is much more to explore in the following sections, so let’s dig in!

Get the latest release from Maven central

The sub module db-objekts-spring-demo contains examples from this section and is a good starting point to get you going.

The main jar is com.db-objekts:db-objekts-core, and you also need a vendor-specific implementation. Since they all depend on core, just add the vendor-specific dependency to your maven or gradle file configuration.

<dependency>

<groupId>com.db-objekts</groupId>

<artifactId>db-objekts-mariadb</artifactId>

<version>...</version>

</dependency>There is no transitive dependency on a JDBC driver, as this is most likely already on the classpath. If not, you must add it explicitly.

For a Spring Boot setup you create a Bean for your TransactionManager (provided a DataSource is already configured).

// Call the method something other than transactionManager(), or it will clash with the one in org.springframework.transaction

@Bean()

fun dbObjektsTransactionManager(dataSource: DataSource): TransactionManager {

return TransactionManager.builder()

.withDataSource(dataSource)

.withCatalog(CatalogDefinition)

.build()

}Now you can inject the TransactionManager and you’re ready to query. This examples assumes you have already generated the metadata objects (Employee in this case), which we’ll cover in the next section.

@Service

class DataService(val transactionManager: TransactionManager) {

fun getAllEmployees(): List<EmployeeRow> {

return transactionManager {

it.select(Employee).asList()

}

}

}Before we dive into the details of code generation, some clarification is in order.

Generating code is an established practice to implement service specification. You can create richly annotated interfaces from an openapi.yaml file that specify REST endpoints and the expected messages. By implementing these interfaces you create a compile-time dependency on the generated code.

db-Objekts is similar in that its generated metadata objects become tightly coupled to the business source code. If the db structure changes, the (re)generated code must also change. This may introduce compiler errors in the application code that uses it. That is not a bad thing, because the database is already an integral part of the application logic, in whatever way you interact with the database. If you only use raw SQL in your code, structural changes to the db go unnoticed unless you have extensive integration tests (unit tests won’t catch it). Otherwise, defects pop up only in production. Not good.

When a component implements a service, it often owns the specification (or rather the team does). Such files belong to the source repository and since you manage them, it’s fine to re-generate the code whenever you do a fresh build .

A database creation script serves a similar purpose as an openapi.yaml file. However, the difference is often one of ownership: your project may not own the db. Even if you create a containerized db from a dump file, it matters whether that file is the single source of truth. If not, unannounced changes may mess up the status quo. So, we need regular and automatic validation.

When the generated code is used by application code, it makes sense to compare the current db structure to the generated metadata before you overwrite anything. The following practices are recommended:

-

Always write generated code to a separate source folder, called

genorgenerated-sources. Never alter this code manually. All the tweaks you need are possible through configuration of theCodeGenerator. -

Put the generated kotlin sources under version control — yes, even though they are generated. Remember, the state of the database may not be under your control and you must be able to revert unexpected changes.

-

Use an automated test as part of the regular test cycle to validate the database against the generated sources. This should take place in the test phase, not the generate-sources phase. See MariaDBIntegrationTest for an example.

With this in mind, let’s have a detailed look at the process. CodeGenerator is our port of call for the entire process. CodeGenerationComponentTest has a comprehensive example.

Configuration consists of the following:

-

Mandatory

DataSource. -

Optional configuration for exclusions.

-

Optional configuration for mapping column types to SQL types and using custom types for specific columns

-

Optional configuration for setting the sequence names for auto-generated keys.

-

Mandatory configuration of the output

We’re making the code generation part of the standard test phase and include a component test for it.

class CodeGenerationAndValidationTest {

@Test

fun validate(){

val generator = CodeGenerator()

}

}First you need to set up the DataSource. Make sure the user has sufficient privileges to read the relevant metadata tables (INFORMATION_SCHEMA in MySQL/MariaDB)

val generator = CodeGenerator().withDataSource(myDataSource)Sometimes the database has columns, tables, or even entire schemas that are not relevant to the application’s business logic. A typical example is read-only audit columns that are populated by triggers.

We don’t want these in the generated code, and here’s how you keep them out:

generator.configureExclusions()

//any column with the string 'audit', in any table or schema

.ignoreColumnPattern("audit")

//all 'date_created' columns in any table or schema

.ignoreColumn("date_created")

//skip the entire finance schema

.ignoreSchemas("finance")

//ignore the table country, but only in the hr schema

.ignoreTable("country", schema = "hr")|

Note

|

Vendor-specific system schemas like sys, mysql or information_schema are already ignored. No need to exclude them explicitly.

|

db-Objekts chooses a suitable implementation of Column, depending on the db type (e.g. CHAR(10) or INT(6)). There is a Column class to represent every possible flavor of values that you can read and write through the JDBC API: all the numeric primitives, booleans, byte arrays and date/time types. But also vendor-specific types are possible, for UUIDs or geographical data. You find them in the metada/column package.

Sometimes you want to fine-tune this mapping. For example: in MySQL a TINYINT(1) is mapped to a Byte by default, but as it is often used as a boolean value (with 1 or 0), it’s more convenient to map it to Boolean. Another scenario is when you create a custom type to represent a String value by a business enum, e.g. your own AddressType.

Another common scenario is using a business enum for a limited range of values. The permissible range can be hardcoded in the schema through an enum type, or expressed as a character or integer numeric type, in which case it is mapped to the enum literal name, or its ordinal value, respectively. In this example the employee_address.kind column will be mapped to an enum. db-Objekts detects that the underlying column type is varchar, so it will store the values as HOME and WORK. Had the column been numeric, addressType values would be stored as 0 or 1.

generator.configureColumnTypeMapping()

.setEnumForColumnName(column = "kind", table = "EMPLOYEE_ADDRESS", enumClass = AddressType::class.java)While custom mapping are usually optional, sometimes you have to provide one. PostgreSQL, for example, supports enumeration types which are represented in the metadata as gender or payment_type. Naturally the default mechanism cannot help you out there.

db-Objekts iterates through a list of ColumnTypeMapper instances. These receive the metadata for a given column in a ColumnMappingProperties object and match it to an appropriate Column, or null if the mapping does not apply.

db-Objekts tries you custom mappings in order of registration to find a match, and then defaults to the vendor specific mapping, which has a mapping for every SQL type in the database, like MariaDBDataTypeMapper. Do have a look at that file: it will make the mechanism clear.

Here’s how to override the default mapping of TINYINT to a numeric type and use a Boolean instead.

setColumnTypeForJDBCType takes the SQL type and the class of the appropriate Column. com.dbobjekts.metadata.columnNumberAsBoolean takes care of storing a Boolean value as an integer zero or one.

generator.configureColumnTypeMapping()

.setColumnTypeForJDBCType("TINYINT(1)", NumberAsBooleanColumn::class.java)

// when you vendor (like PostgreSQL) supports custom column types

generator.configureColumnTypeMapping()

.setColumnTypeForJDBCType("gender", GenderColumn::class.java)db-Objekts lets you write your own Column implementations. This can be useful to:

-

use a business enum instead of an integer or character value, e.g. an

AddressTypeAsStringColumnto map to your customAddressTypeenum. -

add extra validation or formatting to a column, e.g. a

DutchPostCodeColumn. -

cover up poor database design decision, for example a CHAR column which is treated as a Boolean with Yes/No and inconsistent lower/upper case in the values.

See the advanced section for details.

generator.configureColumnTypeMapping()

.setColumnTypeForName(

table = "EMPLOYEE_ADDRESS",

column = "KIND",

columnType = AddressTypeAsStringColumn::class.java)The AddressTypeAsStringColumn is a custom specialization of EnumAsStringColumn<AddressType>.

While the above strategies should be sufficient for most cases, it’s possible that you need even greater control. For this, register your own implementation of api.CustomColumnTypeMapper<C : NonNullableColumn<*>> and override its single abstract method:

abstract operator fun invoke(properties: ColumnMappingProperties): Class<C>?The properties argument provides metadata about the db column (schema, table, name, nullability and its vendor-specific db type). Judged on these data you then return a class reference to a subclass of NonNullableColumn. db-Objekts will create an instance with the correct table reference and column name. If the column in question is nullable, it picks the nullable counterpart.

Many vendors support sequences for generating primary keys, but the information schema does not store which sequence is used for which table. So, unfortunately, you have to configure this manually, as follows:

generator.configurePrimaryKeySequences()

.setSequenceNameForPrimaryKey("core", "employee", "id", "EMPLOYEE_SEQ")This is cumbersome with a hundred tables to configure. If you have a consistent naming scheme, you can write your own implementation of SequenceForPrimaryKeyResolver

generator.mappingConfigurer()

.sequenceForPrimaryKeyResolver(AcmeSequenceMapper)

object AcmeSequenceMapper : SequenceForPrimaryKeyMapper {

//every column offered is a numeric primary key. No need to check this explicitly

override fun invoke(properties: ColumnMappingProperties): String? =

properties.table.value + "_SEQ"

}db-Objekts uses the following rules to convert schema, table and column names to common JVM patterns:

-

Snake case is converted to camel case:

employee_tablebecomesEmployee.corebecomesCore. -

Schema/table names are always capitalized:

Employee -

Column names are always lower camel case:

addressId -

Any name that is a reserved Java/Kotlin keyword or platform class (e.g. fun, public, true, String, Boolean) is rejected, because generated code would not compile. Since keywords are case-sensitive,

Publicis allowed — which is a common name for the default schema. -

Table names must be unique across schemas.

Because of the last two rules it may be necessary to set an explicit name mapping for a table or column. Another reason could be that the naming scheme in the db is inconsistent or confusing, and you want a clean metamodel. For this purpose there’s the configureObjectNaming() option.

In this example there’s a database with all tables and columns in Dutch, and there are two employee tables in separate schemas.

Now you can do select(Employee.dateOfBirth) without learning Dutch first.

generator.configureObjectNaming()

//Sets the name of the Kotlin object (why one would ever call a schema 'string' is not the point here)

.setObjectNameForSchema(schema = "string", objectName = "StringSchema")

.setObjectNameForTable(schema = "core",table = "werknemer", objectName = "Employee")

.setObjectNameForTable("hr","werknemer", "HrEmployee")

//sets the field name to dateOfBirth in the Employee object

.setFieldNameForColumn(schema = "core", table = "werknemer", column = "geboorte_datum", fieldName = "dateOfBirth")That was a lot of information! Don’t worry, we’re almost done. To produce the metadata, CodeGenerator only needs to know where to put things.

This example points to src/generated-sources/kotlin in your project root and creates a package tree com.dbobjekts.testdb.acme under it.

In this package will be a CatalogDefinition.kt kotlin object with subpackages for each schema, which contain one Schema object and a Table object for each table in the schema.

generator.configureOutput()

.basePackageForSources("com.dbobjekts.testdb.acme")

.outputDirectoryForGeneratedSources(Paths.get("src/generated-sources/kotlin").toAbsolutePath().toString())|

Note

|

The root folder for the generated sources specified in outputDirectoryForGeneratedSources must exist. All its content will be recursively cleared upon each code generation run. So don’t store keep code there that is not auto-generated by the mechanism.

|

Now you’re set to produce your code, like so.

generator.generateSourceFiles()If all is well, you now have a bunch of files and packages under the designated source folder, ready to be used for querying.

However, after you have done your first code generation run, we need to build in validation to ensure there are no unexpected db changes in the future. We want to do a regular dry-run of the code generation and compare the output to the current state of the metadata. If there are no differences there is no point to overwrite the generated source files. And if there are differences you probably want to inspect them first.

generator.validateCatalog(CatalogDefinition).assertNoDifferences()This call runs the code generation without writing anything to file. It compares the results to the target CatalogDefinition (that would normally be overwritten). Now, if the employee table suddenly has a non-null column shoe_size added to it, the assertion will throw with DB column EMPLOYEE.SHOE_SIZE not found in catalog. If you want to inspect the differences manually, you can:

val differences : List<String> = generator.validateCatalog(CatalogDefinition).differencesIf you have investigated the impact of such changes, you can generate the catalog again and make appropriate changes to the application code, because now the Employee metadata object has an extra mandatory column and calls to mandatoryColumns will have compiler errors.

Or would you rather fix it in production?

The next section is all about writing queries. For that, you need a reference to a TransactionManager.

You already met the TransactionManager briefly. It contains a javax.sql.DataSource, which manages connections to the db-server, authentication, pooling and creating short-lived javax.sql.Connection objects. These details are abstracted away. Live connections are wrapped by a Transaction. This acts as a builder factory for queries.

You only need a single TransactionManager for each DataSource per application, so it makes sense to create it centrally and make it available through dependency injection. Since a TransactionManager is stateless, there is no harm in assigning it to a singleton: different threads can use the same instance.

The static call to TransactionManager.builder() returns a builder with configuration methods for the CatalogDefinition and the DataSource.

val transactionManager = TransactionManager.builder()

.withCatalog(CatalogDefinition)

.withDataSource(someDataSource)

.build()You must always supply a DataSource. The catalog is mandatory if you query with metadata objects, and optional if you only use native sql queries.

There is a third, optional method if you want complete control over the way Connection objects are obtained from the DataSource: withCustomConnectionProvider.

TransactionManager.builder()

[..]

.withCustomConnectionProvider { ds: DataSource ->

val conn = ds.connection

conn.autoCommit = autoCommit

conn

}In this example you override the default setting for autocommit, which is usually configured at the level of the DataSource.

Transaction is the primary class you interact with and here’s a short overview of what it offers.

-

Methods to start a query

-

selectto start a select query -

insertto start an insert query -

saveto persist a stateful`TableRowData` object -

updateto start an update query -

deleteFromto start a delete query -

sqlto start a native sql query.

-

-

Low-level control of the underlying

java.sql.Connectionwithcommit,rollbackandclose. You should not need these often. -

Inspect detailed execution results with

transactionExecutionLog()

Every query against db-Objekts is executed through a call to TransactionManager.newTransaction or its shortcut invoke method. This takes a lambda that provides a fresh Transaction object. In the body of the lambda you execute queries. The TransactionManager then commits the underlying Connection and returns whatever was returned by the lambda.

val verboseForm: List<BookRow> = tm.newTransaction { tr: Transaction -> tr.select(Book).asList() }

val shortForm: List<BookRow> = tm { it.select(Book).asList() }If the lambda throws an Exception, a roll-back is attempted, depending on the autocommit setting of the session. Some data may have been successfully persisted. You can find a comprehensive example in TransactionLifeCycleComponentTest.

The Transaction is a short-lived object that should never leave the scope of its lambda. Don’t assign it to a variable outside that scope. Its lifecycle is no longer be managed and the underlying Connection will go stale.

Let’s explore the query methods of the Transaction: inserting, updating, deleting, selecting and native sql. Do look at the component tests, which are linked in every section and act as living documentation.

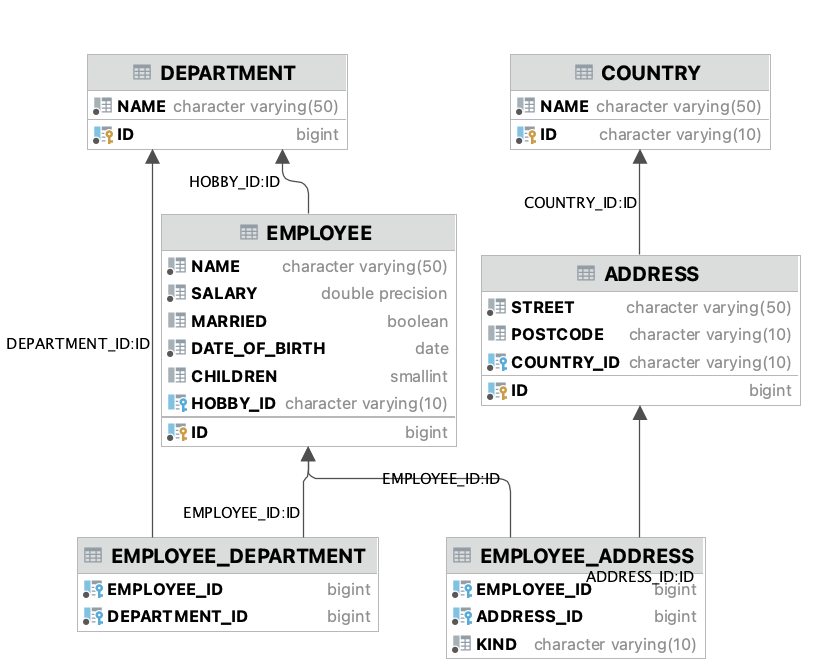

For the next examples we have a more meaty test database which has tables in a core and hr schema, cross-schema relationships, and many-to-many columns to link employees to addresses and departments, and distinguish work and home addresses in the employee_address.kind column.

The insert(..) method takes a Table implementation and returns a corresponding builder instance on which to set values. InsertStatementComponentTest

Insert builders contain setter methods for all columns. In addition, they have a mandatoryColumns(..) convenience method (provided the table has at least one non-nullable column) to make sure you provide all the required values.

transaction.insert(Country).mandatoryColumns("nl", "Netherlands").execute()

val petesId: Long = transaction.insert(Employee)

.mandatoryColumns("Pete", 5020.34, LocalDate.of(1980, 5, 7))

.married(true)

.execute()-

The

Countryobject has two mandatory columns and no auto-generated key. Theexecute()method returns the value of the JDBC callPreparedStatement.executeUpdate(), which is 1 for a successful insert. -

The

Employeetable has four mandatory columns. The optionalmarriedproperty is supplied in a setter method. The table has a generated primary key, which is returned by theexecute()method.

For each stateless Kotlin object that represents a db table there is also a corresponding stateful, immutable data class to represent a single row of data, called TableRow They are useful for retrieving all columns in a table, but you can also use them to insert data.

val row = EmployeeRow(

name = "John",

salary = 300.5,

married = true,

dateOfBirth = LocalDate.of(1980, 3, 3),

children = 2,

hobbyId = "chess"

)

val johnsId = tr.insert(row) //immediately executes and returns the auto-generated IDIt’s your own responsibility to make sure all the mandatory columns have non-null values.

The save(..) method does the same. Read more.

|

Note

|

About auto-generated primary keys. EmployeeRow has a non-null id field, which is auto-generated, hence unavailable until after the insert(…) call. Making such numeric PKs nullable (Long? or Int?), might make sense for inserts, but means ugly !! when the id is guaranteed to be non-null, after a fetch. The compromise is to provide a default value of zero. db-Objekts assumes that zero is equivalent to null for generated numeric keys. You should never set such ids to a positive value. It will be rejected in an insert() statement.

|

Let’s move on to select statements. These consist of the following parts:

-

At least one column or data row reference in the

select(..)call. -

An optional

from(..)clause for when you need custom join syntax. -

An optional

where(..)clause to constrain the selection. -

An optional

orderBy()orlimit()clause. -

An optional

having(..)clause when you use an aggregated column. -

Finally, a call to

asList()orfirst[OrNull]()executes the statement and returns the result.

This query selects name and salary for all rows in the employee table. The result is always a Tuple* object that corresponds in size and type to the columns you specified in the .select(..) call.

val asList: List<Tuple2<Long, String>> = it.select(e.id, e.name).asList() // potentially empty

val asOption: Tuple2<Long, String>? = it.select(e.id, e.name).firstOrNull() // None if no row can be retrieved

val singleResult: Tuple2<Long, String> = it.select(e.id, e.name).first() //Will throw an exception if no row can be foundNotice we use the 'e' alias from the generated Aliases object. This is a handy shortcut that refers to the same Employee object. It implements a corresponding HasAliases interface, so you can import all the shortcuts in one go, using delegation.

class SelectStatementComponentTest : HasAliases by AliasesSince all Tables are singleton objects, you can also define your own shortcuts as class members: val emp = Employee.

The Employee and Address tables are linked via the EmployeeAddress table in a many-to-many fashion. Since the foreign key relations are explicit in the source code, db-Objekts can build the joins for you:

transaction.select(e.name, e.dateOfBirth, e.children, e.married).where(Address.street.eq("Pete Street")).asList()We can select from the Employee table with a constraint on the Address table, without specifying the join. This mechanism saves you much typing, but comes with limitations:

-

There must be an explicit foreign-key relationship between the tables referenced in either the

selector thewherecalls, or there must be a many-to-many join table that links two tables referred in your query, like in the above example. -

By default, joins are inner joins. Using outer joins is possible, but comes with some caveats. Check the dedicated section.

|

Note

|

How can db-Objekts figure out the parameterized Tuple* return type from the arguments to select()? By having 22 overloaded versions of select(). Since you can also retrieve an entire table row as a single Selectable, that should be more than enough. The number 22 is inspired by Scala, which supports built-in Tuples up to that number.

|

A call like select(Employee.name, Country.name) is a bridge too far. db-Objekts cannot figure out that it needs address and employee_address. In that case you need to specify the joins manually. Call the from(..) method with the driving table of your selection, and add the tables to be joined as follows:

transaction.select(e.name, c.name)

.from(Employee.innerJoin(ea).innerJoin(Address).innerJoin(Country))

.where(ea.kind eq "WORK")

.asList()The table provided in the innerJoin() must have an explicit foreign key relationship with its parent, so there’s no need to specify the columns. This resolves to the following SQL (we’ll look at left/right outer joins later).

FROM EMPLOYEE e JOIN EMPLOYEE_ADDRESS ae on e.id = ae.employee_id

JOIN ADDRESS a on a.id = ae.address_id

JOIN COUNTRY c on c.id = a.country_idAs long as there is an explicit foreign key relationship between parent and child, there is no limit to the number of tables you can involve in the join chain. Consider this example from MariaDBNationsIntegrationTest, which needs two intermediate tables to join up the target column

tr.select(Continents.name, CountryStats.population.sum())

.from(CountryStats.innerJoin(Countries).innerJoin(Regions).innerJoin(Continents))

.asList()You already saw asList(). There are five more methods available to collect the results from a select or native sql query.

-

asList() : List<T>fetches the entireResultSetinto an immutableList, which can be empty. -

first(): Tfetches the first result in theResultSet, throwing a [StatementExecutionException] when there are no results. -

firstOrNull(): T?fetches only the first result in theResultSet, which may be null. Note that when you usefirstOrNull()to fetch a single nullable column and it returns null, there is no way to distinguish between a null value and an empty result set. In that case, usefirst()and catch the exception. -

asSlice(skip: Long, limit: Long): List<T>iterates through theResultSet, skipping the first skip rows and collects a maximum of limit rows to a list. -

forEachRow(): Unititerates through the results with a custom predicate. -

iterator(): ResultSetIterator<T>lets you fetch and process each row one by one

When you execute a select statement, db-Objekts pulls all results into a list structure, which add to the JVM heap. This can become problematic.

forEachRow() lets you inspect the Resultset row by row through a custom predicate. Return false to stop further retrieval.

tr.select(e.name).orderAsc(e.name).forEachRow({ rowNumber, row ->

// handle the row result any way you want

//there could be many more rows in the resultset, but we stop fetching after two

rowNumber <= 2

})|

Warning

|

Do not use asSlice() in conjunction with a limit() clause, as this will probably lead to confusing results. A limit clause is executed on the database side, whereas the limit parameter of the asSlice method determines the maximum number of rows to fetch after skipping an initial number. asSlice can be used as a paging mechanism, but it is still less efficient than a combination of a greater-than operator and a limit clause.

|

You can further tweak selection results with the orderBy and limit(..) methods. This example orders all employees by salary (highest first), then by name (A-Z), and retrieves the first ten rows.

tr.select(e.name).orderDesc(e.salary).orderAsc(e.name).limit(10).asList()Note that these constraints are executed server-side, as they are part of the SQL. db-Objekts takes care of the proper syntax, because vendors handle retrieval limits differently.

When a query refers to columns whose tables are linked through foreign key relationships, then db-Objekts can figure out the correct join syntax. Consider the hobbyId field in the Employee table metadata object

val hobbyId = OptionalForeignKeyVarcharColumn(this, "hobby_id", Hobby.id)This is enough information to create the necessary join.

FROM EMPLOYEE e LEFT JOIN HOBBY H on e.id = h.employee_idThis mechanism also works when you reference employee and address, that are connected through the many-to-many table emmployee_address.

This section deals with scenarios where automatic discovery is not possible or desired. When it comes to join syntax, there are three options:

-

Automatic join discovery: only possible when all relevant tables are referenced either in the

select()or thewhere()clause and they have explicit foreign key relationships. -

Semi-manual

from()clause. You specify the tables to join, but db-Objekts can figure out which columns to use for the join. -

Fully manual

from()clause. You specify both the tables and the columns to join, when db-Objekts cannot reliably determine this automatically.

Recall the previous query tm.select(Employee.name, Hobby.name), which does an inner join and only returns results where a hobby record is linked to an employee. If we want all employee records, what we need is a left outer join.

tr.select(Employee.name, Hobby.name).useOuterJoins()The method useOuterJoins instructs the standard join mechanism to use left outer joins instead of inner joins. Alternatively, you can build the join chain yourself. This gives greater control when there are more tables involved, because maybe not everything should be an outer join.

But the above code will fail at runtime. Hobby.name is an instance of a non-nullable VarcharColumn, which demands a String data type. But when there are no matches, it reads a null from the database and slaps you with an exception, because the query needs to return Tuple2<String,String> and cannot put a null in the second element.

The fix is to use the nullable counterpart if a non-null column can return null as the result of an outer join. Each non-nullable column implementation has a nullable counterpart for this purpose. Now the return type of the query will be Tuple2<String,String?>.

tr.select(Employee.name, Hobby.name.nullable).useOuterJoins()The previous query could also be written as follows:

tr.select(Employee.name, Hobby.name).from(Employee.leftJoin(Hobby))The join() method takes a JoinChain, which is created from a Table object and at least one call to innerJoin(), leftJoin() or rightJoin().

A more typical use of an explicit join would be the following:

tr.select(Employee.name, Country.name).from(Employee.innerJoin(EmployeeAddress).innerJoin(Address).innerJoin(Country))employee and country are linked through two intermediary tables (employee_address and address). That’s not enough information to join the tables together, so you need to lend a hand. But since the metadata objects contain enough information about the relevant columns to use in the join, you can omit those.

If there is no foreign key relationship between two tables in the metadata, you need to provide the relevant join columns yourself. The on() clause takes a clause of column.operator(column)]. It’s the same syntax you will meet in the next section about the where clause.

val manualJoin =

tr.select(Employee, Address.street, EmployeeAddress.kind, Hobby.name.nullable)

.from(Employee

.innerJoin(ea).on(ea.employeeId.eq(e.id))

.innerJoin(a).on(ea.addressId.eq(a.id))

.leftJoin(h).on(e.hobbyId.eq(h.id))

).asList()

//in this example there is a composite foreign key between parent and child on the name and address column. db-Objekts does not handle composite foreign keys automatically.

tr.select(Parent,Child).from(Parent.innerJoin(Child).on(p.name.eq(c.name).and(p.address).eq(c.address))).asList()Updates, selects and deletes are executed against a range of database rows that satisfy certain criteria. These criteria are expressed in the where-clause.

The canonical form of the where clause is statement.where(column .. operator .. [value, otherColumn] [and|or] … ) which is analogous to normal SQL usage.

where(Employee.name.eq("Janet"))

where(Employee.dateOfBirth.gt(LocalDate.of(1980,1,1)))These are the available operators.

-

eq: is equal to. A call with a null argument delegates toisNull() -

ne: is not equal to. A call with a null argument delegates toisNotNull() -

gt: is greater than. Comparison operators can be used with numeric as well as date/time and char types -

lt: is less than. -

gte: is greater than or equal. -

lte: is less than or equal. -

within: is within a range of values. -

notIn: is not within a range of values. -

startsWith: become LIKE '%?' in SQL -

endsWith: become LIKE '?%' in SQL -

contains: become LIKE '%?%' in SQL -

isNull: becomes IS NULL in SQL -

isNotNull: becomes IS NOT NULL in SQL

You can chain conditions using and or or. Nested conditions are also possible

where(e.married.eq(true)

.or(

e.name.eq("John").or(e.name).eq("Bob"))

) // all married people, plus John and BobIf you have no conditions to constrain your selection you can omit the where clause: tr.select(Book).asList()

db-Objekts supports standard SQL aggregate functions COUNT(), SUM(), AVG(), MIN(), MAX(), DISTINCT() and COUNT DISTINCT(). To turn a normal select query into an aggregated one you designate one column in the select() call as the aggregate by calling the appropriate method.

// order departments by their number of employees

it.select(EmployeeDepartment.departmentId.count(), Department.name).orderDesc(ed.departmentId).first()

it.select(e.children.countDistinct()).asList()// returns a list of one, with value 5

// who is the highest earner?

it.select(e.salary.max()

// get the distinct number of children

val kiddos = tr.select(e.children.distinct()).asList()// returns [0,1,2,3,5] for our test data setThe ground rules:

-

Every column supports

count(),countDistinct()anddistinct(), but only numeric columns supportsum(),min(),max(),avg() -

The four numeric aggregators return either a

LongorDouble, depending on whether they operate on floating-point or integer column type. Aggregations on aBigDecimalColumnalways returnBigDecimal. -

Only one column can be designated as an aggregator.

-

A

GROUP BYclause is automatically created over all the non-aggregated columns in the query. You have no further control over this. -

To include the aggregated column in the order by clause, refer to it by its original column reference (see above example)

To put constraints on the values in the aggregated column, you need to supply an optional having() call, which creates a HAVING clause in the resulting SQL.

it.select(e.name, Certificate.name.count()).having(Aggregate.gt(0).and().lt(3))This selects the names of employees who have one or two certificates. db-Objekts supports only the simple use case of restricting a numeric aggregation result. Hence, the options compared to a regular where clause are fewer.

-

You refer to the singleton

Aggregateobject to build the condition chain. You can only restrict the aggregated result, and it has to be numeric. -

Only operators

eq,ne,lt,le,gtandgeare supported. -

You use

and()anor()to chain conditions, but since nested conditions are not supported, mixing them can be confusing.

|

Note

|

Native SQL provides more possibilities with aggregate queries (some of them vendor-specific) than are supported by db-Objekts. As with everything else in this library, it is a conscious design decision to balance usability and complexity. You can always resort to creating a native SQL query if the default behavior doesn’t cut it. |

The update(..) method, like the insert(..), takes a table and returns an *UpdateBuilder. See UpdateStatementComponentTest

transaction.update(Employee)

.salary(4500.30)

.married(null)

.where(e.id.eq(12345))-

You can provide a null to a setter method if the corresponding database column is nullable:

update(Employee).married(null). -

Note that you cannot do that with

salary, because it is non-nullable:.salary(null)will not compile -

The call to

where(…)is always mandatory as it terminates the statement and executes it. If you want to update all rows, use the no-arg versionwhere() -

If your vendor supports it, you can involve other tables in the where clause:

tr.update(Employee)[..].where(Hobby.name.eq("chess)). H2 does not support it.

You can use the data row objects to perform updates, but restrictions apply. db-Objekts needs one or more designated primary keys, because it constructs a clause where(Table.id.eq(pk)). Let’s give John a well-earned raise:

val retrieved: EmployeeRow = tr.select(Employee).where(Employee.id.eq(johnsId)).first()

tr.update(retrieved.copy(salary = retrieved.salary + 100))The row data object retrieved is immutable, so we need to make a copy with an updated salary field. Note that data rows in db-Objekts are very different from managed entities in ORM.

Transaction has a save(..) method that takes TableRowData instances and acts as a convenient delegator to insert() or update(). Its performance depend on the type and state of the primary key(s), so note the following:

-

Rows with a single generated primary key (sequences and auto_increment), are delegated to update or insert depending on a positive or zero value of the key, respectively.

-

For all other primary keys db-Objekts must first check in the database if the primary key already exists before it can update or insert accordingly. If you know beforehand that a row is or isn’t persisted, it is more efficient to invoke update/insert directly and avoid this unnecessary check.

-

Rows without a primary key are delegated to

insert()without any checks.

The statement to delete rows has the fewest options. See DeleteStatementComponentTest

tr.deleteFrom(Employee).where(Employee.id.eq(id))-

The

deleteFrommethod takes a single table or a join (not all vendors supports this:tm { it.deleteFrom(e.innerJoin(Hobby)).where(h.name.eq("chess")) }) -

The call to

where(…)is always mandatory. If you want to delete all rows, use the no-arg version:tr.deleteFrom(Employee).where()

db-Objekts does not try to cover all your query needs with its own DSL. That is a deliberate design decision. It aims to make mundane queries more pleasant and safe, but recognizes that other times you need native SQL. See CustomSQLComponentTest

You can run native SQL queries through the TransactionManager and still get type-safe results. There are two flavors: queries that return results and those that don’t. Both are started with the sql(..) method.

// no arguments, and no results

tr.sql("CREATE SCHEMA if not exists core").execute()Transaction.execute takes a SQL String and a vararg of Any? parameters and is terminated with execute(). The length must match the number of ? placeholders in the query.

// no results, one argument

tr.sql("update core.employee where e.name=?", "john").execute()That was easy. Let’s move to statements that return results.

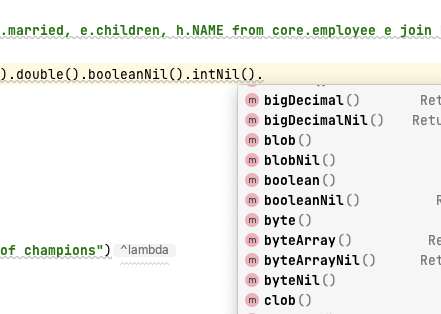

val (id, name, salary, married, children, hobby) =

it.sql(

"select e.id,e.name,e.salary,e.married, e.children, h.NAME from core.employee e join hr.HOBBY h on h.ID = e.HOBBY_ID where e.name = ?",

"John"

).withResultTypes().long().string().double().booleanNil().intNil().stringNil()

.first()The sql method on Transaction works the same as regular select statements when it comes to retrieving parameterized tuples. It has the same methods as select queries on metadata. But instead of providing type information through a list of Column references, you do it through withResultTypes.

This returns a builder with methods for each available standard SQL type in a nullable and non-nullable flavour. String together all the methods you need, call first[orNull](), or asList(), and the output of the custom query will be returned in a type-safe tuple.

Apart from standard column types you can also map retrieved values using a custom column type. For this there are the custom(..) and customNil(..) builder methods.

These come in a nullable and non-nullable flavor and take a reference to the appropriate Column class. Suppose the following query selects two columns which are stored as TINYINT(1) used as a Boolean, and VARCHAR(10) nullable, respectively. We want to read them out as Boolean and our own AddressType.

val rows: List<Tuple2<Boolean, AddressType?>> = it.sql(

"select e.has_children,e.address_type from EMPLOYEE e"

).withResultTypes()

.custom(NumberAsBooleanColumn::class.java)

.customNil(NullableAddressTypeAsStringColumn::class.java)

.asList()Static objects com.dbobjekts.api.[Nil]ColumnClasses gives a handy overview, so you could also write:

.custom(ColumnClasses.NUMBER_AS_BOOLEAN)The custom and customNil methods take a NonNullableColumn and NullableColumn reference, respectively. You can’t go wrong there, as mismatches won’t compile.

db-Objekts creates three main types of metadata that correspond to the database schema:

-

A single

CatalogDefinitionobject with reference to one or more Schemas and a specification of the vendor type. -

A

Schemaobject for each schema in the Catalog. -

One or more

Tableobjects for each table in the schema.

Starting with CatalogDefinition:

object CatalogDefinition : Catalog("H2", listOf(Core, Hr, Library))the Library schema

object Library : Schema("LIBRARY", listOf(Author, Book, Item, Loan, Member))and the Book table (omitting the stuff that’s only for internal use)

object Book:Table("BOOK"){

val isbn = com.dbobjekts.metadata.column.VarcharColumn(this, "ISBN")

val title = com.dbobjekts.metadata.column.VarcharColumn(this, "TITLE")

val authorId = com.dbobjekts.metadata.column.ForeignKeyLongColumn(this, "AUTHOR_ID", Author.id)

val published = com.dbobjekts.metadata.column.DateColumn(this, "PUBLISHED")

}All that is needed to create queries is expressed in the column types: how to create primary keys (auto generated, with a sequence, or manually), whether a type is nullable, and the parent table/column for primary keys.

There is also an Aliases.kt file alongside the CatalogDefinition that contains unique placeholders for each table across all schemas. This contains an interface and implementation that you can import using delegation. This makes the values available to be used in your queries.

class MyRepository : HasAliases by Aliases {

[..]

tm { val rows = it.select(em.id, ad.name).where(ea.kind.eq(HOME)).asList()}

}Aliases are resolved according to the following rules:

-

When the table name is a single word, use the first two letters (unless the table name is only one character);

membersbecomesme. -

When the table name resolves to camel case, take the capital letters:

employee_addressbecomesea. -

In case of naming conflicts, an incremental number is added. If we have three tables

member,measureandmember_exemption, the aliases becomeme,me1andme2, respectively.

|

Note

|

Aliases are created for your convenience and not used by the query engine. You may edit the field names to better suit your taste. Move the file out of the generated directory in that case, as it will be overwritten when you run the generator. |

db-Objekts lets you customize the way values are read from and written to the database. For this you need to know about the Column hierarchy.

Abstract com.dbobjekts.metadata.column.Column<T> is at the basis and has two direct abstract descendants: NonNullableColumn<T> and NullableColumn<T>, parameterized for a certain value type. Implementations must override

abstract fun getValue(position: Int, resultSet: ResultSet): I?

abstract fun setValue(position: Int, statement: PreparedStatement, value: I)getValue deals with ResultSet, setValue with PreparedStatement. For the concrete class VarcharColumn, this looks as follows:

override fun getValue(position: Int, resultSet: ResultSet): String? = resultSet.getString(position)

override fun setValue(position: Int, statement: PreparedStatement, value: String) = statement.setString(position, value)Notice that getValue must always return a nullable result, also for NonNullableColumn, because getter calls on a ResulSet can return null. The base class will cast it to a non-nullable value if desired by the concrete class. You don’t invoke getValue in application code.

The JDBC getter and setter methods cover all the Java primitives, including dates, String, Blob and more esoteric types. For each of these there is a suitable implementation in com.dbobjekts.metadata.column, with a companion Nullable*Column.

This section lists all the column types available in db-Objekts.

For numeric types we have, from small to large

| Column class | value class |

|---|---|

ByteColumn |

Byte |

ShortColumn |

Short |

IntegerColumn |

Int |

LongColumn |

Long |

FloatColumn |

Float |

DoubleColumn |

Double |

BigDecimalColumn |

java.math.BigDecimal |

Then there is String and Booleancustomsql

| Column class | value class |

|---|---|

VarcharColumn |

String |

BooleanColumn |

Boolean |

Byte arrays and large objects:

| Column class | value class |

|---|---|

BlobColumn |

java.sql.Blob |

ByteArrayColumn |

ByteArray |

ClobColumn |

Clob |

Date and time columns. All except LegacyDateColumn convert from/to java.sql.Date to the appropriate java.time.* classes.

| Column class | value class | comment |

|---|---|---|

LegacyDateColumn |

java.sql.Date |

Processes standard JDBC java.sql.Date without conversion |

DateColumn |

java.time.LocalDate |

|

DateTimeColumn |

java.time.LocalDateTime |

|

OffsetDateTimeColumn |

java.time.OffsetDateTime |

|

TimeColumn |

java.time.Time |

|

TimeStampColumn |

java.time.Instant |

And a number of utility columns, most of them abstract, to serve as a basis for custom extensions.

| Column class | value class | abstract? |

|---|---|---|

EnumAsIntColumn |

Enum stored by its |

Yes |

EnumAsStringColumn |

Enum, stored by its |

Yes |

ObjectColumn |

Any |

Yes |

NumberAsBooleanColumn |

Boolean stored as an Int 0/1 |

No |

Remember the previous example where we mapped an AddressType enum to a varchar or numeric column. If you can use the literal values HOME and WORK in the database, there is no need for customization. Imagine however that the values are stored by their description as Home address and Work address, and there is a fallback to be used for null results. Now the default behavior won’t work.

enum class AddressType(val description: String) : Serializable {

UNKNOWN("Unknown"), HOME("Home address"), WORK("Work address");

companion object {

fun fromDescription(desc: String) = AddressType.values().firstOrNull { it.description == desc }?:throw IllegalArgumentException("Invalid address type: $desc")

}

}To make a compliant Column, you need to override EnumAsStringColumn<AddressType>. You need to provide a Nullable counterpart as well:

class AddressTypeAsStringColumn(table: Table, name: String, aggregateType: AggregateType?) : EnumAsStringColumn<AddressType>(table, name, AddressType::class.java, aggregateType) {

override fun toEnum(name: String): AddressType = AddressType.fromDescription(value)

}

class NullableAddressTypeAsStringColumn(table: Table, name: String, aggregateType: AggregateType?) :

NullableEnumAsStringColumn<AddressType>(table, name, AddressType::class.java, aggregateType) {

override fun setValue(position: Int, statement: PreparedStatement, value: AddressType?) {

super.setValue(position, statement, value?:AddressType.UNKNOWN)

}

override fun toEnum(value: String?) = value?.let { AddressType.fromDescription(it) } ?: AddressType.UNKNOWN

}There is some mandatory boilerplate involved, but it gives you the opportunity for fine-grained handling, like mapping a null value to the UNKNOWN literal.

You need to tell CodeGenerator where this custom column applies, generate the code, and then you can use it in queries.

generator.configureColumnTypeMapping()

.setColumnTypeForName(table = "EMPLOYEE_ADDRESS", column = "KIND", columnType = AddressTypeAsStringColumn::class.java)

tr.insert(EmployeeAddress)

.mandatoryColumns(employeeId = 43,

addressId = 42,

kind = AddressType.WORK)

.execute()H2 supports a data type for storing UUIDs (universally unique identifiers), which has no JDBC counterpart. To read and retrieve java.util.UUID, you extend ObjectColumn.

package com.dbobjekts.vendors.h2

import java.util.UUID

class UUIDColumn(table: Table, name: String) : ObjectColumn<UUID>(table, name, UUID::class.java) {

}

class NullableUUIDColumn(table: Table, name: String) : NullableObjectColumn<UUID?>(table, name, UUID::class.java) {

}There’s nothing to override other than the nullable property. UUIDColumn is only there to fill in the <T>. The base class takes care of calling getObject(..) on the ResultSet.

Sometimes it would be good to have some extra validation. Suppose we want to make sure only valid Dutch postcodes are stored in Address.postcode. See the full example in com.dbobjekts.testdb.DutchPostCodeColumn.

You extend from a regular VarcharColumn, but add some extra validation of your own.

class DutchPostCodeColumn(table: Table, name: String) : VarcharColumn(table, name) {

override fun setValue(position: Int, statement: PreparedStatement, value: String) {

validate(value)

super.setValue(position, statement, value)

}

companion object {

val pattern = Pattern.compile("^\\d{4}[A-Z]{2}$")

fun validate(postcode: String) {

if (!pattern.matcher(postcode).matches())

throw IllegalStateException("$postcode is not a valid Dutch postcode.")

}

}

}Much sensitive data flows through a database library like db-Objekts, so it takes security seriously. Note the following design decisions and principles:

-

db-Objekts never logs concrete data read from or written to the tables. It keeps such data in-memory for debugging purposes in an [ExecutedStatementInfo] data class only for the duration of a single

Transaction, after which it is cleared. -

All generated statements use placeholder

?syntax to guard against SQL injection attacks. You should still be careful to sanitise end-user data before you pass them as parts of a query, like intr.select(Employee).where(e.name.eq(someValueFromRestCall)) -

This warning applies especially to custom sql statements, for which db-Objekts cannot provide similar safeguards.

db-Objekts has been a long time in the making. I started it in Scala, but switched to Kotlin because its null-safety is perfectly suited to the world of (relational) data. For several years it remained a hobby project and was not mature enough to become the Open Source tool I had in mind.

This is the very first alpha release. The API may change slightly, so it is not yet ready for a beta release and you should not use it in production yet. While I’m confident and proud of my work, more rigorous testing is needed.

-

The alpha releases will cover the following:

-

Close to 100% unit test coverage

-

Full coverage for all column types in the H2 database, used for testing

-

Improving and cleaning up source code documentation and user docs

-

Minor alterations the API with regard to method names and possible refactoring of packages

-

-

The first beta release is planned for early 2023. These will cover:

-

Addition of PostgreSQL and MySQL as vendor types

-

Rigorous integration tests on dockerized images of all database vendors as part of the build

-

No more API changes are to be expected.

-

db-Objekts grew from a hobby project (originally started in Scala) by myself, Jasper Sprengers. I have been working in IT as a developer since 1999 and am also an active blogger. You can read more about me on my site.

Remarks, questions and suggestions are very welcome. Please contact me via any of the following channels: