This tutorial shows how to deploy and scale Palo Alto Networks VM-Series Next Generation Firewall with Terraform to secure a hub and spoke architecture in Google Cloud. The VM-Series enables enterprises to secure their applications, users, and data deployed across Google Cloud and other virtualization environments.

This tutorial is intended for network administrators, solution architects, and security professionals who are familiar with Compute Engine and Virtual Private Cloud (VPC) networking.

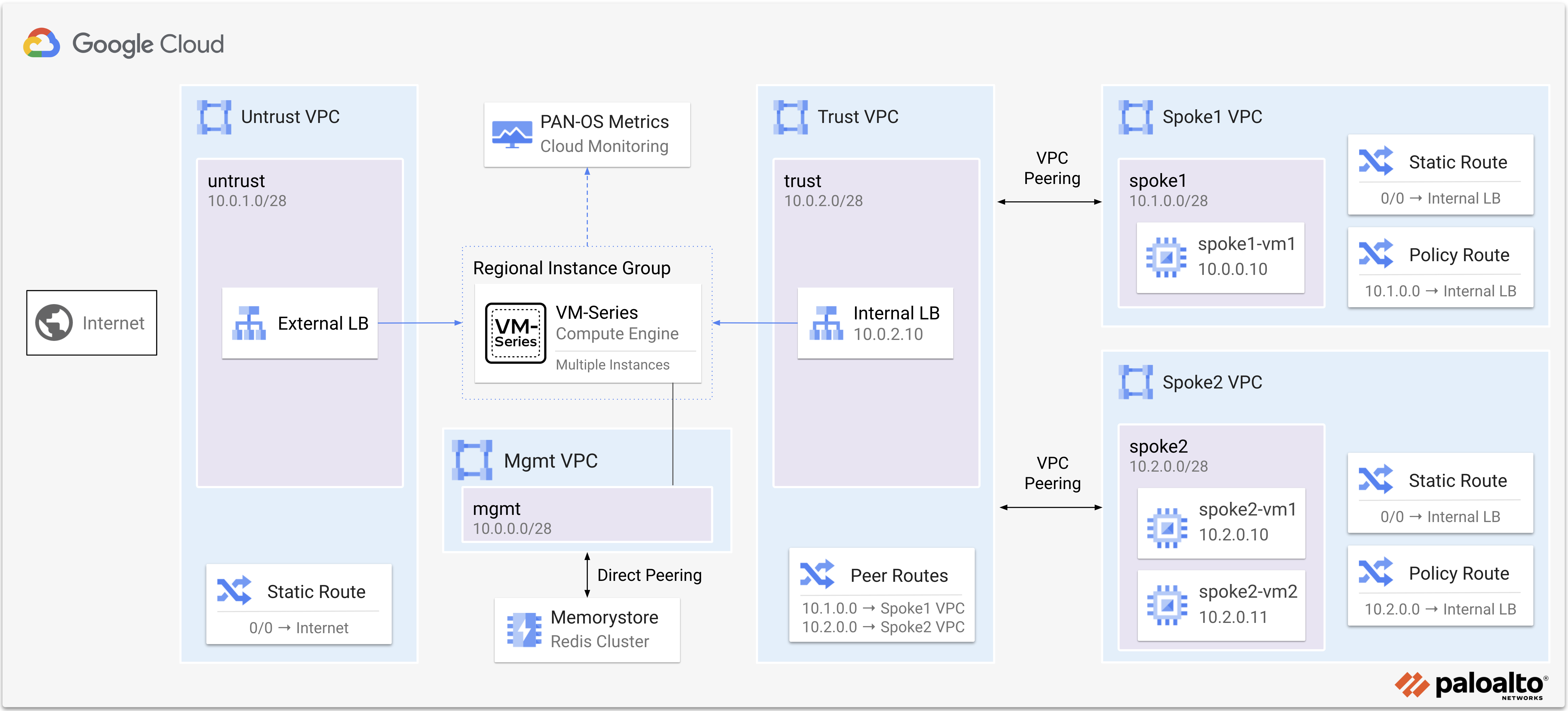

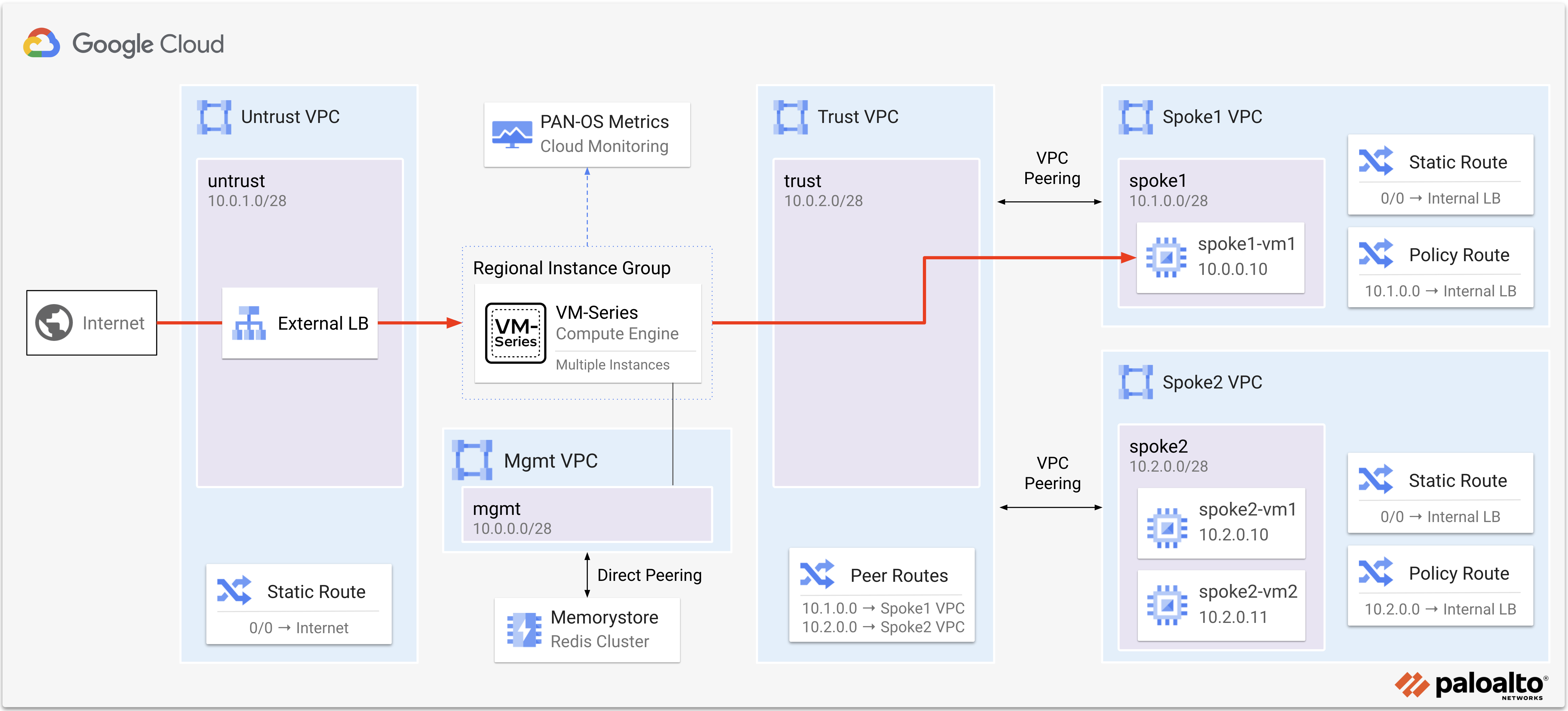

Below is a diagram of the tutorial. VM-Series firewalls are deployed with a regional managed instance group to secure north/south and east/west traffic for two spoke VPC networks.

| Traffic Pattern | Description |
|---|---|
| Internet inbound | Traffic from the internet to apps hosted in the spoke VPCs is distributed by the external load balancer to the VM-Series untrust interfaces (NIC0). The VM-Series translates the traffic through its trust interface (NIC2) to the spoke network. |
| Internet outbound | Traffic from the spoke VPCs to the internet is routed to the internal load balancer in the trust VPC. The VM-Series translates the traffic through its untrust interface (NIC0) to the internet destination. |
| East-west (Intra & Inter VPC) | Traffic between networks (inter-VPC) and traffic within a network (intra-VPC) is routed to the internal load balancer in the trust VPC via policy-based routes. The VM-Series inspects and hairpins the traffic through the trust interface (NIC2) and to the destination. |

The following is required for this tutorial:

- A Google Cloud project.

- A machine with Terraform version `~> 1.7`.

Note

This tutorial assumes you are using Google Cloud Shell.
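The `~> 1.7` constraint accepts any 1.x release from 1.7 upward and rejects 2.0 and later. As a minimal sketch, the check below applies that rule to a version string. `tf_version_ok` is a hypothetical helper name and is not part of the tutorial repository.

```shell
# Sketch: check that a Terraform version string satisfies "~> 1.7"
# (at least 1.7.0 and below 2.0.0). tf_version_ok is a hypothetical name.
tf_version_ok() {
  # Strip an optional leading "v", then split MAJOR.MINOR[.PATCH].
  tfv_version=${1#v}
  tfv_major=${tfv_version%%.*}
  tfv_rest=${tfv_version#*.}
  tfv_minor=${tfv_rest%%.*}
  # Reject input whose major/minor parts are not purely numeric.
  case "$tfv_major$tfv_minor" in
    ''|*[!0-9]*) return 2 ;;
  esac
  [ "$tfv_major" -eq 1 ] && [ "$tfv_minor" -ge 7 ]
}
```

For example, `tf_version_ok "$(terraform version | head -n 1 | cut -d' ' -f2)"` succeeds when the installed release is in range, assuming the first line of `terraform version` has the form `Terraform v1.7.5`.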

- Enable the required APIs, generate an SSH key, and clone the repository.

      gcloud services enable compute.googleapis.com
      ssh-keygen -f ~/.ssh/vmseries-tutorial -t rsa
      git clone https://github.com/PaloAltoNetworks/google-cloud-hub-spoke-tutorial
      cd google-cloud-hub-spoke-tutorial

- Create a `terraform.tfvars` file.

      cp terraform.tfvars.example terraform.tfvars

- Edit the `terraform.tfvars` file and set values for the following variables:

  | Key | Value | Default |
  |---|---|---|
  | `project_id` | The Project ID within Google Cloud. | `null` |
  | `public_key_path` | The local path of the public key you previously created. | `~/.ssh/vmseries-tutorial.pub` |
  | `mgmt_allow_ips` | A list of IPv4 addresses which have access to the VM-Series management interface. | `["0.0.0.0/0"]` |
  | `create_spoke_networks` | Set to `false` if you do not want to create the spoke networks. | `true` |
  | `vmseries_image_name` | Set to the VM-Series image you want to deploy. | `vmseries-flex-bundle2-1022h2` |
  | `enable_session_resiliency` | Set to `true` to enable Session Resiliency using Memorystore for Redis. | `false` |

Tip

For `vmseries_image_name`, a full list of public images can be found with this command:

    gcloud compute images list --project paloaltonetworksgcp-public --filter='name ~ .*vmseries-flex.*'

Note

If you are using a BYOL image (for example, `vmseries-flex-byol-*`), the license can be applied during or after deployment. To license during deployment, add your VM-Series authcodes to `bootstrap_files/authcodes`. See VM-Series Bootstrap Methods for more information.

- Save your `terraform.tfvars` file.
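As an alternative to editing the file by hand, the variables can be written from the shell. The sketch below fills in the default values listed in the table and takes only the project ID as input. `write_tfvars` is a hypothetical helper, not something the repository provides.

```shell
# Sketch: write a terraform.tfvars populated with the documented defaults.
# write_tfvars is a hypothetical helper; only the project ID is required.
write_tfvars() {
  wt_project_id=$1
  wt_file=${2:-terraform.tfvars}
  if [ -z "$wt_project_id" ]; then
    echo "usage: write_tfvars PROJECT_ID [FILE]" >&2
    return 1
  fi
  # Unquoted heredoc delimiter so that $wt_project_id is expanded.
  cat > "$wt_file" <<EOF
project_id                = "$wt_project_id"
public_key_path           = "~/.ssh/vmseries-tutorial.pub"
mgmt_allow_ips            = ["0.0.0.0/0"]
create_spoke_networks     = true
vmseries_image_name       = "vmseries-flex-bundle2-1022h2"
enable_session_resiliency = false
EOF
}
```

Running `write_tfvars my-project-id` overwrites `terraform.tfvars` in the current directory. The default `mgmt_allow_ips` value matches every IPv4 source, so you may want to replace it with your own address range before deploying.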

In production environments, it is highly recommended to use Panorama to manage the VM-Series. Panorama enables you to scale the VM-Series for performance while managing the firewalls as a single entity.

For more information, see the Panorama Staging community guide.

- In your `terraform.tfvars`, set values for your Panorama IP, device group, template stack, and VM Auth Key.

      panorama_ip       = "1.1.1.1"
      panorama_dg       = "your-device-group"
      panorama_ts       = "your-template-stack"
      panorama_auth_key = "your-auth-key"

- Save your `terraform.tfvars`.

Note

In this Terraform plan, setting a value for panorama_ip removes the GCS Storage Bucket from the VM-Series metadata configuration.
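The Panorama step sets four values. As a minimal sketch, the check below confirms that each of them has an uncommented assignment in the file before you deploy. `check_panorama_vars` is a hypothetical helper, and it only looks for the presence of each key, not whether the values are valid.

```shell
# Sketch: confirm the four Panorama variables are assigned in a tfvars file.
# check_panorama_vars is a hypothetical helper, not part of the repository.
check_panorama_vars() {
  cpv_file=${1:-terraform.tfvars}
  cpv_missing=0
  for cpv_key in panorama_ip panorama_dg panorama_ts panorama_auth_key; do
    # Match an uncommented "key = ..." assignment at the start of a line.
    if ! grep -Eq "^[[:space:]]*${cpv_key}[[:space:]]*=" "$cpv_file"; then
      echo "missing: $cpv_key" >&2
      cpv_missing=1
    fi
  done
  return "$cpv_missing"
}
```

Calling `check_panorama_vars` with no arguments inspects `terraform.tfvars` in the current directory and returns a non-zero status if any key is absent.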

When no further changes are necessary in the configuration, deploy the resources:

- Initialize and apply the Terraform plan.

      terraform init
      terraform apply

- After all the resources are created, Terraform displays the following message:

      Apply complete!
      Outputs:
      EXTERNAL_LB_IP = "35.68.75.133"

Note

The EXTERNAL_LB_IP output displays the IP address of the external load balancer’s forwarding rule.
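Later steps reuse the `EXTERNAL_LB_IP` value, so it can help to capture it in a shell variable rather than copying it by hand. The sketch below reads the output with `terraform output -raw` and checks that it looks like an IPv4 address. Both function names are hypothetical.

```shell
# Sketch: read the EXTERNAL_LB_IP output and sanity-check its format.
# is_ipv4 and get_lb_ip are hypothetical helper names.
is_ipv4() {
  # Four dot-separated groups of 1-3 digits, each no greater than 255.
  printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$' || return 1
  ii_old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$ii_old_ifs
  for ii_octet in "$@"; do
    [ "$ii_octet" -le 255 ] || return 1
  done
}

get_lb_ip() {
  # "terraform output -raw" prints the value without surrounding quotes.
  gl_ip=$(terraform output -raw EXTERNAL_LB_IP) || return 1
  is_ipv4 "$gl_ip" || { echo "unexpected output: $gl_ip" >&2; return 1; }
  echo "$gl_ip"
}
```

Run `LB_IP=$(get_lb_ip)` from the directory where you applied the plan; the variable name `LB_IP` is only an example.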

To access the VM-Series user interface, a password must be set for the admin user.

- Retrieve the `EXTERNAL_IP` attached to the VM-Series MGT interface (NIC1).

      gcloud compute instances list \
          --filter='tags.items=(vmseries-tutorial)' \
          --format='value(EXTERNAL_IP)'

- SSH to the VM-Series using the `EXTERNAL_IP` with your private SSH key.

      ssh admin@<EXTERNAL_IP> -i ~/.ssh/vmseries-tutorial

- On the VM-Series, set a password for the `admin` username.

      configure
      set mgt-config users admin password

- Commit the changes.

      commit

- Enter `exit` twice to terminate the session.

- Access the VM-Series web interface using a web browser. Log in with the `admin` user and password.

      https://<EXTERNAL_IP>
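When the instance group holds more than one firewall, the lookup and SSH steps have to be repeated per instance. As a minimal sketch, the function below prints a ready-to-run SSH command for each management address. It reuses the `gcloud` filter and key path from the steps above and assumes each output line contains a single address. The function name is hypothetical.

```shell
# Sketch: print an SSH command for every VM-Series management IP.
# print_fw_ssh_commands is a hypothetical helper name.
print_fw_ssh_commands() {
  gcloud compute instances list \
    --filter='tags.items=(vmseries-tutorial)' \
    --format='value(EXTERNAL_IP)' |
  while read -r fw_ip; do
    # Skip blank lines (instances without an external address).
    [ -n "$fw_ip" ] || continue
    echo "ssh admin@$fw_ip -i ~/.ssh/vmseries-tutorial"
  done
}
```

The function only prints the commands; copy the one you need and run it to open the session.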

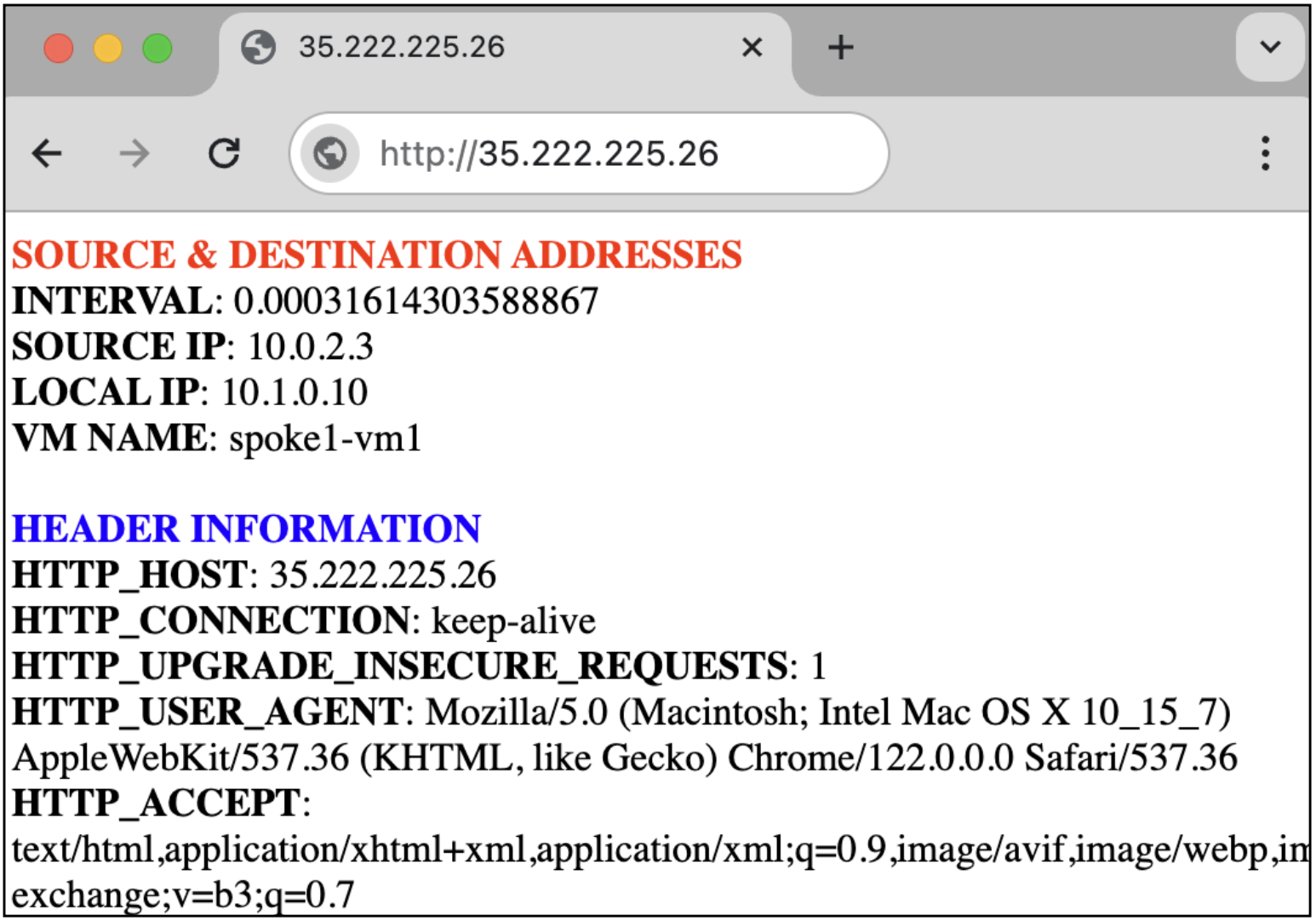

Internet traffic is distributed by the external load balancer to the VM-Series untrust interfaces. The VM-Series inspects and translates the traffic to spoke1-vm1 in the spoke 1 network.

Important

The spoke VMs in this tutorial are configured with Jenkins and a generic web service.

-

Open a HTTP connection to the web service on

spoke1-vm1by copying theEXTERNAL_LB_IPoutput value into a web browser.http://<EXTERNAL_LB_IP>

-

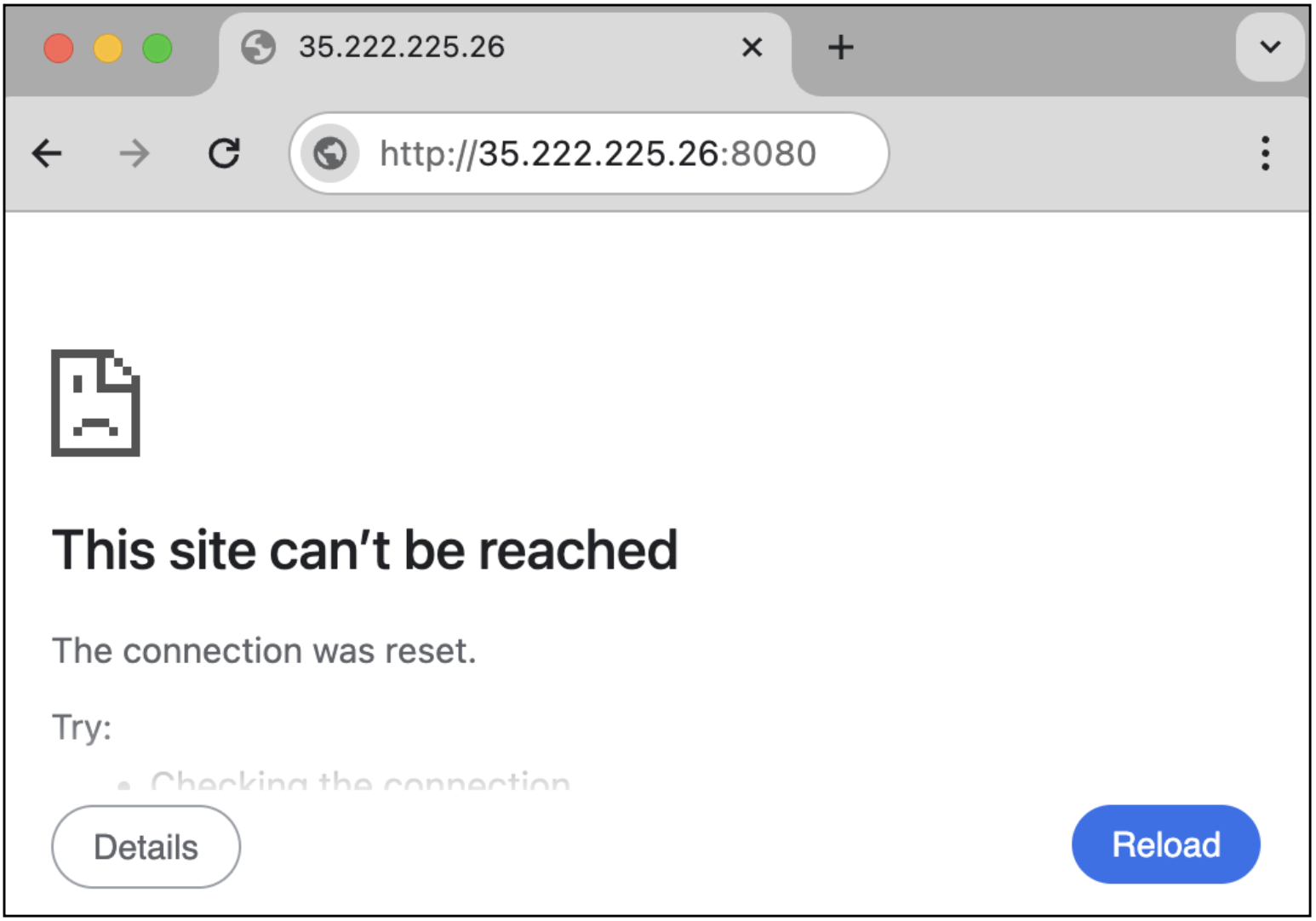

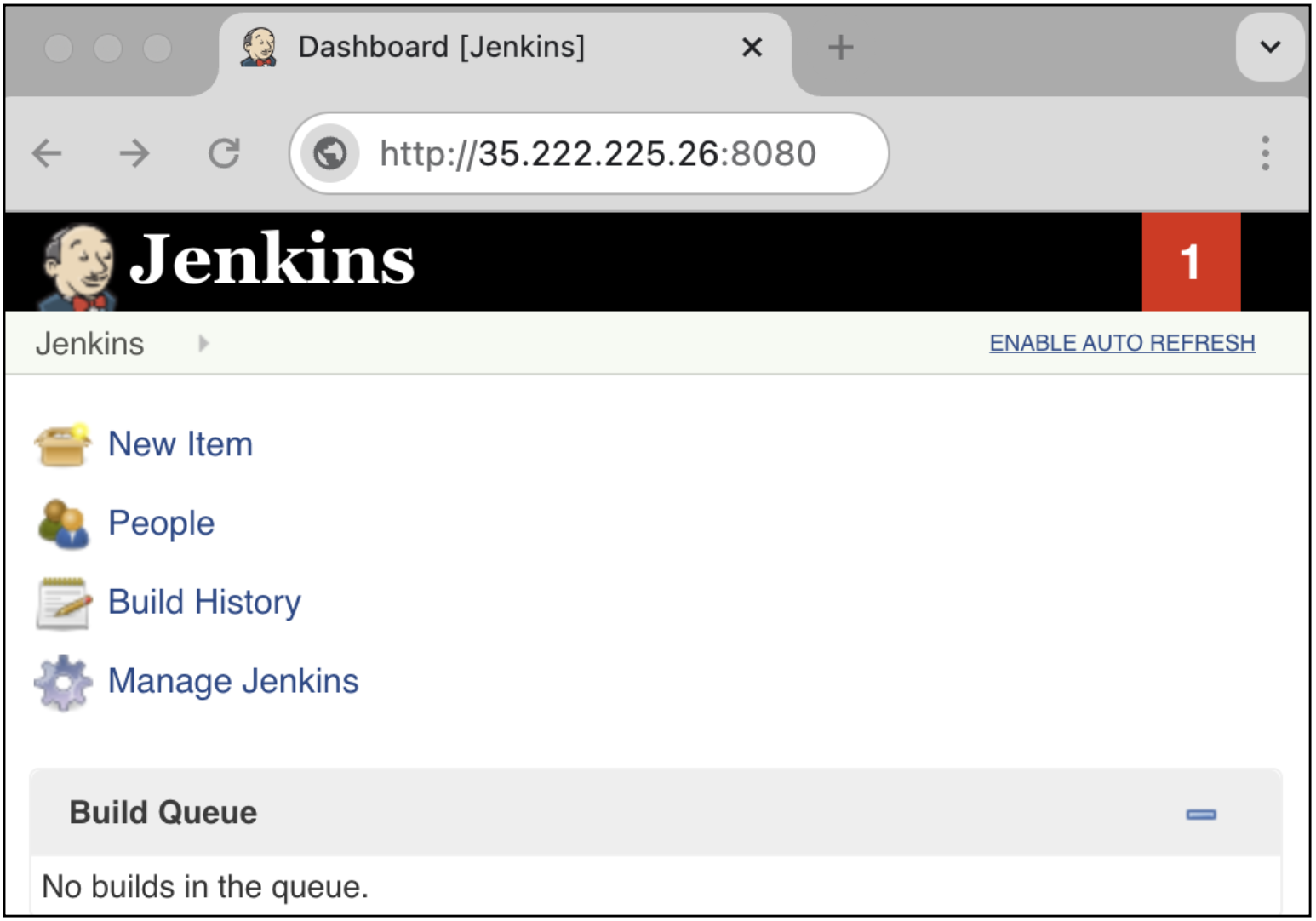

Open a session to the Jenkins service on

spoke1-vm1by appending port8080to the URL.http://<EXTERNAL_LB_IP>:8080

Tip

Your request to Jenkins should fail because its App-ID™ has not yet been enabled on the VM-Series.
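The same two requests can be made from a terminal, which makes it easy to repeat them after changing the security policy. The sketch below prints the HTTP status code for each service. The function names and the 5-second timeout are assumptions chosen for illustration.

```shell
# Sketch: report the HTTP status of the web service (port 80) and Jenkins
# (port 8080) behind the external load balancer. Names are hypothetical.
http_status() {
  # curl prints 000 when no HTTP response arrives within the timeout.
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

check_spoke_services() {
  css_ip=$1
  echo "web     (80):   $(http_status "http://$css_ip")"
  echo "jenkins (8080): $(http_status "http://$css_ip:8080")"
}
```

Call it as `check_spoke_services <EXTERNAL_LB_IP>`. A code of `000` means curl received no HTTP response from that port.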

Palo Alto Networks App-ID™ enables you to see applications on your network and learn their behavioral characteristics with their relative risk. You can use App-ID™ to enable Jenkins traffic through the VM-Series security policies.

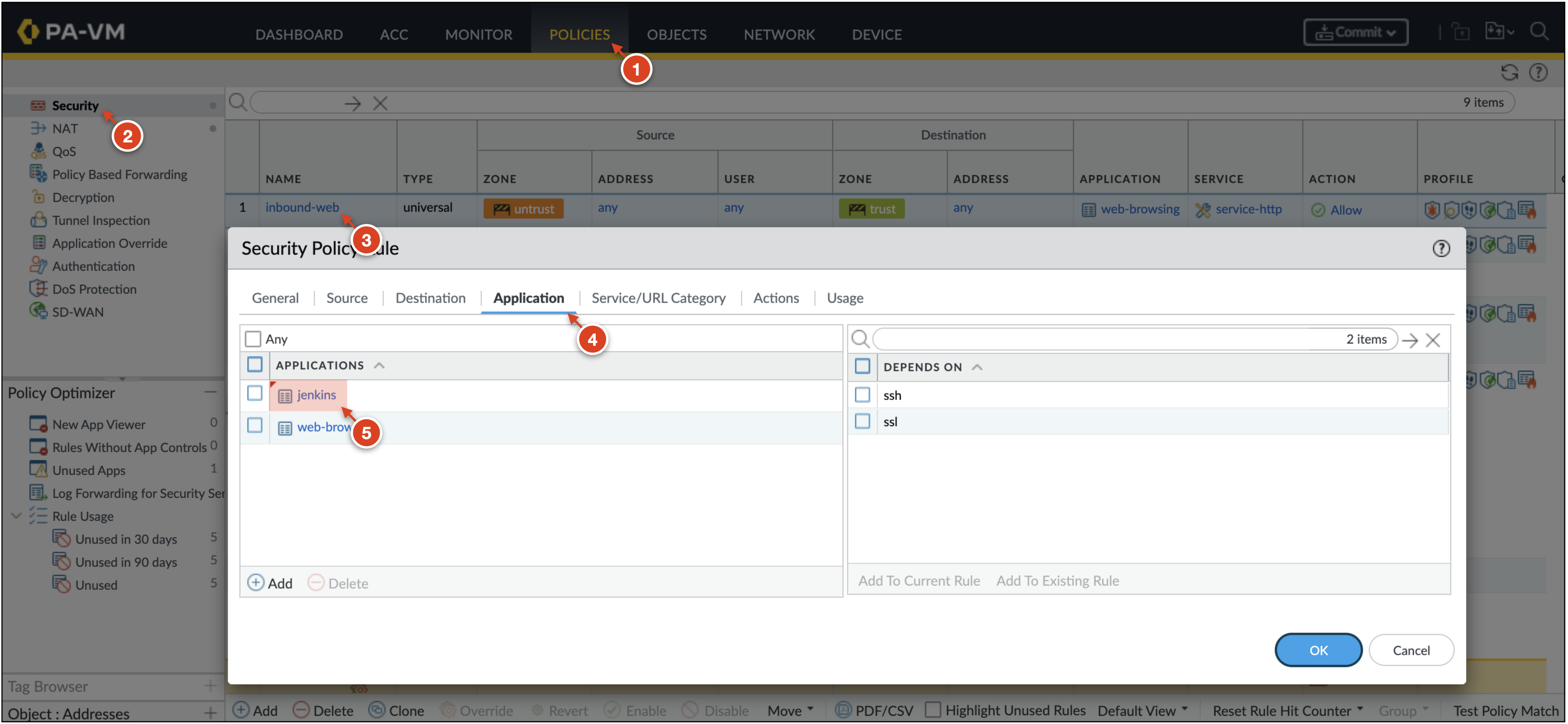

-

On the VM-Series, go to Policies → Security and open the

inbound-websecurity policy. -

In the Application tab, add the

jenkinsApp-ID. Click OK.

-

Click Commit → Commit to apply the changes to the VM-Series configuration.

-

Attempt to access the

jenkinsservice again. The page should now resolve.

-

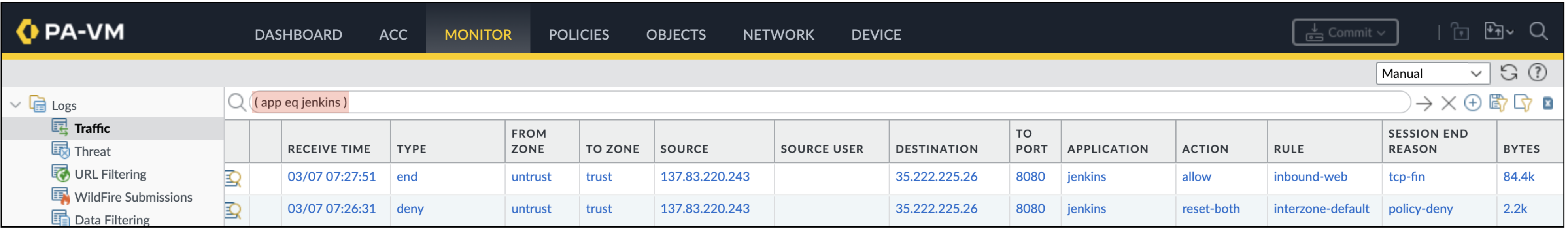

On the VM-Series, go to Monitor → Traffic and enter the filter below to search for

jenkinstraffic.( app eq jenkins )

Tip

You should see the jenkins traffic was denied before its App-ID was added to the security policy.

Policy-based routes and custom static routes defined in each spoke's route table steer traffic to the VM-Series internal load balancer. This enables the VM-Series to secure egress traffic from the spoke networks, including outbound internet, inter-VPC, and intra-VPC traffic.

- Open an SSH session with `spoke2-vm1`.

      ssh paloalto@<EXTERNAL_LB_IP> -i ~/.ssh/vmseries-tutorial

  The external load balancer distributes the request to the VM-Series. The VM-Series inspects and translates the traffic to `spoke2-vm1`.

- Test outbound internet inspection by downloading a pseudo-malicious file from the internet.

      wget www.eicar.eu/eicar.com.txt --tries 1 --timeout 2

- Test inter-VPC inspection by generating pseudo-malicious traffic between `spoke2-vm1` and `spoke1-vm1`.

      curl http://10.1.0.10/cgi-bin/../../../..//bin/cat%20/etc/passwd

- Test intra-VPC inspection by generating pseudo-malicious traffic between `spoke2-vm1` and `spoke2-vm2`.

      curl -H 'User-Agent: () { :; }; 123.123.123.123:9999' http://10.2.0.11/cgi-bin/test-critical

- On the VM-Series, go to Monitor → Threat to view the threat logs.

Tip

The security policies enable you to allow or block traffic based on the user, application, and device. When traffic matches an allow rule, the security profiles that are attached to the rule provide further content inspection. See Cloud-Delivered Security Services for more information.

Regional managed instance groups enable you to scale VM-Series across zones within a region. This allows you to scale the security protecting cloud workloads.

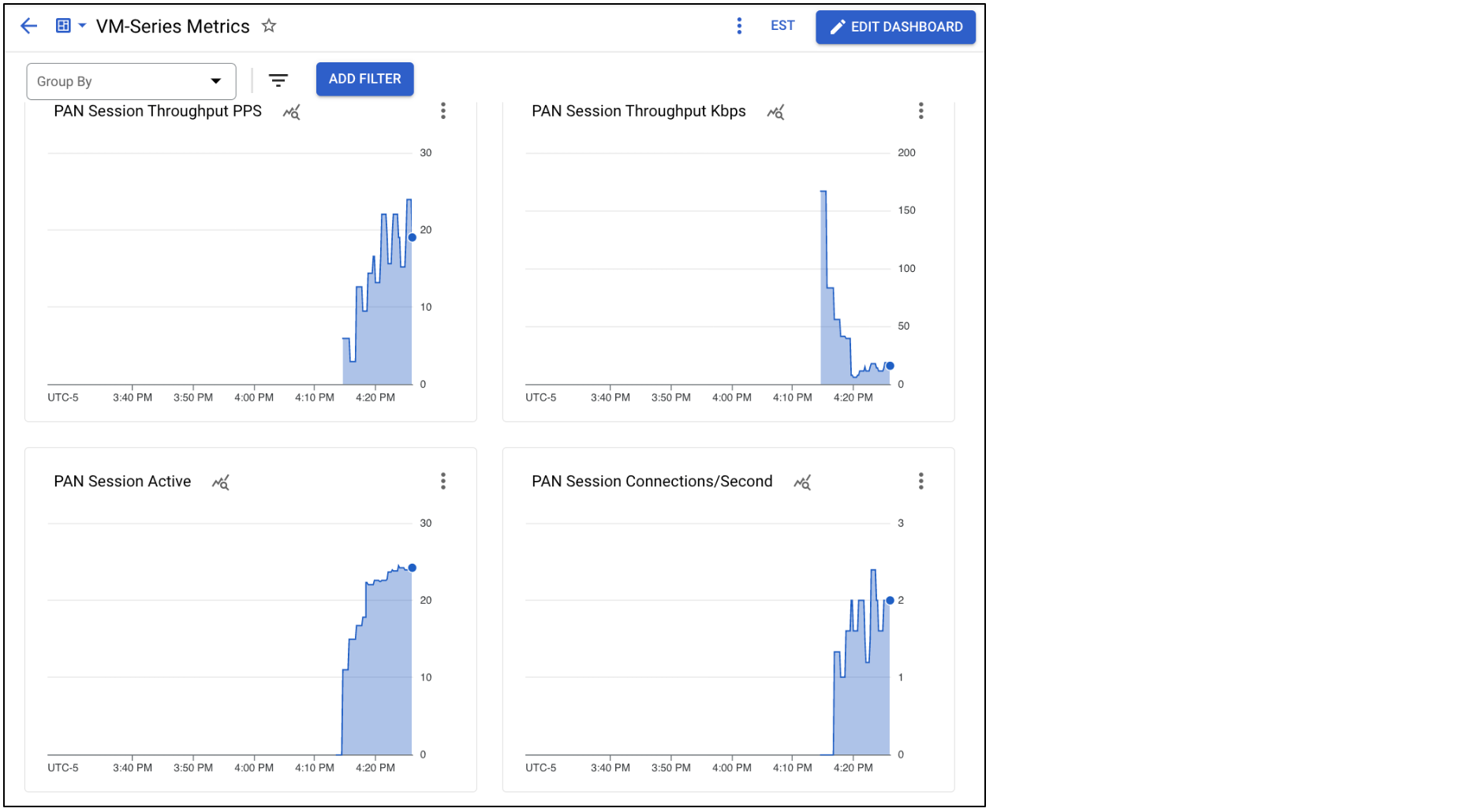

The VM-Series publishes PAN-OS metrics to Google Cloud Monitoring.

The Terraform plan creates a custom Cloud Monitoring dashboard that displays the VM-Series performance metrics. To view the dashboard, perform the following:

- In the Google Cloud console, select Monitoring → Dashboards.

- Select the dashboard named VM-Series Metrics.

Tip

Each metric can be set as a scaling parameter for the instance group. See Publishing Custom PAN-OS Metrics for more information.

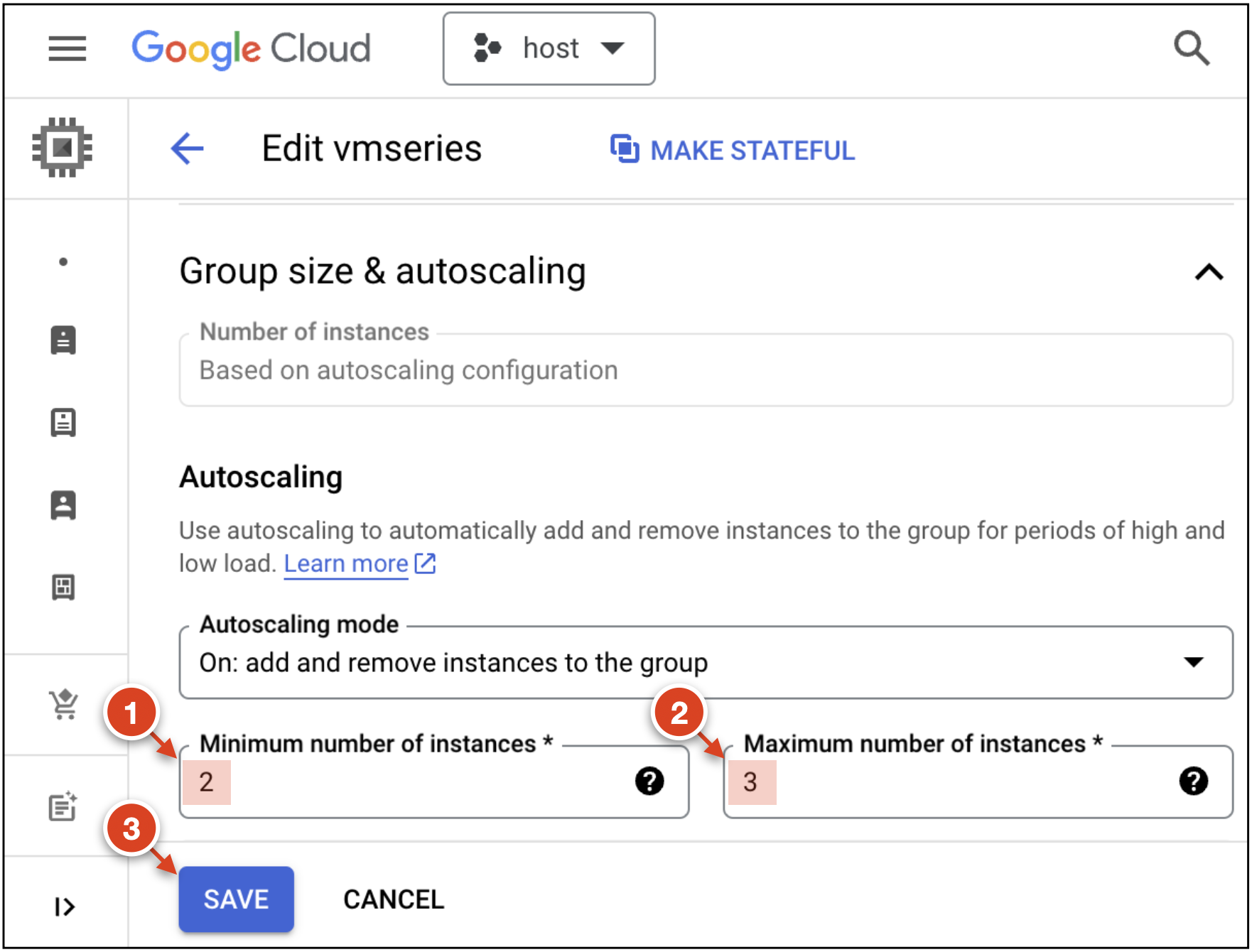

The Terraform plan sets the min/max firewall count to 1. To simulate a scaling event, modify the instance group min/max thresholds.

- Go to Compute Engine → Instance Groups.

- Open the `vmseries` instance group and click EDIT.

- Under Group size & autoscaling, set the minimum to `2` and the maximum number of instances to `3`.

- Click Save.

- Go to Compute Engine → VM instances to view the new VM-Series firewall.

- Once the VM-Series finishes bootstrapping, follow the Access the VM-Series firewall instructions to gain access to the firewall’s web interface.

Important

This step is not required if you are bootstrapping the VM-Series to Panorama. This is because Panorama pushes the entire configuration to the scaled firewalls.

- On the scaled VM-Series, navigate to Monitor → Traffic. The traffic logs should be populated demonstrating the scaled VM-Series is now processing traffic.

Tip

You can also perform this in Terraform by adding the following values to your terraform.tfvars.

vmseries_replica_minimum = 2

vmseries_replica_maximum = 3
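If you change the replica counts more than once, appending lines by hand can leave duplicate assignments in the file. As a minimal sketch, the helper below replaces any earlier values of the two variables and appends the new ones. `set_replicas` is a hypothetical name, and the file layout it assumes is one assignment per line.

```shell
# Sketch: set vmseries_replica_minimum / vmseries_replica_maximum in a
# tfvars file, replacing earlier values. set_replicas is hypothetical.
set_replicas() {
  sr_min=$1
  sr_max=$2
  sr_file=${3:-terraform.tfvars}
  if [ "$sr_min" -gt "$sr_max" ]; then
    echo "minimum ($sr_min) must not exceed maximum ($sr_max)" >&2
    return 1
  fi
  touch "$sr_file"
  sr_tmp=$(mktemp) || return 1
  # Copy every line except previous assignments of the two variables.
  grep -Ev '^[[:space:]]*vmseries_replica_(minimum|maximum)[[:space:]]*=' \
    "$sr_file" > "$sr_tmp"
  printf 'vmseries_replica_minimum = %s\nvmseries_replica_maximum = %s\n' \
    "$sr_min" "$sr_max" >> "$sr_tmp"
  mv "$sr_tmp" "$sr_file"
}
```

After `set_replicas 2 3`, run `terraform apply` to resize the instance group.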

If session resiliency is enabled prior to deployment (enable_session_resiliency = true), sessions are stored in a Google Cloud Memorystore Redis Cache. This enables you to maintain layer-4 sessions by transferring sessions to healthy firewalls within the instance group.

Use iPerf to create parallel TCP connections between spoke2-vm1 (client) and spoke1-vm1 (server).

- In Cloud Shell, SSH to `spoke1-vm1`.

      gcloud compute ssh paloalto@spoke1-vm1 --zone=us-central1-a

- Install `iperf` on `spoke1-vm1`.

      sudo apt-get update
      sudo apt-get install iperf

- Make `spoke1-vm1` a server listening on `TCP:5001`.

      iperf -s -f M -p 5001 -t 3600

- In a separate Cloud Shell tab, SSH into `spoke2-vm1`.

      gcloud compute ssh paloalto@spoke2-vm1 --zone=us-central1-a

- Install `iperf` on `spoke2-vm1`.

      sudo apt-get update
      sudo apt-get install iperf

- Create `50` parallel connections from `spoke2-vm1` to `spoke1-vm1` using port `TCP:5001`.

      iperf -c 10.1.0.10 -f M -p 5001 -P 50 -t 3600

- On each firewall, go to Monitor → Traffic and enter the filter to search for the `iperf` connections.

      ( port.dst eq '5001' )

Note

On both firewalls you should see active connections on TCP:5001.

Simulate a failure event by randomly descaling one of the firewalls. After the descale completes, verify that all of the `iperf` connections are still transferring data.

- Go to Compute Engine → Instance Groups.

- Open the `vmseries` instance group and click EDIT.

- Under Group size & autoscaling, set the minimum to `1` and the maximum number of instances to `1`.

- Wait for one of the VM-Series firewalls to descale.

- On `spoke2-vm1`, kill the `iperf` connection by entering `ctrl + c`.

Tip

You should see the connection transfers are still running, indicating the sessions have successfully failed over.
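Waiting for the descale can also be done from Cloud Shell instead of refreshing the console. The sketch below polls the instance list until the firewall count reaches a target. It assumes that scaled firewalls carry the same `vmseries-tutorial` tag used in the access steps. The function names, retry count, and delay are all hypothetical.

```shell
# Sketch: poll until the number of VM-Series instances equals a target.
# Function names, retry count, and delay are assumptions.
count_firewalls() {
  # grep -c . counts the non-empty lines, one per instance name.
  gcloud compute instances list \
    --filter='tags.items=(vmseries-tutorial)' \
    --format='value(name)' | grep -c .
}

wait_for_firewall_count() {
  wf_target=$1
  wf_tries=${2:-30}
  wf_delay=${3:-10}
  while [ "$wf_tries" -gt 0 ]; do
    [ "$(count_firewalls)" -eq "$wf_target" ] && return 0
    wf_tries=$((wf_tries - 1))
    sleep "$wf_delay"
  done
  return 1
}
```

For example, `wait_for_firewall_count 1` checks every 10 seconds, up to 30 times, and returns success once a single firewall remains.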

Delete all the resources when you no longer need them.

- Run the following command:

      terraform destroy

- At the prompt to perform the actions, enter `yes`.

  After all the resources are deleted, Terraform displays the following message:

      Destroy complete!

- Learn about the VM-Series on Google Cloud.

- Getting started with Palo Alto Networks PAN-OS.

- Read about securing Google Cloud Networks with the VM-Series.

- Learn about VM-Series licensing on all platforms.

- Use the VM-Series Terraform modules for Google Cloud.