KubeClarity is a tool for detection and management of Software Bill Of Materials (SBOM) and vulnerabilities of container images and filesystems. It scans both runtime K8s clusters and CI/CD pipelines for enhanced software supply chain security.

- Why?

- Features

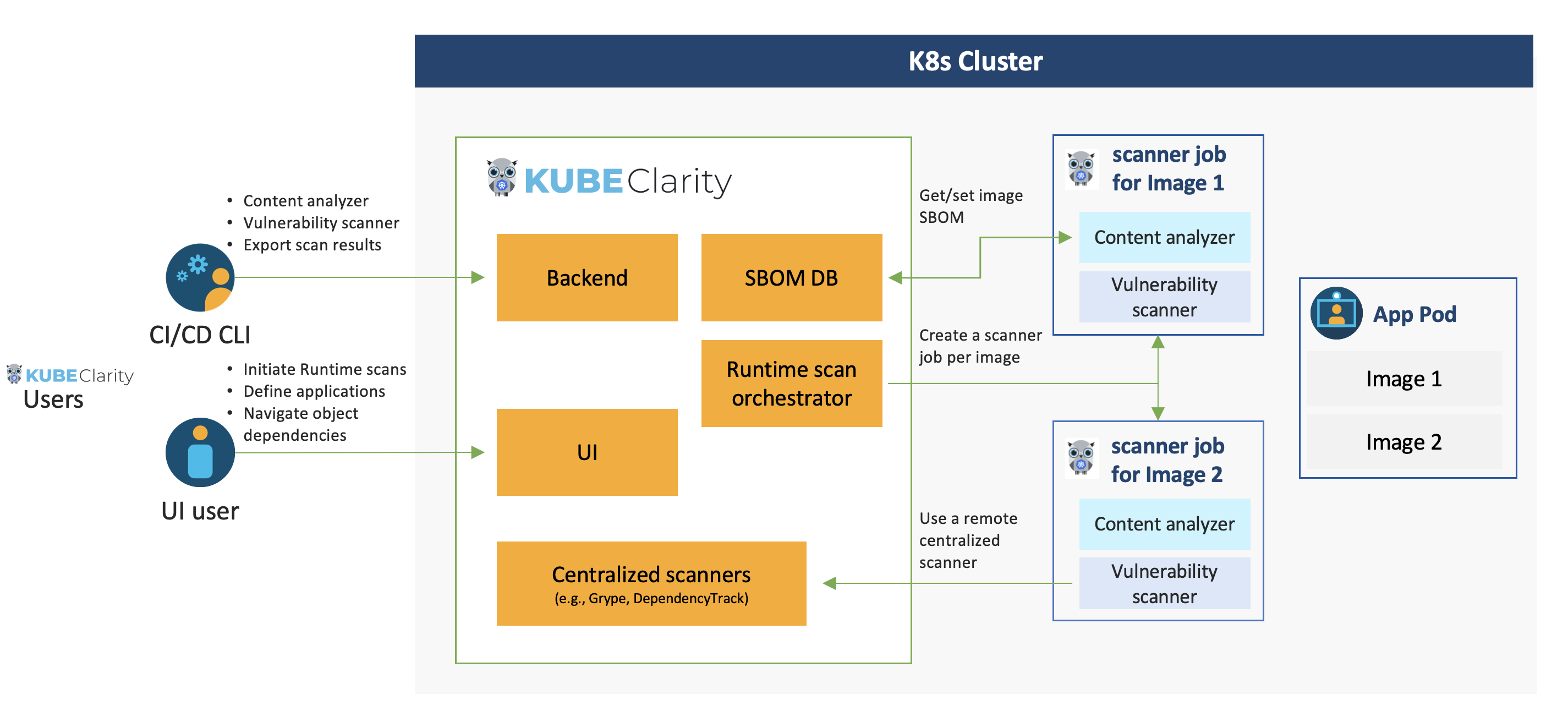

- Architecture

- Getting Started

- Advanced Configuration

- SBOM generation using local docker image as input

- Vulnerability scanning using local docker image as input

- Private Registry Support For CLI

- Private Registry Support For K8s Runtime Scan

- Merging of SBOM and vulnerabilities across different CI/CD stages

- Output Different SBOM Formats

- Remote Scanner Servers For CLI

- Limitations

- Roadmap

- Contributing

- License

- Effective vulnerability scanning requires accurate Software Bill Of Materials (SBOM) detection, which must handle:

- Various programming languages and package managers

- Various OS distributions

- Package dependency information is usually stripped upon build

- Which one is the best scanner/SBOM analyzer?

- What should we scan: Git repos, builds, container images or runtime?

- Each scanner/analyzer has its own format - how to compare the results?

- How to manage the discovered SBOM and vulnerabilities?

- How are my applications affected by a newly discovered vulnerability?

- Separate vulnerability scanning into 2 phases:

- Content analysis to generate SBOM

- Scan the SBOM for vulnerabilities

- Create a pluggable infrastructure to:

- Run several content analyzers in parallel

- Run several vulnerability scanners in parallel

- Scan and merge results between different CI stages using KubeClarity CLI

- Runtime K8s scan to detect vulnerabilities discovered post-deployment

- Group scanned resources (images/directories) under defined applications to navigate the object tree dependencies (applications, resources, packages, vulnerabilities)

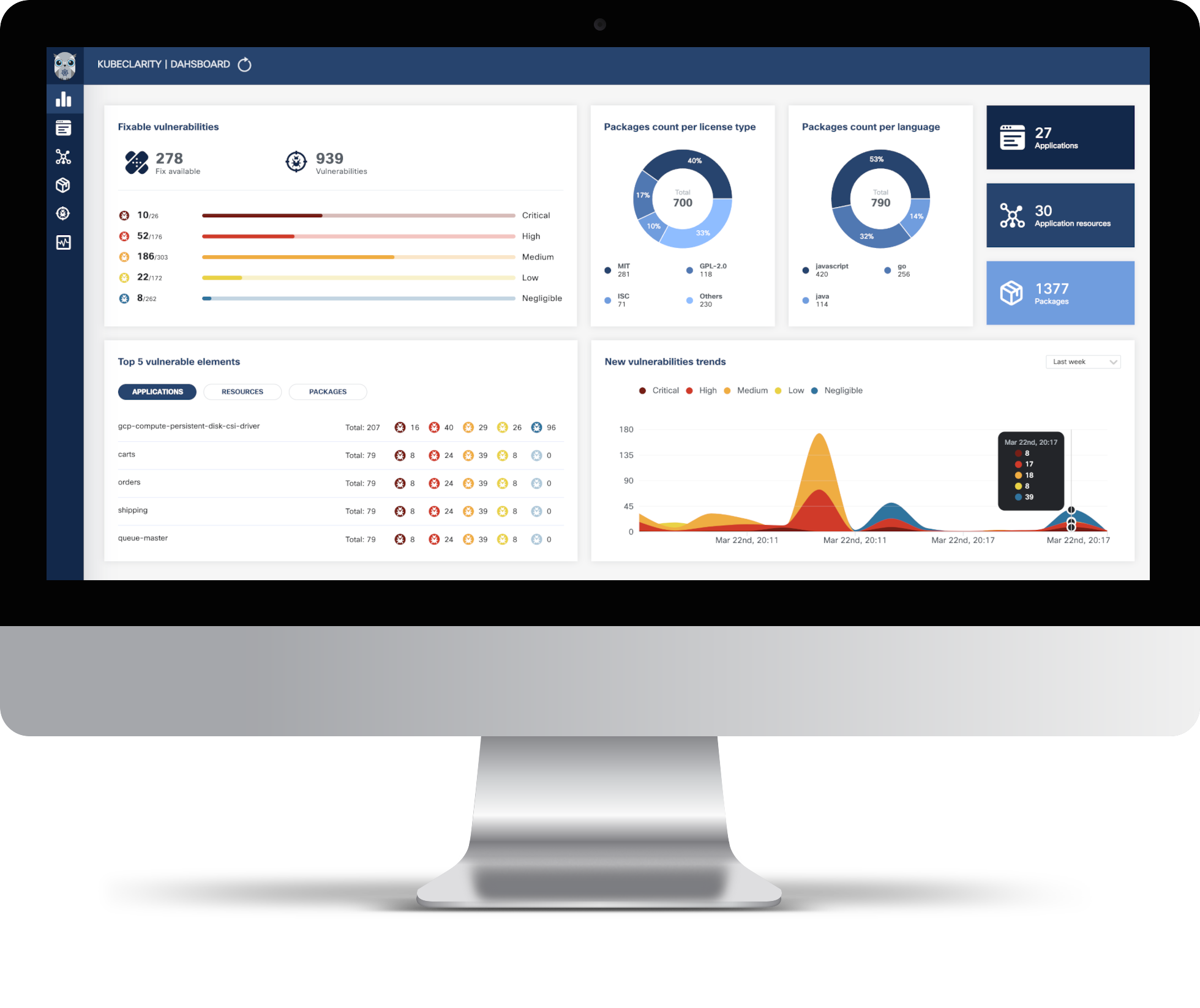

- Dashboard

- Fixable vulnerabilities per severity

- Top 5 vulnerable elements (applications, resources, packages)

- New vulnerability trends

- Package count per license type

- Package count per programming language

- General counters

- Applications

- Automatic application detection in K8s runtime

- Create/edit/delete applications

- Per application, navigation to related:

- Resources (images/directories)

- Packages

- Vulnerabilities

- Licenses in use by the resources

- Application Resources (images/directories)

- Per resource, navigation to related:

- Applications

- Packages

- Vulnerabilities

- Packages

- Per package, navigation to related:

- Applications

- Linkable list of resources and the detecting SBOM analyzers

- Vulnerabilities

- Vulnerabilities

- Per vulnerability, navigation to related:

- Applications

- Resources

- List of detecting scanners

- K8s Runtime scan

- On-demand or scheduled scanning

- Automatic detection of target namespaces

- Scan progress and result navigation per affected element (applications, resources, packages, vulnerabilities)

- CIS Docker benchmark

- CLI (CI/CD)

- SBOM generation using multiple integrated content analyzers (Syft, cyclonedx-gomod)

- SBOM/image/directory vulnerability scanning using multiple integrated scanners (Grype, Dependency-Track)

- Merging of SBOM and vulnerabilities across different CI/CD stages

- Export results to KubeClarity backend

- API

- The API for KubeClarity can be found here

KubeClarity content analyzer integrates with the following SBOM generators:

KubeClarity vulnerability scanner integrates with the following scanners:

- Add the Helm repo:

helm repo add kubeclarity https://openclarity.github.io/kubeclarity

- Save the KubeClarity default chart values:

helm show values kubeclarity/kubeclarity > values.yaml

- Check the configuration in values.yaml and update the required values if needed. To enable and configure the supported SBOM generators and vulnerability scanners, check the "analyzer" and "scanner" config under the "vulnerability-scanner" section in the Helm values.

- Deploy KubeClarity with Helm:

helm install --values values.yaml --create-namespace kubeclarity kubeclarity/kubeclarity -n kubeclarity

or, for an OpenShift Restricted SCC compatible install:

helm install --values values.yaml --create-namespace kubeclarity kubeclarity/kubeclarity -n kubeclarity --set global.openShiftRestricted=true --set kubeclarity-postgresql.securityContext.enabled=false --set kubeclarity-postgresql.containerSecurityContext.enabled=false --set kubeclarity-postgresql.volumePermissions.enabled=true --set kubeclarity-postgresql.volumePermissions.securityContext.runAsUser="auto" --set kubeclarity-postgresql.shmVolume.chmod.enabled=false

- Port forward to the KubeClarity UI:

kubectl port-forward -n kubeclarity svc/kubeclarity-kubeclarity 9999:8080

- Open the KubeClarity UI in the browser: http://localhost:9999/
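The Helm steps above can be collected into one shell function, for example for a cluster bootstrap script. This is a minimal sketch, not something shipped with KubeClarity: the function name `install_kubeclarity`, the `--openshift` switch and the `HELM` override variable are hypothetical. It only fetches the default values when `values.yaml` is missing or empty, so local edits to that file are kept.

```sh
#!/bin/sh
# Hypothetical wrapper around the documented Helm steps (not part of KubeClarity).
# HELM can be overridden, e.g. to point at a specific helm binary.
HELM="${HELM:-helm}"

# install_kubeclarity [--openshift]
install_kubeclarity() {
  "$HELM" repo add kubeclarity https://openclarity.github.io/kubeclarity || return 1

  # Fetch the default values only once, so edits to values.yaml survive re-runs.
  if [ ! -s values.yaml ]; then
    "$HELM" show values kubeclarity/kubeclarity > values.yaml || return 1
  fi

  if [ "${1:-}" = "--openshift" ]; then
    # Same flags as the OpenShift Restricted SCC compatible install command.
    "$HELM" install --values values.yaml --create-namespace kubeclarity kubeclarity/kubeclarity -n kubeclarity \
      --set global.openShiftRestricted=true \
      --set kubeclarity-postgresql.securityContext.enabled=false \
      --set kubeclarity-postgresql.containerSecurityContext.enabled=false \
      --set kubeclarity-postgresql.volumePermissions.enabled=true \
      --set kubeclarity-postgresql.volumePermissions.securityContext.runAsUser="auto" \
      --set kubeclarity-postgresql.shmVolume.chmod.enabled=false
  else
    "$HELM" install --values values.yaml --create-namespace kubeclarity kubeclarity/kubeclarity -n kubeclarity
  fi
}
```

Usage: `install_kubeclarity` or `install_kubeclarity --openshift`, then run the port-forward command. Running `helm install` a second time fails while the release exists; `helm upgrade --install` with the same arguments is the usual idempotent alternative.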

NOTE

KubeClarity requires these K8s permissions:

| Permission | Reason |
|---|---|
| Read secrets in CREDS_SECRET_NAMESPACE (default: kubeclarity) | This allows you to configure image pull secrets for scanning private image repositories. |
| Read config maps in the KubeClarity deployment namespace | This is required for getting the configured template of the scanner job. |
| List pods in cluster scope | This is required for calculating the target pods that need to be scanned. |
| List namespaces | This is required for fetching the target namespaces to scan in the K8s runtime scan UI. |
| Create & delete jobs in cluster scope | This is required for managing the jobs that will scan the target pods in their namespaces. |
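To review or pre-provision the access listed in the table, the permissions can be expressed as Kubernetes RBAC objects. The sketch below is an illustration under assumptions, not the chart's actual manifests: the Helm chart creates its own RBAC resources, the object names (`kubeclarity-example-*`) and the function `kubeclarity_rbac_example` are hypothetical, and the RoleBindings/ClusterRoleBinding to KubeClarity's service account are omitted. It only prints YAML, so the output can be inspected or piped to `kubectl apply -f -`.

```sh
#!/bin/sh
# kubeclarity_rbac_example [DEPLOYMENT_NAMESPACE] [CREDS_SECRET_NAMESPACE]
# Prints a hypothetical ClusterRole + Roles mirroring the permission table.
kubeclarity_rbac_example() {
  deploy_ns="${1:-kubeclarity}"
  creds_ns="${2:-$deploy_ns}"
  cat <<EOF
# Cluster scope: list pods and namespaces, create & delete scanner jobs.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kubeclarity-example-runtime-scan
rules:
  - apiGroups: [""]
    resources: ["pods", "namespaces"]
    verbs: ["list"]
  - apiGroups: ["batch"]
    resources: ["jobs"]
    verbs: ["create", "delete"]
---
# Deployment namespace: read the scanner job template config maps.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: kubeclarity-example-config
  namespace: ${deploy_ns}
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "list"]
---
# CREDS_SECRET_NAMESPACE: read image pull secrets.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: kubeclarity-example-creds
  namespace: ${creds_ns}
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list"]
EOF
}
```

Splitting secrets and config maps into separate Roles keeps secret access confined to CREDS_SECRET_NAMESPACE when it differs from the deployment namespace.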

- Uninstall KubeClarity with Helm:

helm uninstall kubeclarity -n kubeclarity

- Clean up resources. By default, Helm does not remove the PVCs and PVs for the StatefulSets. Run the following command to delete them all:

kubectl delete pvc -l app.kubernetes.io/instance=kubeclarity -n kubeclarity

- Build the UI & backend and start the backend locally (2 options):

- Using docker:

- Build the UI and backend (the image tag is set using VERSION):

VERSION=test make docker-backend

- Run the backend using demo data:

docker run -p 8080:8080 -e FAKE_RUNTIME_SCANNER=true -e FAKE_DATA=true -e ENABLE_DB_INFO_LOGS=true -e DATABASE_DRIVER=LOCAL ghcr.io/openclarity/kubeclarity:test run

- Local build:

- Build the UI and backend:

make ui && make backend

- Copy the built site:

cp -r ./ui/build ./site

- Run the backend locally using demo data:

FAKE_RUNTIME_SCANNER=true DATABASE_DRIVER=LOCAL FAKE_DATA=true ENABLE_DB_INFO_LOGS=true ./backend/bin/backend run

- Open the KubeClarity UI in the browser: http://localhost:8080/

KubeClarity includes a CLI that can be run locally and is especially useful for CI/CD pipelines. It can analyze images and directories to generate an SBOM and scan it for vulnerabilities. The results can be exported to the KubeClarity backend.

Binary Distribution

Download the release distribution for your OS from the releases page

Unpack the kubeclarity-cli binary, add it to your PATH, and you are good to go!

Docker Image

A Docker image is available at ghcr.io/openclarity/kubeclarity-cli, with the list of available tags here.

Local Compilation

make cli

Copy ./cli/bin/cli to a directory on your PATH as kubeclarity-cli.

Usage:

kubeclarity-cli analyze <image/directory name> --input-type <dir|file|image(default)> -o <output file or stdout>

Example:

kubeclarity-cli analyze --input-type image nginx:latest -o nginx.sbom

Optionally, the list of content analyzers to use can be configured using the ANALYZER_LIST env variable, with names separated by a space (e.g. ANALYZER_LIST="<analyzer 1 name> <analyzer 2 name>").

Example:

ANALYZER_LIST="syft gomod" kubeclarity-cli analyze --input-type image nginx:latest -o nginx.sbom

Usage:

kubeclarity-cli scan <image/sbom/directory/file name> --input-type <sbom|dir|file|image(default)> -f <output file>

Example:

kubeclarity-cli scan nginx.sbom --input-type sbom

Optionally, the list of vulnerability scanners to use can be configured using the SCANNERS_LIST env variable, with names separated by a space (e.g. SCANNERS_LIST="<Scanner1 name> <Scanner2 name>").

Example:

SCANNERS_LIST="grype trivy" kubeclarity-cli scan nginx.sbom --input-type sbom
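In a CI job the two commands are typically chained: generate the SBOM once, then scan that SBOM rather than analyzing the image again. A minimal POSIX shell sketch, assuming the CLI is on PATH as `kubeclarity-cli`; the function name `sbom_and_scan` and the `KUBECLARITY_CLI` override are hypothetical, and the default analyzer/scanner lists are simply the ones from the examples above.

```sh
#!/bin/sh
KUBECLARITY_CLI="${KUBECLARITY_CLI:-kubeclarity-cli}"

# sbom_and_scan IMAGE SBOM_FILE
# Runs in a subshell so the exported lists do not leak into the caller.
sbom_and_scan() (
  image="$1"
  sbom="$2"
  # Defaults taken from the README examples; export your own lists to override.
  export ANALYZER_LIST="${ANALYZER_LIST:-syft gomod}"
  export SCANNERS_LIST="${SCANNERS_LIST:-grype trivy}"

  # Stop before scanning if SBOM generation failed.
  "$KUBECLARITY_CLI" analyze --input-type image "$image" -o "$sbom" || exit 1
  "$KUBECLARITY_CLI" scan "$sbom" --input-type sbom
)
```

Usage: `sbom_and_scan nginx:latest nginx.sbom`. The SBOM file stays on disk, so later pipeline stages can reuse it.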

To export CLI results to the KubeClarity backend, you need to use an application ID as defined by the KubeClarity backend. The application ID can be found in the Applications screen in the UI or by using the KubeClarity API.

# The SBOM can be exported to the KubeClarity backend by setting the BACKEND_HOST env variable and the -e flag.

# Note: Until TLS is supported, BACKEND_DISABLE_TLS=true should be set.

BACKEND_HOST=<KubeClarity backend address> BACKEND_DISABLE_TLS=true kubeclarity-cli analyze <image> --application-id <application ID> -e -o <SBOM output file>

# For example:

BACKEND_HOST=localhost:9999 BACKEND_DISABLE_TLS=true kubeclarity-cli analyze nginx:latest --application-id 23452f9c-6e31-5845-bf53-6566b81a2906 -e -o nginx.sbom

# The vulnerability scan result can be exported to the KubeClarity backend by setting the BACKEND_HOST env variable and the -e flag.

# Note: Until TLS is supported, BACKEND_DISABLE_TLS=true should be set.

BACKEND_HOST=<KubeClarity backend address> BACKEND_DISABLE_TLS=true kubeclarity-cli scan <image> --application-id <application ID> -e

# For example:

SCANNERS_LIST="grype" BACKEND_HOST=localhost:9999 BACKEND_DISABLE_TLS=true kubeclarity-cli scan nginx.sbom --input-type sbom --application-id 23452f9c-6e31-5845-bf53-6566b81a2906 -e
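The two export commands can be wrapped so a pipeline fails fast when the backend address or application ID is missing. A sketch under the same assumption as above (TLS disabled until it is supported); `export_to_backend` and `KUBECLARITY_CLI` are hypothetical names, and BACKEND_HOST is expected to be set by the pipeline, for example to `localhost:9999` when port-forwarding.

```sh
#!/bin/sh
KUBECLARITY_CLI="${KUBECLARITY_CLI:-kubeclarity-cli}"

# export_to_backend IMAGE APPLICATION_ID SBOM_FILE
export_to_backend() (
  image="$1"
  app_id="$2"
  sbom="$3"
  if [ -z "${BACKEND_HOST:-}" ] || [ -z "$app_id" ]; then
    echo "BACKEND_HOST and an application ID are required" >&2
    exit 1
  fi
  # Export so the CLI process sees them even if the caller did not export.
  export BACKEND_HOST BACKEND_DISABLE_TLS=true

  # Generate the SBOM and export it (-e) under the given application ID...
  "$KUBECLARITY_CLI" analyze "$image" --application-id "$app_id" -e -o "$sbom" || exit 1
  # ...then scan that SBOM and export the vulnerability results too.
  "$KUBECLARITY_CLI" scan "$sbom" --input-type sbom --application-id "$app_id" -e
)
```

Usage: `BACKEND_HOST=localhost:9999 export_to_backend nginx:latest 23452f9c-6e31-5845-bf53-6566b81a2906 nginx.sbom`.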

# Local docker images can be analyzed using the LOCAL_IMAGE_SCAN env variable

# For example:

LOCAL_IMAGE_SCAN=true kubeclarity-cli analyze nginx:latest -o nginx.sbom

# Local docker images can be scanned using the LOCAL_IMAGE_SCAN env variable

# For example:

LOCAL_IMAGE_SCAN=true kubeclarity-cli scan nginx:latest

The KubeClarity cli can read a config file that stores credentials for private registries.

Example registry section of the config file:

registry:
  auths:
    - authority: <registry 1>
      username: <username for registry 1>
      password: <password for registry 1>
    - authority: <registry 2>
      token: <token for registry 2>

Example registry config without an authority (in this case, these credentials are used for all registries):

registry:
  auths:
    - username: <username>
      password: <password>

# The default config path is $HOME/.kubeclarity or it can be specified by `--config` command line flag.

# kubeclarity-cli <scan/analyze> <image name> --config <kubeclarity config path>

# For example:

kubeclarity-cli scan registry/nginx:private --config $HOME/own-kubeclarity-config
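When credentials come from CI secrets, the config file can be generated at runtime instead of being committed. A minimal sketch: `write_registry_config` and `yaml_sq` are hypothetical helpers, only the `registry` section shown above is written (assuming the other config sections may be omitted), and values are emitted as YAML single-quoted strings so passwords containing `:` or `#` are preserved.

```sh
#!/bin/sh
# yaml_sq VALUE: print VALUE as a YAML single-quoted scalar ('' escapes ').
yaml_sq() {
  printf "'%s'" "$(printf '%s' "$1" | sed "s/'/''/g")"
}

# write_registry_config PATH AUTHORITY USERNAME PASSWORD
# Subshell body keeps the umask change local; 077 makes a newly created
# file readable and writable by the owner only.
write_registry_config() (
  umask 077
  cat > "$1" <<EOF
registry:
  auths:
    - authority: $(yaml_sq "$2")
      username: $(yaml_sq "$3")
      password: $(yaml_sq "$4")
EOF
)
```

Usage: `write_registry_config "$HOME/own-kubeclarity-config" "$REG_HOST" "$REG_USER" "$REG_PASS"`, followed by the `--config` command above (the `REG_*` variable names are illustrative).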

KubeClarity uses k8schain from google/go-containerregistry to authenticate to registries. If the necessary service credentials are not discoverable by k8schain, they can be defined via the secrets described below.

In addition, if the service credentials are not located in the "kubeclarity" namespace, set CREDS_SECRET_NAMESPACE in the KubeClarity Deployment. When using the Helm chart, CREDS_SECRET_NAMESPACE is set to the release namespace in which KubeClarity is installed.

Create an AWS IAM user with AmazonEC2ContainerRegistryFullAccess permissions.

Use the user credentials (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_DEFAULT_REGION) to create the following secret:

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: ecr-sa
  namespace: kubeclarity
type: Opaque
data:
  AWS_ACCESS_KEY_ID: $(echo -n 'XXXX'| base64 -w0)
  AWS_SECRET_ACCESS_KEY: $(echo -n 'XXXX'| base64 -w0)
  AWS_DEFAULT_REGION: $(echo -n 'XXXX'| base64 -w0)
EOF

Note:

- Secret name must be ecr-sa
- Secret data keys must be set to AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION
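As an alternative to hand-encoding base64 in a heredoc, kubectl can build the same `ecr-sa` secret from literals and encode the values itself. A sketch, assuming the three AWS variables are already set in the shell (for example by a CI secret store); `create_ecr_secret` and the `KUBECTL` override are hypothetical. Rendering with `--dry-run=client -o yaml` and piping into `kubectl apply` lets a re-run update the secret instead of failing because it already exists.

```sh
#!/bin/sh
KUBECTL="${KUBECTL:-kubectl}"

# create_ecr_secret [NAMESPACE]  (defaults to kubeclarity)
create_ecr_secret() {
  ns="${1:-kubeclarity}"
  # The secret name and the three data keys are fixed by KubeClarity.
  for var in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION; do
    eval "val=\${$var:-}"
    if [ -z "$val" ]; then
      echo "$var is not set" >&2
      return 1
    fi
  done
  "$KUBECTL" -n "$ns" create secret generic ecr-sa \
    --from-literal=AWS_ACCESS_KEY_ID="$AWS_ACCESS_KEY_ID" \
    --from-literal=AWS_SECRET_ACCESS_KEY="$AWS_SECRET_ACCESS_KEY" \
    --from-literal=AWS_DEFAULT_REGION="$AWS_DEFAULT_REGION" \
    --dry-run=client -o yaml | "$KUBECTL" apply -f -
}
```

Note that `--from-literal` puts the values on kubectl's command line, where other users of a shared machine may be able to see them in the process list.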

Create a Google service account with Artifact Registry Reader permissions.

Use the service account JSON file to create the following secret:

kubectl -n kubeclarity create secret generic --from-file=sa.json gcr-sa

Note:

- Secret name must be gcr-sa
- sa.json must be the name of the service account JSON file when generating the secret
- KubeClarity uses application default credentials. These only work when running KubeClarity on GCP.

# An additional SBOM will be merged into the final results when '--merge-sbom' is defined during analysis. The input SBOM can be in CycloneDX XML or CycloneDX JSON format.

# For example:

ANALYZER_LIST="syft" kubeclarity-cli analyze nginx:latest -o nginx.sbom --merge-sbom inputsbom.xml
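The merge option is what lets SBOMs from different CI/CD stages be combined, for example a source-directory SBOM from the build job merged into the image SBOM produced later. A sketch with hypothetical function names: stage 1 pins ANALYZER_OUTPUT_FORMAT to cyclonedx-json because `--merge-sbom` accepts only CycloneDX input, and stage 2 refuses to run if the earlier SBOM is missing or empty. How the file travels between jobs (CI artifacts, a shared volume) is left to the pipeline.

```sh
#!/bin/sh
KUBECLARITY_CLI="${KUBECLARITY_CLI:-kubeclarity-cli}"

# Stage 1 (e.g. build job): sbom_build_stage SOURCE_DIR OUTPUT
# Pinned to CycloneDX JSON so stage 2 can merge it even if a pipeline-wide
# ANALYZER_OUTPUT_FORMAT selects another format.
sbom_build_stage() (
  export ANALYZER_OUTPUT_FORMAT=cyclonedx-json
  "$KUBECLARITY_CLI" analyze "$1" --input-type dir -o "$2"
)

# Stage 2 (e.g. image job): sbom_image_stage IMAGE PREV_SBOM OUTPUT
sbom_image_stage() (
  if [ ! -s "$2" ]; then
    echo "missing SBOM from previous stage: $2" >&2
    exit 1
  fi
  "$KUBECLARITY_CLI" analyze "$1" --input-type image -o "$3" --merge-sbom "$2"
)
```

Usage: `sbom_build_stage ./src build.sbom` in the build job, then `sbom_image_stage nginx:latest build.sbom nginx.sbom` in the image job; the merged `nginx.sbom` can then be scanned with `--input-type sbom`.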

The kubeclarity-cli analyze command can format the resulting SBOM into different formats if required to integrate with another system. The supported formats are:

| Format | Configuration Name |
|---|---|
| CycloneDX JSON (default) | cyclonedx-json |
| CycloneDX XML | cyclonedx-xml |
| SPDX JSON | spdx-json |
| SPDX Tag Value | spdx-tv |
| Syft JSON | syft-json |

WARNING

KubeClarity processes CycloneDX internally; the other formats are supported through conversion. The conversion process can be lossy due to incompatibilities between formats, so not all fields/information are guaranteed to be present in the resulting output.

To configure the kubeclarity-cli to use a format other than the default, the ANALYZER_OUTPUT_FORMAT environment variable can be used with the configuration name from above:

ANALYZER_OUTPUT_FORMAT="spdx-json" kubeclarity-cli analyze nginx:latest -o nginx.sbom
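To catch a mistyped format name before any image is pulled, a wrapper can check it against the table above first. A sketch; `analyze_as` is a hypothetical helper, and the accepted names are exactly the configuration names listed in the table.

```sh
#!/bin/sh
KUBECLARITY_CLI="${KUBECLARITY_CLI:-kubeclarity-cli}"

# analyze_as FORMAT IMAGE OUTPUT
# Exit status 2 means the format name is not one of the supported ones.
analyze_as() (
  case "$1" in
    cyclonedx-json|cyclonedx-xml|spdx-json|spdx-tv|syft-json) ;;
    *)
      echo "unsupported SBOM format: $1" >&2
      exit 2
      ;;
  esac
  export ANALYZER_OUTPUT_FORMAT="$1"
  "$KUBECLARITY_CLI" analyze "$2" -o "$3"
)
```

Usage: `analyze_as spdx-json nginx:latest nginx.sbom`.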

When running the KubeClarity CLI to scan for vulnerabilities, the CLI needs to download the relevant vulnerability DBs to the location where it is running. Running the CLI in a CI/CD pipeline therefore downloads the DBs on each run, wasting time and bandwidth. For this reason, several of the supported scanners have a remote mode in which a server is responsible for the DB management and possibly the scanning of the artifacts.

Note

The examples below cover each scanner separately, but they can be combined to run together, just as in non-remote mode.

The Trivy scanner supports remote mode using the Trivy server. The Trivy server can be deployed as documented here: trivy client-server mode. Instructions to install the Trivy CLI are available here: trivy install. The Aqua team provides an official container image that can be used to run the server in Kubernetes/Docker, which we'll use in the examples here.

To start the server:

docker run -p 8080:8080 --rm aquasec/trivy:0.41.0 server --listen 0.0.0.0:8080

To run a scan using the server:

SCANNERS_LIST="trivy" SCANNER_TRIVY_SERVER_ADDRESS="http://<trivy server address>:8080" ./kubeclarity_cli scan --input-type sbom nginx.sbom

The Trivy server also provides token-based authentication to prevent unauthorized use of a Trivy server instance. You can enable it by running the server with the extra flag:

docker run -p 8080:8080 --rm aquasec/trivy:0.41.0 server --listen 0.0.0.0:8080 --token mytoken

and passing the token to the scanner:

SCANNERS_LIST="trivy" SCANNER_TRIVY_SERVER_ADDRESS="http://<trivy server address>:8080" SCANNER_TRIVY_SERVER_TOKEN="mytoken" ./kubeclarity_cli scan --input-type sbom nginx.sbom
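The two Trivy invocations differ only in whether a token is passed, so a pipeline can choose between them from its own settings. A sketch: `scan_with_trivy_server`, `CI_TRIVY_ADDR` and `CI_TRIVY_TOKEN` are hypothetical names, and SCANNER_TRIVY_SERVER_TOKEN is exported only when a token is configured.

```sh
#!/bin/sh
KUBECLARITY_CLI="${KUBECLARITY_CLI:-kubeclarity-cli}"

# scan_with_trivy_server SBOM_FILE
# Reads the hypothetical CI_TRIVY_ADDR (required, e.g. http://trivy:8080)
# and CI_TRIVY_TOKEN (optional) settings.
scan_with_trivy_server() (
  if [ -z "${CI_TRIVY_ADDR:-}" ]; then
    echo "CI_TRIVY_ADDR is not set" >&2
    exit 1
  fi
  export SCANNERS_LIST="trivy" SCANNER_TRIVY_SERVER_ADDRESS="$CI_TRIVY_ADDR"
  # Only send a token when one is configured (server started with --token).
  if [ -n "${CI_TRIVY_TOKEN:-}" ]; then
    export SCANNER_TRIVY_SERVER_TOKEN="$CI_TRIVY_TOKEN"
  fi
  "$KUBECLARITY_CLI" scan --input-type sbom "$1"
)
```

Usage: `CI_TRIVY_ADDR=http://<trivy server address>:8080 scan_with_trivy_server nginx.sbom`.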

Grype supports remote mode using grype-server, a RESTful Grype wrapper that provides an API which receives an SBOM and returns the Grype scan results for that SBOM. Grype-server ships as a container image, so it can be run in Kubernetes or standalone via Docker.

To start the server:

docker run -p 9991:9991 --rm gcr.io/eticloud/k8sec/grype-server:v0.1.5

To run a scan using the server:

SCANNERS_LIST="grype" SCANNER_GRYPE_MODE="remote" SCANNER_REMOTE_GRYPE_SERVER_ADDRESS="<grype server address>:9991" ./kubeclarity_cli scan --input-type sbom nginx.sbom

If the Grype server is deployed with TLS, you can override the default URL scheme like this:

SCANNERS_LIST="grype" SCANNER_GRYPE_MODE="remote" SCANNER_REMOTE_GRYPE_SERVER_ADDRESS="<grype server address>:9991" SCANNER_REMOTE_GRYPE_SERVER_SCHEMES="https" ./kubeclarity_cli scan --input-type sbom nginx.sbom
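As noted above, the remote scanners can be combined. A sketch that runs Grype and Trivy remotely in one scan by merging the environment variables from both examples; `remote_scan` and its argument order are hypothetical, and SCANNER_REMOTE_GRYPE_SERVER_SCHEMES is set only when a scheme is passed, leaving the CLI's default otherwise.

```sh
#!/bin/sh
KUBECLARITY_CLI="${KUBECLARITY_CLI:-kubeclarity-cli}"

# remote_scan SBOM_FILE GRYPE_ADDRESS TRIVY_URL [GRYPE_SCHEME]
# GRYPE_ADDRESS is host:port (e.g. grype-server:9991); TRIVY_URL includes the
# scheme (e.g. http://trivy-server:8080), matching the single-scanner examples.
remote_scan() (
  export SCANNERS_LIST="grype trivy"
  export SCANNER_GRYPE_MODE="remote"
  export SCANNER_REMOTE_GRYPE_SERVER_ADDRESS="$2"
  export SCANNER_TRIVY_SERVER_ADDRESS="$3"
  # Override the Grype URL scheme (e.g. https) only when asked to.
  if [ -n "${4:-}" ]; then
    export SCANNER_REMOTE_GRYPE_SERVER_SCHEMES="$4"
  fi
  "$KUBECLARITY_CLI" scan --input-type sbom "$1"
)
```

Usage: `remote_scan nginx.sbom <grype server address>:9991 http://<trivy server address>:8080 https` for a TLS-enabled Grype server; omit the last argument otherwise.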

See example configuration here

- Supports Docker Image Manifest V2, Schema 2 (https://docs.docker.com/registry/spec/manifest-v2-2/). It will fail to scan earlier versions.

- Integration with additional content analyzers (SBOM generators)

- Integration with additional vulnerability scanners

- CIS Docker benchmark in UI

- Image signing using Cosign

- CI/CD metadata signing and attestation using Cosign and in-toto (supply chain security)

- System settings and user management

Pull requests and bug reports are welcome.

For larger changes please create an Issue in GitHub first to discuss your proposed changes and possible implications.

For more details, please see the Contribution guidelines for this project.