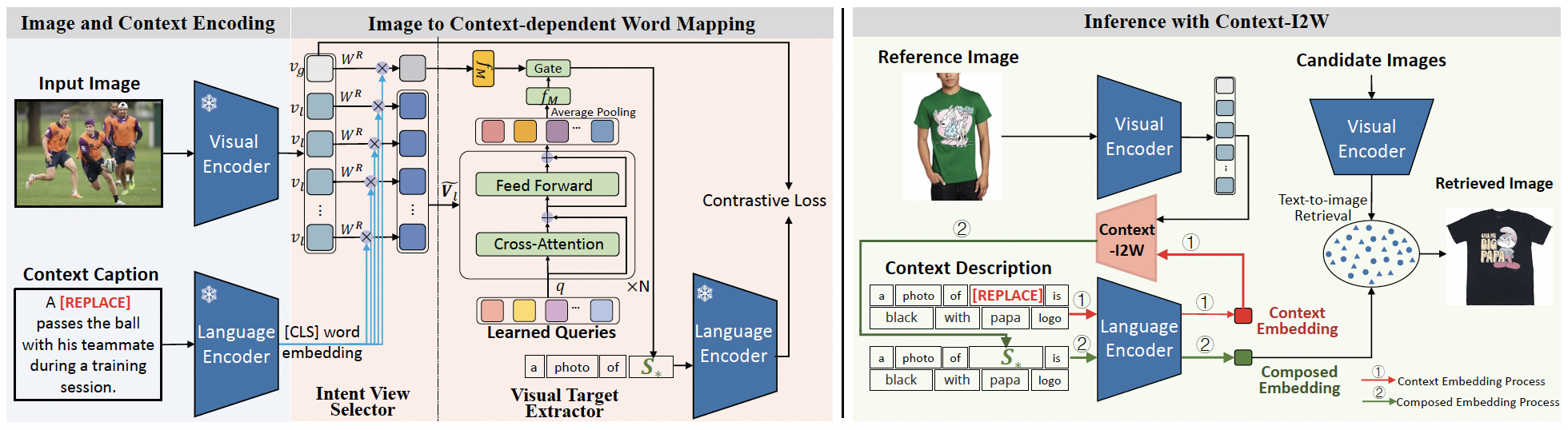

Unlike the Composed Image Retrieval (CIR) task, which requires expensive labels to train task-specific models, Zero-Shot Composed Image Retrieval (ZS-CIR) involves diverse tasks with a broad range of visual-content manipulation intents related to domain, scene, object, and attribute. The key challenge for ZS-CIR is to learn a more accurate image representation that adaptively attends to the reference image according to each manipulation description. In this paper, we propose a novel context-dependent mapping network, named Context-I2W, that adaptively converts description-relevant Image information into a pseudo-word token, which is then composed with the description for accurate ZS-CIR. Specifically, an Intent View Selector first dynamically learns a rotation rule to map the same image to a task-specific manipulation view. A Visual Target Extractor then captures local information covering the main targets in ZS-CIR tasks, guided by multiple learnable queries. These two complementary modules work together to map an image to a context-dependent pseudo-word token without extra supervision. Our model shows strong generalization on four ZS-CIR tasks: domain conversion, object composition, object manipulation, and attribute manipulation. It obtains consistent and significant performance gains of 1.88% to 3.60% over the best existing methods and achieves new state-of-the-art results on ZS-CIR.
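The abstract describes the mapping only at a high level. As a rough illustration of the general idea (learnable queries cross-attending over image patch features to produce one pseudo-word token that is then placed in a text prompt), here is a dependency-free toy sketch. All names (`attend`, `context_dependent_token`, `compose_prompt`) and the prompt template are hypothetical. Step 1 is a simple description-weighted re-weighting of patches standing in for the Intent View Selector; it does not reproduce the learned rotation, and nothing here is taken from this repository's code.

```python
import math
from typing import List, Sequence

Vec = List[float]


def softmax(scores: Sequence[float]) -> Vec:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Sequence[float], tokens: Sequence[Sequence[float]]) -> Vec:
    """Scaled dot-product attention of one query; tokens serve as both keys and values."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([scale * sum(q * t for q, t in zip(query, tok)) for tok in tokens])
    dim = len(tokens[0])
    return [sum(w * tok[d] for w, tok in zip(weights, tokens)) for d in range(dim)]


def context_dependent_token(
    patches: Sequence[Sequence[float]],
    description: Sequence[float],
    queries: Sequence[Sequence[float]],
) -> Vec:
    """Hypothetical toy mapping from patch features to one pseudo-word embedding.

    Step 1 (stand-in, NOT the paper's learned rotation): re-weight patches by their
    dot-product relevance to the description embedding.
    Step 2 (query-based extraction): each learnable query attends over the
    re-weighted patches; the per-query outputs are averaged into one token.
    """
    relevance = softmax([sum(d * p for d, p in zip(description, patch)) for patch in patches])
    n = len(patches)
    # Multiply by n so that uniform relevance leaves the patches unchanged.
    guided = [[n * r * x for x in patch] for r, patch in zip(relevance, patches)]
    outputs = [attend(q, guided) for q in queries]
    dim = len(patches[0])
    return [sum(o[d] for o in outputs) / len(outputs) for d in range(dim)]


def compose_prompt(description: str, placeholder: str = "*") -> str:
    """Place the pseudo-word placeholder in a text prompt (template is an assumption)."""
    return f"a photo of {placeholder}, {description}"


if __name__ == "__main__":
    patches = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # three 2-d patch features
    description = [2.0, 0.0]                          # toy description embedding
    queries = [[1.0, 0.0], [0.0, 1.0]]                # two "learnable" queries, fixed here
    print(context_dependent_token(patches, description, queries))
    print(compose_prompt("turned into a watercolor painting"))
    # prints: a photo of *, turned into a watercolor painting
```

In a trained model the queries and any projections would be learned parameters, and in Pic2Word-style methods the placeholder's word embedding is typically swapped for the computed token inside the text encoder. Here everything is a fixed list so the data flow is easy to follow.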

This repository contains the code for the paper "Context-I2W: Mapping Images to Context-dependent words for Accurate Zero-Shot Composed Image Retrieval".

- We treat manipulation descriptions and learnable queries as multi-level constraints for filtering visual information, which sheds new light on the vision-to-language alignment mechanism.

- Our Intent View Selector and Visual Target Extractor selectively map images depending on the context of the manipulation description, improving the CLIP language encoder's ability to generalize images to complex manipulation descriptions.

- Context-I2W is the first CIR method to mask the object name while keeping the rest of the original caption. It builds on the insight that the training objective should pay more attention to context clues, an insight we hope will motivate future work.
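The third point involves editing training captions. Below is a minimal sketch of masking an object name while leaving the rest of the caption as context. It assumes the object name is already known and uses `*` as a hypothetical mask token; `mask_object_name` is an illustrative helper, and the repository's actual masking procedure and token may differ.

```python
import re


def mask_object_name(caption: str, object_name: str, mask_token: str = "*") -> str:
    """Replace whole-word, case-insensitive occurrences of object_name with mask_token.

    Everything else in the caption (the context clues) is kept unchanged.
    A function replacement is used so backslashes in mask_token are taken literally.
    """
    pattern = re.compile(r"\b" + re.escape(object_name) + r"\b", flags=re.IGNORECASE)
    return pattern.sub(lambda _match: mask_token, caption)


if __name__ == "__main__":
    print(mask_object_name("A dog is running on the beach with another Dog.", "dog"))
    # prints: A * is running on the beach with another *.
```

Matching on word boundaries means "dogs" or "hotdog" are left alone; a real pipeline would need a policy for plurals and synonyms.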

- Inference code and checkpoints
- Training code

We use the Conceptual Captions URLs to train the model. See open_clip for how to download the dataset.

The training data directories must be placed in the root of this repo and structured as follows:

```
cc_data
├── train  ## training image directories
└── val    ## validation image directories
cc
├── Train_GCC-training_output.csv               ## training data list
└── Validation_GCC-1.1.0-Validation_output.csv  ## validation data list
```

See README to prepare the test datasets.
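Before training, it can help to confirm that the layout above is in place. A small sketch, assuming both `cc_data` and `cc` sit directly in the repo root (as the tree suggests) and that the two CSV files are the only required lists; `missing_training_data` is a hypothetical helper, not part of this repo:

```python
from pathlib import Path
from typing import List

# Expected entries, taken from the directory tree (assumed relative to the repo root).
EXPECTED_DIRS = ["cc_data/train", "cc_data/val"]
EXPECTED_FILES = [
    "cc/Train_GCC-training_output.csv",
    "cc/Validation_GCC-1.1.0-Validation_output.csv",
]


def missing_training_data(repo_root: str) -> List[str]:
    """Return expected paths absent under repo_root (an empty list means the layout is complete)."""
    root = Path(repo_root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    problems = missing_training_data(".")
    if problems:
        print("Missing:", *problems, sep="\n  ")
    else:
        print("Training data layout looks complete.")
```

This only checks that the paths exist; it does not validate the CSV contents or the images inside `train` and `val`.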

The trained model is available on Google Drive.

See open_clip for installation details; the same environment should work for this repo. setenv.sh is the script we used to set up the environment with virtualenv.

Also run the following to activate the virtualenv, add the src directory to PYTHONPATH, and silence semaphore_tracker warnings:

```bash
. env3/bin/activate
export PYTHONPATH="$PYTHONPATH:$PWD/src"
export PYTHONWARNINGS='ignore:semaphore_tracker:UserWarning'
```

Evaluation on COCO, ImageNet, or CIRR:

```bash
# Set $data_name to coco, imgnet, or cirr
python src/eval_retrieval.py \
    --openai-pretrained \
    --resume /path/to/checkpoints \
    --eval-mode $data_name \
    --gpu $gpu_id
```

Evaluation on Fashion-IQ (shirt, dress, or toptee):

```bash
# Set $cloth_type to shirt, dress, or toptee
python src/eval_retrieval.py \
    --openai-pretrained \
    --resume /path/to/checkpoints \
    --eval-mode fashion \
    --source $cloth_type \
    --gpu $gpu_id
```

If you find this repository useful, please consider citing:

```bibtex
@inproceedings{tang2024context,
  title={Context-I2W: Mapping Images to Context-dependent Words for Accurate Zero-Shot Composed Image Retrieval},
  author={Tang, Yuanmin and Yu, Jing and Gai, Keke and Zhuang, Jiamin and Xiong, Gang and Hu, Yue and Wu, Qi},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={38},
  number={6},
  pages={5180--5188},
  year={2024}
}
```

- Thanks to the Pic2Word authors; our baseline code is adapted from their repository.