Disrupt Hogwarts is a project done during a 2-week stay at Ecole 42. The aim was to build a machine-learning-powered version of Harry Potter's Sorting Hat (*Choixpeau magique* in French) that tells which Hogwarts House you belong to based on a given set of features.

More prosaically, the project consisted of implementing a logistic regression model from scratch (no scikit-learn).

The model was then wrapped in a Flask API (GitHub repo available here).

Run `git clone https://github.com/SachaIZADI/DisruptHogwarts.git` in your shell, then `cd DisruptHogwarts`.

Then run ``mkvirtualenv --python=`which python3` hogwarts`` to create a virtual environment (`mkvirtualenv` comes from virtualenvwrapper; you can leave the virtualenv at any time by running `deactivate`).

Finally, run `pip3 install -r requirements.txt`, and you're ready to play with the project.

/!\ Make sure to use Python 3, not Python 2. /!\

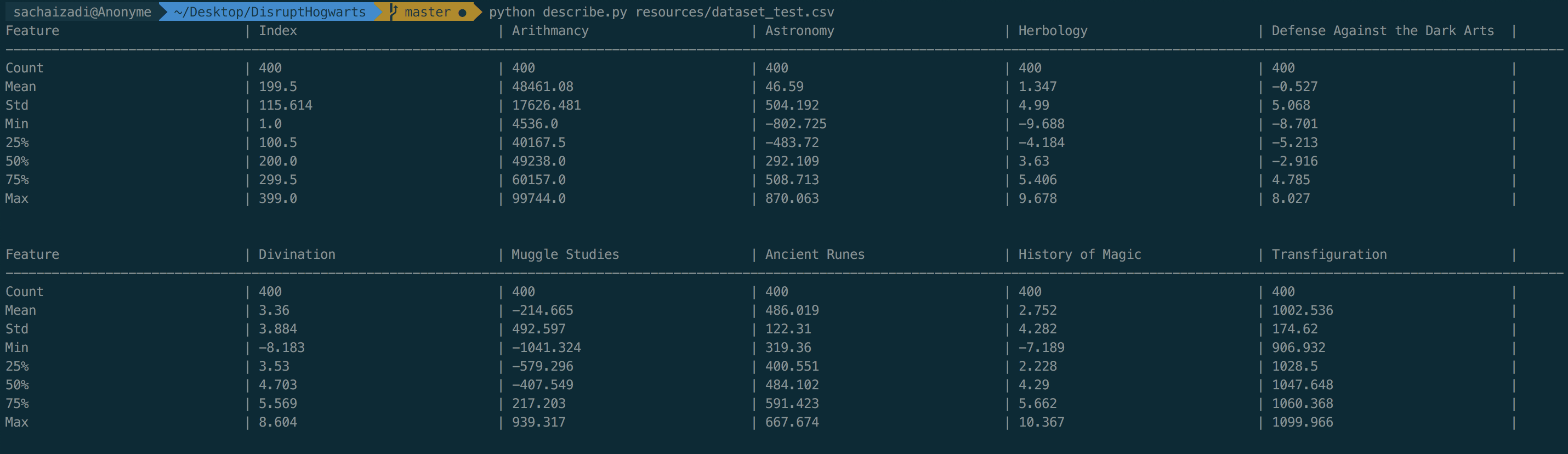

Input: Run `python3 describe.py resources/dataset_train.csv` in your terminal.

Output:

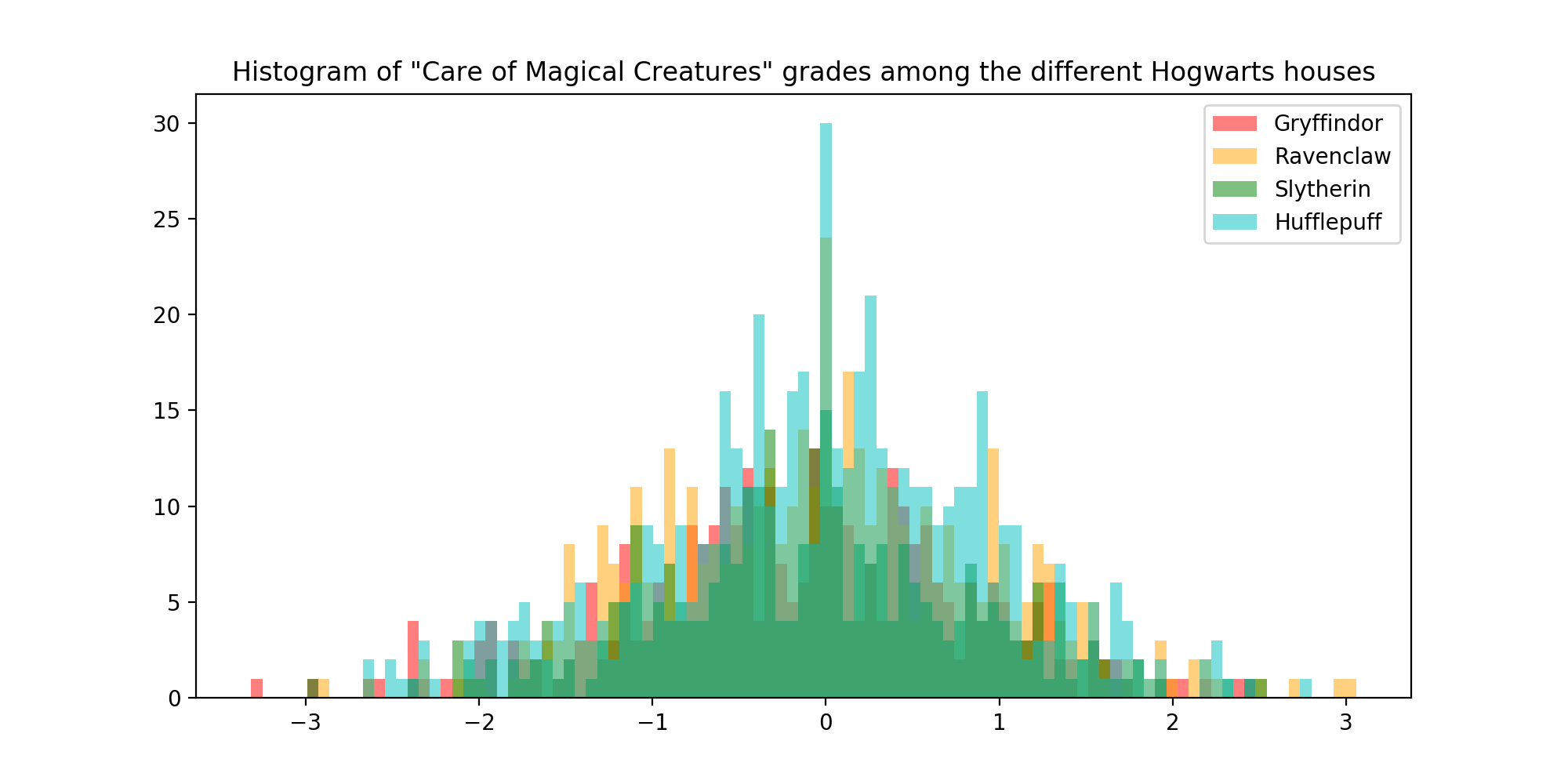
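Since the project avoids ready-made ML tooling, here is a minimal sketch of how describe-style statistics could be computed by hand. It assumes `describe.py` reports pandas-like fields (count, mean, std, min, quartiles, max), which this README does not confirm; `describe_column` is a hypothetical helper, not the project's code.

```python
import math


# Hypothetical helper: assumes describe.py reports pandas-style summary fields.
def describe_column(values):
    """Summary statistics of one numeric column, computed without library helpers.

    Missing entries (None or NaN) are skipped, as pandas' describe() does.
    """
    xs = sorted(v for v in values if v is not None and not math.isnan(v))
    n = len(xs)
    if n == 0:
        raise ValueError("column has no numeric values")
    mean = sum(xs) / n
    # Sample standard deviation (n - 1 in the denominator), like pandas' default.
    std = math.sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1)) if n > 1 else float("nan")

    def percentile(q):
        # Linear interpolation between the two closest ranks.
        k = (n - 1) * q
        lo, hi = math.floor(k), math.ceil(k)
        return xs[lo] + (xs[hi] - xs[lo]) * (k - lo)

    return {
        "count": n, "mean": mean, "std": std, "min": xs[0],
        "25%": percentile(0.25), "50%": percentile(0.5),
        "75%": percentile(0.75), "max": xs[-1],
    }


stats = describe_column([1.0, 2.0, float("nan"), 4.0, 5.0])
print(stats["mean"], stats["50%"])  # 3.0 3.0
```

Running it over every numeric column of the CSV would give one summary per feature.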

Input: Run `python3 histogram.py` in your terminal.

Output:

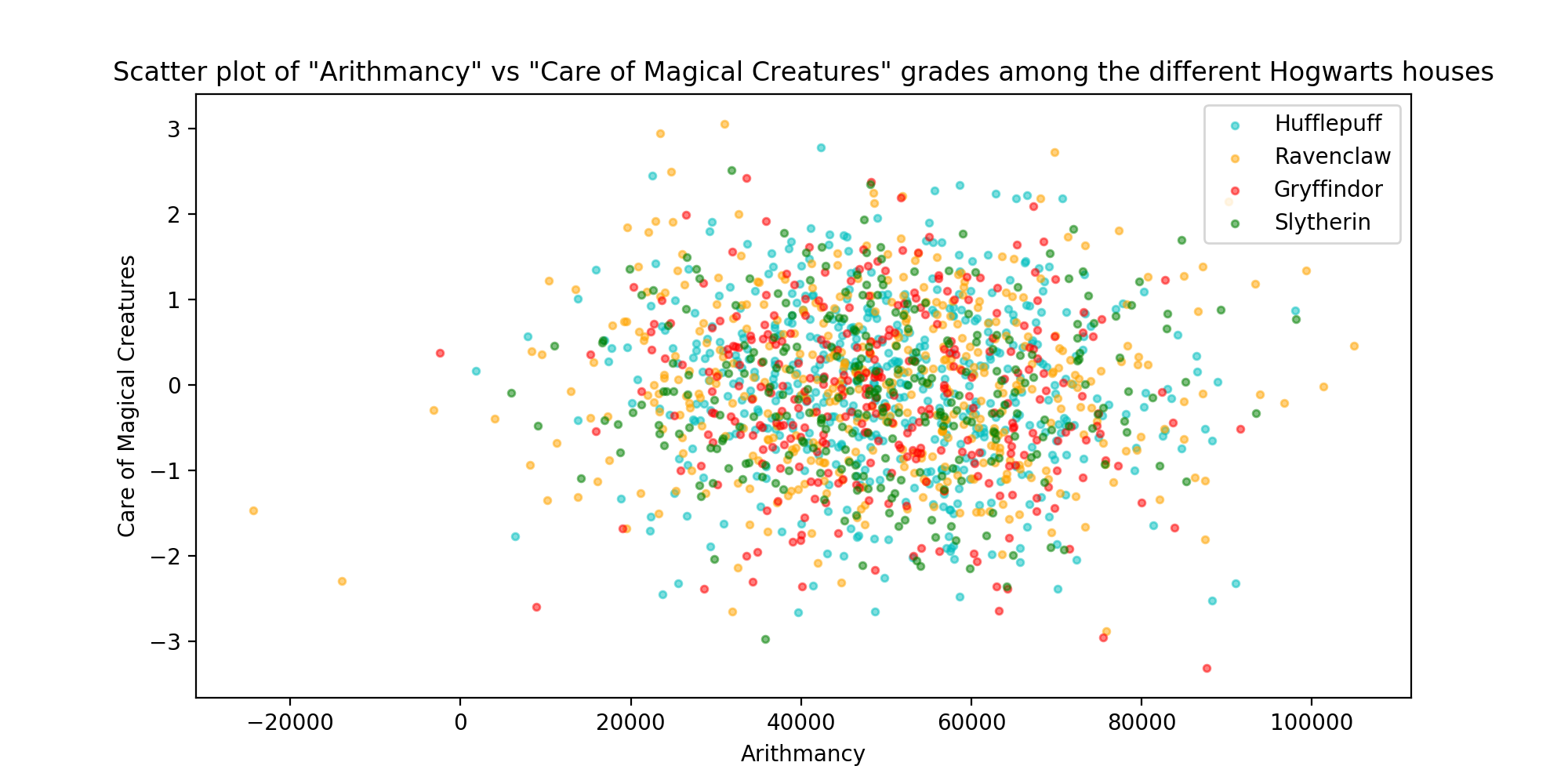

Input: Run `python3 scatter_plot.py` in your terminal.

Output:

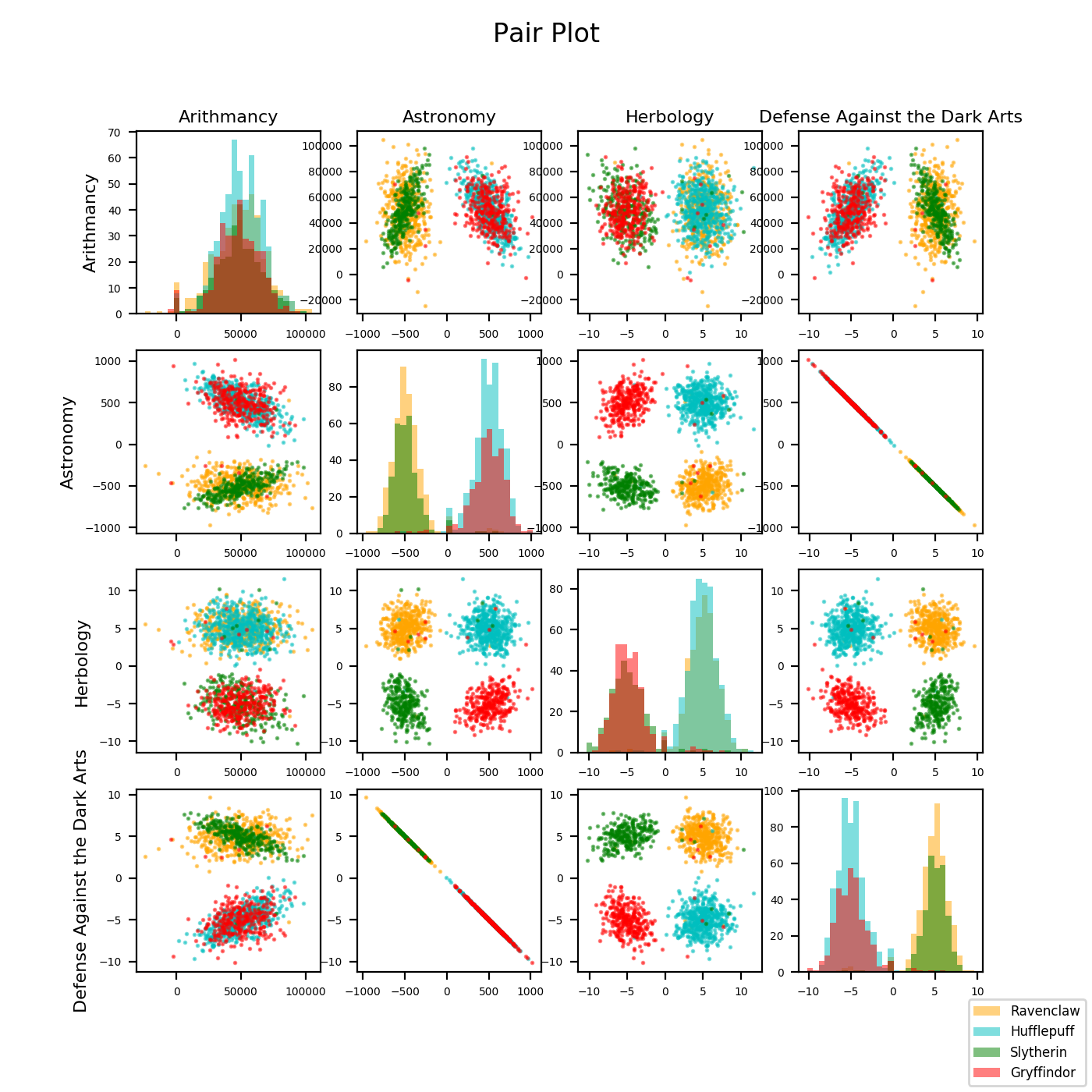

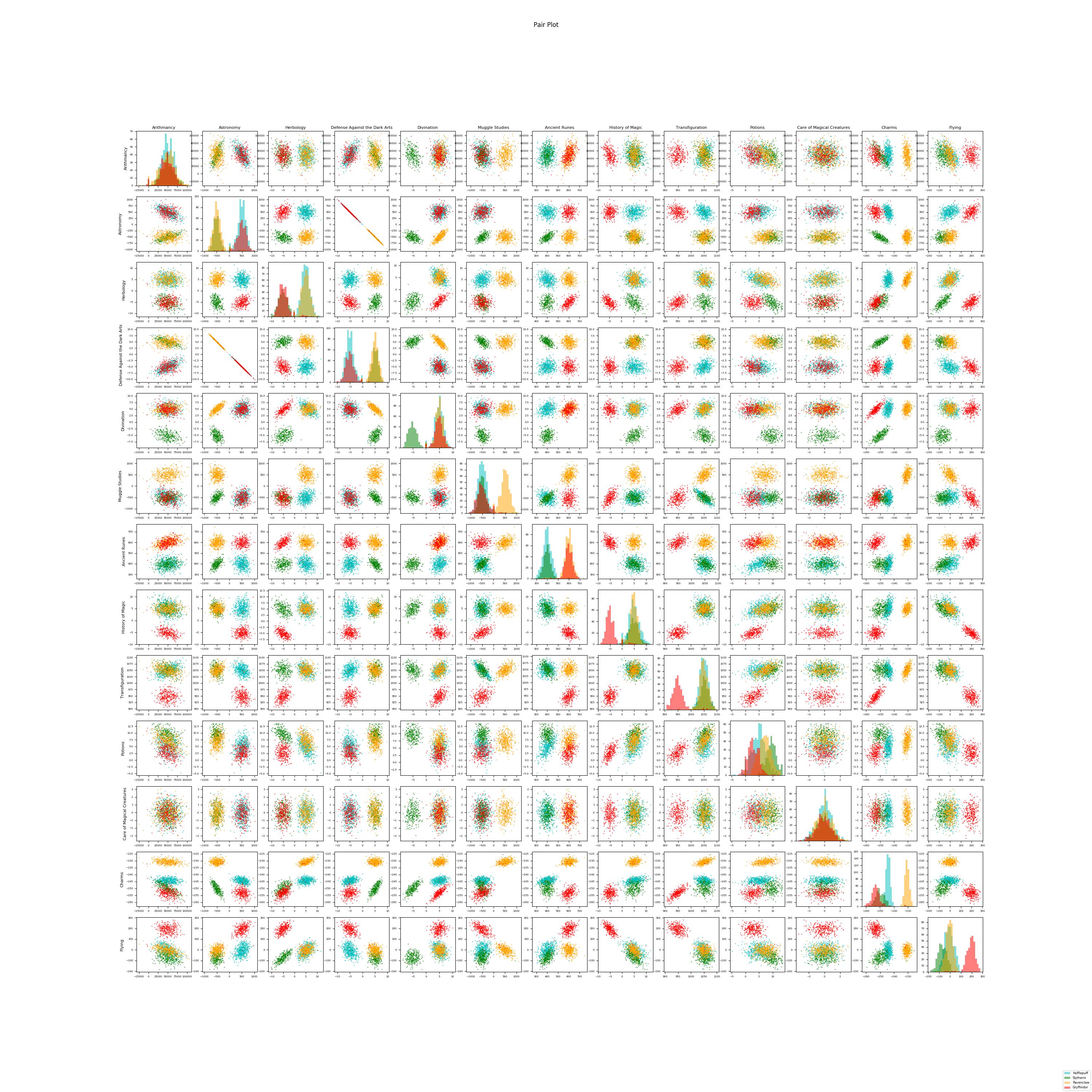

Input: Run `python3 pair_plot.py` in your terminal.

Output:

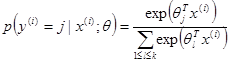

The maths behind logistic regression relies on modelling the probability P(Y = y_i | X = x_i) as an expit (logistic sigmoid) function of x_i:

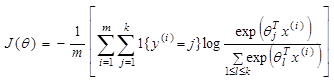
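A minimal NumPy sketch of this model, assuming a binary target (1 = "belongs to the house") and an intercept stored as `beta[0]`; both conventions, and the names `expit` and `house_probability`, are illustrative rather than taken from the project. The expit is written as `exp(-logaddexp(0, -z))`, which equals 1 / (1 + e^(−z)) but does not overflow for large |z|.

```python
import numpy as np


def expit(z):
    """Logistic sigmoid 1 / (1 + exp(-z)), written to avoid overflow for large |z|."""
    return np.exp(-np.logaddexp(0.0, -np.asarray(z, dtype=float)))


# Hypothetical helper; beta[0] as the intercept is an assumption.
def house_probability(X, beta):
    """P(Y = 1 | X = x_i) for every row x_i of X."""
    X = np.asarray(X, dtype=float)
    return expit(beta[0] + X @ beta[1:])


beta = np.array([0.5, -1.0, 2.0])        # hypothetical parameters
X = np.array([[1.0, 0.25], [0.0, 0.0]])  # two students, two features each
print(house_probability(X, beta))        # scores z = 0.0 and 0.5
```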

To estimate the parameters β_j, we maximize the log-likelihood of the model or, equivalently, minimize the negative log-likelihood (i.e. the loss function):

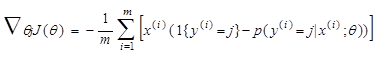
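The loss can be sketched the same way. This version averages the negative log-likelihood over the m samples (scaling by 1/m does not change the minimizer) and clips probabilities away from 0 and 1 so the logarithms stay finite; the clipping threshold `eps` and the function name are assumptions.

```python
import numpy as np


def negative_log_likelihood(beta, X, y, eps=1e-15):
    """Mean negative log-likelihood (binary cross-entropy) of a logistic model.

    beta[0] is the intercept and y holds 0/1 labels (both assumptions).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    z = beta[0] + X @ beta[1:]
    p = np.exp(-np.logaddexp(0.0, -z))  # expit(z) = P(Y = 1 | X = x_i)
    p = np.clip(p, eps, 1.0 - eps)      # keep log(p) and log(1 - p) finite
    return -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))


X = np.array([[-2.0], [2.0]])
y = np.array([0, 1])
print(negative_log_likelihood(np.array([0.0, 0.0]), X, y))  # log(2): a coin flip
print(negative_log_likelihood(np.array([0.0, 1.0]), X, y))  # lower: a better fit
```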

To do so, we use gradient descent, which repeatedly updates each β_j by taking a step in the direction opposite to the gradient of the loss L, scaled by a learning rate α: β_j ← β_j − α · ∂L/∂β_j.
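For the mean negative log-likelihood, the gradient has the closed form ∂L/∂β_j = (1/m) · Σ_i (p_i − y_i) · x_ij, with x_i0 = 1 for the intercept. The sketch below applies that step to every β_j at once (batch gradient descent); the learning rate of 0.1 and the 1,000 iterations are placeholder values, not the project's settings, and `fit_logistic_gd` is a hypothetical name.

```python
import numpy as np


def fit_logistic_gd(X, y, learning_rate=0.1, n_iter=1000):
    """Batch gradient descent on the mean negative log-likelihood.

    Returns beta with the intercept at beta[0]. learning_rate and n_iter
    are placeholder values, not the project's settings.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    m = X.shape[0]
    Xb = np.hstack([np.ones((m, 1)), X])  # column of ones so beta[0] acts as the intercept
    beta = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = np.exp(-np.logaddexp(0.0, -(Xb @ beta)))  # expit: current P(Y = 1 | x_i)
        grad = Xb.T @ (p - y) / m                     # dL/dbeta_j for every j at once
        beta -= learning_rate * grad                  # step against the gradient
    return beta


X = np.array([[-2.0], [-1.0], [1.0], [2.0]])
y = np.array([0, 0, 1, 1])
beta = fit_logistic_gd(X, y)
print(beta)  # the slope beta[1] ends up positive: larger x, higher P(Y = 1)
```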

Use the following script to split the dataset into a training set and a testing set (70%/30% by default), train a logistic regression and compute the confusion matrix. Think of this script as a lab for running experiments.

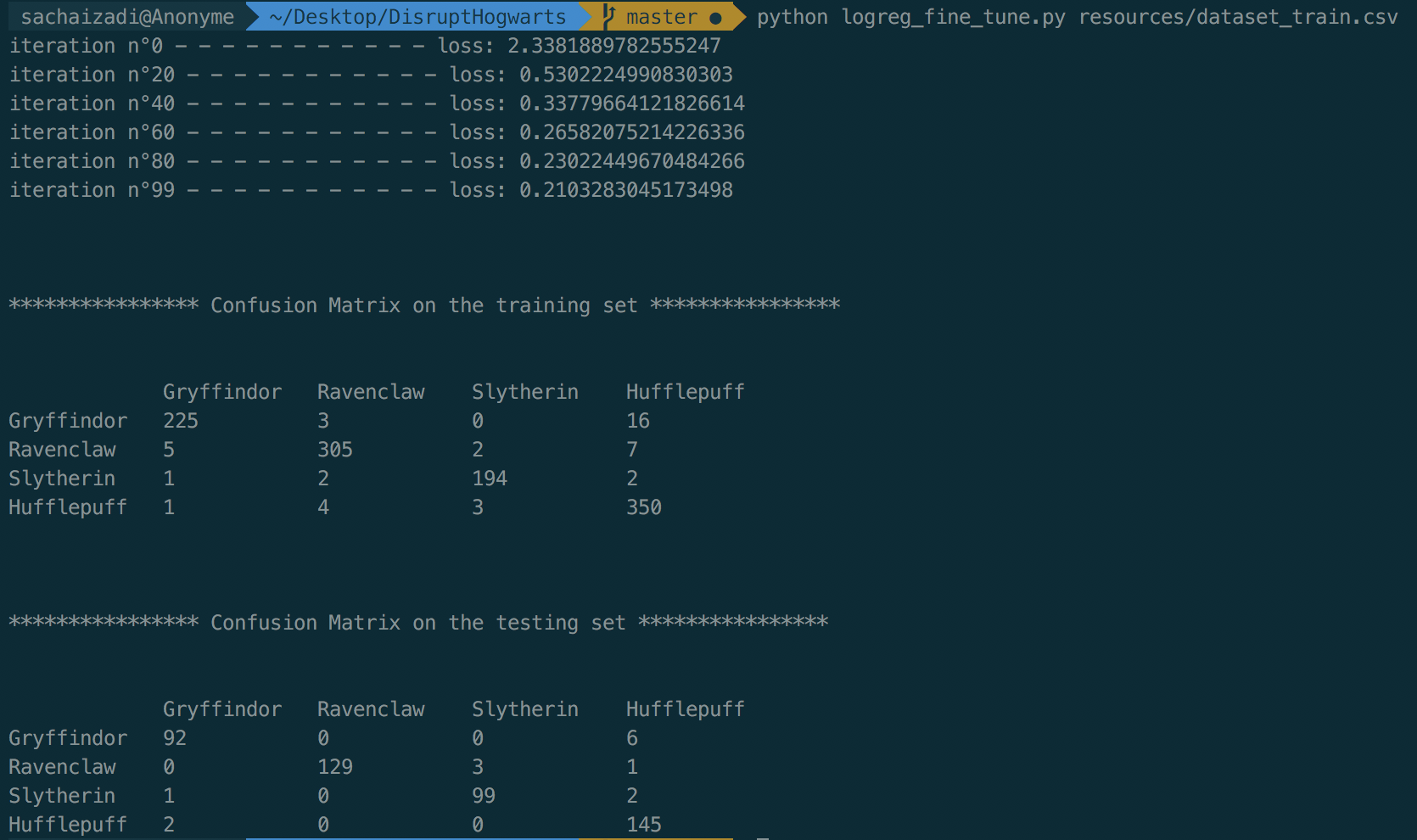

Input: Run `python3 logreg_fine_tune.py resources/dataset_train.csv` in your terminal.

Output (NB: true labels are along the first row and predicted labels along the first column):

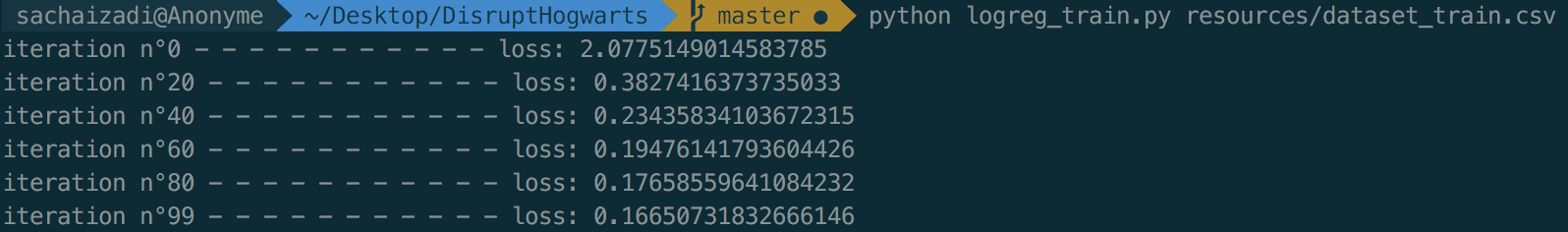
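A sketch of the two ingredients of this experiment: a shuffled 70/30 split and a confusion matrix. The matrix is indexed `[predicted, true]`, i.e. one row per predicted label and one column per true label, which is our reading of the note above. `train_test_split` and `confusion_matrix` are hypothetical helpers (deliberately not scikit-learn), and the fixed `seed` only makes runs reproducible.

```python
import numpy as np


# Hypothetical helpers, not scikit-learn's functions of the same name.
def train_test_split(X, y, train_ratio=0.7, seed=0):
    """Shuffle the rows once, then cut them into a training and a testing set."""
    X, y = np.asarray(X), np.asarray(y)
    idx = np.random.default_rng(seed).permutation(len(y))
    cut = int(round(train_ratio * len(y)))
    return X[idx[:cut]], X[idx[cut:]], y[idx[:cut]], y[idx[cut:]]


def confusion_matrix(y_true, y_pred, labels):
    """Count matrix indexed [predicted, true]: one row per predicted label,
    one column per true label."""
    pos = {label: i for i, label in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[pos[p], pos[t]] += 1
    return cm


labels = ["Gryffindor", "Slytherin"]
cm = confusion_matrix(["Gryffindor", "Slytherin", "Slytherin"],
                      ["Gryffindor", "Gryffindor", "Slytherin"], labels)
print(cm)                       # diagonal = correct predictions
print(np.trace(cm) / cm.sum())  # accuracy on these three examples
```

Shuffling before cutting matters if the CSV happens to be sorted (for example by house); a plain head/tail cut would then give unrepresentative sets.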

Input: Run `python3 logreg_train.py resources/dataset_train.csv` in your terminal.

Output:

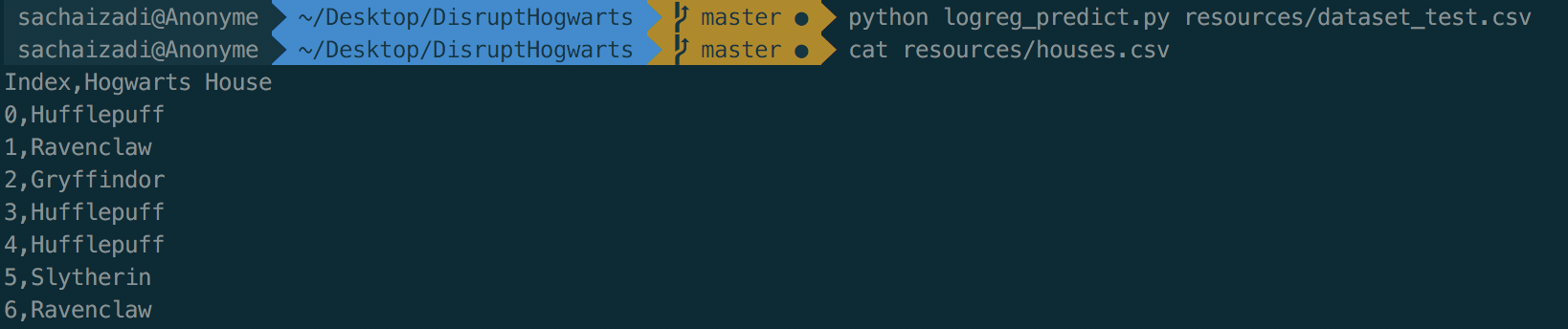
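Logistic regression as written above is binary, while there are four houses (Gryffindor, Hufflepuff, Ravenclaw, Slytherin). One common way to bridge that gap, assumed here rather than confirmed by this README, is one-vs-rest: train one "this house vs. all others" classifier per house. A minimal sketch, with hypothetical function names and placeholder hyperparameters:

```python
import numpy as np


# Assumes a one-vs-rest scheme; this README does not confirm the project uses it.
def fit_binary(Xb, target, learning_rate=0.1, n_iter=1000):
    """Batch gradient descent on the mean negative log-likelihood.
    Xb already contains a leading column of ones (intercept)."""
    beta = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = np.exp(-np.logaddexp(0.0, -(Xb @ beta)))  # expit(Xb @ beta)
        beta -= learning_rate * (Xb.T @ (p - target)) / len(target)
    return beta


def fit_one_vs_rest(X, houses, learning_rate=0.1, n_iter=1000):
    """Train one 'this house vs. all other houses' model per house.

    Returns {house: beta}, each beta with its intercept at index 0.
    """
    X = np.asarray(X, dtype=float)
    houses = np.asarray(houses)
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    return {
        str(house): fit_binary(Xb, (houses == house).astype(float), learning_rate, n_iter)
        for house in np.unique(houses)
    }


X = np.array([[1.0, 0.0], [1.0, 0.2], [-1.0, 0.0], [-1.0, 0.2], [0.0, 1.0], [0.2, 1.0]])
houses = ["Gryffindor", "Gryffindor", "Slytherin", "Slytherin", "Ravenclaw", "Ravenclaw"]
weights = fit_one_vs_rest(X, houses)
print(sorted(weights))  # ['Gryffindor', 'Ravenclaw', 'Slytherin']
```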

Input: Run `python3 logreg_predict.py resources/dataset_test.csv` in your terminal.

Output:

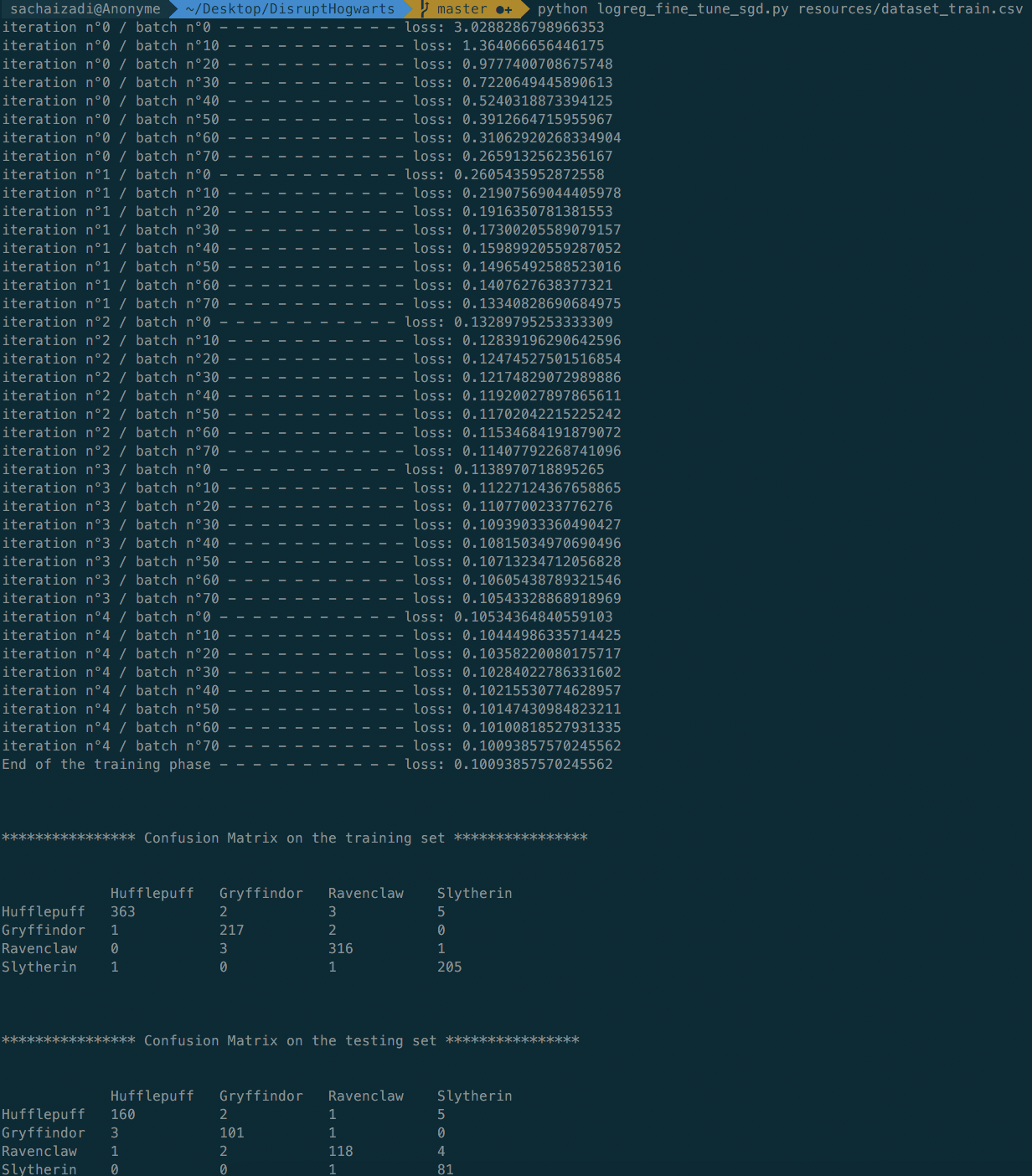
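Under the same one-vs-rest assumption, prediction scores every student with each house's model and keeps the house with the highest probability. A minimal sketch; `predict_house` and the `{house: beta}` layout of `weights` are hypothetical:

```python
import numpy as np


# Assumes one-vs-rest weights stored as {house: beta}, intercept at beta[0].
def predict_house(X, weights):
    """For each row of X, return the house whose model gives the highest probability."""
    X = np.asarray(X, dtype=float)
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    houses = list(weights)
    # One column of probabilities per house: shape (n_students, n_houses).
    probs = np.column_stack(
        [np.exp(-np.logaddexp(0.0, -(Xb @ weights[h]))) for h in houses]
    )
    return [houses[k] for k in probs.argmax(axis=1)]


weights = {  # hypothetical parameters: [intercept, feature 1, feature 2]
    "Gryffindor": np.array([0.0, 1.0, 0.0]),
    "Slytherin": np.array([0.0, -1.0, 0.0]),
    "Ravenclaw": np.array([0.0, 0.0, 1.0]),
}
print(predict_house([[2.0, 0.0], [-2.0, 0.0], [0.0, 3.0]], weights))
# ['Gryffindor', 'Slytherin', 'Ravenclaw']
```

Because the sigmoid is monotonic, the argmax over probabilities picks the same house as the argmax over raw scores; the probabilities are kept because they are easier to inspect.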

Input: Run `python3 logreg_fine_tune_sgd.py resources/dataset_train.csv` in your terminal.

Output: