This is the UCSD ECE 285 Final Project B: Style Transfer, developed by team Learning Machine, composed of

Bowen Zhao

Bolin He

Kunpeng Liu.

This version is based on the style transfer paper by Gatys et al. and has been tested on UCSD DSMLP.

The code is located in the Origin folder.

Install the required packages as follows:

$ pip3 install torch torchvision

The original style transfer also requires downloading the pretrained VGG19 network.
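With torchvision 0.13 or later, the pretrained weights can be fetched with torchvision.models.vgg19(weights=torchvision.models.VGG19_Weights.IMAGENET1K_V1) (older releases use pretrained=True). The loss layers then have to be located inside vgg19().features. The sketch below rebuilds that index table from the VGG19 layer configuration without downloading anything. The helper name vgg19_layer_indices is hypothetical, and the layer choice (conv4_2 for content, conv1_1 to conv5_1 for style) is the one from the Gatys et al. paper, assumed here rather than taken from model.py.

```python
# VGG19 layer configuration: numbers are conv output channels, "M" is max-pooling.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def vgg19_layer_indices(cfg=VGG19_CFG):
    """Hypothetical helper: map names like 'conv4_2' to their index in a
    Sequential built as conv -> ReLU -> ... -> max-pool (the layout of
    torchvision's vgg19().features)."""
    names, idx, block, conv_in_block = {}, 0, 1, 0
    for v in cfg:
        if v == "M":
            names[f"pool{block}"] = idx
            idx += 1
            block += 1
            conv_in_block = 0
        else:
            conv_in_block += 1
            names[f"conv{block}_{conv_in_block}"] = idx
            names[f"relu{block}_{conv_in_block}"] = idx + 1  # ReLU follows each conv
            idx += 2
    return names


layers = vgg19_layer_indices()
# Assumed layer choice, following Gatys et al.; model.py may record different layers.
CONTENT_LAYERS = ["conv4_2"]
STYLE_LAYERS = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]
print([layers[n] for n in CONTENT_LAYERS], [layers[n] for n in STYLE_LAYERS])
```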

demo.ipynb -- Runs a demo of our code and shows the effect of different α/β ratios on the output image.

image.py -- Contains the functions used to load and display images.

loss.py -- Contains the functions that compute the content loss, the Gram matrix, and the style loss.

model.py -- Contains the VGG19 network and records the layers used to compute the content loss and the style loss.

run.py -- Contains the initialization and the routines that compute the losses and optimize them.
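The three quantities that loss.py computes can be sketched in NumPy as below, using the normalisations from the Gatys et al. paper. This is a framework-agnostic illustration with hypothetical function names: in the project they would act on torch tensors taken from VGG19 layers, and many PyTorch implementations use a mean-squared error instead of these exact constants.

```python
import numpy as np


def gram_matrix(features):
    """features: array of shape (C, H, W) from one VGG layer.
    Returns the C x C Gram matrix G = F F^T, with F reshaped to (C, H*W)."""
    c = features.shape[0]
    f = features.reshape(c, -1)
    return f @ f.T


def content_loss(gen_feat, content_feat):
    # Gatys et al.: half the sum of squared differences between feature maps
    return 0.5 * np.sum((gen_feat - content_feat) ** 2)


def style_loss(gen_feat, style_feat):
    # Per-layer style loss from Gatys et al.: sum((G - A)^2) / (4 N^2 M^2),
    # where N is the number of channels and M = H * W.
    # The full style loss is a weighted sum of this term over the style layers.
    n = gen_feat.shape[0]
    m = gen_feat[0].size
    g, a = gram_matrix(gen_feat), gram_matrix(style_feat)
    return np.sum((g - a) ** 2) / (4.0 * n ** 2 * m ** 2)


rng = np.random.default_rng(0)
gen, ref = rng.random((2, 8, 16, 16))  # two fake (C, H, W) feature maps
print(content_loss(gen, ref), style_loss(gen, ref))
```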

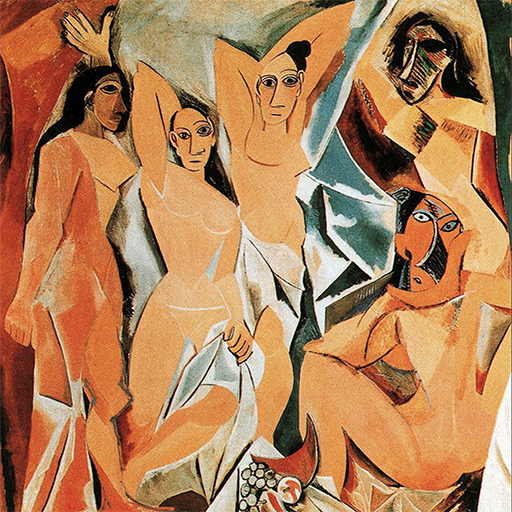

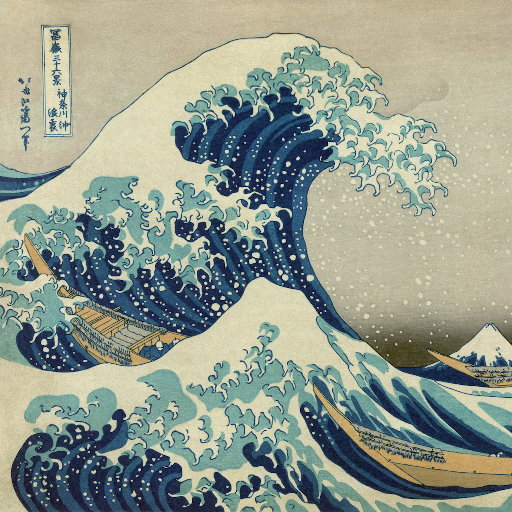

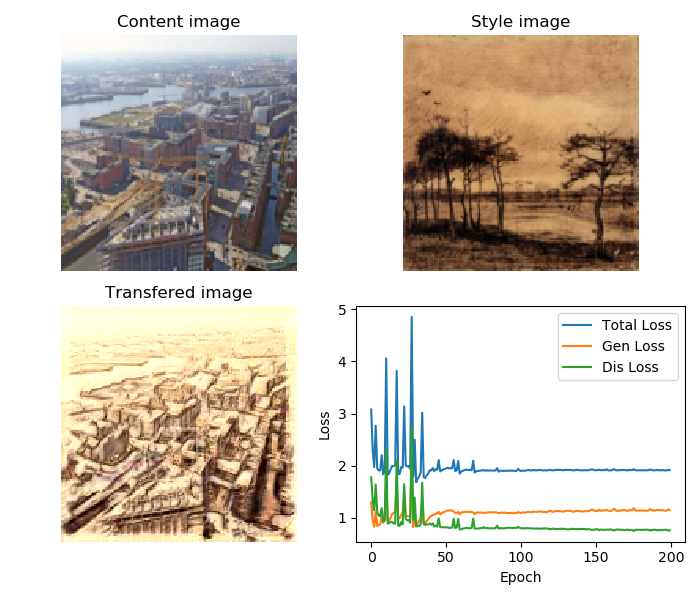

We use one of Picasso's masterpieces as the content image and the style image from Kanagawa. Here is the output with an α/β ratio of 10⁸ (α is the weight of the style loss, β is the weight of the content loss).
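To see why the α/β ratio matters, the toy below replaces the image with a single number x and both losses with quadratics that pull x toward a "content" target and a "style" target. It is purely illustrative (hypothetical function names, not code from run.py) and uses this README's convention that α weights the style loss and β the content loss. Gradient descent settles at the weighted average of the two targets, so a large α/β pulls the result toward the style target.

```python
def total_loss(content_loss, style_loss, alpha, beta):
    # README convention: alpha weights the style loss, beta the content loss
    return alpha * style_loss + beta * content_loss


def toy_transfer(content_target, style_target, alpha, beta, lr=0.1, steps=500):
    """Minimise alpha*(x - s)^2 + beta*(x - c)^2 over a scalar x by gradient descent."""
    # Dividing both weights by (alpha + beta) rescales the loss but keeps its
    # minimiser, and keeps the step size stable even when alpha/beta = 1e8.
    a = alpha / (alpha + beta)
    b = beta / (alpha + beta)
    x = float(content_target)  # start from the content "image"
    for _ in range(steps):
        grad = 2 * a * (x - style_target) + 2 * b * (x - content_target)
        x -= lr * grad
    return x


for ratio in (1e-2, 1.0, 1e8):
    print(ratio, round(toy_transfer(0.0, 1.0, alpha=ratio, beta=1.0), 4))
```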

To visualize the result, check

Image-to-Image Translation using Cycle-GANs has been tested on UCSD DSMLP.

The code is located in the cycleGAN folder.

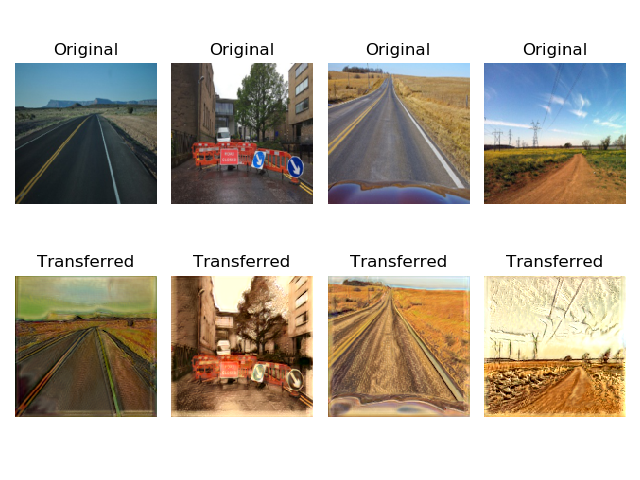

In this part, we implemented real-time style transfer using CycleGANs, as introduced in the paper Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks.
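The key ingredient from the CycleGAN paper is the cycle-consistency loss: translating a landscape into the Van Gogh domain and back should reproduce the original, and vice versa. Below is a NumPy sketch of the generator objective with least-squares adversarial terms and the paper's default weight λ = 10. The function names and the toy generators and discriminator are hypothetical and not taken from our code.

```python
import numpy as np


def l1(a, b):
    return np.mean(np.abs(a - b))


def cycle_consistency_loss(x, y, G, F):
    """G maps domain X (photos) to Y (paintings); F maps Y back to X."""
    forward = l1(F(G(x)), x)   # x -> G(x) -> F(G(x)) should return to x
    backward = l1(G(F(y)), y)  # y -> F(y) -> G(F(y)) should return to y
    return forward + backward


def lsgan_generator_loss(d_fake):
    # least-squares GAN: the generator wants D to output 1 on its fakes
    return np.mean((d_fake - 1.0) ** 2)


def generator_objective(x, y, G, F, D_X, D_Y, lam=10.0):
    adversarial = lsgan_generator_loss(D_Y(G(x))) + lsgan_generator_loss(D_X(F(y)))
    return adversarial + lam * cycle_consistency_loss(x, y, G, F)


# Toy check: exactly invertible "generators" and a discriminator that always outputs 1.
rng = np.random.default_rng(0)
x, y = rng.random((2, 3, 4, 4))
G = lambda t: 2.0 * t
F = lambda t: 0.5 * t
D = lambda t: np.ones(1)
print(generator_objective(x, y, G, F, D, D))
```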

In our experiment, the content training set consists of landscape pictures from Flickr, and the style training set consists of Vincent van Gogh artworks from WikiArt. We successfully reused the DnCNN from Assignment 4 in three configurations:

exp_smallBS: Experiment with a small batch size, without decay

exp_largeBS: Experiment with a large batch size, without decay

exp_largeBS_decay: Experiment with a large batch size, with decay
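The three configurations differ only in batch size and in whether decay is applied. A hypothetical way to express them is sketched below. The batch sizes are placeholders, not the values we used, and "decay" is assumed to mean the learning-rate schedule from the CycleGAN paper (constant 2e-4 for 100 epochs, then linear decay to zero over the next 100).

```python
# Placeholder settings; the actual batch sizes used in our runs may differ.
EXPERIMENTS = {
    "exp_smallBS": {"batch_size": 1, "lr_decay": False},
    "exp_largeBS": {"batch_size": 16, "lr_decay": False},
    "exp_largeBS_decay": {"batch_size": 16, "lr_decay": True},
}


def learning_rate(epoch, base_lr=2e-4, lr_decay=False, decay_start=100, total_epochs=200):
    """Constant learning rate, or linear decay to 0 starting at decay_start."""
    if not lr_decay or epoch < decay_start:
        return base_lr
    remaining = max(0.0, 1.0 - (epoch - decay_start) / (total_epochs - decay_start))
    return base_lr * remaining


for name, cfg in EXPERIMENTS.items():
    lrs = [learning_rate(e, lr_decay=cfg["lr_decay"]) for e in (0, 150, 200)]
    print(name, cfg["batch_size"], lrs)
```

In PyTorch, the same schedule could be attached to an optimizer with torch.optim.lr_scheduler.LambdaLR, passing lr_lambda=lambda e: learning_rate(e, base_lr=1.0, lr_decay=True) so that it returns a multiplicative factor on the optimizer's initial learning rate.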

Our implementation requires no packages beyond those provided by the class environment: os, time, numpy, pandas, torch, torchvision, PIL, and matplotlib.

To run the training scripts outside of the class environment, you may need to install some of the packages above. To install a missing package LIBRARY_MISSING, run the following command:

$ pip install --user LIBRARY_MISSING


__init__.py

Main classes of the Generator, Discriminator, Trainer, and Training Experiment for the picture-orientated CycleGAN and the domain-orientated CycleGAN, respectively.

styleDataSet.py, domainStyleDataSet.py -- Classes (_td.Dataset_) for loading the dataset from a given path for the picture-orientated CycleGAN and the domain-orientated CycleGAN, respectively.

Classes adapted from Assignment 4, used for building the CycleGAN networks.


Contains numerous checkpoint folders for loading pre-trained models or continuing their training.

Runs a demo of our code that transfers a content picture based on an artist's domain (reproduces Figure 3 of our report).

Runs training of our model through a Python script. It can run in the background, with flags to customize settings such as the domain to train on, whether to use the large training set, etc.

Some functions that help with plotting.
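styleDataSet.py and domainStyleDataSet.py load images from a given path. Because CycleGAN training data is unpaired, one common approach, sketched below, pairs the i-th content file with a randomly chosen style file, so the two sets need not be aligned or equally sized. This sketch only collects file paths and follows the same __len__/__getitem__ protocol as td.Dataset; in the project the class subclasses td.Dataset and would open the images (e.g. with PIL). The class name, directory layout, and accepted extensions are assumptions.

```python
import os
import random

IMAGE_EXTS = (".jpg", ".jpeg", ".png")


class UnpairedImagePaths:
    """Hypothetical unpaired dataset with the td.Dataset __len__/__getitem__ protocol."""

    def __init__(self, content_dir, style_dir, seed=None):
        self.content = self._list_images(content_dir)
        self.style = self._list_images(style_dir)
        if not self.content or not self.style:
            raise ValueError("both directories must contain images")
        self.rng = random.Random(seed)

    @staticmethod
    def _list_images(directory):
        # sorted so the content order is deterministic across runs
        return sorted(
            os.path.join(directory, name)
            for name in os.listdir(directory)
            if name.lower().endswith(IMAGE_EXTS)
        )

    def __len__(self):
        # one epoch walks over every content image once
        return len(self.content)

    def __getitem__(self, idx):
        # unpaired: any style image can go with any content image
        return self.content[idx], self.rng.choice(self.style)
```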

To visualize the result, check