Code for the NeurIPS 2021 paper "Better Safe Than Sorry: Preventing Delusive Adversaries with Adversarial Training" by Lue Tao, Lei Feng, Jinfeng Yi, Sheng-Jun Huang, and Songcan Chen.

This repository contains an implementation of the attacks (P1~P5) and the defense (adversarial training) in the paper.

Our code relies on PyTorch, which will be automatically installed when you follow the instructions below.

```bash
conda create -n delusion python=3.8
conda activate delusion
pip install -r requirements.txt
```
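Before running the experiments, it can help to confirm that the environment is usable. The check below is a hypothetical helper, not part of the repository; it assumes that `requirements.txt` is what installs PyTorch (importable as `torch`) and uses the Python version from the `conda create` command as the minimum.

```python
"""Hypothetical helper (not part of this repository): sanity-check the environment."""
import importlib.util
import sys


def check_environment(min_python=(3, 8)):
    """Report which of the assumed requirements appear to be satisfied."""
    return {
        # The conda environment above is created with python=3.8.
        "python_ok": sys.version_info[:2] >= min_python,
        # find_spec returns None when the package cannot be imported.
        "torch_installed": importlib.util.find_spec("torch") is not None,
    }


if __name__ == "__main__":
    print(check_environment())
```

Run it inside the activated `delusion` environment; both values should be `True` before moving on.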

- Pre-train a standard model on CIFAR-10 (the dataset will be downloaded automatically).

```bash
python main.py --train_loss ST
```

- Generate perturbed training data.

```bash
python poison.py --poison_type P1
python poison.py --poison_type P2
python poison.py --poison_type P3
python poison.py --poison_type P4
python poison.py --poison_type P5
```

- Visualize the perturbed training data (optional).

```bash
tensorboard --logdir ./results
```

- Standard training on the perturbed data.

```bash
python main.py --train_loss ST --poison_type P1
python main.py --train_loss ST --poison_type P2
python main.py --train_loss ST --poison_type P3
python main.py --train_loss ST --poison_type P4
python main.py --train_loss ST --poison_type P5
```

- Adversarial training on the perturbed data.

```bash
python main.py --train_loss AT --poison_type P1
python main.py --train_loss AT --poison_type P2
python main.py --train_loss AT --poison_type P3
python main.py --train_loss AT --poison_type P4
python main.py --train_loss AT --poison_type P5
```
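The commands above can be chained into one driver script. This is a sketch, not a script shipped with the repository: the file layout it assumes (run from the repository root, inside the `delusion` environment) and its `--run` switch are hypothetical, and it skips the optional TensorBoard step. It only uses the `main.py` and `poison.py` flags shown above.

```python
"""Hypothetical driver script (not part of this repository).

Replays the README commands in order: pre-training, poison generation for
P1-P5, then standard (ST) and adversarial (AT) training on each perturbed set.
"""
import subprocess
import sys

POISON_TYPES = ["P1", "P2", "P3", "P4", "P5"]


def build_commands(python=sys.executable):
    """Return the whole pipeline as a list of argv lists."""
    # Step 1: pre-train the standard model (presumably used by poison.py).
    commands = [[python, "main.py", "--train_loss", "ST"]]
    # Step 2: generate each perturbed training set.
    commands += [[python, "poison.py", "--poison_type", p] for p in POISON_TYPES]
    # Steps 3 and 4: standard training, then adversarial training, on each set.
    for train_loss in ("ST", "AT"):
        commands += [
            [python, "main.py", "--train_loss", train_loss, "--poison_type", p]
            for p in POISON_TYPES
        ]
    return commands


def run_all(dry_run=True):
    for argv in build_commands():
        print(" ".join(argv))
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_all(dry_run="--run" not in sys.argv)
```

Without arguments the script only prints the 16 commands; with `--run` it executes them in order. Using `sys.executable` keeps every step on the interpreter of the active environment.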

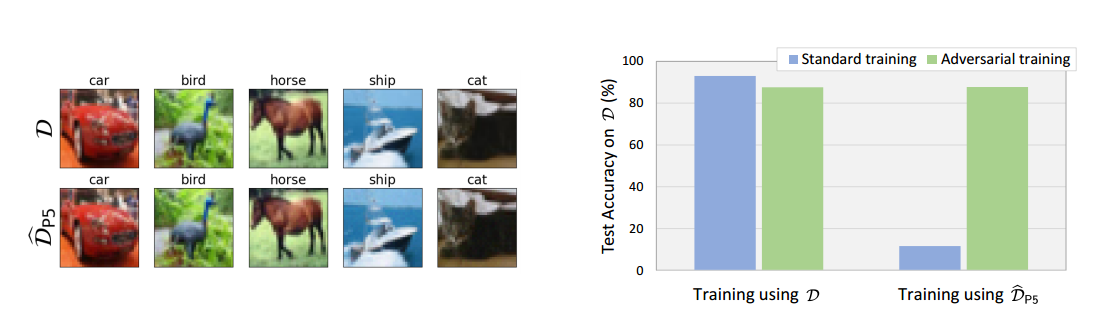

Table 1: Mean and standard deviation of the test accuracy (%) on CIFAR-10. The standard deviations of the defense (i.e., adversarial training) are very small (< 0.50%) and hardly affect the results. In contrast, the results of standard training are relatively unstable.

| Training method \ Training data | P1 | P2 | P3 | P4 | P5 |
|---|---|---|---|---|---|
| Standard training | 37.87±0.94 | 74.24±1.32 | 15.14±2.10 | 23.69±2.98 | 11.76±0.72 |
| Adversarial training | 86.59±0.30 | 89.50±0.21 | 88.12±0.39 | 88.15±0.15 | 88.12±0.43 |
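As a quick arithmetic check of the claims in Table 1, the snippet copies the reported values and computes, per attack, how many percentage points adversarial training gains over standard training, plus the largest standard deviation in each row. It is an illustrative helper, not part of the repository; the dictionary layout and function names are hypothetical.

```python
"""Recompute the gap between adversarial and standard training from Table 1."""
# (mean, std) of test accuracy in %, copied from Table 1.
STANDARD = {"P1": (37.87, 0.94), "P2": (74.24, 1.32), "P3": (15.14, 2.10),
            "P4": (23.69, 2.98), "P5": (11.76, 0.72)}
ADVERSARIAL = {"P1": (86.59, 0.30), "P2": (89.50, 0.21), "P3": (88.12, 0.39),
               "P4": (88.15, 0.15), "P5": (88.12, 0.43)}


def accuracy_gains():
    """Mean accuracy of AT minus mean accuracy of ST, in percentage points."""
    return {p: round(ADVERSARIAL[p][0] - STANDARD[p][0], 2) for p in STANDARD}


def max_std(results):
    """Largest standard deviation in one row of the table."""
    return max(std for _, std in results.values())


if __name__ == "__main__":
    print(accuracy_gains())
    print(max_std(ADVERSARIAL), max_std(STANDARD))
```

On these numbers the gain ranges from 15.26 points (P2) to 76.36 points (P5), and the largest adversarial-training standard deviation is 0.43 (P5), consistent with the "< 0.50%" statement; standard training reaches 2.98 (P4).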

Key takeaways: Our theoretical justifications in the paper, along with the empirical results, suggest that adversarial training is a principled and promising defense against delusive attacks.
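Adversarial training replaces the standard loss with a min-max objective: an inner maximization perturbs each training input within an L-infinity budget eps, and an outer minimization fits the model to those worst-case inputs. The sketch illustrates that structure with projected gradient descent (PGD) on a toy logistic-regression model in plain Python. It is not the repository's PyTorch implementation; the model, the budget eps = 0.1, the step size, the learning rate and the toy data are all assumptions chosen for illustration.

```python
"""Toy sketch of PGD-based adversarial training (illustrative, not this repository's code).

Model: binary logistic regression on inputs in [0, 1]^d, labels y in {-1, +1}.
Objective: min_{w,b} sum_i max_{||delta||_inf <= eps} loss(w, b, x_i + delta, y_i).
"""
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v):
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    return max(lo, min(hi, v))


def margin(w, b, x, y):
    """y * (w . x + b); positive means x is classified correctly."""
    return y * (sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss(w, b, x, y):
    """Logistic loss log(1 + exp(-margin)), evaluated without overflow."""
    m = margin(w, b, x, y)
    return math.log1p(math.exp(-m)) if m > 0 else -m + math.log1p(math.exp(m))


def pgd_attack(w, b, x, y, eps, alpha, steps):
    """Inner maximization: signed gradient ascent on the input, projected onto
    the L-infinity ball of radius eps around x and onto the valid range [0, 1]."""
    x_adv = list(x)
    for _ in range(steps):
        coef = -y * sigmoid(-margin(w, b, x_adv, y))  # d loss / d x_i = coef * w_i
        x_adv = [
            clip(clip(xa + alpha * sign(coef * wi), xi - eps, xi + eps), 0.0, 1.0)
            for xa, xi, wi in zip(x_adv, x, w)
        ]
    return x_adv


def adversarial_training(data, eps=0.1, alpha=0.025, steps=10, lr=0.5, epochs=100, seed=0):
    """Outer minimization: SGD on the logistic loss of PGD-perturbed inputs."""
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    data = list(data)  # copy so shuffling does not mutate the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = pgd_attack(w, b, x, y, eps, alpha, steps)
            # d loss / d b = coef and d loss / d w_i = coef * x_adv_i
            coef = -y * sigmoid(-margin(w, b, x_adv, y))
            w = [wi - lr * coef * xi for wi, xi in zip(w, x_adv)]
            b -= lr * coef
    return w, b


def robust_accuracy(w, b, data, eps, alpha=0.025, steps=10):
    """Fraction of points still classified correctly after a PGD attack."""
    hits = sum(margin(w, b, pgd_attack(w, b, x, y, eps, alpha, steps), y) > 0 for x, y in data)
    return hits / len(data)


# Hypothetical, linearly separable toy data: two clusters in [0, 1]^2.
TOY_DATA = [((0.8, 0.75), 1), ((0.9, 0.8), 1), ((0.75, 0.9), 1),
            ((0.2, 0.25), -1), ((0.1, 0.2), -1), ((0.25, 0.1), -1)]

if __name__ == "__main__":
    w, b = adversarial_training(TOY_DATA)
    print("weights:", w, "bias:", b)
    print("robust accuracy at eps=0.1:", robust_accuracy(w, b, TOY_DATA, eps=0.1))
```

Because the model is linear, each PGD step lowers the margin by `alpha` times the L1 norm of `w` until the projection stops it, so the attack moves every coordinate to the edge of the eps-ball; with a deep network the same two loops apply, but the input gradient comes from backpropagation.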

```bibtex
@inproceedings{tao2021better,
  title={Better Safe Than Sorry: Preventing Delusive Adversaries with Adversarial Training},
  author={Tao, Lue and Feng, Lei and Yi, Jinfeng and Huang, Sheng-Jun and Chen, Songcan},
  booktitle={Advances in Neural Information Processing Systems (NeurIPS)},
  year={2021}
}
```