multi-chain bottom-up consensus model prototype

Closed this issue · 54 comments

Research superior consensus models, resilient against 51% attacks.

Create an operational prototype of a mechanisms which spreads the last known record of all multi-chain.

- All peers crawl to some extend

- They create 1 Bittorrent swarm with a database dump of all these records and seed it

- Download a swarm with records from the nearest neighbor

- Merge, double spending check, and remove duplicates

- Together with this neighbor, form a group, seed your unified database dump

- Repeat with exponentially increasing group size

The idea of merging shares some similarities as the GHS MST algorithm. But unlike the GHS algorithm, bottom-up consensus needs to work in a dynamic environment (multichain is always changing) and also under churn, so that is a challenge.

Please read some related work:

- The Quest for Scalable Blockchain Fabric: Proof-of-Work vs. BFT Replication

- Difficulty control for blockchain-based consensus systems

- The Sleepy Model of Consensus

- Hybrid Consensus: Efficient Consensus in the Permissionless Model

- SCP: A Computationally-Scalable Byzantine Consensus Protocol For Blockchains

- Enabling Blockchain Innovations with Pegged Sidechains

My advise would be to do a boring incremental steps approach, learn what you need to solve :

- get live edges operational

- get TrustChain edges from neighbor and pack into a single file

- publish all edges in a .torrent, broadcast hash to neighbor and download all neighbors

- join and merge operation on two files

- etc.

Currently the closest related work to Delft blockchain (MultiChain,TruchtChain): "THE SWIRLDS HASHGRAPH CONSENSUS ALGORITHM: FAIR, FAST, BYZANTINE FAULT TOLERANCE"

It is also graph-based, instead of a single chain. They provide an amazing polished description of the idea we call "offline voting", they describe as gossip-about-gossip. Their patented technology uses a graph-based blockchain and mentions a HashDAG. Uses proof-of-stake and single-party signed messages.

Corda is also transaction focussed. However, they lack a gossip mechanism. Transactions are listed on a chain, but stored in "vaults": http://r3cev.com/s/corda-introductory-whitepaper-final.pdf

Brainstorm outcome, detailing the three algorithm phases per round:

- consensus on ordered merge candidate list

- consensus on group merge

- consensus on group outcome

Using a commitment scheme we can generate guesses, winner becomes temporary elected leader for coordinating group consensus. Drawback: we don't like leader dependancies, DDoS vunerabilities, and failure/resume problems. Kelong: proof-of-luck

Just came across this consensus algorithm, might be interesting to read: https://www.stellar.org/papers/stellar-consensus-protocol.pdf

The main disadvantage, also mentioned in the paper, is that the quorum intersection cannot only contain faulty nodes. This requirement depends on the assumption that the individual nodes to choose good quorum slices. In the application of Stellar it is reasonable, because nodes represent banks or other organisations. But the same isn't true for Tribler. Nevertheless, the idea of Federated Byzantine Agreement is very interesting.

I will spend some time on this in the coming months.

I'm currently writing up my idea, expect something by the end of the week.

First write-up by Kelong: bottom-up.pdf

Updated my write-up with some negative results and my reasoning, see "2.5.1. Merging consensus groups does not guarantee BFT with a probability of 1"

Create a repository for my thesis work, which contains the pdf. It'll be a bit unstructured in the beginning, just me writing ideas and results down. Hopefully it'll converge into a proper thesis by summer.

New section since last time - "2.5.2 Tail bounding the number of Byzantine nodes in a consensus group"

Some remarks:

- Section 2.3.2: The querying for the valid set of block, is this indeed the set, or just the hash of the set? (If it is the former, does this scale?)

- Section 2.5.2: Are we really interested in the probability that the number white balls is lower than the expected value? I would suppose we are interested in the probability that the number of white balls is so low that there are too many black balls to get Byzantine agreement?

@pimotte thanks for the remarks

- It is the hash of the set, I'll be more specific.

- If the number of white balls is lower than (2N +1)/3, then that implies the number of black balls is more than f = (N - 1)/3. In this case Byzantine agreement cannot be achieved, because (2N + 1)/3 is the necessary and sufficient number of honest nodes to reach agreement (http://zoo.cs.yale.edu/classes/cs426/2013/bib/bracha85asynchronous.pdf).

Ideas:

- magic for scalability by Kelong: self-reporting! The only service provided is that each node has signed their hash/checkpoint value and the signature is correct. Thus concensus is reached on valid self-reported chain checkpoints. A fraud detection mechanism is still required to check the validity of each self-signed report.

- 1 hash represents 1 unique chain proof

- each consensus group, has just 1 lucky node now. Improve elegance of algorithm and each node proposes a consensus. All nodes follow: the most lucky responsive node with also truthful execution of algorithm.

- probabilistic fraud detection, full chain/full graph equals proven 100% fraud detection (determine this P())

I can't take all the credit, a lot of it resulted from the fruitful discussions with Zhejie.

Consensus Protocol.pdf

I have written my ideas down. It might look totally different from the initial ideas, but Kelong knows how I get here.

Starting reading the PDF. In the Transaction Block, I'm assuming it should also have a number of outputs (probably 2). I'm thinking in terms of Bitcoin where one of the output is "spare change" which the sender can re-use later.

The spare change are used so that the receiver of a transaction doesn't need to track all the way back to the root to make sure if the sender has enough for this transaction. In this sense, we should to add this spare change for the sake of simplicity and storage requirement. However, we still need the track to the root for the validation.

Yeah, we need to traverse to the root to validate a Tx regardless of spare change. But I was just thinking it'll be useful to freely set the transaction amount. For example if I only have 1 unit from 1 source, but I want to send 0.1 unit, then I can set 0.9 as UTXO (the spare change) and 0.1 as the actual output.

I see what you mean. Yes we should do that as well. It will eventually save the storage.

Just spoke to @synctext. He suggested that we should focus on something that's more generic. Probably no Bitcoin style transaction, just arbitrary data in the block (at least for my thesis). I guess we need to re-think the validation protocol in this case. My initial feel is that there will be more overhead in the validation protocol.

The validation protocol I think can be done using a BFS or DFS. On every step check that the checkpoints that surrounds the node (transaction) is in some consensus result and the node is not a fork of previously traversed nodes. We can prune branches if they've been validated previously. This should guarantee validity, still thinking about a way to prove it.

idea by Pim. Keep the scalability, use checkpoints. Prove that you have a time-bounded worst case detection time of double spend, if a certain fraction of network is non-malicious.

as a harmed party you can detect a double spend by agents you interacted with. Just check once after doing a transaction if it made it into the consensus, as reported by your counterparty

Made a lot of changes since last time - pdf.

Defined a few things formally, i.e. the basic mode, what is a fork, etc.

The consensus part should work if we assume the 2n/3 promoters (the nodes that execute the consensus algorithm) are honest. I also provide some proof sketch. OTOH a lot of it will change if we use an invitation system. Personally I'm not a fan because that's using humans as a part of the protocol so it won't be possible to prove useful results. Unless the invitation is based on some of reputation score.

I also wrote down a few things about fraud detection. Basically it's quite simple to detect fork (the way I defined it) if one of the party in the transaction is honest. The hard part is to detect fork when both parties are malicious. The more forks there are, the harder it is to detect them all.

Implemented a simplified version (no erasure coding, no threshold signatures) of HoneybadgerBFT using Twisted - code is here.

The algorithm described in the papers are of synchronous (blocking) style, so they don't fit nicely with the asynchronous (event loop + futures/promises) style in Twisted. So the algorithms are implemented using state machines. Every time a message comes in, I call a handle function to mutate the state.

Good progress, BFT operational.

No incentive for running promotor node. Storyline: BFT motivation needs to come from application. Sybil defense and incentives need to come from application architect. Just neutral layer, that scales.

2017-03-23 11:38:38,403 - WARNING - peer rWfvW1TIavntT2tDgPgjjgEgOBrkq0JphFId6FTuHv8= already deleted

2017-03-23 11:39:17,096 - DEBUG - Discovery: discovery sent y3JlSK1EwuGwy6L3BhDloQOLo0I0sVAFufAnkbWpNSM= 12345

2017-03-23 11:39:17,097 - DEBUG - Discovery: received msg <src.utils.messages.DiscoverReplyMsg instance at 0x7f9b6ba38ea8>

2017-03-23 11:39:17,097 - DEBUG - Discovery: making new clients...

2017-03-23 11:39:17,097 - DEBUG - client y3JlSK1EwuGwy6L3BhDloQOLo0I0sVAFufAnkbWpNSM=,127.0.0.1:12345 already exist

2017-03-23 11:39:18,097 - DEBUG - sent ping

2017-03-23 11:39:18,097 - DEBUG - got ping, <src.utils.messages.PingMsg instance at 0x7f9b6ba732d8>

2017-03-23 11:39:18,098 - DEBUG - sent pong

2017-03-23 11:39:18,098 - DEBUG - got pong, <src.utils.messages.PongMsg instance at 0x7f9b6ba732d8>

2017-03-23 11:39:18,098 - DEBUG - pong: found myself in peers.keys

2017-03-23 11:39:18,098 - DEBUG - done pong

2017-03-23 11:39:19,499 - DEBUG - got ping, <src.utils.messages.PingMsg instance at 0x7f9b6ba4a560>

2017-03-23 11:39:19,500 - DEBUG - sent pong

2017-03-23 11:39:20,806 - DEBUG - got ping, <src.utils.messages.PingMsg instance at 0x7f9b6b9d31b8>

2017-03-23 11:39:20,807 - DEBUG - sent pong

2017-03-23 11:39:21,557 - DEBUG - got ping, <src.utils.messages.PingMsg instance at 0x7f9b6ba4afc8>

2017-03-23 11:39:21,557 - DEBUG - sent pong

2017-03-23 11:39:22,093 - DEBUG - Mo14: broadcast est: v = 1, r = 1

2017-03-23 11:39:22,094 - INFO - Mo14: initial message broadcasted 1

2017-03-23 11:39:22,095 - DEBUG - Mo14: stored msg (ty: 1, v: 1, r: 1), from y3JlSK1EwuGwy6L3BhDloQOLo0I0sVAFufAnkbWpNSM=

2017-03-23 11:39:22,096 - DEBUG - Mo14: no bin values

2017-03-23 11:39:23,492 - DEBUG - Mo14: stored msg (ty: 1, v: 1, r: 1), from baj18tzBbCMQd8axsqzvh9/3eiMjLlSZXbiWjTCaGX4=

2017-03-23 11:39:23,492 - DEBUG - Mo14: relaying v 1

2017-03-23 11:39:23,492 - DEBUG - Mo14: broadcast est: v = 1, r = 1

2017-03-23 11:39:23,493 - DEBUG - Mo14: no bin values

2017-03-23 11:39:23,493 - DEBUG - Mo14: stored msg (ty: 1, v: 1, r: 1), from baj18tzBbCMQd8axsqzvh9/3eiMjLlSZXbiWjTCaGX4=

2017-03-23 11:39:23,493 - DEBUG - Mo14: no bin values

2017-03-23 11:39:23,493 - DEBUG - Mo14: stored msg (ty: 1, v: 1, r: 1), from y3JlSK1EwuGwy6L3BhDloQOLo0I0sVAFufAnkbWpNSM=

2017-03-23 11:39:23,494 - DEBUG - Mo14: no bin values

2017-03-23 11:39:24,799 - DEBUG - Mo14: stored msg (ty: 1, v: 1, r: 1), from pcgltYBjOmo6WZRoG/3XP+YTkhW9ri3icdn9xdg3Q0o=

2017-03-23 11:39:24,799 - DEBUG - Mo14: adding to bin_values

2017-03-23 11:39:24,799 - DEBUG - Mo14: reached est state

2017-03-23 11:39:24,800 - DEBUG - Mo14: relaying w 1

2017-03-23 11:39:24,800 - DEBUG - Mo14: broadcast aux: v = 1, r = 1

2017-03-23 11:39:24,801 - DEBUG - Mo14: reached aux state

2017-03-23 11:39:24,802 - DEBUG - Mo14: self.r 1 not in self.aux_values {}

2017-03-23 11:39:24,802 - DEBUG - Mo14: stored msg (ty: 2, v: 1, r: 1), from baj18tzBbCMQd8axsqzvh9/3eiMjLlSZXbiWjTCaGX4=

2017-03-23 11:39:24,802 - DEBUG - Mo14: reached aux state

2017-03-23 11:39:24,803 - DEBUG - Mo14: stored msg (ty: 2, v: 1, r: 1), from y3JlSK1EwuGwy6L3BhDloQOLo0I0sVAFufAnkbWpNSM=

2017-03-23 11:39:24,804 - DEBUG - Mo14: reached aux state

2017-03-23 11:39:24,805 - DEBUG - Mo14: stored msg (ty: 1, v: 1, r: 1), from pcgltYBjOmo6WZRoG/3XP+YTkhW9ri3icdn9xdg3Q0o=

2017-03-23 11:39:24,805 - DEBUG - Mo14: adding to bin_values

2017-03-23 11:39:24,805 - DEBUG - Mo14: reached aux state

2017-03-23 11:39:24,805 - DEBUG - Mo14: stored msg (ty: 2, v: 1, r: 1), from pcgltYBjOmo6WZRoG/3XP+YTkhW9ri3icdn9xdg3Q0o=

2017-03-23 11:39:24,806 - DEBUG - Mo14: reached aux state

2017-03-23 11:39:24,808 - DEBUG - Mo14: vals =? set([v]), set([1]) =? set([1])

2017-03-23 11:39:24,808 - INFO - Mo14: DECIDED 1

2017-03-23 11:39:25,550 - DEBUG - Mo14: not processing due to stopped state

Sybil defense and incentives need to come from application architect.

scientific publication requires theorem material. Proof of spam vulnerability, consensus guarantee, agreement, gossip for signature propagation?

Efficient, scalable, eventual double spending and fork detection.

This thesis work is focused on the light-weight BFT consensus model. Each party needs to truthfully disclose a hash value of its state. Our algorithm guarantees that this value can't be altered in the future and it is also impossible to reveal different hashes to different parties. Together this work provides tamper-proof state and prevents forks. With full scalability.

Second phase is validating transactions between the global consensus checkpoints. We assume an n-neighbor workload, typical for Internet-of-Things scenarios where interactions are localized. With a 5-neighbor workload these 5 neighbors need to receive the full translation list and cryptographically validate their correctness. Correctness check validates the signature sequences, transaction counters, and ensures re-ordering, gaps, or re-writing of the transaction list is impossible; if we select truthful validators. Our protocol enforces only signing transactions if and only if the counterparty chain has been fully checked for correctness. We call this policy the due diligence requirement which eases the identification of faulty or bad actors. Together within our architecture the only bound to the global transactions rate: local validation.

Brainstorm: only include the sha256(Tx_block) in the blockchain. Enable nano-transactions and giga-transaction blocks.

Please include in thesis related work section. Related work from 2016 this Tangle system contain many of the ideas of our 2012 work, genesis blocks for all; transactions graph, no native cybercurrency. However, no (global) consensus or spam prevention mechanism is provided. Also similar to Corda.

consensus-thesis-code>python -m pytest -v -x

============================================================ test session starts =============================================================

platform linux2 -- Python 2.7.12, pytest-2.8.7, py-1.4.31, pluggy-0.3.1 -- /usr/bin/python

cachedir: .cache

rootdir: /home/pouwelse/GITHUB/consensus-thesis-code, inifile:

collected 45 items

tests/test_consensus.py::test_acs[4-1-omission] ERROR

=================================================================== ERRORS ===================================================================

__________________________________________________ ERROR at setup of test_acs[4-1-omission] __________________________________________________

pytest.fixture functions cannot use ``yield``. Instead write and return an inner function/generator and let the consumer call and iterate over it.:

@pytest.fixture

def discover():

p = subprocess.Popen(['python2', '-m', 'src.discovery'])

time.sleep(1) # wait for it to spin up

yield None

print "Test: tear down discovery"

p.terminate()

/home/pouwelse/GITHUB/consensus-thesis-code/tests/tools.py:15

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! Interrupted: stopping after 1 failures !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

========================================================== 1 error in 0.28 seconds ===========================================================

XPS13:~/GITHUB/consensus-thesis-code>python --version

Python 2.7.12

consensus-thesis-code>env PYTHONPATH=. python -m pytest --version

This is pytest version 2.8.7, imported from /usr/lib/python2.7/dist-packages/pytest.pyc

Current protocol implementation blocks your local chain for any outstanding signature-request. During blocked state all other incoming requests are responded to with an abort message. Full concurency has been achieved in Tribler true_halves implementation by directly putting half-signed blocks on your local chain. Thus data inconsistencies are allowed to achieve concurrency, some half-signed blocks may not be signed by the counterparty (#2135). Yes, I don't like it either; but have no elegant design alternative.

ToDo: fix performance bug or logical bug + true halves.

As of 1336bb5, true halves and compact blocks are implemented.

Initial experiments show better results than 6 days ago. That is higher (verified) transaction rate and the graph looks a bit more reasonable.

A lot of performance performances improvements were merged these couple of weeks, the most notable one is changing serialisation library from jsonpickle to protobuf (PR).

I'm pleased to say the performance results improved significantly, previously we were just below 1000 tx/s, with the new code it can reach 5000 tx/s. Here is the same graph but with new code.

@kc1212 If you are still unhappy with performance, you can make protobuf another 12-20 times faster by using the C++ backend:

os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "cpp"

os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION_VERSION"] = "2"

import google.protobufOfficial doc: https://developers.google.com/protocol-buffers/docs/reference/python-generated

My serializer using this: https://github.com/qstokkink/TriblerProtobufSerialization/blob/master/serializer.py

@qstokkink Thanks for the suggestion, 12-20 times faster is hard to ignore.

The document mentioned that "environment variable needs to be set before installing the protobuf library", is the protobuf on the TUD DAS5 a bit different from what you get by simply pip install protobuf?

@kc1212 pip install protobuf will work without a problem on most nix based systems, mac requires a brew install protobuf and on Windows.. well.. it's a bit more work to get the cpp backend.

tl;dr Yes, that should work just fine on the DAS5. (I have a manual build script here for CentOS, but I think it is obsolete now)

Sybil defense and incentives need to come from application architect.

scientific publication requires theorem material. Proof of spam vulnerability, consensus guarantee,

agreement, gossip for signature propagation?

Efficient, scalable, eventual double spending and fork detection.

This work provides the scalable blockchain fabric. Our efficient consensus mechanism is generic and does not pose any restrictions on the Sybil defense mechanism. Within our architecture you can select a proof-of-work, proof-of-stake or our own preferred trust-based approach.

ToDo:

- malicious behavior experiment ?

- correctness proof

- guaranteed eventual detection of cheaters

- performance bounds and scalability

My current model is fairly loose and fails to capture state transitions.

I'm considering to model my system using Canetti's technique described in Universally Composable Security, if I can digest it. This appears to be the technique used in The Bitcoin Backbone Protocol and Hybrid Consensus.

- Holy Grail: we present a consensus model with proven correctness. Avoid probabilistic behavior?

- bit more intro + blockchain picture in intro (1 MB block size, global consistent broadcast primitive, near civil war for the blockchain, governance problem, 5 nerds in control, R3 consensus == NULL, ... )

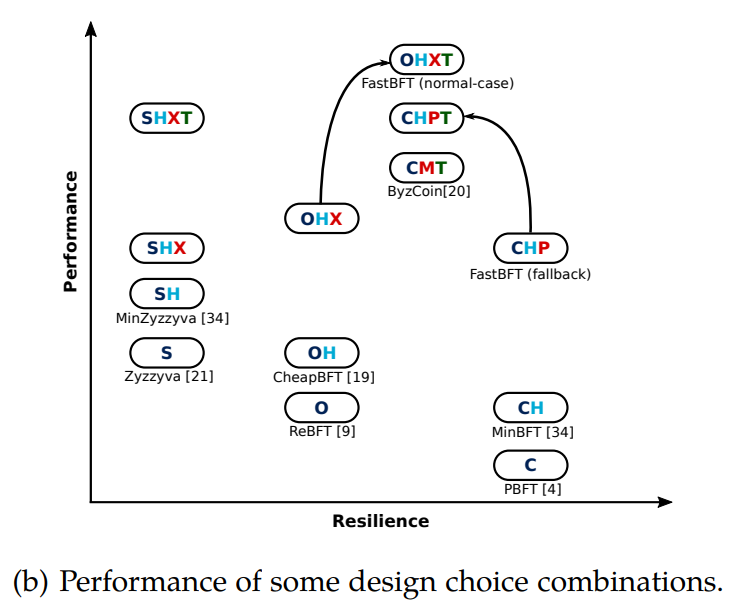

- Chapter Problem Description (30 years of prior BFT work, quote: "PBFT triggered a renaissance in BFT replication research, with protocols like Q/U" etc.

- Security and Fault Tolerance Analysis: Agreement, validity, termination as key topics ("However, not everything is perfect. Now we show a negative result, where the liveness property cannot be attained.")

Malicious nodes and collusion ? - impact of 51% attack, how fast would it crumble to bits and pieces?

Related work from Sydney Consensus_Red_Belly_Blockchain.pdf, great blockchain course slides (including Red-Bellied blockchain): http://poseidon.it.usyd.edu.au/~gramoli/largescalenetworks/lectures/10-blockchains.pdf

Fast byzantine consensus: https://www.cs.utexas.edu/~lorenzo/papers/Martin06Fast.pdf

Solid progress: mathematical proof that transaction rate now scales linear with participants!

- 2.4 Non-goals

Rephrase that as 'limitations'. Our work removes the transaction bound from the blockchain, however, the price to pay is that the Sybil attack prevention mechanism moves from the blockchain towards the application layer. This is a similar approach as seen successfully in the database world, where for large-scale systems the costly ACID-guarantees moved to the application layer (DynamoDB, NoSQL, ..) - 3 System Architecture

We present a design which removes the transaction rate restrictions. We combine HoneyBFT with our prior work on providing each entity with their own blockchain. We duplicate the essence of the architecture of the Internet itself, creating loosely coupled autonomous blockchain entities. Our Trustchain only interactions with others is exchange of a checkpoint. - Chapter: 4 Correctness and Complexity Analysis

- 4.2 Performance and Complexity Analysis

- Fig 5.3 excellent stuff

- Figures: compare to prior work with real or estimated performance bounds!

@kc1212 very related work, also quite new (credits for @synctext for finding this one): http://poseidon.it.usyd.edu.au/~concurrentsystems/doc/ConsensusRedBellyBlockchain.pdf

I was unable to verify the claims in the "Red-Bellied blockchain" work. Especially the 250k transaction throughput for "CGLR" and "Boosted-MMR". I couldn't find any information on these keywords, the document also did not provide any reference.

Title: Blockchain Consensus Protocol with Horizontal Scalability

Can the mathematical proof of linear scalability also be given without a global consistent reliable broadcast? How about diminishing return factors? Can a gossip-based approach also yield the same guaranteed performance and scale? It can be assumed that we have a 2D network model where nodes are aware of their lowest latency neighbors.

DDoS and spam vulnerability analysis/considerations?

Thesis: "Our consensus protocol runs on top of Extended TrustChain."

More like: we used unmodified ACS as the key building block for our scalable consensus model.

More like: our key difference from related work is the independence of our transaction validation. Prior systems such a Bitcoin and Ethereum entangled the consensus algorithm with the transaction validation, restricting performance. By splitting this functionality we achieved mathematically proven horizontal scalability for the first time.

typo: Meaning that trasanctions with adversaries

Attempt matters like: no-forks-possible proof ?

Another proof? protocol will always terminate for any finite-sized input. (with minimal time assumptions)

4.2.4 Global "linear" throughput

"then the network cannot keep up with the large number of fragments". Our default cautious policy is to download all their new blocks since the last checkpoint before transacting with a stranger. This causes a bottleneck which could be avoided if encounters are predictable and pre-fetching is employed.

we dedicate this chapter on comparing our results with related work == no entropy.

Use word taxonomy.

6.4 Hybrid systems; not informative

What is the name of the thing your did in your thesis?

(trick you use is hash-based state consensus; leaderless)

@kc1212 thanks! I expected that already. You probably don't have time to do this but I was thinking, maybe it's helpful to make an interaction model (i.e. based on interactions in the Bitcoin or Ethereum blockchain) and run your consensus algorithm on top of that. This closely resembles real-world transactions.

Fast AUS blockchain: http://poseidon.it.usyd.edu.au/~concurrentsystems/rbbc/Benchmark.html

shows a single graph with 400k transactions/second...

A 9-page paper version of my thesis is available:

https://github.com/kc1212/consensus-thesis/raw/master/paper/paper.pdf

The thesis also went through many polishing rounds, it can be found at the usual place:

https://github.com/kc1212/consensus-thesis/raw/master/thesis/thesis.pdf

The source code for 10k transactions per second: https://github.com/kc1212/checo

Had a look at the new PARSEC Algorithm?

https://github.com/maidsafe/rfcs/blob/master/text/0049-parsec/0049-parsec.md

PARSEC Algorithm is certainly interesting, thnx!

A gossip protocol is used to allow efficient communication between nodes, as in Hashgraph

and [5]. Propagating a message, and indeed, reaching consensus only costs O(N log N)

communications and O(log N) stages.

Excellent related BFT work overview, Scalable Byzantine Consensus via Hardware-assisted Secret Sharing

This thesis finished successfully: https://repository.tudelft.nl/islandora/object/uuid%3A86b2d4d8-642e-4d0f-8fc7-d7a2e331e0e9

Closing issue.