News (5th May 2024): The A2A Bittensor Subnet is now on the mainchain with UID SN25! Join the Bittensor Discord and find us in channel א·alef·25. Also check our X account for our vision of an avenue where everyone can contribute to building the best open-source A2A models.

Note: For more details on Virtuals Protocol's Audio-to-Animation (A2A) Bittensor Subnet, see our whitepaper.

Audio-to-Animation (A2A), also referred to as audio-driven animation, generates visuals that dynamically respond to audio inputs. This technology finds applications across a wide range of domains including gaming AI agents, livestreaming AI idols, virtual companions, metaverses, and more.

This Bittensor subnet offers a platform for democratizing the creation of A2A models, gathering the help of the wider ML community in Bittensor to generate the best animated motions and bring life to on-chain AI agents.

We will develop the A2A models in several phases, taking an iterative approach to improve them over time.

- Phase 1: Focus on generating full body motions, such as dance movements, based on the audio input from a specific curated dataset.

- Phase 2: Recognise the mood and genre of the audio input and adjust the generated animation accordingly.

- Phase 3: Widen the models' capabilities beyond dance audio and motions to general animation motions (e.g. gestures).

- Phase 4: Expand the A2A model into an Audio-to-Video network in order to enhance visual experiences.

Currently, we are in Phase 1, which focuses on audio-to-dance motions. Validators choose a prompt from the reference library and send it to miners, who generate the animation outputs.

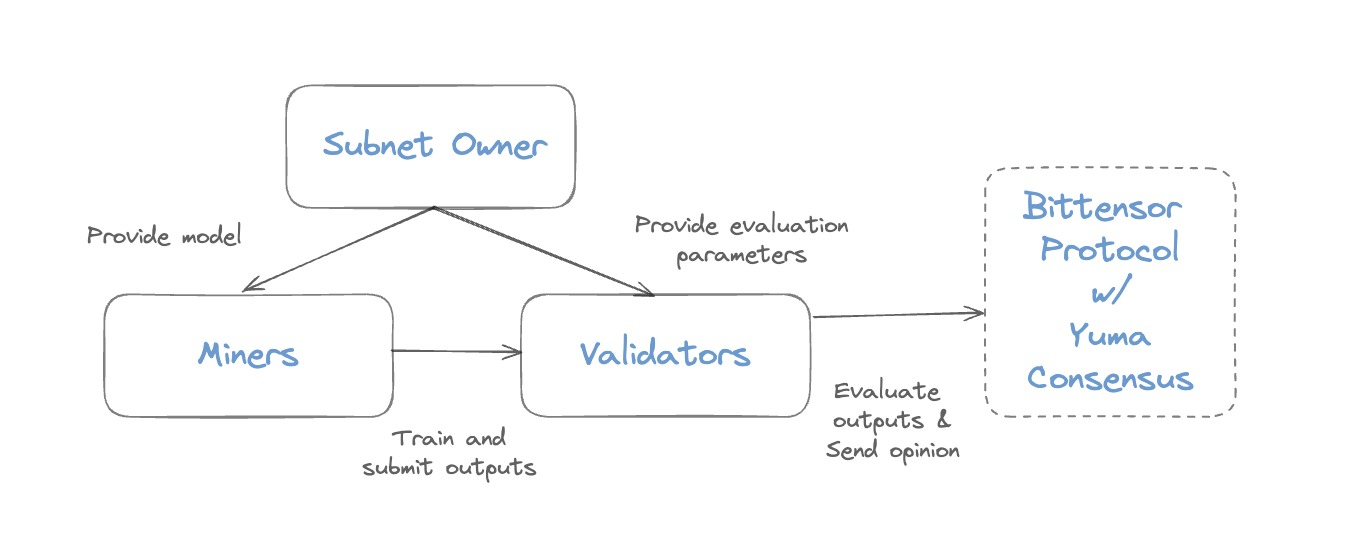

- Subnet owner: Virtuals Protocol, as the subnet owner, creates the modules that miners and validators use to train models and evaluate the generated animations. The subnet owner also decides on the parameters used to evaluate an animation's performance.

- Miners: Generate animations with A2A models using reference models or other models.

- Validators: Provide audio prompts to miners and evaluate the submitted animation from miners based on the parameters suggested by the subnet owner.

- Bittensor protocol: Aggregates weights using Yuma Consensus and determines the final weights and allocation ratios for each miner and validator.
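The interaction between these roles can be sketched as a single validation round. This is a minimal illustration only, not the subnet's actual implementation: `run_validation_round`, the `miners` mapping, and `score_fn` are hypothetical names, miners are stubbed as plain callables rather than network endpoints, and the on-chain aggregation done by Yuma Consensus is not modelled here.

```python
import random
from typing import Callable, Dict, List

# Hypothetical types: an "animation" is stood in for by a list of floats.
Animation = List[float]


def run_validation_round(
    prompts: List[str],
    miners: Dict[int, Callable[[str], Animation]],
    score_fn: Callable[[str, Animation], float],
    rng: random.Random,
) -> Dict[int, float]:
    """Pick one prompt, collect one animation per miner, and return
    per-miner weights that sum to 1 (assumed normalization scheme)."""
    if not miners:
        return {}

    # The validator chooses a prompt from its reference library.
    prompt = rng.choice(prompts)

    # Each miner generates an animation; negative scores are clamped to 0.
    raw = {
        uid: max(0.0, score_fn(prompt, generate(prompt)))
        for uid, generate in miners.items()
    }

    total = sum(raw.values())
    if total == 0:
        # No miner scored above zero: fall back to uniform weights.
        return {uid: 1.0 / len(raw) for uid in raw}
    return {uid: score / total for uid, score in raw.items()}
```

In a real validator the resulting weights would be submitted to the chain, where the protocol combines them with the weights set by other validators.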

Because animation outputs are non-deterministic, an evaluation mechanism that considers multiple parameters will be implemented in phases. Read more about the evaluation mechanism in the Validator documentation and in the whitepaper.
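As a rough illustration of how several evaluation parameters could be combined into one score, the sketch below uses a weighted average. The function name `combine_scores` and the parameter keys are hypothetical, and the weighted-average scheme itself is an assumption; the actual parameters and formula are defined in the Validator documentation and the whitepaper. Giving a parameter a weight of 0 keeps it inactive until a later phase introduces it.

```python
from typing import Dict


def combine_scores(metrics: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-parameter scores (assumed to lie in [0, 1]).

    Parameters with a weight of 0, or with no measured value, are ignored.
    """
    active = {
        name: weight
        for name, weight in weights.items()
        if weight > 0 and name in metrics
    }
    total_weight = sum(active.values())
    if total_weight == 0:
        return 0.0

    # Clamp each metric to [0, 1] before weighting it.
    weighted_sum = sum(
        min(1.0, max(0.0, metrics[name])) * weight
        for name, weight in active.items()
    )
    return weighted_sum / total_weight


# Hypothetical parameter names, for illustration only.
example = combine_scores(
    {"param_a": 1.0, "param_b": 0.5},
    {"param_a": 1.0, "param_b": 1.0, "param_c": 0.0},
)
```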

See Validator documentation and guide.

See Miner documentation and guide.

The reference A2A models and implementations come from the open-source projects EDGE and DiffuseStyleGesture.

This repository is licensed under the MIT License.

The MIT License (MIT)

Copyright © 2023 Yuma Rao

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.