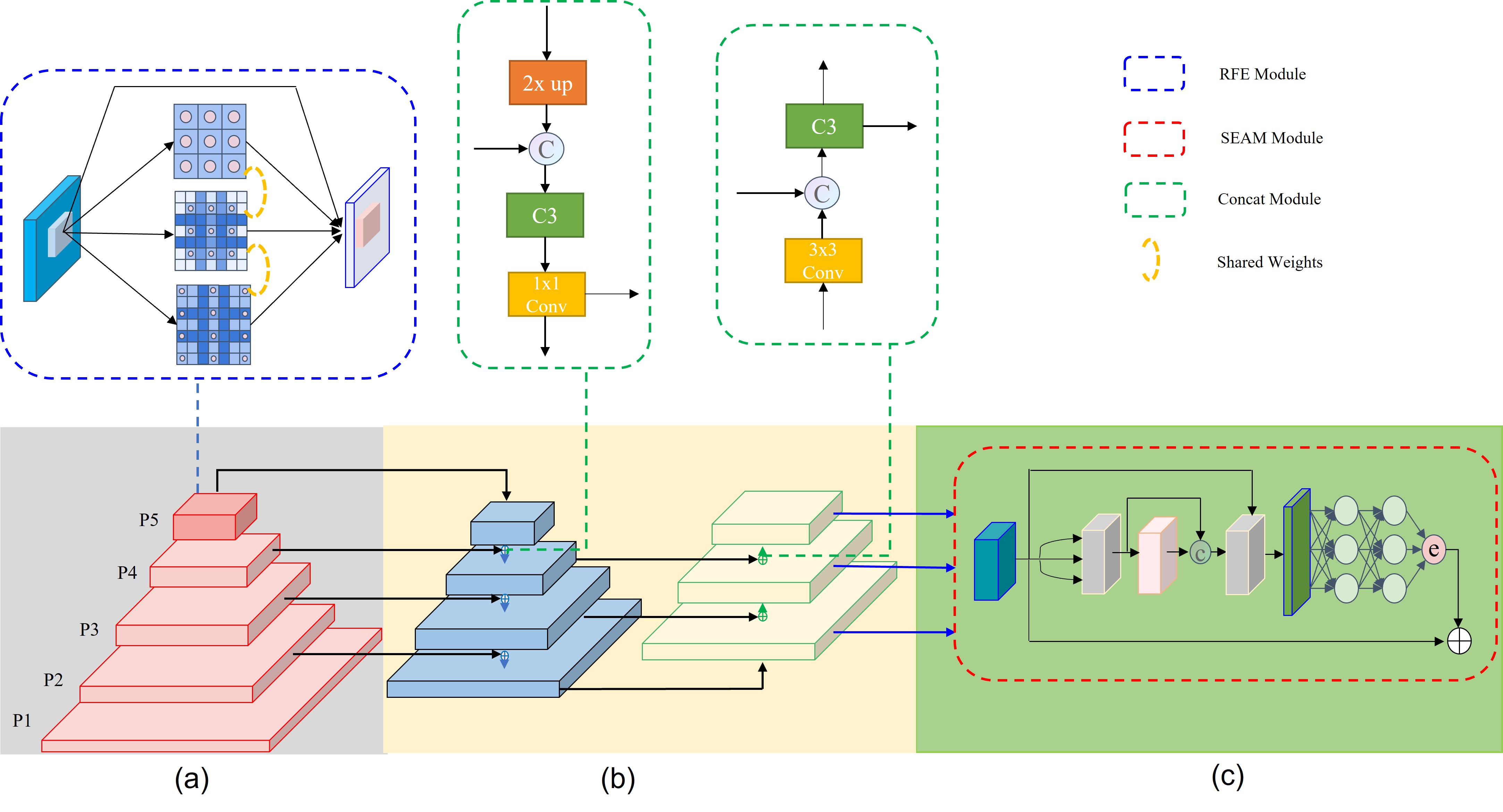

YOLO-FaceV2: A Scale and Occlusion Aware Face Detector

https://arxiv.org/abs/2208.02019

Create a Python Virtual Environment.

conda create -n {name} python=x.x

Enter the Python virtual environment.

conda activate {name}

Install PyTorch in this environment.

pip install torch==1.10.0+cu111 torchvision==0.11.0+cu111 torchaudio==0.10.0 -f https://download.pytorch.org/whl/torch_stable.html

Install the other Python packages.

pip install -r requirements.txt

Get the code.

git clone https://github.com/Krasjet-Yu/YOLO-FaceV2.git

Download the WIDER FACE dataset, then convert it to YOLO format.

# You can modify convert.py and voc_label.py if needed.

python3 data/convert.py

python3 data/voc_label.py

link: https://pan.baidu.com/s/1FVIY20qtTSM9gDhz7DtJkA

code: tzfs
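To make the conversion step above more concrete, here is a minimal sketch of the box arithmetic that a WIDER FACE to YOLO conversion typically involves. It is not the repository's `convert.py` or `voc_label.py`; the function name `wider_box_to_yolo` and the single face class id `0` are assumptions made for illustration. It assumes WIDER FACE boxes are given as top-left `x, y` plus `w, h` in pixels, and that a YOLO label line is `class cx cy w h` normalized by the image size. Any extra label fields the repository's scripts may write are not covered here.

```python
def wider_box_to_yolo(x, y, w, h, img_w, img_h, class_id=0):
    """Convert one pixel box (top-left x, y, width, height) into a YOLO
    label line: "class cx cy w h", with all four values normalized to [0, 1].

    Hypothetical helper for illustration; class_id=0 assumes a single
    "face" class.
    """
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image size must be positive")

    # Clip the box corners to the image so normalized values stay in [0, 1].
    x1 = min(max(x, 0), img_w)
    y1 = min(max(y, 0), img_h)
    x2 = min(max(x + w, 0), img_w)
    y2 = min(max(y + h, 0), img_h)

    # YOLO stores the box center and size, each divided by the image size.
    cx = (x1 + x2) / 2 / img_w
    cy = (y1 + y2) / 2 / img_h
    bw = (x2 - x1) / img_w
    bh = (y2 - y1) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {bw:.6f} {bh:.6f}"


if __name__ == "__main__":
    # A 200x100 box at (100, 50) in a 1000x500 image.
    print(wider_box_to_yolo(100, 50, 200, 100, 1000, 500))
    # prints: 0 0.200000 0.200000 0.200000 0.200000
```

Clipping before normalizing is a design choice of this sketch: boxes that extend past the image border would otherwise produce values outside `[0, 1]`.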

Train your model on WIDER FACE.

python train.py --weights preweight.pt \
    --data data/WIDER_FACE.yaml \
    --cfg models/yolov5s_v2_RFEM_MultiSEAM.yaml \
    --batch-size 64 \
    --epochs 250

Evaluate the trained model on WIDER FACE with the following commands.

python widerface_pred.py --weights runs/train/x/weights/best.pt \
    --save_folder ./widerface_evaluate/widerface_txt_x

cd widerface_evaluate/

python evaluation.py --pred ./widerface_txt_x

Download the eval_tool to show the performance.
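For readers who want to check what a prediction folder might contain before running the evaluation, the sketch below writes one image's detections in the layout commonly used for WIDER FACE submissions: one text file per image, grouped by event folder, with the image name on the first line, the number of faces on the second, and then one `x y w h score` line per face. Whether `widerface_pred.py` produces exactly this layout is an assumption, and `write_wider_prediction` is a hypothetical helper rather than part of the repository.

```python
from pathlib import Path


def write_wider_prediction(save_folder, event, image_name, boxes):
    """Write detections for one image as <save_folder>/<event>/<image stem>.txt.

    boxes: iterable of (x, y, w, h, score), where x, y is the top-left corner
    in pixels. The layout is assumed from the usual WIDER FACE submission
    format, not taken from this repository.
    """
    boxes = list(boxes)
    stem = Path(image_name).stem

    out_dir = Path(save_folder) / event
    out_dir.mkdir(parents=True, exist_ok=True)

    # Line 1: image name, line 2: face count, then one line per face.
    lines = [stem, str(len(boxes))]
    for x, y, w, h, score in boxes:
        lines.append(f"{x:.1f} {y:.1f} {w:.1f} {h:.1f} {score:.3f}")

    out_path = out_dir / f"{stem}.txt"
    out_path.write_text("\n".join(lines) + "\n")
    return out_path
```

A call such as `write_wider_prediction("./widerface_txt_x", "0--Parade", "0_Parade_0001.jpg", [(10, 20, 30, 40, 0.9)])` would create `./widerface_txt_x/0--Parade/0_Parade_0001.txt`; the event and image names here are only examples.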

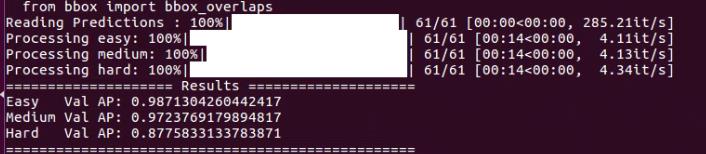

The result is shown below:

For hyperparameter evolution, see ultralytics/yolov5#607.

# Single-GPU

python train.py --epochs 10 --data coco128.yaml --weights yolov5s.pt --cache --evolve

# Multi-GPU

for i in 0 1 2 3 4 5 6 7; do

sleep $(expr 30 \* $i) && # 30-second delay (optional)

echo 'Starting GPU '$i'...' &&

nohup python train.py --epochs 10 --data coco128.yaml --weights yolov5s.pt --cache --device $i --evolve > evolve_gpu_$i.log &

done

# Multi-GPU bash-while (not recommended)

for i in 0 1 2 3 4 5 6 7; do

sleep $(expr 30 \* $i) && # 30-second delay (optional)

echo 'Starting GPU '$i'...' &&

"$(while true; do nohup python train.py... --device $i --evolve 1 > evolve_gpu_$i.log; done)" &

done

https://github.com/ultralytics/yolov5

https://github.com/deepcam-cn/yolov5-face

https://github.com/open-mmlab/mmdetection

https://github.com/dongdonghy/repulsion_loss_pytorch

If you find this work helpful, please cite:

@ARTICLE{2022arXiv220802019Y,

author = {{Yu}, Ziping and {Huang}, Hongbo and {Chen}, Weijun and {Su}, Yongxin and {Liu}, Yahui and {Wang}, Xiuying},

title = "{YOLO-FaceV2: A Scale and Occlusion Aware Face Detector}",

journal = {arXiv e-prints},

keywords = {Computer Science - Computer Vision and Pattern Recognition},

year = 2022,

month = aug,

eid = {arXiv:2208.02019},

pages = {arXiv:2208.02019},

archivePrefix = {arXiv},

eprint = {2208.02019},

primaryClass = {cs.CV},

adsurl = {https://ui.adsabs.harvard.edu/abs/2022arXiv220802019Y},

adsnote = {Provided by the SAO/NASA Astrophysics Data System}

}

This code is released under the MIT License. It can be used for business inquiries or professional support requests.