ECOLE is a deep learning-based software tool that predicts copy number variant (CNV) calls on whole exome sequencing (WES) data using read depth sequences.

The manuscript can be found here: ECOLE: Learning to call copy number variants on whole exome sequencing data

The repository with the necessary data and scripts to reproduce the results in the paper can be found here: ECOLE results reproduction

Deep Learning, Copy Number Variation, Whole Exome Sequencing

Berk Mandiracioglu, Furkan Ozden, Gun Kaynar, M. Alper Yilmaz, Can Alkan, A. Ercument Cicek

[firstauthorname].[firstauthorsurname]@epfl.ch

Warning: ECOLE is completely free for academic use. However, it is licensed for commercial use. Please refer to the License section first for more information.

- ECOLE is a python3 script and is easy to run once the required packages are installed.

For easy requirement handling, you can use the ECOLE_environment.yml file to initialize a conda environment with the requirements installed:

$ conda env create --name ecole_env -f ECOLE_environment.yml

$ conda activate ecole_env

Note that the provided environment yml file is for Linux systems. For macOS users, the corresponding versions of the packages might need to be changed.

- ECOLE optionally supports GPUs. See the GPU Support section.

Important notice: Please call the ECOLE_call.py script from the scripts directory.

- Pretrained model from the paper to use, one of the following: (1) ecole, (2) ecole-ft-expert, (3) ecole-ft-somatic.

- Batch size to be used when performing CNV calling on the samples.

- Relative or absolute path to the processed WES samples, including read depth data.

- Relative or absolute path to the output directory where the ECOLE output file is written.

- Desired level of resolution, one of the following: (1) exonlevel, (2) merged.

- Relative or absolute path to the mean and standard deviation statistics of read depth values used for normalization. These values are precalculated from the training dataset before pretraining.

- PCI bus ID of the GPU in your system to use.

- You can check the PCI bus IDs of the GPUs in your system in various ways, for example with the gpustat tool.

- Check the version of ECOLE.

- See the help page.
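As a rough illustration of what selecting a GPU by PCI bus ID involves, the sketch below builds the standard CUDA environment variables `CUDA_DEVICE_ORDER` and `CUDA_VISIBLE_DEVICES`. Whether ECOLE sets these variables internally is an assumption, and the helper name `cuda_env_for_device` is hypothetical.

```python
import os
from typing import Dict, Optional


def cuda_env_for_device(pci_bus_index: Optional[int]) -> Dict[str, str]:
    """Build CUDA environment variables for one GPU, or for CPU-only when None.

    Hypothetical helper for illustration; it is not part of ECOLE.
    """
    # Order devices by PCI bus ID so indices follow the order shown by nvidia-smi.
    env = {"CUDA_DEVICE_ORDER": "PCI_BUS_ID"}
    if pci_bus_index is None:
        # An empty device list hides every GPU from CUDA-based libraries.
        env["CUDA_VISIBLE_DEVICES"] = ""
    elif pci_bus_index < 0:
        raise ValueError("GPU index must be non-negative")
    else:
        env["CUDA_VISIBLE_DEVICES"] = str(pci_bus_index)
    return env


# These variables must be set before any CUDA library is initialized.
os.environ.update(cuda_env_for_device(0))
```

Passing `None` instead of an index yields a CPU-only configuration under the same assumptions.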

Usage of ECOLE is very simple!

- This project uses conda package management software to create a virtual environment and facilitate reproducibility.

- For Linux users:

- Please take a look at the Anaconda repo archive page, and select an appropriate version that you'd like to install.

- Replace Anaconda3-version.num-Linux-x86_64.sh with your choice:

$ wget -c https://repo.continuum.io/archive/Anaconda3-version.num-Linux-x86_64.sh

$ bash Anaconda3-version.num-Linux-x86_64.sh

- It is important to set up the conda environment, which includes the necessary dependencies.

- Please run the following lines to create and activate the environment:

$ conda env create --name ecole_env -f ECOLE_environment.yml

$ conda activate ecole_env

- It is necessary to preprocess the WES data samples to obtain read depth and other metadata and make them ready for CNV calling.

- Please run the following line:

$ source preprocess_samples.sh

- Here, we demonstrate an example that runs ECOLE on GPU device 0 and obtains exon-level CNV calls.

- Please run the following script:

$ source ecole_call.sh

You can change the argument parameters within the script to run it on CPU and/or to obtain merged CNV calls.
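The text does not describe how ECOLE derives merged calls from exon-level ones. Purely to illustrate the difference between the two resolutions, the sketch below assumes that consecutive exon-level calls for the same sample and chromosome with the same prediction are joined into one segment. The function name, tuple layout, and labels `A` and `B` are hypothetical placeholders.

```python
from typing import List, Tuple

# (sample, chromosome, start, end, prediction); layout assumed for illustration
Call = Tuple[str, str, int, int, str]


def merge_adjacent_calls(calls: List[Call]) -> List[Call]:
    """Join consecutive calls that share sample, chromosome, and prediction.

    Assumes the input is already sorted by sample, chromosome, and start.
    This is an illustrative rule, not ECOLE's documented behavior.
    """
    merged: List[Call] = []
    for sample, chrom, start, end, label in calls:
        if merged:
            p_sample, p_chrom, p_start, p_end, p_label = merged[-1]
            if (p_sample, p_chrom, p_label) == (sample, chrom, label):
                # Extend the previous segment instead of starting a new one.
                merged[-1] = (sample, chrom, p_start, max(p_end, end), label)
                continue
        merged.append((sample, chrom, start, end, label))
    return merged


exon_calls = [
    ("S1", "chr21", 100, 200, "A"),
    ("S1", "chr21", 300, 400, "A"),
    ("S1", "chr21", 500, 600, "B"),
]
merged_calls = merge_adjacent_calls(exon_calls)
```

With this placeholder input, the first two exon-level rows collapse into a single segment spanning 100 to 400, while the third row stays separate.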

- At the end of the CNV calling procedure, ECOLE writes its output file to the directory given with the -o option. In this tutorial, it is ./ecole_calls_output.

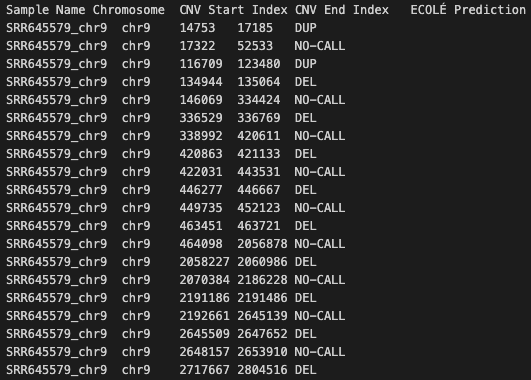

- Output file of ECOLE is a tab-delimited .bed like format.

- Columns in the output file of ECOLE are the following with order: 1. Sample Name, 2. Chromosome, 3. CNV Start Index, 4. CNV End Index, 5. ECOLE Prediction

- Following figure is an example of ECOLE output file.
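A minimal sketch of reading this five-column, tab-delimited format with the Python standard library follows. The field names, the `read_ecole_calls` helper, and the sample rows are hypothetical; the sketch also assumes that any row whose start or end is not an integer (for example a header line, if one exists) can be skipped.

```python
import csv
import io
from typing import Iterable, List, NamedTuple


class CnvCall(NamedTuple):
    sample: str
    chromosome: str
    start: int
    end: int
    prediction: str


def read_ecole_calls(lines: Iterable[str]) -> List[CnvCall]:
    """Parse tab-delimited rows into CnvCall records (illustrative helper)."""
    calls = []
    for row in csv.reader(lines, delimiter="\t"):
        if len(row) < 5:
            continue  # skip blank or incomplete rows
        try:
            start, end = int(row[2]), int(row[3])
        except ValueError:
            continue  # skip rows without integer coordinates, e.g. a header
        calls.append(CnvCall(row[0], row[1], start, end, row[4]))
    return calls


# Placeholder rows for illustration only; not real ECOLE output.
example = io.StringIO("S1\tchr21\t100\t200\tLABEL_X\nS1\tchr21\t300\t400\tLABEL_Y\n")
calls = read_ecole_calls(example)
```

To read a real file, the same function can be passed an open file handle, for instance one opened with `open(path, newline="")`.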

Important notice: Please call the ECOLE_finetune.py script from the scripts directory.

- Batch size to be used when performing CNV calling on the samples.

- Relative or absolute path to the processed WES samples, including read depth data.

- Relative or absolute path to the output directory where the ECOLE output file is written.

- Relative or absolute path to the mean and standard deviation statistics of read depth values used for normalization. These values are precalculated from the training dataset before pretraining.

- Number of epochs for which finetuning is performed.

- Learning rate to be used in finetuning.

- Path to the pretrained model weights to be loaded for finetuning.

- PCI bus ID of the GPU in your system to use.

- You can check the PCI bus IDs of the GPUs in your system in various ways, for example with the gpustat tool.

- Check the version of ECOLE.

- See the help page.
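The parameter list mentions mean and standard deviation statistics used to normalize read depth values. The sketch below shows what such a normalization could look like, assuming a standard z-score transform; the exact transform ECOLE applies is not stated here, and the function name and numbers are illustrative only.

```python
from typing import List, Sequence


def normalize_read_depth(depths: Sequence[float], mean: float, std: float) -> List[float]:
    """Apply z-score normalization: (value - mean) / std.

    Assumes mean and std were precalculated on the training dataset.
    """
    if std <= 0:
        raise ValueError("standard deviation must be positive")
    return [(depth - mean) / std for depth in depths]


# Made-up read depth values and statistics for illustration.
normalized = normalize_read_depth([10.0, 20.0, 30.0], mean=20.0, std=10.0)
print(normalized)  # [-1.0, 0.0, 1.0]
```

Reusing the training-set statistics, rather than recomputing them per sample, keeps new inputs on the same scale the pretrained model saw.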

We provide an ECOLE Finetuning example using the WES sample NA12891, restricted to chromosome 21. Step-0 and Step-1 are the same as in the ECOLE call example.

- This project uses conda package management software to create a virtual environment and facilitate reproducibility.

- For Linux users:

- Please take a look at the Anaconda repo archive page, and select an appropriate version that you'd like to install.

- Replace Anaconda3-version.num-Linux-x86_64.sh with your choice:

$ wget -c https://repo.continuum.io/archive/Anaconda3-version.num-Linux-x86_64.sh

$ bash Anaconda3-version.num-Linux-x86_64.sh

- It is important to set up the conda environment, which includes the necessary dependencies.

- Please run the following lines to create and activate the environment:

$ conda env create --name ecole_env -f ECOLE_environment.yml

$ conda activate ecole_env-

It is necessary to perform preprocessing on WES data samples to obtain read depth and other meta data and make them ready for ECOLE finetuning.

-

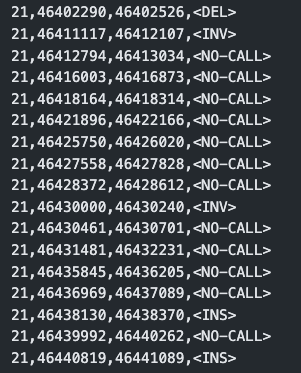

ECOLE Finetuning requires .bam and ground truth calls as provided under /finetune_example_data. Please see the below image for a sample ground truths format.

-

Please run the following line:

$ source finetune_preprocess_samples.sh- Here, we demonstrate an example to run ECOLE Finetuning on gpu device 0.

- Please run the following script:

$ source ecole_finetune.sh

You can change the argument parameters within the script to run it on CPU.

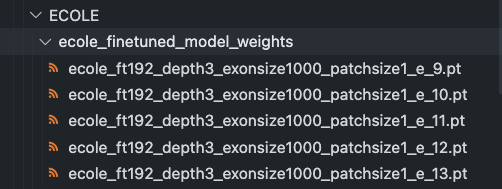

- At the end of ECOLE Finetuning, the script saves its model weights file to the directory given with the -o option. In this tutorial, it is ./ecole_finetuned_model_weights.

- CC BY-NC-SA 2.0

- Copyright 2022 © ECOLE.

- For commercial usage, please contact.