-

May 22, 2020 Releasing the pre-trained FQ-BigGAN/BigGAN at resolution 64x64 and their training logs at the link (10.34G):

-

May 22, 2020

Selfie2Anime Demois released. Try it out. -

Colab file for training and testing. Put it into

FQ-GAN/FQ-U-GAT-ITand follow the training/testing instruction. -

Selfie2Anime pretrained models are available now!! Halfway checkpoint and Final checkpoint.

-

Photo2Portrait pretrained model is released!

This repository contains source code to reproduce the results presented in the paper:

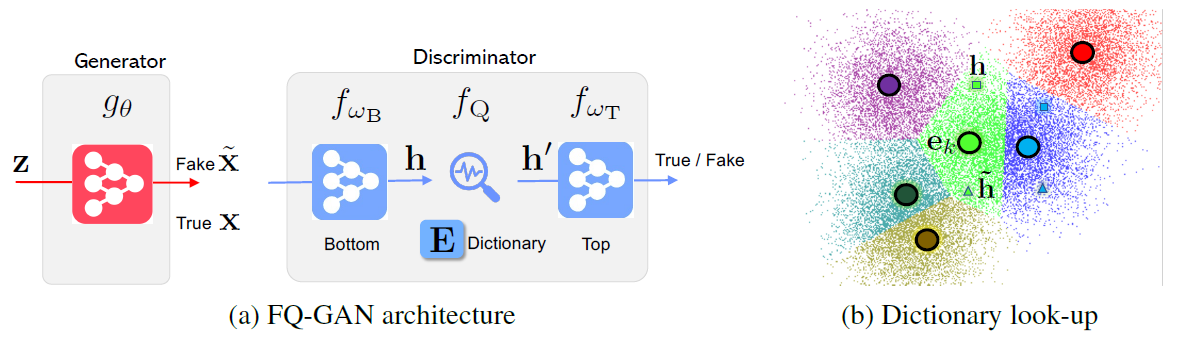

Feature Quantization Improves GAN Training, ICML 2020

Yang Zhao*,

Chunyuan Li*,

Ping Yu,

Jianfeng Gao,

Changyou Chen

This code is based on PyTorchGAN. Here we will give more details of the code usage. You will need python 3.x, pytorch 1.x, tqdm ,h5py

- CIFAR-10 or CIFAR-100 (change C10 to C100 to prepare CIFAR-100)

python make_hdf5.py --dataset C10 --batch_size 256 --data_root data

python calculate_inception_moments.py --dataset C10 --data_root data --batch_size 128

- ImageNet, first you need to manually download ImageNet and put all image class folders into

./data/ImageNet, then execute the following command to prepare ImageNet (128×128)

python make_hdf5.py --dataset I128 --batch_size 256 --data_root data

python calculate_inception_moments.py --dataset I128_hdf5 --data_root data --batch_size 128

We have four bash scripts in FQ-BigGAN/scripts to train CIFAR-10, CIFAR-100, ImageNet (64×64) and ImageNet (128×128), respectively. For example, to train CIFAR-100, you may simply run

sh scripts/launch_C100.sh

To modify the FQ hyper-parameters, we provide the following options in each script as arguments:

--discrete_layer: it specifies which layers you want quantization to be added, i.e. 0123--commitment: it is the quantization loss coefficient, default=1.0--dict_size: the size of the EMA dictionary, default=8, meaning there are 2^8 keys in the dictionary.--dict_decay: the momentum when learning the dictionary, default=0.8.

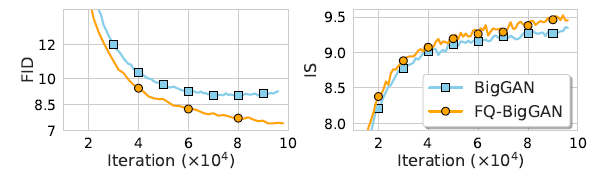

Learning curves on CIFAR-100.

FID score comparison with BigGAN on ImageNet

| Model | 64×64 | 128×128 |

|---|---|---|

| BigGAN | 10.55 | 14.88 |

| FQ-BigGAN | 9.67 | 13.77 |

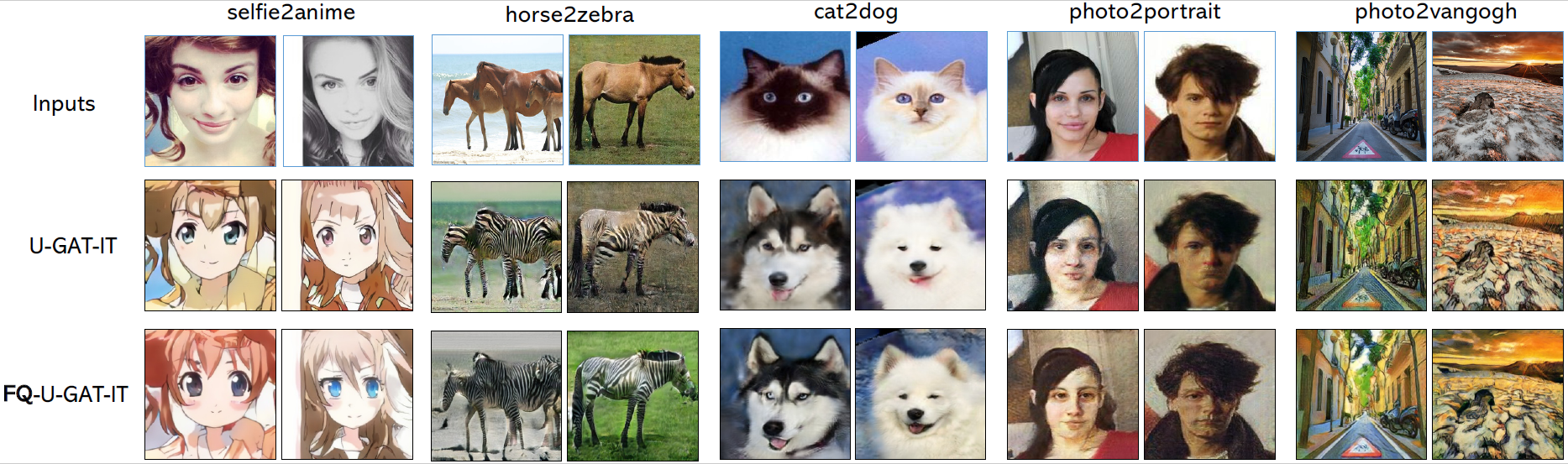

This experiment is based on the official codebase U-GAT-IT. Here we plan to give more details of the dataset preparation and code usage. You will need python 3.6.x, tensorflow-gpu-1.14.0, opencv-python, tensorboardX

We use selfie2anime, cat2dog, horse2zebra, photo2portrait, vangogh2photo.

- selfie2anime: go to U-GAT-IT to download the dataset and unzip it to

./dataset. - cat2dog and photo2portrait: here we provide a bash script adapted from DRIT to download the two datasets.

cd FQ-U-GAT-IT/dataset && sh download_dataset_1.sh [cat2dog, portrait]

- horse2zebra and vangogh2photo: here we provide a bash script adapted from CycleGAN to download the two datasets.

cd FQ-U-GAT-IT && bash download_dataset_2.sh [horse2zebra, vangogh2photo]

python main.py --phase train --dataset [type=str, selfie2anime/portrait/cat2dog/horse2zebra/vangogh2photo] --quant [type=bool, True/False] --commitment_cost [type=float, default=2.0] --quantization_layer [type=str, i.e. 123] --decay [type=float, default=0.85]

By default, the training procedure will output checkpoints and intermediate translations from (testA, testB) to checkpoints (checkpoints_quant) and results (results_quant) respectively.

python main.py --phase test --test_train False --dataset [type=str, selfie2anime/portrait/cat2dog/horse2zebra/vangogh2photo] --quant [type=bool, True/False] --commitment_cost [type=float, default=2.0] --quantization_layer [type=str, i.e. 123] --decay [type=float, default=0.85]

If the model is freshly loaded from what I have shared, remember to put them into

checkpoint_quant/UGATIT_q_selfie2anime_lsgan_4resblock_6dis_1_1_10_10_1000_sn_smoothing_123_2.0_0.85

by default and modify the file checkpoint accordingly. This structure is inherited from the official U-GAT-IT. Please feel free to modify it for convinience.

├── FQ-GAN

└── FQ-U-GAT-IT

├── dataset

├── selfie2anime

├── portrait

├── vangogh2photo

├── horse2zebra

└── cat2dog

├── checkpoint_quant

├── UGATIT_q_selfie2anime_lsgan_4resblock_6dis_1_1_10_10_1000_sn_smoothing_123_2.0_0.85

├── checkpoint

├── UGATIT.model-480000.data-00000-of-00001

├── UGATIT.model-480000.index

├── UGATIT.model-480000.meta

├── UGATIT_q_portrait_lsgan_4resblock_6dis_1_1_10_10_1000_sn_smoothing_123_2.0_0.85

└── ...

If you choose the halfway pretrained model, contents in checkpoint should be

model_checkpoint_path: "UGATIT.model-480000"

all_model_checkpoint_paths: "UGATIT.model-480000"

This experiment is based on the official codebase StyleGAN2. The original Flicker-Faces dataset includes multi-resolution data. You will need python 3.6.x, tensorflow-gpu 1.14.0, numpy

To obtain the FFHQ dataset, please refer to FFHQ repository and download the tfrecords dataset FFHQ-tfrecords into datasets/ffhq.

python run_training.py --num-gpus=8 --data-dir=datasets --config=config-e --dataset=ffhq --mirror-augment=true --total-kimg 25000 --gamma=100 --D_type=1 --discrete_layer [type=string, default=45] --commitment_cost [type=float, default=0.25] --decay [type=float, default=0.8]

| Model | 32×32 | 64×64 | 128×128 | 1024×1024 |

|---|---|---|---|---|

| StyleGAN | 3.28 | 4.82 | 6.33 | 5.24 |

| FQ-StyleGAN | 3.01 | 4.36 | 5.98 | 4.89 |

We thank official open-source implementations of BigGAN, StyleGAN, StyleGAN2 and U-GAT-IT.