This is the repo for InteRecAgent, an interactive recommender agent that applies large language models (LLMs) to bridge the gap between traditional recommender systems and conversational recommender systems (CRS).

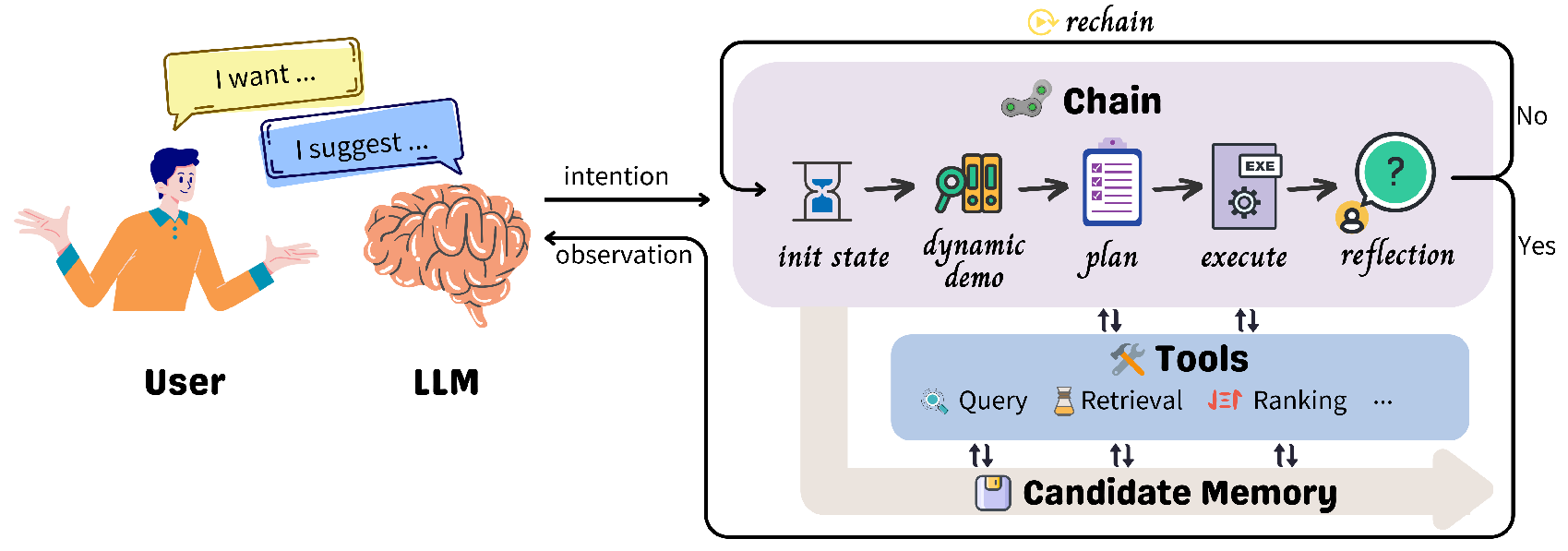

Figure 1: InteRecAgent Framework

InteRecAgent (Interactive Recommender Agent) is a framework that combines pre-trained domain-specific recommendation tools (such as SQL tools and id-based recommendation models) with large language models (LLMs) to implement an interactive, conversational recommendation agent. In this framework, the LLM primarily engages in user interaction and parses user interests as input for the recommendation tools, which are responsible for finding suitable items.

Within the InteRecAgent framework, recommendation tools are divided into three main categories: query, retrieval, and ranking. You need to provide the API for the LLM and the pre-configured domain-specific recommendation tools to build an interactive recommendation agent using the InteRecAgent framework. Neither the LLM nor the recommendation tools will be updated or modified within InteRecAgent.

This repository mainly implements the right-hand side of the figure, i.e., the communication between the LLM and the recommendation tools. For more details, please refer to our paper Recommender AI Agent: Integrating Large Language Models for Interactive Recommendations.

InteRecAgent consists of the following necessary components:

- LLM: A large language model, which serves as the conversational agent.

- Item profile table: A table containing item information, whose columns consist of id, title, tag, description, price, release_date, visited_num, etc.

- Query module: A SQL module to query item information in the item profile table.

  - Input: SQL command
  - Output: information queried with SQL

- Retrieval module: This module retrieves item candidates from the full item corpus according to the user's intention (requirements). Note that the module does not deal with the user's personal profile, such as user history or user age. Instead, it focuses on what the user wants, such as "give me some sports games" or "I want some popular games". The module should consist of at least two kinds of retrieval tools:

  - SQL tool: This tool handles complex search conditions related to item information, for example, "I want some popular sports games". The tool then uses a SQL command to search the item profile table.

    - Input: SQL command
    - Output: execution log

  - Item similarity tool: This tool retrieves items according to item similarity. Sometimes the user's intention is not clear enough to be organized as a SQL command, for example, "I want some games similar to Call of Duty", where the requirement is expressed implicitly through item similarity instead of explicit item features.

    - Input: item name list as a string
    - Output: execution log

- Ranking module: Refines the ranking of item candidates according to a schema (popularity, similarity, preference). User preference comprises `prefer` and `unwanted`. The module could be a traditional recommender model, which takes user and item features as input and outputs a relevance score.

  - Input: ranking schema, user's profile (prefer, unwanted)
  - Output: execution log
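The query, retrieval, and ranking modules described above can be illustrated with a small, self-contained sketch. It is not the project's implementation: the table rows, the function names, the use of `sqlite3`, and the tag-overlap (Jaccard) similarity are all assumptions chosen for illustration. The tools here also return the candidate ids alongside the execution log so the flow is easy to follow, and only the `popularity` ranking schema is sketched.

```python
import sqlite3
from typing import List, Sequence, Tuple

# Hypothetical item profile rows; column names follow the README, values are made up.
ITEMS = [
    (1, "Call of Duty", "shooter,action", 59.99, 9500),
    (2, "FIFA 23", "sports,football", 49.99, 8700),
    (3, "NBA 2K23", "sports,basketball", 39.99, 6100),
    (4, "Battlefield 2042", "shooter,action", 29.99, 4200),
    (5, "Stardew Valley", "farming,casual", 14.99, 7800),
]


def build_item_table() -> sqlite3.Connection:
    """Create an in-memory item profile table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE item_profile ("
        "id INTEGER PRIMARY KEY, title TEXT, tag TEXT, price REAL, visited_num INTEGER)"
    )
    conn.executemany("INSERT INTO item_profile VALUES (?, ?, ?, ?, ?)", ITEMS)
    return conn


def query_tool(conn: sqlite3.Connection, sql: str) -> list:
    """Query module: SQL command in, queried information out."""
    return conn.execute(sql).fetchall()


def sql_retrieval_tool(conn: sqlite3.Connection, sql: str) -> Tuple[List[int], str]:
    """SQL retrieval: the first selected column is taken as the candidate id."""
    ids = [row[0] for row in conn.execute(sql)]
    return ids, f"SQL retrieval kept {len(ids)} candidate(s)."


def similarity_retrieval_tool(
    conn: sqlite3.Connection, item_names: str, top_k: int = 2
) -> Tuple[List[int], str]:
    """Similarity retrieval with tag overlap (Jaccard) as a stand-in similarity."""
    seeds = {name.strip() for name in item_names.split(",")}
    rows = conn.execute("SELECT id, title, tag FROM item_profile").fetchall()
    seed_tags = set()
    for _, title, tag in rows:
        if title in seeds:
            seed_tags |= set(tag.split(","))
    scored = []
    for item_id, title, tag in rows:
        if title in seeds:
            continue  # do not return the seed items themselves
        tags = set(tag.split(","))
        score = len(tags & seed_tags) / len(tags | seed_tags)
        if score > 0:
            scored.append((score, item_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    ids = [item_id for _, item_id in scored[:top_k]]
    return ids, f"Similarity retrieval kept {len(ids)} candidate(s)."


def ranking_tool(
    conn: sqlite3.Connection,
    candidates: Sequence[int],
    schema: str,
    unwanted: Sequence[str] = (),
) -> Tuple[List[int], str]:
    """Ranking: order candidates by popularity and drop unwanted titles."""
    if schema != "popularity":
        raise ValueError("this sketch only implements the 'popularity' schema")
    if not candidates:
        return [], "Nothing to rank."
    marks = ",".join("?" * len(candidates))
    rows = conn.execute(
        f"SELECT id, title FROM item_profile WHERE id IN ({marks}) "
        "ORDER BY visited_num DESC",
        list(candidates),
    ).fetchall()
    ranked = [item_id for item_id, title in rows if title not in unwanted]
    return ranked, f"Ranked {len(ranked)} candidate(s) by popularity."


if __name__ == "__main__":
    conn = build_item_table()
    ids, log = sql_retrieval_tool(
        conn, "SELECT id FROM item_profile WHERE tag LIKE '%sports%' ORDER BY id"
    )
    print(log)
    print(ranking_tool(conn, ids, "popularity"))
```

In this sketch the LLM's role would be limited to producing the SQL string, the item name list, and the ranking schema; the tools themselves stay fixed, matching the statement that neither the LLM nor the tools are updated inside the framework.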

- Environments

  First, install the packages listed in `requirements.txt`:

  ```bash
  cd LLM4CRS
  conda create -n llm4crs python==3.9
  conda activate llm4crs
  pip install -r requirements.txt
  ```

- Copy resource

  Copy all files in "InteRecAgent/{domain}" in OneDrive / RecDrive to your local "./LLM4CRS/resources/{domain}". If you cannot access those links, please contact jialia@microsoft.com or xuhuangcs@mail.ustc.edu.cn.

- Run

  - Personal OpenAI API key:

        cd LLM4CRS
        DOMAIN="game" OPENAI_API_KEY="xxxx" python app.py

  - Azure OpenAI API key:

        cd LLM4CRS
        DOMAIN="game" OPENAI_API_KEY="xxx" OPENAI_API_BASE="xxx" OPENAI_API_VERSION="xxx" OPENAI_API_TYPE="xxx" python app.py

  Note

  `DOMAIN` represents the item domain; `game`, `movie`, and `beauty_product` are currently supported.

  We support two types of OpenAI API: Chat and Completions. Here are the commands for running RecBot with GPT-3.5-turbo and text-davinci-003.

      cd LLM4CRS
      # Note that the engine should be your deployment id
      # completion type: text-davinci-003
      OPENAI_API_KEY="xxx" [OPENAI_API_BASE="xxx" OPENAI_API_VERSION="xxx" OPENAI_API_TYPE="xxx"] python app.py --engine text-davinci-003 --bot_type completion
      # chat type: gpt-3.5-turbo, gpt-4 (Recommended)
      OPENAI_API_KEY="xxx" [OPENAI_API_BASE="xxx" OPENAI_API_VERSION="xxx" OPENAI_API_TYPE="xxx"] python app.py --engine gpt-3.5-turbo/gpt-4 --bot_type chat

  We also provide a shell script `run.sh`, where commonly used arguments are given. You can set the API-related information directly in `run.sh`, or create a new shell script `oai.sh` that will be loaded by `run.sh`. The GPT-4 API is highly recommended for InteRecAgent since it has remarkable instruction-following capability.

  Here is an example of the `oai.sh` script:

      API_KEY="xxxxxx" # your api key
      API_BASE="https://xxxx.azure.com/" # [https://xxxx.azure.com, https://api.openai.com/v1]
      API_VERSION="2023-03-15-preview"
      API_TYPE="azure" # ['open_ai', 'azure']
      engine="gpt4" # model name for OpenAI or deployment name for Azure OpenAI. GPT-4 is recommended.
      bot_type="chat" # model type, ["chat", "completion"]. For gpt-3.5-turbo and gpt-4, it should be "chat". For text-davinci-003, it should be "completion"
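The difference between the personal OpenAI setup and the Azure OpenAI setup comes down to which environment variables are required. The helper below is a hypothetical sketch of such a check, not code from `app.py`: the function name, the returned dictionary keys, and the default values (`game` for the domain, `open_ai` for the API type) are assumptions. Only the variable names and the supported values are taken from the commands above.

```python
import os
from typing import Dict, Mapping

SUPPORTED_DOMAINS = {"game", "movie", "beauty_product"}
SUPPORTED_BOT_TYPES = {"chat", "completion"}


def resolve_api_config(env: Mapping[str, str], bot_type: str = "chat") -> Dict[str, str]:
    """Validate the settings described in the README (hypothetical helper)."""
    domain = env.get("DOMAIN", "game")  # default is an assumption
    if domain not in SUPPORTED_DOMAINS:
        raise ValueError(f"unsupported DOMAIN: {domain!r}")
    if bot_type not in SUPPORTED_BOT_TYPES:
        raise ValueError(f"unsupported bot_type: {bot_type!r}")

    api_key = env.get("OPENAI_API_KEY")
    if not api_key:
        raise ValueError("OPENAI_API_KEY is required")

    api_type = env.get("OPENAI_API_TYPE", "open_ai")  # default is an assumption
    config = {
        "domain": domain,
        "bot_type": bot_type,
        "api_key": api_key,
        "api_type": api_type,
    }

    if api_type == "azure":
        # Azure OpenAI additionally needs an endpoint and an API version.
        missing = [
            name
            for name in ("OPENAI_API_BASE", "OPENAI_API_VERSION")
            if not env.get(name)
        ]
        if missing:
            raise ValueError("missing for Azure OpenAI: " + ", ".join(missing))
        config["api_base"] = env["OPENAI_API_BASE"]
        config["api_version"] = env["OPENAI_API_VERSION"]
    return config


if __name__ == "__main__":
    try:
        print(resolve_api_config(os.environ))
    except ValueError as err:
        print(f"configuration problem: {err}")
```

Passing the environment as a mapping keeps the check easy to exercise without touching the real process environment.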


Features

There are several optional features to enhance the agent.

- History Shortening

  `enable_shorten`: if true, the LLM shortens the chat history; otherwise the full chat history is used.

- Demonstrations Selector

  - `demo_mode`: mode for choosing demonstrations. Optional values: [`zero`, `fixed`, `dynamic`]. If `zero`, no demonstrations are used (zero-shot). If `fixed`, the first `demo_nums` examples in `demo_dir_or_file` are used. If `dynamic`, the `demo_nums` most related examples are selected for in-context learning.
  - `demo_dir_or_file`: the directory or file path of demonstrations. If a directory path is given, all `.jsonl` files in the folder are loaded. If `demo_mode` is `zero`, the argument is ignored. Default None.
  - `demo_nums`: number of demonstrations used in the prompt for in-context learning. The argument is ignored when `demo_dir_or_file` is not given. Default 3.

- Reflection

  - `enable_reflection`: if true, enable reflection for better plan making.
  - `reflection_limits`: maximum number of reflections.

  Note that reflection happens after the plan is finished. That means the conversational agent generates an answer first and then reflects on it.

- Plan First

  `plan_first`: if true, the agent makes a tool-using plan first. The LLM then has only a single tool executor to call, which takes the plan as input. Default true.

  Additionally, we have implemented a version that does not use the black-box API calling in langchain. It is controlled by the following argument.

  `langchain`: if true, use langchain in the plan-first strategy. Otherwise, the API calls are made directly with openai. Default false.
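The plan-first idea, where the LLM emits a whole plan and a single executor runs it, can be sketched as follows. The plan format (a list of `(tool_name, tool_input)` pairs), the shared `state` dictionary, and the two toy tools are assumptions made for this example; the actual plan format used by InteRecAgent may differ.

```python
from typing import Callable, Dict, List, Tuple

Plan = List[Tuple[str, str]]          # hypothetical plan format
Tool = Callable[[dict, str], str]     # a tool mutates shared state, returns a log

# Made-up catalog: title -> (tag, visited_num)
CATALOG = {
    "FIFA 23": ("sports", 8700),
    "NBA 2K23": ("sports", 6100),
    "Stardew Valley": ("casual", 7800),
}


def retrieve(state: dict, tag: str) -> str:
    """Toy retrieval tool: keep the titles that carry the given tag."""
    state["candidates"] = [t for t, (item_tag, _) in CATALOG.items() if item_tag == tag]
    return f"{len(state['candidates'])} candidates"


def rank(state: dict, schema: str) -> str:
    """Toy ranking tool: only the popularity schema is sketched."""
    if schema != "popularity":
        return "unsupported schema"
    state.setdefault("candidates", []).sort(
        key=lambda title: CATALOG[title][1], reverse=True
    )
    return "ranked by popularity"


def execute_plan(plan: Plan, tools: Dict[str, Tool]) -> Tuple[dict, List[str]]:
    """Single executor: run every plan step in order and collect the logs."""
    state: dict = {}
    logs: List[str] = []
    for name, tool_input in plan:
        if name not in tools:
            logs.append(f"{name}: unknown tool, plan aborted")
            break
        logs.append(f"{name}: {tools[name](state, tool_input)}")
    return state, logs


if __name__ == "__main__":
    plan = [("retrieve", "sports"), ("rank", "popularity")]
    state, logs = execute_plan(plan, {"retrieve": retrieve, "rank": rank})
    print(state["candidates"])  # ['FIFA 23', 'NBA 2K23']
    print(logs)
```

With this shape the LLM makes one call per turn (the executor) instead of one call per tool, which is the main point of the plan-first option.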

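The three `demo_mode` values described under Demonstrations Selector can be sketched as a small selection function. This is an illustration under stated assumptions: each demonstration is assumed to be a JSON object with a `request` field, and "most related" is approximated by word-overlap (Jaccard) similarity as a stand-in for whatever relatedness measure the project actually uses. The function names are hypothetical.

```python
import json
import re
from pathlib import Path
from typing import List


def load_demos(demo_dir_or_file: str) -> List[dict]:
    """Load demonstrations from one .jsonl file or every .jsonl file in a folder."""
    path = Path(demo_dir_or_file)
    files = sorted(path.glob("*.jsonl")) if path.is_dir() else [path]
    demos: List[dict] = []
    for file in files:
        with file.open(encoding="utf-8") as handle:
            demos.extend(json.loads(line) for line in handle if line.strip())
    return demos


def _tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def _jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_demos(
    demos: List[dict], query: str, demo_mode: str = "dynamic", demo_nums: int = 3
) -> List[dict]:
    """Pick demonstrations for the prompt according to demo_mode."""
    if demo_mode == "zero" or not demos:
        return []  # zero-shot: no demonstrations in the prompt
    if demo_mode == "fixed":
        return demos[:demo_nums]  # always the first demo_nums examples
    if demo_mode == "dynamic":
        query_tokens = _tokens(query)
        # "request" is an assumed field name for the demonstration's user turn.
        ranked = sorted(
            demos,
            key=lambda demo: _jaccard(query_tokens, _tokens(demo["request"])),
            reverse=True,
        )
        return ranked[:demo_nums]
    raise ValueError(f"unknown demo_mode: {demo_mode!r}")


if __name__ == "__main__":
    demos = [
        {"request": "I want some popular sports games"},
        {"request": "recommend games similar to Call of Duty"},
        {"request": "what is the price of FIFA 23"},
    ]
    print(select_demos(demos, "games similar to Battlefield", "dynamic", demo_nums=1))
```

A production selector would more likely use embedding similarity; word overlap is used here only to keep the example dependency-free.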

InteRecAgent uses the MIT license. All data and code in this project can only be used for academic purposes.

Please cite the following paper as the reference if you use our code or data:

    @misc{huang2023recommender,
      title={Recommender AI Agent: Integrating Large Language Models for Interactive Recommendations},
      author={Xu Huang and Jianxun Lian and Yuxuan Lei and Jing Yao and Defu Lian and Xing Xie},
      year={2023},
      eprint={2308.16505},
      archivePrefix={arXiv},
      primaryClass={cs.IR}
    }

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

Thanks to the open source codes of the following projects:

UniRec, VisualChatGPT, JARVIS, LangChain, guidance

Please refer to RecAI: Responsible AI FAQ for documentation on the purposes, capabilities, and limitations of the RecAI systems.