Paper: (arXiv:2405.14174)

May 23rd, 2024: We release the code, logs, and checkpoints for MSVMamba.

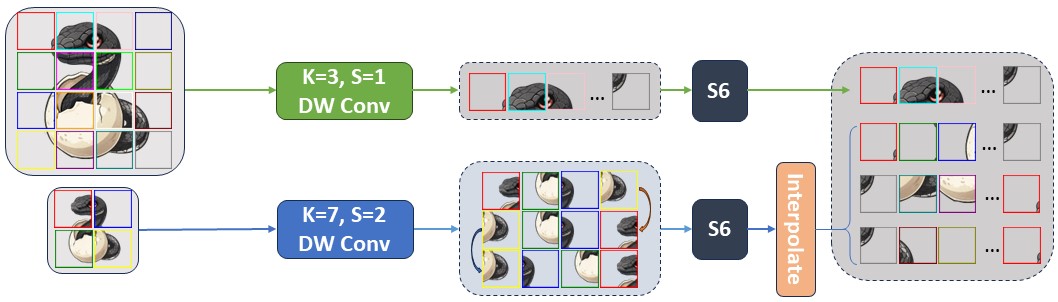

MSVMamba is a visual state space model that introduces a hierarchy-in-hierarchy design to the VMamba model. This repository contains the code for training and evaluating MSVMamba models on ImageNet-1K for image classification, COCO for object detection, and ADE20K for semantic segmentation. For more information, please refer to our paper.

| name | pretrain | resolution | acc@1 | #params | FLOPs | logs&ckpts |
|---|---|---|---|---|---|---|
| MSVMamba-Nano | ImageNet-1K | 224x224 | 77.3 | 7M | 0.9G | log&ckpt |
| MSVMamba-Micro | ImageNet-1K | 224x224 | 79.8 | 12M | 1.5G | log&ckpt |
| MSVMamba-Tiny | ImageNet-1K | 224x224 | 82.8 | 33M | 4.6G | log&ckpt |

| Backbone | #params | FLOPs | Detector | box mAP | mask mAP | logs&ckpts |
|---|---|---|---|---|---|---|
| MSVMamba-Micro | 32M | 201G | MaskRCNN@1x | 43.8 | 39.9 | log&ckpt |
| MSVMamba-Tiny | 53M | 252G | MaskRCNN@1x | 46.9 | 42.2 | log&ckpt |
| MSVMamba-Micro | 32M | 201G | MaskRCNN@3x | 46.3 | 41.8 | log&ckpt |
| MSVMamba-Tiny | 53M | 252G | MaskRCNN@3x | 48.3 | 43.2 | log&ckpt |

| Backbone | Input | #params | FLOPs | Segmentor | mIoU(SS) | mIoU(MS) | logs&ckpts |
|---|---|---|---|---|---|---|---|
| MSVMamba-Micro | 512x512 | 42M | 875G | UperNet@160k | 45.1 | 45.4 | log&ckpt |
| MSVMamba-Tiny | 512x512 | 65M | 942G | UperNet@160k | 47.8 | - | log&ckpt |
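For readers unfamiliar with the segmentation metric, here is a minimal sketch of how mean IoU is conventionally computed from a confusion matrix: per-class IoU is TP / (TP + FP + FN), averaged over classes. The function name `mean_iou` and the toy pixel counts are hypothetical and for illustration only; the evaluation toolkit used for the table may treat ignored labels and absent classes differently.

```python
from typing import List


def mean_iou(confusion: List[List[int]]) -> float:
    """Mean IoU from a square confusion matrix.

    Rows index the ground-truth class, columns the predicted class.
    """
    ious = []
    for c in range(len(confusion)):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                  # class-c pixels predicted as another class
        fp = sum(row[c] for row in confusion) - tp   # other pixels predicted as class c
        union = tp + fp + fn
        if union > 0:                                # skip classes absent from both GT and prediction
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy 3-class example with hypothetical pixel counts (not from the paper).
toy = [
    [50, 5, 5],
    [10, 30, 0],
    [0, 0, 20],
]
print(f"mIoU = {100 * mean_iou(toy):.1f}")
```

The table reports the metric on the same 0 to 100 scale that the `print` call uses.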

The steps for setting up the environment, training, and evaluating MSVMamba models are the same as for VMamba.

Step 1: Clone the MSVMamba repository:

git clone https://github.com/YuHengsss/MSVMamba.git

cd MSVMamba

Step 2: Environment Setup:

Create and activate a new conda environment

conda create -n msvmamba

conda activate msvmamba

Install Dependencies

pip install -r requirements.txt

cd kernels/selective_scan && pip install .

Dependencies for Detection and Segmentation (optional)

pip install mmengine==0.10.1 mmcv==2.1.0 opencv-python-headless ftfy regex

pip install mmdet==3.3.0 mmsegmentation==1.2.2 mmpretrain==1.2.0

Classification

To train MSVMamba models for classification on ImageNet, use the following commands for different configurations:

python -m torch.distributed.launch --nnodes=1 --node_rank=0 --nproc_per_node=8 --master_addr="127.0.0.1" --master_port=29501 main.py --cfg </path/to/config> --batch-size 128 --data-path </path/of/dataset> --output /tmp

If you only want to test the performance (together with params and flops):

python -m torch.distributed.launch --nnodes=1 --node_rank=0 --nproc_per_node=1 --master_addr="127.0.0.1" --master_port=29501 main.py --cfg </path/to/config> --batch-size 128 --data-path </path/of/dataset> --output /tmp --resume </path/of/checkpoint> --eval

Detection and Segmentation

To evaluate with mmdetection or mmsegmentation:

bash ./tools/dist_test.sh </path/to/config> </path/to/checkpoint> 1

Use --tta to obtain the multi-scale mIoU, mIoU(MS), in segmentation.
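To make the placement of the flag concrete, the sketch below assembles the evaluation command with an optional `--tta` at the end. It assumes that `dist_test.sh` forwards any arguments after the GPU count to the underlying test script, as the upstream mmsegmentation script does; the helper name `build_test_command` and the example paths are hypothetical.

```python
import shlex
from typing import List


def build_test_command(config: str, checkpoint: str,
                       num_gpus: int = 1, tta: bool = False) -> List[str]:
    """Assemble the dist_test.sh invocation as an argument list."""
    cmd = ["bash", "./tools/dist_test.sh", config, checkpoint, str(num_gpus)]
    if tta:
        # Assumption: extra arguments are passed through to the test script.
        cmd.append("--tta")
    return cmd


# Placeholder paths; substitute your own config and checkpoint.
cmd = build_test_command("path/to/config.py", "path/to/checkpoint.pth", tta=True)
print(shlex.join(cmd))
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` from the directory that contains `tools/`.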

To train with mmdetection or mmsegmentation:

bash ./tools/dist_train.sh </path/to/config> 8

If MSVMamba is helpful for your research, please cite the following paper:

@article{shi2024multiscale,
  title={Multi-Scale VMamba: Hierarchy in Hierarchy Visual State Space Model},
  author={Yuheng Shi and Minjing Dong and Chang Xu},
  journal={arXiv preprint arXiv:2405.14174},
  year={2024}
}

This project is based on VMamba (paper, code), Mamba (paper, code), Swin-Transformer (paper, code), ConvNeXt (paper, code), and OpenMMLab. We thank the authors for their excellent work.