In this final lab, we shall apply the regression analysis and diagnostics techniques covered in this section to the familiar "Boston Housing" dataset. We performed a detailed EDA for this dataset in an earlier section and hence have a good understanding of how it is composed. In this lab we shall try to identify how well some of the features in this dataset predict house price.

You will be able to:

- Build many linear models with the Boston Housing dataset using OLS

- For each model, analyze OLS diagnostics for model validity

- Visually explain the results and interpret the diagnostics from Statsmodels

- Comment on the goodness of fit for a simple regression model

Let's get started.

# Your code here

The data features and the target are present as columns in the Boston data. The dataset provides a set of independent variables in data and the housing value, MEDV, in target. The feature names are listed in feature_names. The description is available on Kaggle.
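As a minimal sketch of the loading step, assuming the data has been downloaded as a CSV file with the lowercase column names shown in this lab's preview table: the helper name `load_housing` and the in-memory sample are hypothetical and only stand in for the real file.

```python
import io

import pandas as pd

# Column names as they appear in the lab's preview table.
EXPECTED_COLUMNS = [
    "crim", "zn", "indus", "chas", "nox", "rm", "age",
    "dis", "rad", "tax", "ptratio", "b", "lstat", "medv",
]


def load_housing(source):
    """Read the housing CSV from a path or file-like object and validate its columns.

    Hypothetical helper for illustration; not part of any library.
    """
    df = pd.read_csv(source)
    # Normalise header case so 'MEDV' and 'medv' are treated alike.
    df.columns = [str(c).strip().lower() for c in df.columns]
    missing = [c for c in EXPECTED_COLUMNS if c not in df.columns]
    if missing:
        raise ValueError(f"Missing expected columns: {missing}")
    return df


# Stand-in for the real file: the first two rows from the preview table.
# With the actual download you would pass its path instead.
sample_csv = io.StringIO(
    "crim,zn,indus,chas,nox,rm,age,dis,rad,tax,ptratio,b,lstat,medv\n"
    "0.00632,18.0,2.31,0,0.538,6.575,65.2,4.0900,1,296,15.3,396.90,4.98,24.0\n"
    "0.02731,0.0,7.07,0,0.469,6.421,78.9,4.9671,2,242,17.8,396.90,9.14,21.6\n"
)
boston = load_housing(sample_csv)
print(boston.head())
```

Validating the columns up front makes a wrong or partially downloaded file fail early rather than inside the regression loop.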

# Your code here

| | crim | zn | indus | chas | nox | rm | age | dis | rad | tax | ptratio | b | lstat | medv |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.00632 | 18.0 | 2.31 | 0 | 0.538 | 6.575 | 65.2 | 4.0900 | 1 | 296 | 15.3 | 396.90 | 4.98 | 24.0 |
| 1 | 0.02731 | 0.0 | 7.07 | 0 | 0.469 | 6.421 | 78.9 | 4.9671 | 2 | 242 | 17.8 | 396.90 | 9.14 | 21.6 |
| 2 | 0.02729 | 0.0 | 7.07 | 0 | 0.469 | 7.185 | 61.1 | 4.9671 | 2 | 242 | 17.8 | 392.83 | 4.03 | 34.7 |
| 3 | 0.03237 | 0.0 | 2.18 | 0 | 0.458 | 6.998 | 45.8 | 6.0622 | 3 | 222 | 18.7 | 394.63 | 2.94 | 33.4 |
| 4 | 0.06905 | 0.0 | 2.18 | 0 | 0.458 | 7.147 | 54.2 | 6.0622 | 3 | 222 | 18.7 | 396.90 | 5.33 | 36.2 |

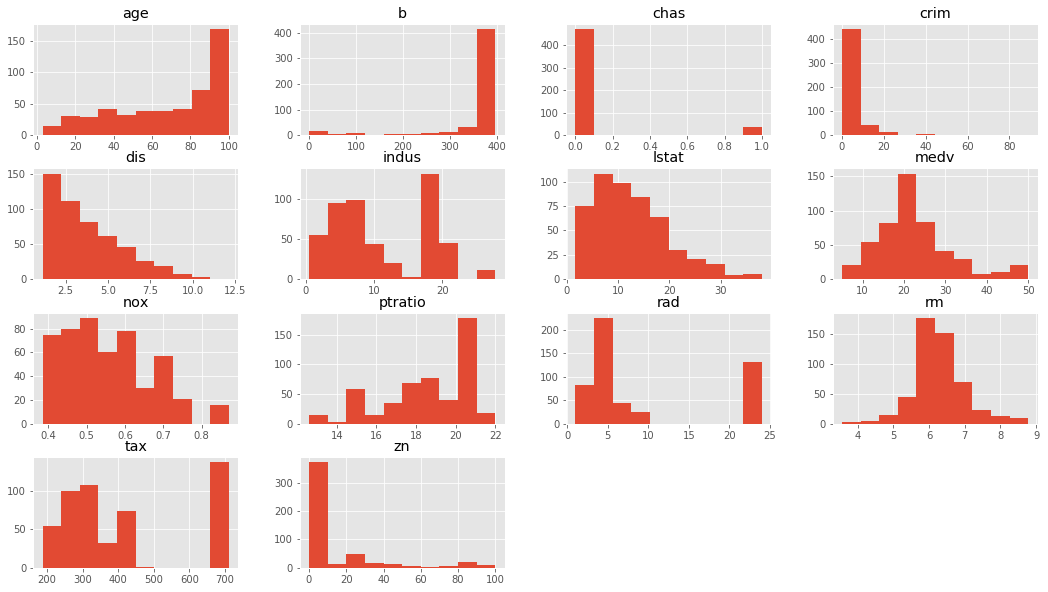

# Record your observations here

# Your code here

# Your observations here

Based on this, we shall choose a selection of features which appear to be more 'normal' than others.
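One rough way to back up a visual judgement of "more normal" is to compare sample skewness across columns. The sketch below uses synthetic columns (not the real data) and is only an assumption about how one might rank features; the lab itself relies on the histograms.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Synthetic stand-ins: one roughly symmetric column, one heavily right-skewed.
df = pd.DataFrame({
    "symmetric": rng.normal(loc=6.0, scale=0.7, size=500),
    "right_skewed": rng.exponential(scale=3.0, size=500),
})

# Sample skewness per column; values near 0 suggest a more symmetric shape.
skewness = df.skew()

# Rank columns from most to least symmetric.
ranking = skewness.abs().sort_values()
print(ranking)
```

Skewness near zero does not prove normality, so this is best read alongside the histograms (for example those drawn with `DataFrame.hist()`), not instead of them.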

# Your code here

| | crim | dis | rm | zn | age | medv |
|---|---|---|---|---|---|---|
| 0 | 0.00632 | 4.0900 | 6.575 | 18.0 | 65.2 | 24.0 |
| 1 | 0.02731 | 4.9671 | 6.421 | 0.0 | 78.9 | 21.6 |
| 2 | 0.02729 | 4.9671 | 7.185 | 0.0 | 61.1 | 34.7 |
| 3 | 0.03237 | 6.0622 | 6.998 | 0.0 | 45.8 | 33.4 |
| 4 | 0.06905 | 6.0622 | 7.147 | 0.0 | 54.2 | 36.2 |

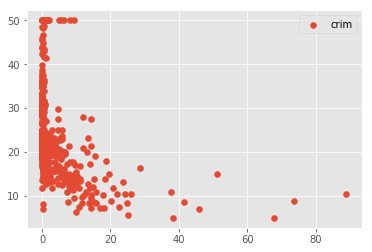

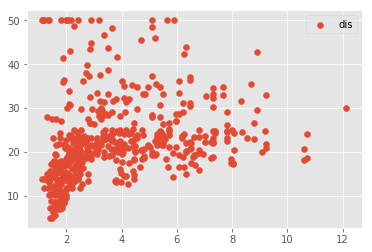

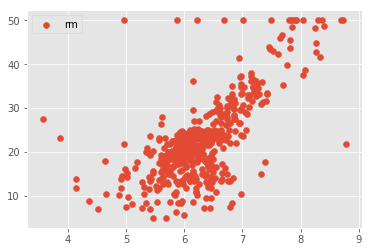

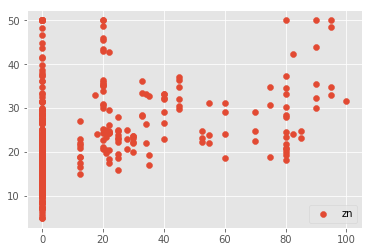

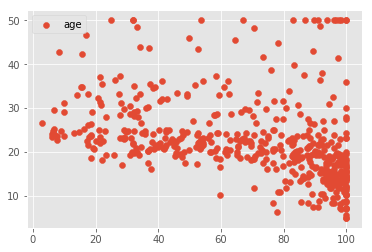

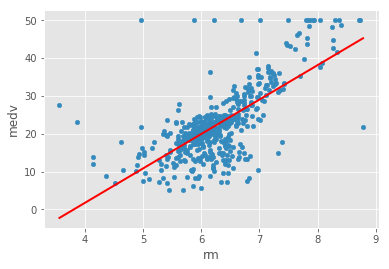

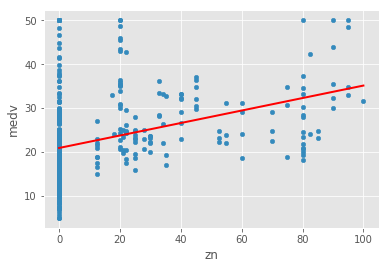

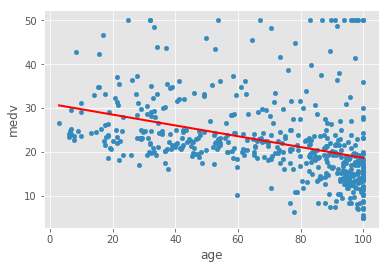

Check the linearity assumption for all chosen features against the target variable using scatter plots, and comment on the results.
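A possible shape for this check is one scatter panel per feature with the target on a shared y-axis. The data below is synthetic and the coefficients used to generate it are arbitrary assumptions; with the real data you would pass the selected columns instead.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line inside a notebook
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)

# Synthetic stand-in for the selected columns (values are NOT the real data).
n = 200
data = pd.DataFrame({"rm": rng.normal(6.3, 0.7, n), "age": rng.uniform(3, 100, n)})
data["medv"] = 9.0 * data["rm"] - 0.05 * data["age"] - 30 + rng.normal(0, 4, n)

features = ["rm", "age"]
target = "medv"

# squeeze=False keeps `axes` two-dimensional even when there is one feature.
fig, axes = plt.subplots(
    1, len(features), figsize=(5 * len(features), 4), sharey=True, squeeze=False
)
for ax, col in zip(axes[0], features):
    ax.scatter(data[col], data[target], alpha=0.5)
    ax.set_xlabel(col)
    ax.set_title(f"{target} vs {col}")
axes[0][0].set_ylabel(target)
fig.tight_layout()
```

Sharing the y-axis makes it easier to compare how tightly each feature tracks the target.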

# Your code here

# Your observations here

Clearly, our data needs a lot of pre-processing to improve the results. The key to such Kaggle competitions is to process the data so that we can identify the relationships and make predictions in the best possible way. For now, we shall leave the dataset untouched and move on with regression. So far our assumptions, although not strongly met, hold well enough for us to proceed.

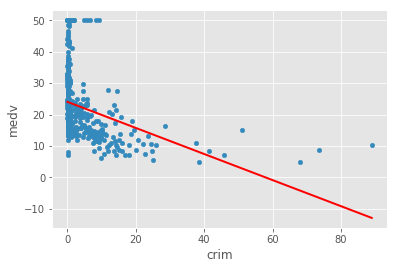

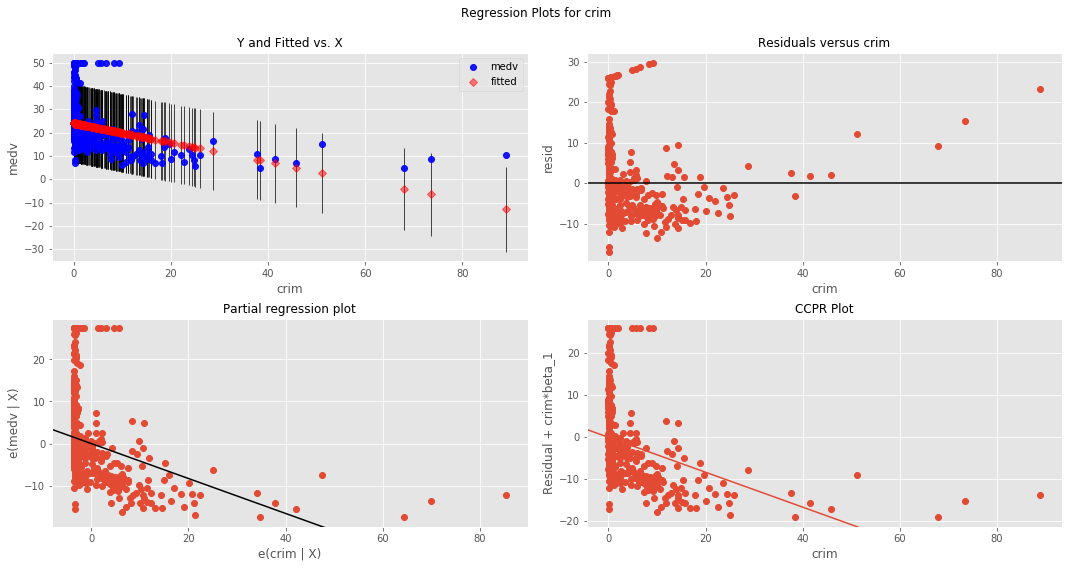

Now for the main task. Let's perform a number of simple regression experiments between the chosen independent variables and the dependent variable (price). We shall do this in a loop, and in every iteration we shall pick one of the independent variables and perform the following steps:

- Run a simple OLS regression between independent and dependent variables

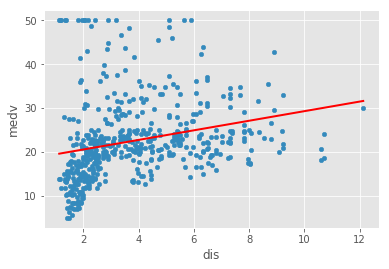

- Plot a regression line on the scatter plots

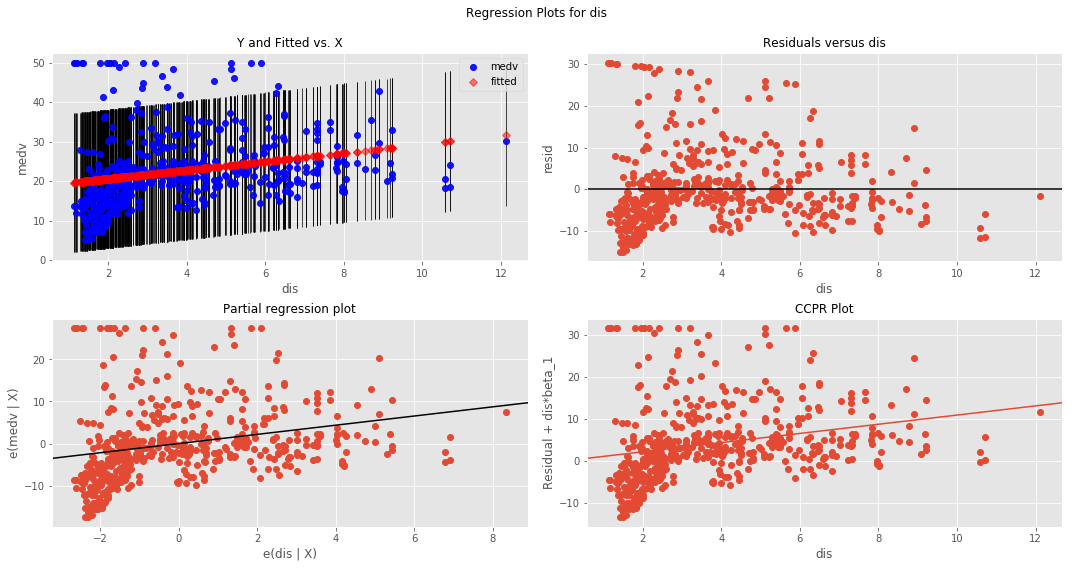

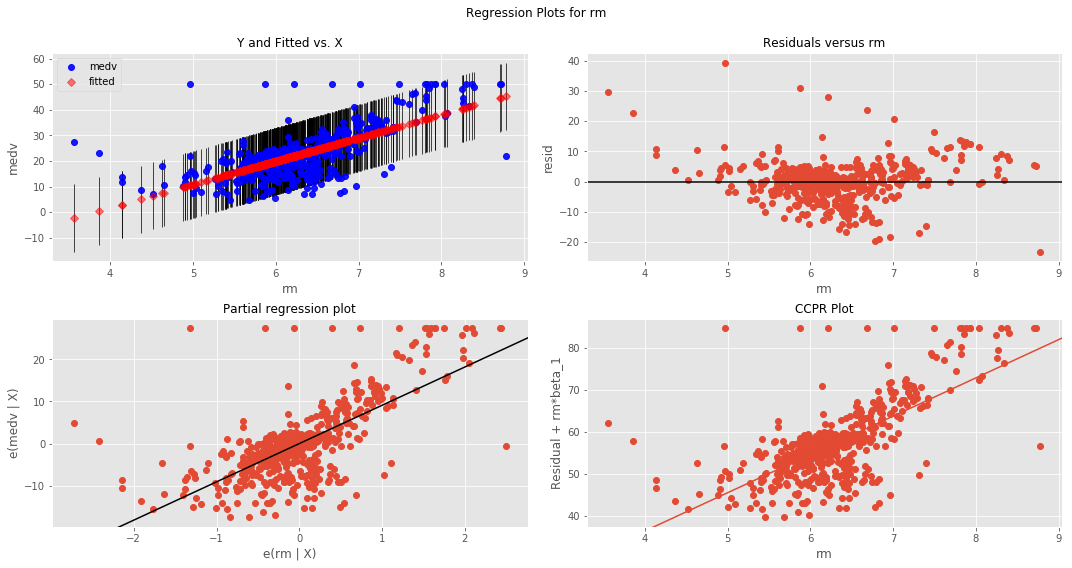

- Plot the residuals using

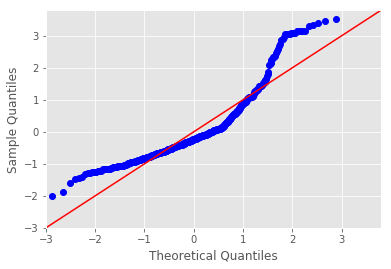

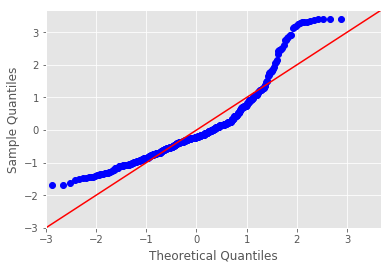

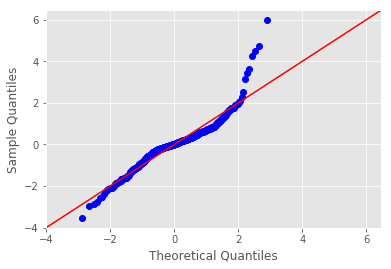

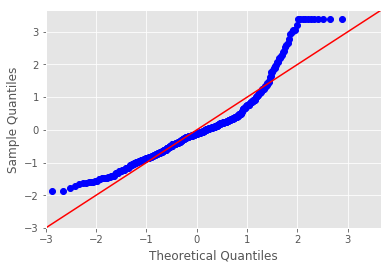

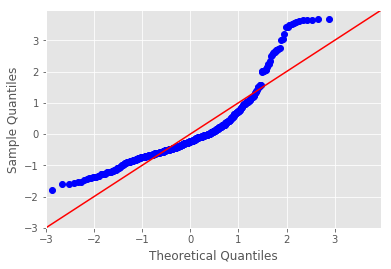

sm.graphics.plot_regress_exog(). - Plot a Q-Q plot for regression residuals normality test

- Store following values in array for each iteration:

- Independent Variable

- r_squared'

- intercept'

- 'slope'

- 'p-value'

- 'normality (JB)'

- Comment on each output

# Your code here

Boston Housing DataSet - Regression Analysis and Diagnostics for formula: medv~crim

-------------------------------------------------------------------------------------

Press Enter to continue...

Boston Housing DataSet - Regression Analysis and Diagnostics for formula: medv~dis

-------------------------------------------------------------------------------------

Press Enter to continue...

Boston Housing DataSet - Regression Analysis and Diagnostics for formula: medv~rm

-------------------------------------------------------------------------------------

Press Enter to continue...

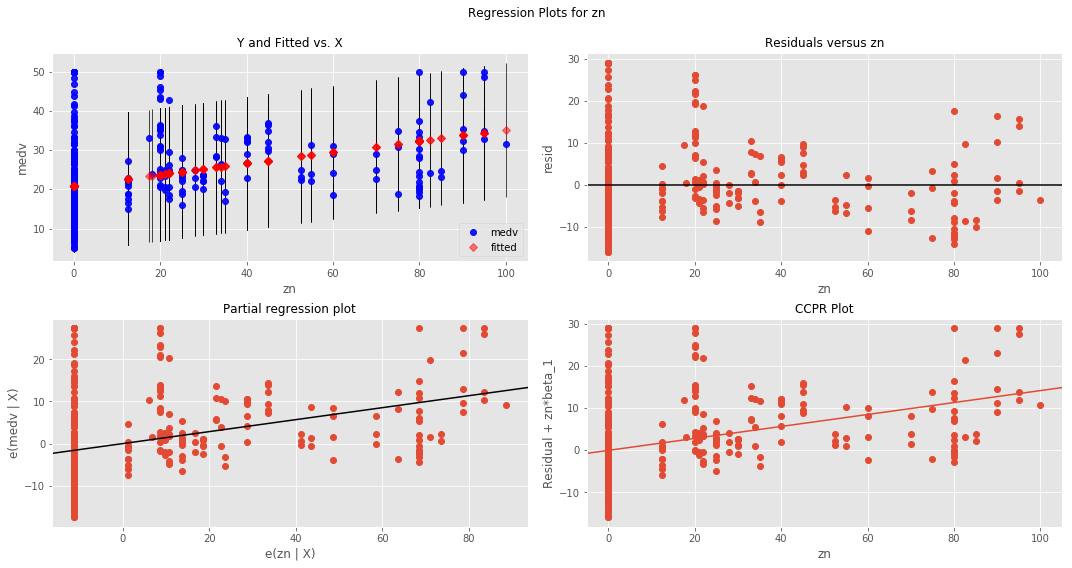

Boston Housing DataSet - Regression Analysis and Diagnostics for formula: medv~zn

-------------------------------------------------------------------------------------

Press Enter to continue...

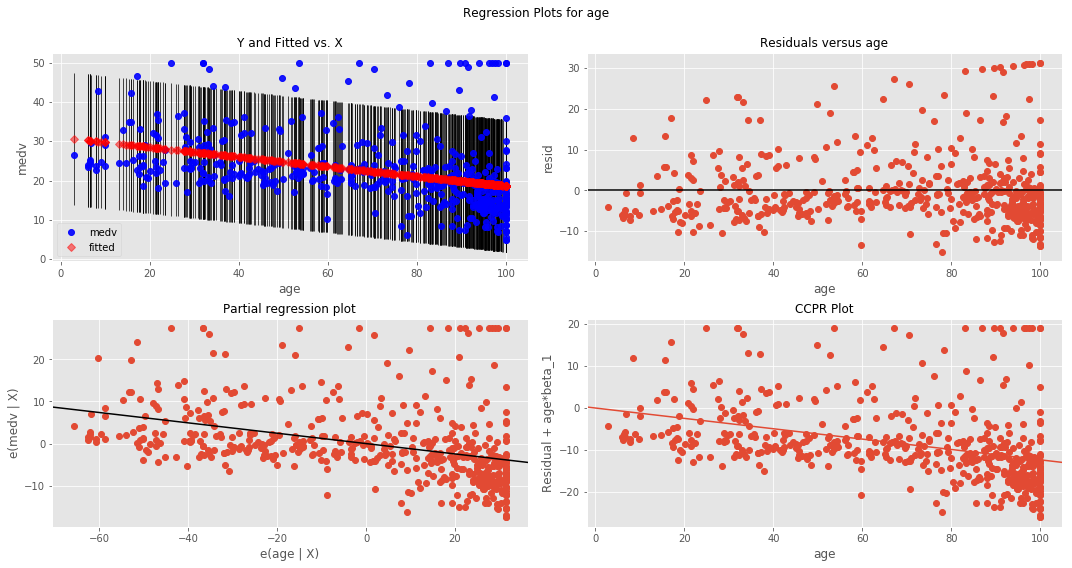

Boston Housing DataSet - Regression Analysis and Diagnostics for formula: medv~age

-------------------------------------------------------------------------------------

Press Enter to continue...

pd.DataFrame(results)

| | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| 0 | ind_var | r_squared | intercept | slope | p-value | normality (JB) |
| 1 | crim | 0.15078 | 24.0331 | -0.41519 | 1.17399e-19 | 295.404 |
| 2 | dis | 0.0624644 | 18.3901 | 1.09161 | 1.20661e-08 | 305.104 |
| 3 | rm | 0.483525 | -34.6706 | 9.10211 | 2.48723e-74 | 612.449 |
| 4 | zn | 0.129921 | 20.9176 | 0.14214 | 5.71358e-17 | 262.387 |
| 5 | age | 0.142095 | 30.9787 | -0.123163 | 1.56998e-18 | 456.983 |

# Your observations here

Clearly the results are not highly reliable. The best goodness of fit, i.e. the highest r-squared (about 0.48), is obtained with rm, so in this analysis rm is our best predictor.
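To make the numbers in the results table more concrete, here is a small worked example that plugs the fitted rm coefficients into the regression equation and compares each Jarque-Bera statistic with the 5% critical value of a chi-square distribution with 2 degrees of freedom (about 5.99). The function name `predict_medv` is a hypothetical helper for illustration.

```python
# Fitted coefficients copied from the results table above (medv ~ rm).
intercept = -34.6706
slope = 9.10211


def predict_medv(rm):
    """Predicted median value (in $1000s) for a given average number of rooms."""
    return intercept + slope * rm


# First row of the dataset: rm = 6.575, observed medv = 24.0
predicted = predict_medv(6.575)
residual = 24.0 - predicted
print(f"predicted={predicted:.2f}, residual={residual:.2f}")

# Jarque-Bera statistics from the table, compared with the 5% critical value
# of a chi-square distribution with 2 degrees of freedom (about 5.99).
jb = {"crim": 295.404, "dis": 305.104, "rm": 612.449, "zn": 262.387, "age": 456.983}
rejects_normality = {name: value > 5.99 for name, value in jb.items()}
print(rejects_normality)
```

Every JB value is far above the critical value, which suggests the residuals are not normally distributed for any of the five models, including the best-fitting one.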

- Pre-Processing

This is where pre-processing of data comes in. Dealing with outliers, normalizing data, scaling values, etc. can help regression analysis get more meaningful results from the given data.

- Advanced Analytical Methods

Simple regression is a very basic analysis technique, and trying to fit a straight line to complex analytical questions may prove to be very inefficient. In the next section we shall look at multiple regression, where we can use multiple features at once to define a relationship with the outcome. We shall also look at some pre-processing and data simplification techniques and revisit the Boston dataset with an improved toolkit.

Apply some of the data wrangling skills that you learned in the previous section to pre-process the set of independent variables we chose above. You can start off with outliers and think of a way to deal with them. See how it affects the goodness of fit.
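One common way to start on outliers is the interquartile-range (IQR) rule. The sketch below is an assumption about how you might approach the exercise, not a prescribed method: the helper names and the multiplier `k=1.5` are illustrative choices, and the toy data is invented. It uses the fact that, for a single predictor, R-squared equals the squared Pearson correlation.

```python
import numpy as np
import pandas as pd


def drop_iqr_outliers(df, cols, k=1.5):
    """Keep rows whose values in every listed column lie within k*IQR of the quartiles."""
    keep = pd.Series(True, index=df.index)
    for col in cols:
        q1, q3 = df[col].quantile([0.25, 0.75])
        iqr = q3 - q1
        keep &= df[col].between(q1 - k * iqr, q3 + k * iqr)
    return df[keep]


def simple_r_squared(x, y):
    """R-squared of a one-predictor linear fit (the squared Pearson correlation)."""
    return float(np.corrcoef(x, y)[0, 1] ** 2)


# Toy data: an exact line y = 2x + 1 plus one planted outlier.
x = np.arange(20, dtype=float)
toy = pd.DataFrame({"x": x, "y": 2 * x + 1})
toy = pd.concat([toy, pd.DataFrame({"x": [10.0], "y": [500.0]})], ignore_index=True)

cleaned = drop_iqr_outliers(toy, ["x", "y"])
r2_before = simple_r_squared(toy["x"], toy["y"])
r2_after = simple_r_squared(cleaned["x"], cleaned["y"])
print(len(toy), len(cleaned), r2_before, r2_after)
```

On real data the improvement will be far less dramatic than in this toy case, and dropping rows also discards information, so it is worth comparing the diagnostics before and after rather than the r-squared alone.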

In this lab, we attempted to apply all the skills learnt so far to a slightly more detailed dataset. We looked at the outcome of our analysis and realized that the data might need some pre-processing before we see a clear improvement in results. We shall pick up from this point in the next section and bring in data pre-processing techniques along with some assumptions that are needed for multiple regression.