DiffRIR is proposed in "LLMGA: Multimodal Large Language Model based Generation Assistant", and its code is based on DiffIR.

Bin Xia, Shiyin Wang, Yingfan Tao, Yitong Wang, and Jiaya Jia

Paper | Project Page | pretrained models

- [2023.12.19] 🔥 We release pretrained models for DiffRIR.

- [2023.11.19] 🔥 We release all training and inference codes of DiffRIR.

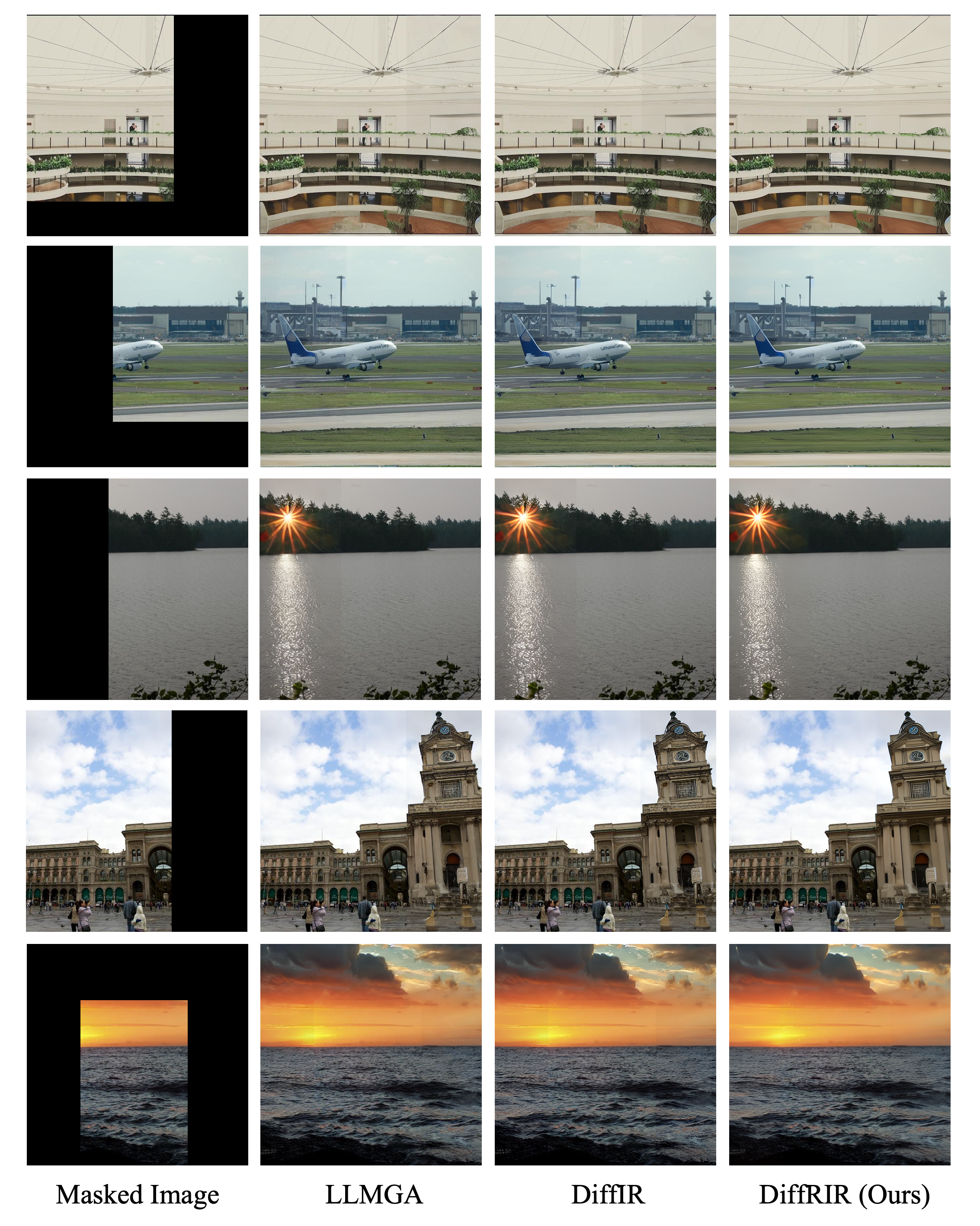

We propose a reference-based restoration network (DiffRIR) to alleviate texture, brightness, and contrast disparities between generated and preserved regions during image editing, such as inpainting and outpainting.

We train on the DF2K (DIV2K + Flickr2K) and OST datasets. Only HR images are required.

You can download them from:

- DIV2K: http://data.vision.ee.ethz.ch/cvl/DIV2K/DIV2K_train_HR.zip

- Flickr2K: https://cv.snu.ac.kr/research/EDSR/Flickr2K.tar

- OST: https://openmmlab.oss-cn-hangzhou.aliyuncs.com/datasets/OST_dataset.zip

Here are the steps for data preparation.

For the DF2K dataset, we use a multi-scale strategy, i.e., we downsample the HR images to obtain several ground-truth images at different scales.
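As a rough illustration of what this downsampling produces, the sketch below only computes the output sizes for one HR image. The scale factors (0.75, 0.5, 1/3) and the extra copy whose shortest edge is 400 px are assumptions borrowed from Real-ESRGAN's version of this script; this README does not state them, so check scripts/generate_multiscale_DF2K.py in this repo.

```python
# Hypothetical sketch: output sizes of the multi-scale ground-truth copies.
# ASSUMPTION: the scale factors (0.75, 0.5, 1/3) and the extra copy whose
# shortest edge is 400 px follow Real-ESRGAN's generate_multiscale_DF2K.py;
# this README does not state them.

def multiscale_sizes(width, height, scales=(0.75, 0.5, 1 / 3), shortest_edge=400):
    """Return the (width, height) of every downsampled copy of one HR image."""
    sizes = [(int(width * s), int(height * s)) for s in scales]
    # One more copy, resized so that its shorter side equals `shortest_edge`
    # while keeping the aspect ratio.
    if width < height:
        sizes.append((shortest_edge, int(shortest_edge * height / width)))
    else:
        sizes.append((int(shortest_edge * width / height), shortest_edge))
    return sizes
```

For a 2040x1356 DIV2K-sized image this gives four smaller ground-truth copies, the last one being about 601x400.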

You can use the scripts/generate_multiscale_DF2K.py script to generate multi-scale images.

Note that this step can be omitted if you just want a quick try.

python scripts/generate_multiscale_DF2K.py --input datasets/DF2K/DF2K_HR --output datasets/DF2K/DF2K_multiscale

We then crop the DF2K images into sub-images for faster IO and processing.

You can skip this step if your IO is fast enough or your disk space is limited.
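The cropping can be pictured as a sliding window of crop_size pixels moved by step pixels. The sketch below computes the crop boxes for one image; it is modeled on the logic of BasicSR/Real-ESRGAN's extract_subimages.py (including an extra border-aligned window when pixels would otherwise be dropped), so treat it as an assumption about this repo's script rather than a copy of it. The naming helper is hypothetical but matches the sub-image names in the meta_info example below (000001_s001.png).

```python
# Hypothetical sketch of a sliding-window crop layout (crop_size=400, step=200).
# ASSUMPTION: modeled on BasicSR/Real-ESRGAN's extract_subimages.py; the script
# in this repo may differ in details.

def crop_starts(length, crop_size=400, step=200, thresh_size=0):
    """Start offsets along one axis; empty if the axis is shorter than a crop."""
    if length < crop_size:
        return []
    starts = list(range(0, length - crop_size + 1, step))
    # Add a window flush with the border so the last pixels are not dropped.
    if length - (starts[-1] + crop_size) > thresh_size:
        starts.append(length - crop_size)
    return starts


def crop_boxes(width, height, crop_size=400, step=200, thresh_size=0):
    """(left, top, right, bottom) boxes, row by row, for one image."""
    return [
        (x, y, x + crop_size, y + crop_size)
        for y in crop_starts(height, crop_size, step, thresh_size)
        for x in crop_starts(width, crop_size, step, thresh_size)
    ]


def sub_image_name(stem, index, ext=".png"):
    """Name of the index-th sub-image (1-based), e.g. 000001_s001.png."""
    return f"{stem}_s{index:03d}{ext}"
```

With crop_size 400 and step 200, a 1000x600 image yields 4 x 2 = 8 overlapping sub-images.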

You can use the scripts/extract_subimages.py script. Here is an example:

python scripts/extract_subimages.py --input datasets/DF2K/DF2K_multiscale --output datasets/DF2K/DF2K_multiscale_sub --crop_size 400 --step 200

You then need to prepare a txt file containing the image paths. The following are some example entries from meta_info_DF2Kmultiscale+OST_sub.txt (since different users may partition the sub-images differently, this file will not match your data, and you need to prepare your own txt file):

DF2K_HR_sub/000001_s001.png

DF2K_HR_sub/000001_s002.png

DF2K_HR_sub/000001_s003.png

...

You can use the scripts/generate_meta_info.py script to generate the txt file.
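A meta_info file is essentially one image path per line, relative to a root folder, as in the entries above. The sketch below shows that idea, including merging several folders with one root each (mirroring the --input/--root pairs in the command that follows). It is a hypothetical stand-in: the real scripts/generate_meta_info.py may also validate images or order entries differently.

```python
import os
import posixpath

# Hypothetical sketch of a meta_info generator; not the repo's script.
IMAGE_EXTS = (".png", ".jpg", ".jpeg")


def meta_info_entries(image_paths, root):
    """Sorted image paths relative to `root`, using '/' as the separator."""
    return sorted(
        posixpath.relpath(p, root)
        for p in image_paths
        if p.lower().endswith(IMAGE_EXTS)
    )


def write_meta_info(folders, roots, meta_info_path):
    """Merge several folders (one root each) into a single meta_info txt."""
    if len(folders) != len(roots):
        raise ValueError("expected exactly one root per input folder")
    lines = []
    for folder, root in zip(folders, roots):
        paths = [posixpath.join(folder, name) for name in os.listdir(folder)]
        lines.extend(meta_info_entries(paths, root))
    with open(meta_info_path, "w") as f:
        f.writelines(line + "\n" for line in lines)
    return lines
```

Non-image files are skipped, and only the top level of each folder is listed.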

You can merge several folders into one meta_info txt file. Here is an example:

python scripts/generate_meta_info.py --input datasets/DF2K/DF2K_HR datasets/DF2K/DF2K_multiscale --root datasets/DF2K datasets/DF2K --meta_info datasets/DF2K/meta_info/meta_info_DF2Kmultiscale.txt

Training then proceeds in stages. First, train DiffRIR_S1:

sh trainS1.sh

# Set 'pretrain_network_g' and 'pretrain_network_S1' in ./options/train_DiffIRS2_x4.yml to the path of the trained DiffRIR_S1 model, then train stage 2:

sh trainS2.sh

# Set 'pretrain_network_g' and 'pretrain_network_S1' in ./options/train_DiffIRS2_GAN_x4.yml to the paths of the trained DiffRIR_S2 and DiffRIR_S1 models, respectively, then run the GAN stage:

sh train_DiffRIRS2_GAN.sh

or

sh train_DiffRIRS2_GANv2.sh

Note: The training scripts above use 8 GPUs by default.
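Both stage-2 steps require pointing 'pretrain_network_g' and 'pretrain_network_S1' in a YAML options file at earlier checkpoints. If you script these runs, a plain-text edit like the hypothetical helper below keeps comments intact and needs no YAML library. It assumes each key appears exactly once on a `key: value` line; the checkpoint paths in the usage line are placeholders, not the repo's actual file names.

```python
import re


def set_option_paths(yml_text, **paths):
    """Return `yml_text` with each `key: value` line set to the given path.

    Hypothetical helper: a plain-text edit that keeps comments and key order.
    It assumes each key appears exactly once, on a line `key: value`.
    """
    for key, value in paths.items():
        pattern = re.compile(rf"^([ \t]*{re.escape(key)}[ \t]*:).*$", re.MULTILINE)
        # A function replacement avoids backslash escapes in Windows paths.
        yml_text, count = pattern.subn(lambda m, v=value: f"{m.group(1)} {v}", yml_text)
        if count != 1:
            raise KeyError(f"expected one '{key}:' line, found {count}")
    return yml_text
```

Usage (placeholder paths): `set_option_paths(text, pretrain_network_g="experiments/S2/net_g.pth", pretrain_network_S1="experiments/S1/net_g.pth")`, then write the result back to ./options/train_DiffIRS2_GAN_x4.yml.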

Download the pre-trained models and place them in ./experiments/. Then run inference with the model that matches the desired scale (4x, 2x, or 1x):

python3 inference_diffrir.py --im_path PathtoSDoutput --mask_path PathtoMASK --gt_path PathtoMASKedImage --res_path ./outputs --model_path Pathto4xModel --scale 4

python3 inference_diffrir.py --im_path PathtoSDoutput --mask_path PathtoMASK --gt_path PathtoMASKedImage --res_path ./outputs --model_path Pathto2xModel --scale 2

python3 inference_diffrir.py --im_path PathtoSDoutput --mask_path PathtoMASK --gt_path PathtoMASKedImage --res_path ./outputs --model_path Pathto1xModel --scale 1
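The three commands above differ only in --model_path and --scale. When driving inference from Python, for example over many images, a small hypothetical helper like the one below builds the same argument list using only the flags shown above; the file names in the usage lines are placeholders for your own SD output, mask, masked image, and checkpoint.

```python
import shlex


def diffrir_inference_cmd(im_path, mask_path, gt_path, model_path, scale,
                          res_path="./outputs"):
    """Build the inference_diffrir.py command shown above as an argv list."""
    # The README only shows scales 1, 2 and 4; the script may accept others.
    if scale not in (1, 2, 4):
        raise ValueError("the commands above cover scales 1, 2 and 4 only")
    return [
        "python3", "inference_diffrir.py",
        "--im_path", im_path,
        "--mask_path", mask_path,
        "--gt_path", gt_path,
        "--res_path", res_path,
        "--model_path", model_path,
        "--scale", str(scale),
    ]


# Placeholder file names; pass `cmd` to subprocess.run(cmd, check=True) to execute.
cmd = diffrir_inference_cmd("sd_output.png", "mask.png", "masked.png",
                            "experiments/model_x4.pth", scale=4)
print(shlex.join(cmd))
```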

If you find this repo useful for your research, please consider citing the paper:

@article{xia2023llmga,

title={LLMGA: Multimodal Large Language Model based Generation Assistant},

author={Xia, Bin and Wang, Shiyin and Tao, Yingfan and Wang, Yitong and Jia, Jiaya},

journal={arXiv preprint arXiv:2311.16500},

year={2023}

}