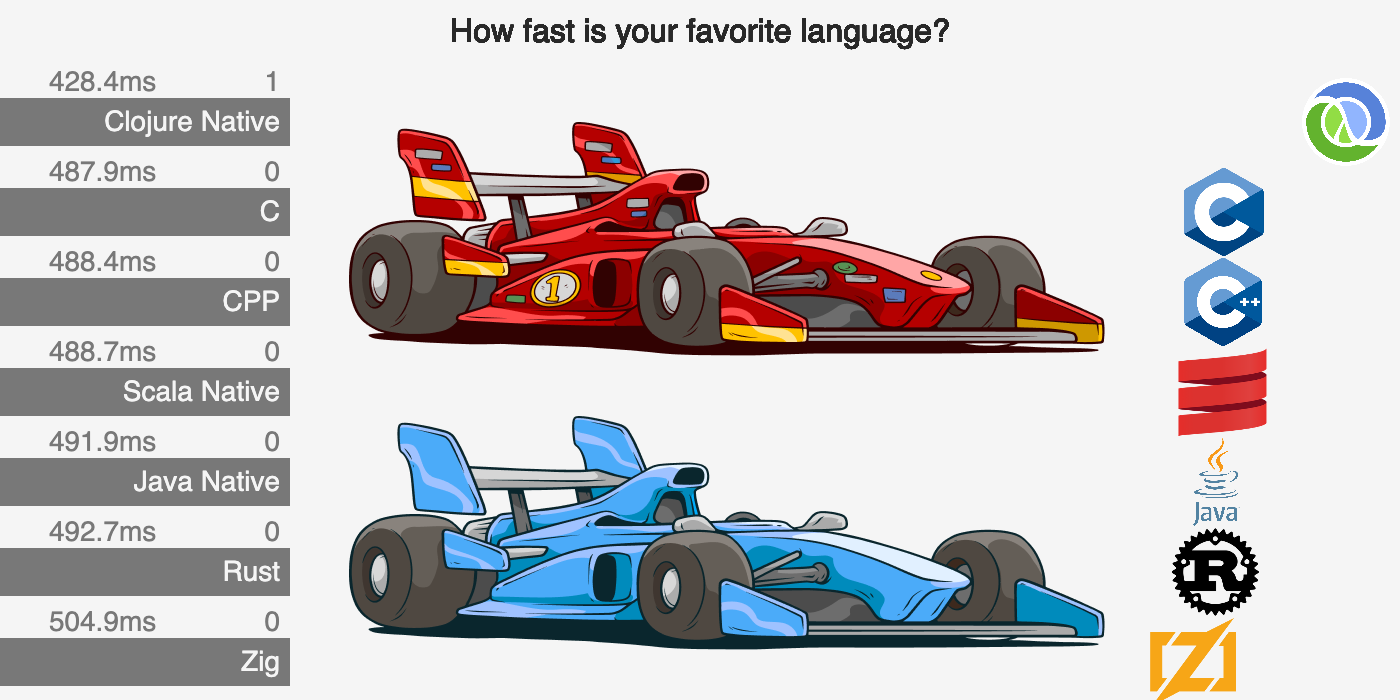

This is a playground for visualizing Benjamin Dicken's Languages benchmark.

- The visualizations are here: pez.github.io/languages-visualizations/ (There are some more notes about the experiment there.)

- The benchmarks project is Benjamin Dicken's Languages, here: github.com/bddicken/languages.

If a language you want is missing from the visualizations, let me know in an issue. If you include instructions for installing the toolchain on an Apple silicon Mac (without any Docker involved), it increases the chances that I get the language included.

The visualizations app is written with Replicant (the page with “navigation”) and Quil (the animated visualizations). There are also some Babashka tasks for running benchmarks and collecting the JSON files written by the hyperfine benchmark runner. Some of the icons come from techicons.dev.

I hope you fork this project and have some fun with it. 😄

You'll need the Clojure CLI tool (clojure) and Node.js.

Install the dependencies:

npm i

Start the app:

npx shadow-cljs watch app

When the app has compiled, you can access it at localhost:9797.

To play with the code, connect your editor to the shadow-cljs REPL and go.

To load the app with other data, update benchmark_data.cljs. There are some Babashka tasks that make it more straightforward to produce data for the app. By default, the tasks assume that you have checked out your fork of the Languages project as a sibling directory of this one.

Many languages need a compile step to produce a binary. To compile all benchmarks for any languages in the Languages project that you have a working toolchain for:

bb compile-benchmarks

Check the compile.sh script in the Languages project for the commands used to compile.

To produce the JSON results files, and to run the hello-world benchmark together with each benchmark for each language, there's a “copy” of the run.sh script from the Languages project in this repository: scripts/bench.sh. What I do is copy the run commands from run.sh and paste them into bench.sh, commenting out any languages I don't want to run. (I can't stand waiting for COBOL, for instance.)
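The real commands live in run.sh and bench.sh. As a rough, hypothetical sketch of what one entry might look like, the function below uses hyperfine to time a hello-world program alongside a benchmark and writes hyperfine's JSON export under /tmp/languages. The function name bench_one, the warmup and run counts, the JSON file naming, and the DRY_RUN switch (which prints the command instead of running it) are all assumptions, not taken from the project.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a bench.sh-style entry; NOT copied from the real script.
set -euo pipefail

OUT_DIR=/tmp/languages   # where the collect task looks by default

# bench_one LANG BENCHMARK HELLO_CMD BENCH_CMD
# Times the hello-world command and the benchmark command in one hyperfine run
# and exports the results as JSON. With DRY_RUN=1 it only prints the command.
bench_one() {
  local lang=$1 benchmark=$2 hello_cmd=$3 bench_cmd=$4
  local json="$OUT_DIR/${benchmark}-${lang}.json"   # file naming is an assumption
  local -a cmd
  cmd=(hyperfine --warmup 1 --runs 5 --export-json "$json"
       -n "hello-world $lang" "$hello_cmd"
       -n "$benchmark $lang" "$bench_cmd")
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%q ' "${cmd[@]}"   # shell-quoted, so the printed line can be pasted back
    echo
  else
    mkdir -p "$OUT_DIR"
    "${cmd[@]}"
  fi
}
```

For example, DRY_RUN=1 bench_one c fibonacci ./hello-world/c/run './fibonacci/c/run 30' prints the hyperfine command line without timing anything (the paths here are hypothetical).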

There's a Babashka task for running benchmarks in bench.sh:

bb bench-benchmarks

Since this can take a very long time, there's also a bench-some.sh script, where you can have just a few languages enabled. To run that script instead, provide two arguments to the task:

bb bench-benchmarks ../languages some

The second argument (some) can be anything; if it is left out, the full bench.sh script is run. The first argument, ../languages, points out where the Languages project is, and is the same directory the task uses by default.
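The argument handling described above can be sketched in shell. The real task is Babashka code, so this is only an illustration of the rule: the first argument defaults to ../languages, and the mere presence of a second argument selects bench-some.sh. The function name pick_bench_script and the scripts/bench-some.sh location are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of how bench-benchmarks picks its script; not the project's code.
# Arg 1: path to the Languages project (defaults to ../languages).
# Arg 2: optional; any value at all selects bench-some.sh instead of bench.sh.
pick_bench_script() {
  local languages_dir=${1:-../languages}
  local script=scripts/bench.sh
  if [[ $# -ge 2 ]]; then
    script=scripts/bench-some.sh   # assumed location, next to bench.sh
  fi
  # Print which script would run and against which Languages checkout.
  echo "$script $languages_dir"
}
```

Calling pick_bench_script with no arguments prints scripts/bench.sh ../languages, while pick_bench_script ../languages some prints scripts/bench-some.sh ../languages.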

Since compiling can also take some time, there's a convenience task for doing both the compile and the benchmark run, and you can go out for a walk or whatever while your computer crunches.

bb compile-and-bench

This task takes the same arguments as bench-benchmarks.

To produce the map for pasting in benchmark_data.cljs:

bb collect-benchmark-data

The task looks for the data in /tmp/languages (since that is where bench.sh places the files). You can tell it to fetch the files from somewhere else:

bb collect-benchmark-data somewhere-else

For example, I run the benchmarks on another machine, which I have mounted on my regular Mac:

bb collect-benchmark-data /Volumes/Macintosh\ HD-1/tmp/languages/

The collect task (like the others) is really “happy path”, with almost no error checking. If it crashes on you, connect your editor to a Babashka REPL and go hunting. (Or println-debug like some caveman.) Most likely some JSON file contains something funny.
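Before reaching for the REPL, a crude pre-check can narrow down which file is the culprit. The hypothetical helper below (not part of the project) lists the JSON files under the same default directory, /tmp/languages, and flags any that lack the "results" key that hyperfine's JSON export contains. It only greps for the key, so it will not catch every kind of malformed file.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of the project: flags JSON files that don't
# look like hyperfine exports. Directory defaults to /tmp/languages.
check_bench_json() {
  local dir=${1:-/tmp/languages}
  local f
  while IFS= read -r f; do
    if grep -q '"results"' "$f"; then
      echo "ok $f"
    else
      echo "suspect $f"   # no "results" key anywhere: likely a failed or partial run
    fi
  done < <(find "$dir" -type f -name '*.json' | sort)
}
```

Passing a path works the same way as with the collect task, e.g. check_bench_json "/Volumes/Macintosh HD-1/tmp/languages".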

If you share on X, please tag @pappapez and @BenjDicken.