- Quick Conversion: Supports converting all GitHub, GitLab, Gitee, Gitea and other code repositories into knowledge bases within minutes.

- Multi-language Support: Supports code analysis and documentation generation for all programming languages.

- Code Structure Diagrams: Automatically generates Mermaid diagrams to help understand code structure.

- Custom Model Support: Supports custom models and custom APIs for flexible extension.

- AI Intelligent Analysis: AI-based code analysis and code relationship understanding.

- SEO Friendly: Generates SEO-friendly documentation and knowledge bases based on Next.js for easy search engine crawling.

- Conversational Interaction: Supports conversations with AI to obtain detailed code information and usage methods for deep code understanding.

- Support multiple code repositories (GitHub, GitLab, Gitee, Gitea, etc.)

- Support multiple programming languages (Python, Java, C#, JavaScript, etc.)

- Support repository management (CRUD operations on repositories)

- Support multiple AI providers (OpenAI, AzureOpenAI, Anthropic, etc.)

- Support multiple databases (SQLite, PostgreSQL, SqlServer, MySQL, etc.)

- Support multiple languages (Chinese, English, French, etc.)

- Support uploading ZIP files and local files

- Provide a data fine-tuning platform to generate fine-tuning datasets

- Support directory-level repository management with dynamic directory and document generation

- Support repository directory modification management

- Support user management (CRUD operations on users)

- Support user permission management

- Support repository-level generation of datasets for different fine-tuning frameworks

OpenDeepWiki is an open-source project inspired by DeepWiki, developed based on .NET 9 and Semantic Kernel. It aims to help developers better understand and utilize code repositories, providing features such as code analysis, documentation generation, and knowledge graph construction.

Main Features:

- Analyze code structure

- Understand repository core concepts

- Generate code documentation

- Automatically generate README.md for code

- Support MCP (Model Context Protocol)

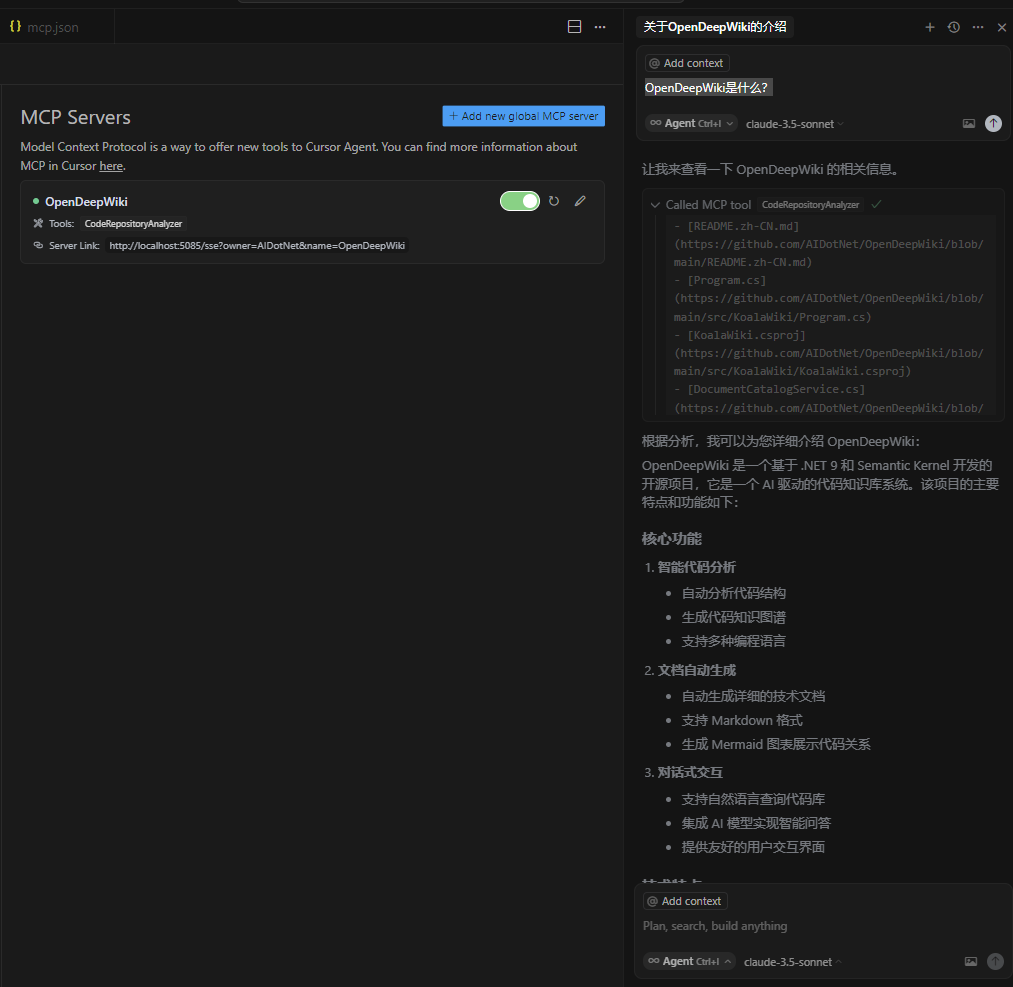

OpenDeepWiki supports the MCP protocol:

- Can serve as a single repository MCPServer for repository analysis.

Example configuration:

    {
      "mcpServers": {
        "OpenDeepWiki": {
          "url": "http://Your OpenDeepWiki service IP:port/sse?owner=AIDotNet&name=OpenDeepWiki"
        }
      }
    }

- owner: Repository organization or owner name

- name: Repository name
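As a minimal sketch, the configuration above can be generated for any repository by filling in the owner and name query parameters. The helper name `build_mcp_config` and the `http://localhost:8080` address are illustrative assumptions, not part of OpenDeepWiki.

```python
import json
from urllib.parse import urlencode


def build_mcp_config(base_url: str, owner: str, name: str) -> dict:
    """Return an mcpServers entry pointing at one repository's SSE endpoint."""
    # urlencode escapes any characters that are not safe in a query string.
    query = urlencode({"owner": owner, "name": name})
    return {
        "mcpServers": {
            "OpenDeepWiki": {"url": f"{base_url.rstrip('/')}/sse?{query}"}
        }
    }


if __name__ == "__main__":
    # Placeholder address: replace with your own OpenDeepWiki service IP and port.
    config = build_mcp_config("http://localhost:8080", "AIDotNet", "OpenDeepWiki")
    print(json.dumps(config, indent=2))
```

Generating the entry this way keeps the owner and name values correctly escaped if they ever contain characters that are not URL-safe.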

After adding the repository, you can test it by asking a question such as "What is OpenDeepWiki?".

This way, OpenDeepWiki can serve as an MCPServer for other AI models to call, facilitating analysis and understanding of open-source projects.

- Clone the repository

    git clone https://github.com/AIDotNet/OpenDeepWiki.git
    cd OpenDeepWiki

- Modify environment variable configuration in docker-compose.yml:

- OpenAI example:

    services:
      koalawiki:
        environment:
          - KOALAWIKI_REPOSITORIES=/repositories
          - TASK_MAX_SIZE_PER_USER=5 # Maximum number of parallel AI document generation tasks per user
          - CHAT_MODEL=DeepSeek-V3 # Model must support function calling
          - ANALYSIS_MODEL= # Analysis model for generating repository directory structure
          - CHAT_API_KEY= # Your API Key
          - LANGUAGE= # Default generation language, e.g., "Chinese"
          - ENDPOINT=https://api.token-ai.cn/v1
          - DB_TYPE=sqlite
          - MODEL_PROVIDER=OpenAI # Model provider, supports OpenAI, AzureOpenAI, Anthropic
          - DB_CONNECTION_STRING=Data Source=/data/KoalaWiki.db
          - EnableSmartFilter=true # Whether to enable smart filtering, affects AI's ability to get repository file directories
          - UPDATE_INTERVAL # Repository incremental update interval in days
          - MAX_FILE_LIMIT=100 # Maximum upload file limit in MB
          - DEEP_RESEARCH_MODEL= # Deep research model, if empty uses CHAT_MODEL
          - ENABLE_INCREMENTAL_UPDATE=true # Whether to enable incremental updates
          - ENABLE_CODED_DEPENDENCY_ANALYSIS=false # Whether to enable code dependency analysis, may affect code quality
          - ENABLE_WAREHOUSE_COMMIT=true # Whether to enable warehouse commit
          - ENABLE_FILE_COMMIT=true # Whether to enable file commit
          - REFINE_AND_ENHANCE_QUALITY=true # Whether to refine and enhance quality
          - ENABLE_WAREHOUSE_FUNCTION_PROMPT_TASK=true # Whether to enable warehouse function prompt task
          - ENABLE_WAREHOUSE_DESCRIPTION_TASK=true # Whether to enable warehouse description task
          - CATALOGUE_FORMAT=compact # Directory structure format (compact, json, pathlist, unix)
          - ENABLE_CODE_COMPRESSION=false # Whether to enable code compression
          - READ_MAX_TOKENS=100000 # Maximum number of tokens the AI may read from files, to prevent unbounded file reading; recommended value is about 70% of the model's maximum tokens
          # Feishu Bot configuration (optional)
          - FeishuAppId=
          - FeishuAppSecret=
          - FeishuBotName=KoalaWiki

- AzureOpenAI and Anthropic configurations are similar; only ENDPOINT and MODEL_PROVIDER need to be adjusted.
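The READ_MAX_TOKENS guideline in the example above (about 70% of the model's maximum tokens) can be worked out with a small helper. This is only a sketch: the function name is hypothetical, and the 128,000-token figure is an example input, not a value taken from any particular model.

```python
def recommended_read_max_tokens(model_max_tokens: int, percent: int = 70) -> int:
    """Return `percent` of the model's maximum token count, rounded down."""
    if model_max_tokens <= 0:
        raise ValueError("model_max_tokens must be positive")
    # Integer arithmetic avoids floating-point rounding surprises.
    return model_max_tokens * percent // 100


if __name__ == "__main__":
    # Example input only; substitute your own model's maximum token count.
    print(f"READ_MAX_TOKENS={recommended_read_max_tokens(128_000)}")
```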

- DB_TYPE=sqlite
- DB_CONNECTION_STRING=Data Source=/data/KoalaWiki.db

- DB_TYPE=postgres
- DB_CONNECTION_STRING=Host=localhost;Database=KoalaWiki;Username=postgres;Password=password

- DB_TYPE=sqlserver
- DB_CONNECTION_STRING=Server=localhost;Database=KoalaWiki;Trusted_Connection=true;

- DB_TYPE=mysql
- DB_CONNECTION_STRING=Server=localhost;Database=KoalaWiki;Uid=root;Pwd=password;

- Start services

Using Makefile commands:

    # Build all Docker images
    make build

    # Start all services in background
    make up

    # Start in development mode (with visible logs)
    make dev

Visit http://localhost:8090 to access the knowledge base page.

For Windows users without a make environment, use Docker Compose directly:

    # Build all Docker images
    docker-compose build

    # Start all services in the background
    docker-compose up -d

    # Start in the foreground (with visible logs)
    docker-compose up

    # Stop and remove the containers
    docker-compose down

    # Follow the logs
    docker-compose logs -f

- Build for specific architecture:

    docker-compose build --build-arg ARCH=arm64
    docker-compose build --build-arg ARCH=amd64

- Build only backend or frontend:

    docker-compose build koalawiki
    docker-compose build koalawiki-web

- One-click deployment to Sealos (supports public network access):

For detailed steps, please refer to: One-click Sealos Deployment of OpenDeepWiki

OpenDeepWiki leverages AI to achieve:

- Clone code repository locally

- Read .gitignore configuration to ignore irrelevant files

- Recursively scan directories to get all files and directories

- Determine if file count exceeds threshold; if so, call AI model for intelligent directory filtering

- Parse AI-returned directory JSON data

- Generate or update README.md

- Call AI model to generate repository classification information and project overview

- Clean project analysis tag content and save project overview to database

- Call AI to generate thinking directory (task list)

- Recursively process directory tasks to generate document directory structure

- Save directory structure to database

- Process incomplete document tasks

- If Git repository, clean old commit records, call AI to generate update log and save
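The first few steps of this list (reading .gitignore, scanning recursively, and checking the file count) can be illustrated with a simplified sketch. It is not OpenDeepWiki's implementation, which is written in .NET: all function names are hypothetical, the threshold of 800 is an assumed placeholder, and the pattern matching handles only simple name globs, not full .gitignore semantics such as negation or anchored paths.

```python
import fnmatch
import os
from pathlib import Path


def load_ignore_patterns(repo_root: Path) -> list[str]:
    """Read non-empty, non-comment lines from .gitignore (simplified)."""
    gitignore = repo_root / ".gitignore"
    if not gitignore.is_file():
        return []
    lines = gitignore.read_text(encoding="utf-8").splitlines()
    return [
        ln.strip().rstrip("/")
        for ln in lines
        if ln.strip() and not ln.lstrip().startswith("#")
    ]


def is_ignored(name: str, patterns: list[str]) -> bool:
    """Match a single file or directory name against the patterns."""
    return name == ".git" or any(fnmatch.fnmatch(name, p) for p in patterns)


def scan_repository(repo_root: Path) -> list[str]:
    """Recursively collect relative file paths, skipping ignored entries."""
    patterns = load_ignore_patterns(repo_root)
    found: list[str] = []
    for current, dirs, files in os.walk(repo_root):
        # Prune ignored directories in place so os.walk does not descend into them.
        dirs[:] = sorted(d for d in dirs if not is_ignored(d, patterns))
        for f in sorted(files):
            if not is_ignored(f, patterns):
                found.append(Path(current, f).relative_to(repo_root).as_posix())
    return found


def needs_ai_filtering(paths: list[str], threshold: int = 800) -> bool:
    """True when the file count exceeds the (assumed) threshold."""
    return len(paths) > threshold
```

When `needs_ai_filtering` returns true, the pipeline described above would hand the directory listing to the AI model for filtering; otherwise the scanned structure is used directly.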

graph TD

A[Clone code repository] --> B[Read .gitignore configuration to ignore files]

B --> C[Recursively scan directories to get all files and directories]

C --> D{Does file count exceed threshold?}

D -- No --> E[Directly return directory structure]

D -- Yes --> F[Call AI model for intelligent directory structure filtering]

F --> G[Parse AI-returned directory JSON data]

E --> G

G --> H[Generate or update README.md]

H --> I[Call AI model to generate repository classification information]

I --> J[Call AI model to generate project overview information]

J --> K[Clean project analysis tag content]

K --> L[Save project overview to database]

L --> M[Call AI to generate thinking directory task list]

M --> N[Recursively process directory tasks to generate DocumentCatalog]

N --> O[Save directory structure to database]

O --> P[Process incomplete document tasks]

P --> Q{Is repository type Git?}

Q -- Yes --> R[Clean old commit records]

R --> S[Call AI to generate update log]

S --> T[Save update log to database]

Q -- No --> T

- KOALAWIKI_REPOSITORIES: Repository storage path

- TASK_MAX_SIZE_PER_USER: Maximum parallel document generation tasks per user for AI

- CHAT_MODEL: Chat model (must support function calling)

- ENDPOINT: API endpoint

- ANALYSIS_MODEL: Analysis model for generating repository directory structure

- CHAT_API_KEY: API key

- LANGUAGE: Document generation language

- DB_TYPE: Database type, supports sqlite, postgres, sqlserver, mysql (default: sqlite)

- MODEL_PROVIDER: Model provider, default OpenAI, supports AzureOpenAI, Anthropic

- DB_CONNECTION_STRING: Database connection string

- EnableSmartFilter: Whether to enable smart filtering, affects AI's ability to get repository directories

- UPDATE_INTERVAL: Repository incremental update interval (days)

- MAX_FILE_LIMIT: Maximum upload file limit (MB)

- DEEP_RESEARCH_MODEL: Deep research model, if empty uses CHAT_MODEL

- ENABLE_INCREMENTAL_UPDATE: Whether to enable incremental updates

- ENABLE_CODED_DEPENDENCY_ANALYSIS: Whether to enable code dependency analysis, may affect code quality

- ENABLE_WAREHOUSE_COMMIT: Whether to enable warehouse commit

- ENABLE_FILE_COMMIT: Whether to enable file commit

- REFINE_AND_ENHANCE_QUALITY: Whether to refine and enhance quality

- ENABLE_WAREHOUSE_FUNCTION_PROMPT_TASK: Whether to enable warehouse function prompt task

- ENABLE_WAREHOUSE_DESCRIPTION_TASK: Whether to enable warehouse description task

- CATALOGUE_FORMAT: Directory structure format (compact, json, pathlist, unix)

- ENABLE_CODE_COMPRESSION: Whether to enable code compression

- MAX_FILE_READ_COUNT: Maximum file read count limit for AI, prevents unlimited file reading and improves processing efficiency (default: 10, 0 = no limit)

- FeishuAppId: Feishu App ID (required if enabling Feishu Bot)

- FeishuAppSecret: Feishu App Secret (required if enabling Feishu Bot)

- FeishuBotName: Feishu bot display name (optional)
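To make the documented defaults and fallbacks concrete, here is a minimal sketch that reads a few of these variables from the environment. It does not reflect how OpenDeepWiki (a .NET application) actually parses its configuration; the `Settings` class, the `load_settings` function, and the strict case-sensitive validation are assumptions made for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping

SUPPORTED_DB_TYPES = {"sqlite", "postgres", "sqlserver", "mysql"}
SUPPORTED_PROVIDERS = {"OpenAI", "AzureOpenAI", "Anthropic"}


@dataclass(frozen=True)
class Settings:
    chat_model: str
    deep_research_model: str
    db_type: str
    model_provider: str
    max_file_read_count: int


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read a subset of the variables, applying the documented defaults."""
    chat_model = env.get("CHAT_MODEL", "")
    db_type = env.get("DB_TYPE") or "sqlite"          # default: sqlite
    provider = env.get("MODEL_PROVIDER") or "OpenAI"  # default: OpenAI
    if db_type not in SUPPORTED_DB_TYPES:
        raise ValueError(f"Unsupported DB_TYPE: {db_type}")
    if provider not in SUPPORTED_PROVIDERS:
        raise ValueError(f"Unsupported MODEL_PROVIDER: {provider}")
    return Settings(
        chat_model=chat_model,
        # An empty DEEP_RESEARCH_MODEL falls back to CHAT_MODEL.
        deep_research_model=env.get("DEEP_RESEARCH_MODEL") or chat_model,
        db_type=db_type,
        model_provider=provider,
        # Default is 10; an explicit "0" means no limit and is kept as 0.
        max_file_read_count=int(env.get("MAX_FILE_READ_COUNT") or 10),
    )


if __name__ == "__main__":
    print(load_settings({"CHAT_MODEL": "DeepSeek-V3"}))
```

Passing a plain dictionary instead of `os.environ` makes the defaults easy to check without touching the real environment.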

- Purpose: Connect the current repository to Feishu group/DM as a knowledge bot for Q&A and content delivery.

- Callback route: /api/feishu-bot/{owner}/{name} (copy the full URL from the "Feishu Bot" button on the repository page).

- Requirement: Service must be publicly accessible; message encryption (Encrypt Key) is not supported yet.

- Type: Internal App (for your organization).

- Capability: Enable "Bot". Publish to the organization and install it.

- Permissions (at minimum, per platform guidance):

  - Message send/read related scopes (e.g., im:message, im:message:send_as_bot).

  - Event subscription related scopes (for receiving message events).

- Open "Event Subscriptions" and disable "Encrypt Key".

- Subscribe to event: im.message.receive_v1.

- Request URL: https://your-domain/api/feishu-bot/{owner}/{name}.

  - {owner} is the repository organization or owner, e.g., AIDotNet.

  - {name} is the repository name, e.g., OpenDeepWiki.

- Save to complete the URL verification (backend already handles the challenge response).

Tip: You can also click the "Feishu Bot" button on the repository page to copy the dedicated callback URL.
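For orientation, the sketch below shows how the Request URL is assembled and what an unencrypted URL verification exchange looks like in general. It assumes Feishu's plain handshake, in which the platform posts a JSON body with `"type": "url_verification"` and a `challenge` value that must be echoed back. The function names are hypothetical, and this is not the OpenDeepWiki backend's code, which already handles the step for you.

```python
from typing import Optional
from urllib.parse import quote


def callback_url(domain: str, owner: str, name: str) -> str:
    """Build the Request URL for one repository's Feishu bot."""
    # quote() escapes characters that are not safe inside a URL path segment.
    return (
        f"https://{domain}/api/feishu-bot/"
        f"{quote(owner, safe='')}/{quote(name, safe='')}"
    )


def handle_url_verification(payload: dict) -> Optional[dict]:
    """Echo the challenge for a verification request; return None otherwise."""
    if payload.get("type") == "url_verification" and "challenge" in payload:
        return {"challenge": payload["challenge"]}
    return None


if __name__ == "__main__":
    print(callback_url("your-domain", "AIDotNet", "OpenDeepWiki"))
    print(handle_url_verification({"type": "url_verification", "challenge": "abc"}))
```

Because the handshake is sent unencrypted in this mode, it only works when Encrypt Key is disabled, which matches the requirement stated above.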

Set the following in your backend service (see the docker-compose example above):

- FeishuAppId: Feishu App ID

- FeishuAppSecret: Feishu App Secret

- FeishuBotName: Bot display name (optional)

- After installing the app, add the bot to the target group.

- Group: @bot + your question (answers using the current repository's knowledge).

- Direct Message: send your question directly.

- Supports text and image replies (e.g., mind map images).

- No response/callback failed: ensure the Request URL is publicly reachable and that Nginx proxies /api/ to the backend.

- "Encryption enabled" message: disable Encrypt Key (current version doesn't support encrypted messages).

- 403/insufficient permissions: make sure the app is installed in the org and required scopes/events are granted.

Makefile commands:

    make build-arm # ARM architecture
    make build-amd # AMD architecture
    make build-backend-arm # Backend only ARM
    make build-frontend-amd # Frontend only AMD

- Discord: join us

This project is licensed under the MIT License. See LICENSE for details.