This repository contains a curated list of research papers and resources focusing on saliency and scanpath prediction, human attention, and human visual search.

❗ Latest update: 11 July 2024. ❗ This repo is a work in progress. New updates are coming soon, stay tuned! 🚧

20 April 2024: Our survey paper has been accepted for publication at the IJCAI 2024 Survey Track!

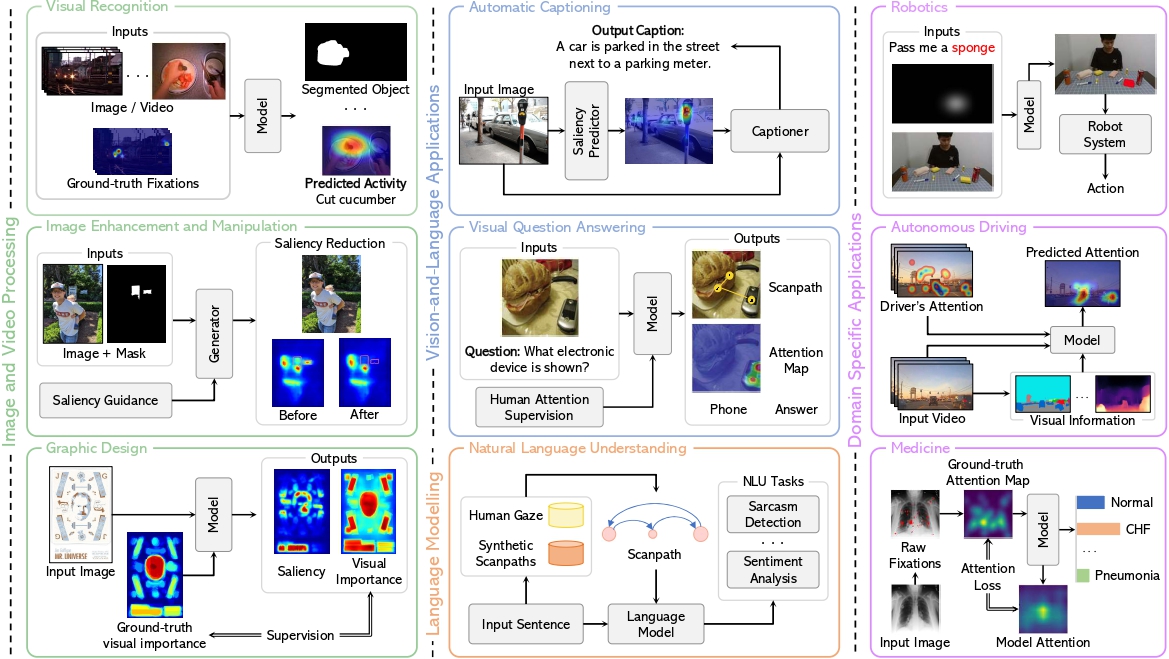

🔥🔥 Trends, Applications, and Challenges in Human Attention Modelling 🔥🔥

Authors: Giuseppe Cartella, Marcella Cornia, Vittorio Cuculo, Alessandro D'Amelio, Dario Zanca, Giuseppe Boccignone, Rita Cucchiara

Human Attention Modelling

Saliency Prediction

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2024 | WACV | Learning Saliency from Fixations | Yasser Abdelaziz Dahou Djilali et al. | 📜 Paper / Code |
| 2023 | CVPR | Learning from Unique Perspectives: User-aware Saliency Modeling | Shi Chen et al. | 📜 Paper |
| 2023 | CVPR | TempSAL - Uncovering Temporal Information for Deep Saliency Prediction | Bahar Aydemir et al. | 📜 Paper / Code |
| 2023 | BMVC | Clustered Saliency Prediction | Rezvan Sherkat et al. | 📜 Paper |
| 2023 | NeurIPS | What Do Deep Saliency Models Learn about Visual Attention? | Shi Chen et al. | 📜 Paper / Code |
| 2022 | Neurocomputing | TranSalNet: Towards perceptually relevant visual saliency prediction | Jianxun Lou et al. | 📜 Paper / Code |
| 2020 | CVPR | STAViS: Spatio-Temporal AudioVisual Saliency Network | Antigoni Tsiami et al. | 📜 Paper / Code |
| 2020 | CVPR | How much time do you have? Modeling multi-duration saliency | Camilo Fosco et al. | 📜 Paper / Code / Project Page |
| 2018 | IEEE Transactions on Image Processing | Predicting Human Eye Fixations via an LSTM-based Saliency Attentive Model | Marcella Cornia et al. | 📜 Paper / Code |
| 2015 | CVPR | SALICON: Saliency in Context | Ming Jiang et al. | 📜 Paper / Project Page |
| 2009 | ICCV | Learning to Predict Where Humans Look | Tilke Judd et al. | 📜 Paper |
| 1998 | TPAMI | A Model of Saliency-Based Visual Attention for Rapid Scene Analysis | Laurent Itti et al. | 📜 Paper |

Scanpath Prediction

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2024 | CVPR | Beyond Average: Individualized Visual Scanpath Prediction | Xianyu Chen et al. | 📜 Paper |
| 2024 | CVPR | Unifying Top-down and Bottom-up Scanpath Prediction Using Transformers | Zhibo Yang et al. | 📜 Paper / Code |
| 2023 | arXiv | Contrastive Language-Image Pretrained Models are Zero-Shot Human Scanpath Predictors | Dario Zanca et al. | 📜 Paper / Code + Dataset |
| 2023 | CVPR | Gazeformer: Scalable, Effective and Fast Prediction of Goal-Directed Human Attention | Sounak Mondal et al. | 📜 Paper / Code |
| 2022 | ECCV | Target-absent Human Attention | Zhibo Yang et al. | 📜 Paper / Code |
| 2022 | TMLR | Behind the Machine's Gaze: Neural Networks with Biologically-inspired Constraints Exhibit Human-like Visual Attention | Leo Schwinn et al. | 📜 Paper / Code |
| 2022 | Journal of Vision | DeepGaze III: Modeling free-viewing human scanpaths with deep learning | Matthias Kümmerer et al. | 📜 Paper / Code |
| 2021 | CVPR | Predicting Human Scanpaths in Visual Question Answering | Xianyu Chen et al. | 📜 Paper / Code |
| 2019 | TPAMI | Gravitational Laws of Focus of Attention | Dario Zanca et al. | 📜 Paper / Code |
| 2015 | Vision Research | Saccadic model of eye movements for free-viewing condition | Olivier Le Meur et al. | 📜 Paper |

Integrating Human Attention in AI models

Image and Video Processing

Visual Recognition

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | IJCV | Joint Learning of Visual-Audio Saliency Prediction and Sound Source Localization on Multi-face Videos | Minglang Qiao et al. | 📜 Paper / Code |
| 2022 | ECML PKDD | Foveated Neural Computation | Matteo Tiezzi et al. | 📜 Paper / Code |
| 2021 | WACV | Integrating Human Gaze into Attention for Egocentric Activity Recognition | Kyle Min et al. | 📜 Paper / Code |
| 2019 | CVPR | Learning Unsupervised Video Object Segmentation through Visual Attention | Wenguan Wang et al. | 📜 Paper / Code |
| 2019 | CVPR | Shifting more attention to video salient object detection | Deng-Ping Fan et al. | 📜 Paper / Code |

Graphic Design

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2020 | ACM Symposium on UIST (User Interface Software and Technology) | Predicting Visual Importance Across Graphic Design Types | Camilo Fosco et al. | 📜 Paper / Code |
| 2020 | ACM MobileHCI | Understanding Visual Saliency in Mobile User Interfaces | Luis A. Leiva et al. | 📜 Paper |
| 2017 | ACM Symposium on UIST (User Interface Software and Technology) | Learning Visual Importance for Graphic Designs and Data Visualizations | Zoya Bylinskii et al. | 📜 Paper / Code |

Image Enhancement and Manipulation

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | CVPR | Realistic saliency guided image enhancement | S. Mahdi H. Miangoleh et al. | 📜 Paper / Code / Project Page |
| 2022 | CVPR | Deep saliency prior for reducing visual distraction | Kfir Aberman et al. | 📜 Paper / Project Page |
| 2021 | CVPR | Saliency-guided image translation | Lai Jiang et al. | 📜 Paper |
| 2017 | arXiv | Guiding human gaze with convolutional neural networks | Leon A. Gatys et al. | 📜 Paper |

Image Quality Assessment

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | CVPR | ScanDMM: A Deep Markov Model of Scanpath Prediction for 360° Images | Xiangjie Sui et al. | 📜 Paper / Code |
| 2021 | ICCV Workshops | Saliency-Guided Transformer Network combined with Local Embedding for No-Reference Image Quality Assessment | Mengmeng Zhu et al. | 📜 Paper |
| 2019 | ACMMM | SGDNet: An End-to-End Saliency-Guided Deep Neural Network for No-Reference Image Quality Assessment | Sheng Yang et al. | 📜 Paper / Code |

Vision-and-Language Applications

Automatic Captioning

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2020 | EMNLP | Generating Image Descriptions via Sequential Cross-Modal Alignment Guided by Human Gaze | Ece Takmaz et al. | 📜 Paper / Code |
| 2019 | ICCV | Human Attention in Image Captioning: Dataset and Analysis | Sen He et al. | 📜 Paper / Code |
| 2018 | ACM TOMM | Paying More Attention to Saliency: Image Captioning with Saliency and Context Attention | Marcella Cornia et al. | 📜 Paper |
| 2017 | CVPR | Supervising Neural Attention Models for Video Captioning by Human Gaze Data | Youngjae Yu et al. | 📜 Paper / Code |
| 2016 | arXiv | Seeing with Humans: Gaze-Assisted Neural Image Captioning | Yusuke Sugano et al. | 📜 Paper |

Visual Question Answering

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | EMNLP | GazeVQA: A Video Question Answering Dataset for Multiview Eye-Gaze Task-Oriented Collaborations | Muhammet Furkan Ilaslan et al. | 📜 Paper / Code |
| 2023 | CVPR Workshops | Multimodal Integration of Human-Like Attention in Visual Question Answering | Ekta Sood et al. | 📜 Paper / Project Page |
| 2021 | CoNLL | VQA-MHUG: A Gaze Dataset to Study Multimodal Neural Attention in Visual Question Answering | Ekta Sood et al. | 📜 Paper / Dataset + Project Page |
| 2020 | ECCV | AiR: Attention with Reasoning Capability | Shi Chen et al. | 📜 Paper / Code |
| 2018 | AAAI | Exploring Human-like Attention Supervision in Visual Question Answering | Tingting Qiao et al. | 📜 Paper / Code |
| 2016 | EMNLP | Human Attention in Visual Question Answering: Do Humans and Deep Networks Look at the Same Regions? | Abhishek Das et al. | 📜 Paper |

Language Modelling

Machine Reading Comprehension

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | ACL Workshops | Native Language Prediction from Gaze: a Reproducibility Study | Lina Skerath et al. | 📜 Paper / Code |
| 2022 | ETRA | Inferring Native and Non-Native Human Reading Comprehension and Subjective Text Difficulty from Scanpaths | David R. Reich et al. | 📜 Paper / Code |
| 2017 | ACL | Predicting Native Language from Gaze | Yevgeni Berzak et al. | 📜 Paper |

Natural Language Understanding

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | EMNLP | Pre-Trained Language Models Augmented with Synthetic Scanpaths for Natural Language Understanding | Shuwen Deng et al. | 📜 Paper / Code |
| 2023 | EACL | Synthesizing Human Gaze Feedback for Improved NLP Performance | Varun Khurana et al. | 📜 Paper |
| 2020 | NeurIPS | Improving Natural Language Processing Tasks with Human Gaze-Guided Neural Attention | Ekta Sood et al. | 📜 Paper / Project Page |

Domain-Specific Applications

Robotics

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | IEEE RA-L | GVGNet: Gaze-Directed Visual Grounding for Learning Under-Specified Object Referring Intention | Kun Qian et al. | 📜 Paper |
| 2022 | RSS | Gaze Complements Control Input for Goal Prediction During Assisted Teleoperation | Reuben M. Aronson et al. | 📜 Paper |
| 2019 | CoRL | Understanding Teacher Gaze Patterns for Robot Learning | Akanksha Saran et al. | 📜 Paper / Code |
| 2019 | CoRL | Nonverbal Robot Feedback for Human Teachers | Sandy H. Huang et al. | 📜 Paper |

Autonomous Driving

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2023 | ICCV | FBLNet: FeedBack Loop Network for Driver Attention Prediction | Yilong Chen et al. | 📜 Paper |
| 2022 | IEEE Transactions on Intelligent Transportation Systems | DADA: Driver Attention Prediction in Driving Accident Scenarios | Jianwu Fang et al. | 📜 Paper / Code |
| 2021 | ICCV | MEDIRL: Predicting the Visual Attention of Drivers via Deep Inverse Reinforcement Learning | Sonia Baee et al. | 📜 Paper / Code / Project Page |
| 2020 | CVPR | “Looking at the right stuff” - Guided semantic-gaze for autonomous driving | Anwesan Pal et al. | 📜 Paper / Code |
| 2019 | ITSC | DADA-2000: Can Driving Accident be Predicted by Driver Attention? Analyzed by A Benchmark | Jianwu Fang et al. | 📜 Paper / Code |
| 2018 | ACCV | Predicting Driver Attention in Critical Situations | Ye Xia et al. | 📜 Paper / Code |
| 2018 | TPAMI | Predicting the Driver’s Focus of Attention: the DR(eye)VE Project | Andrea Palazzi et al. | 📜 Paper / Code |

Medicine

| Year | Conference / Journal | Title | Authors | Links |
| --- | --- | --- | --- | --- |
| 2024 | MICCAI | Weakly-supervised Medical Image Segmentation with Gaze Annotations | Yuan Zhong et al. | 📜 Paper / Code |
| 2024 | AAAI | Mining Gaze for Contrastive Learning toward Computer-Assisted Diagnosis | Zihao Zhao et al. | 📜 Paper / Code |
| 2024 | WACV | GazeGNN: A Gaze-Guided Graph Neural Network for Chest X-ray Classification | Bin Wang et al. | 📜 Paper / Code |
| 2023 | WACV | Probabilistic Integration of Object Level Annotations in Chest X-ray Classification | Tom van Sonsbeek et al. | 📜 Paper |
| 2023 | IEEE Transactions on Medical Imaging | Eye-gaze-guided Vision Transformer for Rectifying Shortcut Learning | Chong Ma et al. | 📜 Paper |
| 2023 | Transactions on Neural Networks and Learning Systems | Rectify ViT Shortcut Learning by Visual Saliency | Chong Ma et al. | 📜 Paper |
| 2022 | IEEE Transactions on Medical Imaging | Follow My Eye: Using Gaze to Supervise Computer-Aided Diagnosis | Sheng Wang et al. | 📜 Paper / Code |
| 2022 | MICCAI | GazeRadar: A Gaze and Radiomics-Guided Disease Localization Framework | Moinak Bhattacharya et al. | 📜 Paper / Code |
| 2022 | ECCV | RadioTransformer: A Cascaded Global-Focal Transformer for Visual Attention–guided Disease Classification | Moinak Bhattacharya et al. | 📜 Paper / Code |
| 2021 | Nature Scientific Data | Creation and validation of a chest X-ray dataset with eye-tracking and report dictation for AI development | Alexandros Karargyris et al. | 📜 Paper / Code |
| 2021 | BMVC | Human Attention in Fine-grained Classification | Yao Rong et al. | 📜 Paper / Code |
| 2018 | Journal of Medical Imaging | Modeling visual search behavior of breast radiologists using a deep convolution neural network | Suneeta Mall et al. | 📜 Paper |

Datasets & Benchmarks 📂📎

Saliency Prediction

Scanpath Prediction

- Fork this repository and clone it locally.

- Create a new branch for your changes: `git checkout -b feature-name`.

- Make your changes and commit them: `git commit -m 'Description of the changes'`.

- Push to your fork: `git push origin feature-name`.

- Open a pull request on the original repository, providing a description of your changes.

This project is in constant development, and we welcome contributions that add the latest research papers in the field, as well as issue reports 💥💥.