This code repo is meant to demystify the MATLAB functions `projectLidarPointsOnImage` and `fuseCameraToLidar` by reproducing their exact results with MATLAB data (camera intrinsics, extrinsics, image and PCD data). The mathematical theory can be found in this post.

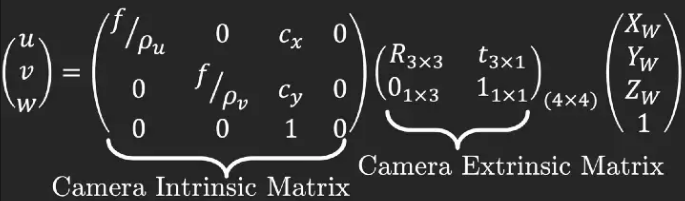

Given a pinhole camera model (camera intrinsics), a point in the 3D world can be mapped onto the 2D camera image plane with

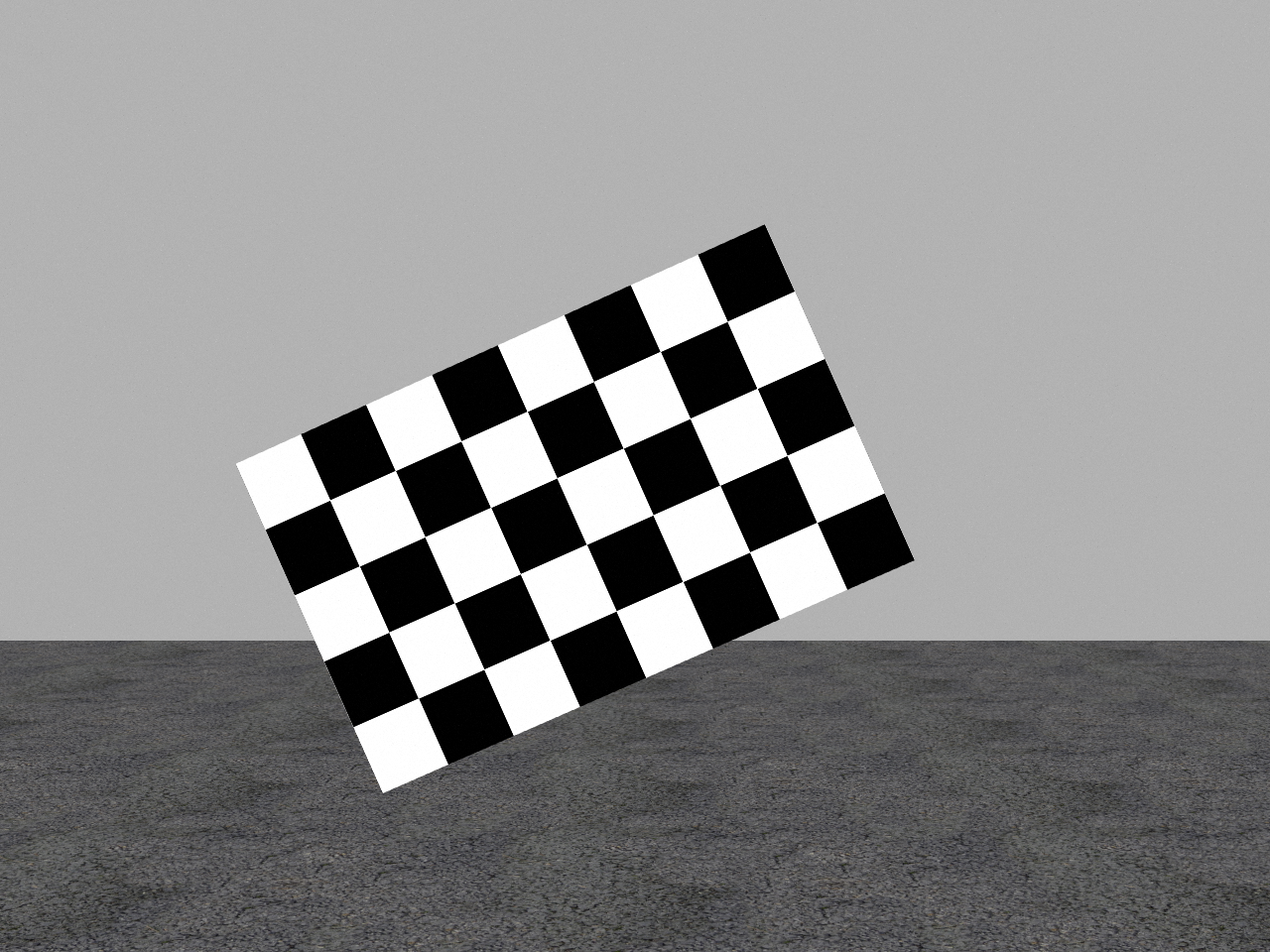

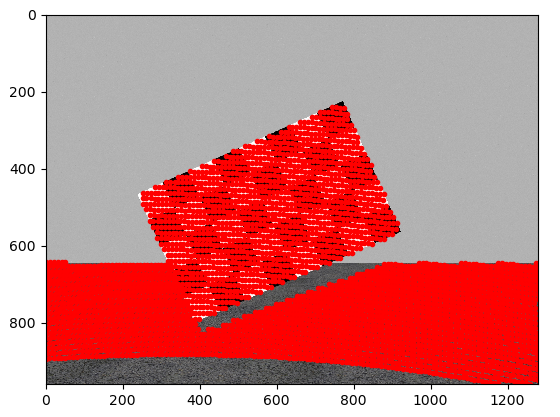

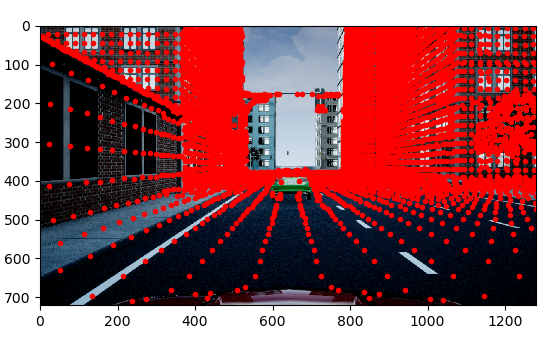

where [Xw, Yw, Zw] denotes a point in the 3D world coordinate system, and [u, v, w] are the homogeneous pixel coordinates of that point on the camera image plane, normalized so that w = 1. Because the camera has a limited field of view (FOV), not all points are mapped onto the image plane, so we keep track of the indices of the lidar points that land on valid pixel locations after the camera model transformation.

We start by projecting the lidar point cloud onto a checkerboard, which makes it straightforward to check whether the projection is correct. The detailed implementation can be found in `projectLidar2Camera`, which also returns the indices of the lidar points captured by the camera.
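The projection and FOV filtering described above can be sketched in NumPy as follows. This is a minimal sketch, not the repo's `projectLidar2Camera`: it assumes a column-vector convention (camera point = R·X + t, then pixel = K·(camera point) divided by its third component), a 3x3 intrinsic matrix `K`, and an image size given as `(height, width)`. The function name `project_points_to_image` is hypothetical.

```python
import numpy as np


def project_points_to_image(points_lidar, K, R, t, image_size):
    """Project Nx3 lidar points to pixel coordinates with a pinhole model.

    Assumptions (hypothetical, not taken from the repo): x_cam = R @ X + t,
    K is the 3x3 intrinsic matrix, image_size is (height, width).
    Returns (uv, idx): pixel coordinates of the visible points and their
    indices into points_lidar.
    """
    points_lidar = np.asarray(points_lidar, dtype=float)
    # Extrinsics: rigid transform from the lidar frame to the camera frame.
    pts_cam = points_lidar @ R.T + t
    # Only points with positive depth are in front of the camera.
    in_front = pts_cam[:, 2] > 0
    # Intrinsics: homogeneous pixel coordinates [u*w, v*w, w].
    uvw = pts_cam @ K.T
    # Normalize so that w == 1 (zero-depth points are masked out by in_front).
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = uvw[:, :2] / uvw[:, 2:3]
    height, width = image_size
    # Keep points that land inside the image bounds (0-based pixel coordinates).
    in_image = (
        in_front
        & (uv[:, 0] >= 0) & (uv[:, 0] < width)
        & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    )
    idx = np.flatnonzero(in_image)
    return uv[idx], idx
```

Points behind the camera are discarded before the bounds check because they would otherwise produce mirrored pixel coordinates. When comparing against MATLAB output, note that MATLAB image coordinates are 1-based and that older MATLAB transform objects such as `rigid3d` use a row-vector (post-multiply) convention, so `R` may need transposing and the pixel coordinates may differ by one.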

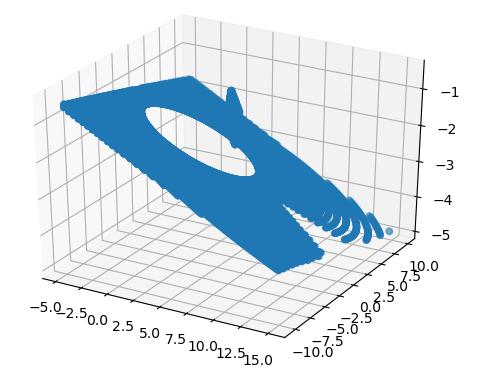

Once the lidar points can be projected onto the image plane, fusing the image's RGB information with the 3D lidar point cloud is straightforward: with Open3d, each lidar point that falls in pixel [u, v] is assigned the color values stored at [u, v] in the image.
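The color lookup can be sketched as below. It assumes the image is an HxWx3 `uint8` array in BGR channel order (as returned by OpenCV's `cv2.imread`) and that the pixel coordinates `uv` and point indices `idx` come from a lidar-to-image projection. The function name `colorize_points` and the default color for points outside the camera view are hypothetical choices.

```python
import numpy as np


def colorize_points(image_bgr, uv, idx, num_points, default=(0.0, 0.0, 0.0)):
    """Return a (num_points, 3) RGB array with values in [0, 1].

    image_bgr: HxWx3 uint8 image in BGR order (assumed).
    uv, idx:   pixel coordinates and point indices of the visible points.
    Points not seen by the camera keep the `default` color.
    """
    colors = np.tile(np.asarray(default, dtype=float), (num_points, 1))
    uv = np.asarray(uv, dtype=float)
    # Round to the nearest pixel: u indexes columns, v indexes rows.
    cols = np.clip(np.round(uv[:, 0]).astype(int), 0, image_bgr.shape[1] - 1)
    rows = np.clip(np.round(uv[:, 1]).astype(int), 0, image_bgr.shape[0] - 1)
    bgr = image_bgr[rows, cols].astype(float) / 255.0
    # Reverse the channel order (BGR -> RGB) and write into the visible points.
    colors[idx] = bgr[:, ::-1]
    return colors
```

The returned array can be attached to an Open3d point cloud with `pcd.colors = o3d.utility.Vector3dVector(colors)`, where `pcd` is an `o3d.geometry.PointCloud` holding the same points in the same order. Nearest-pixel rounding is the simplest choice; bilinear interpolation would give smoother colors at the cost of extra code.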

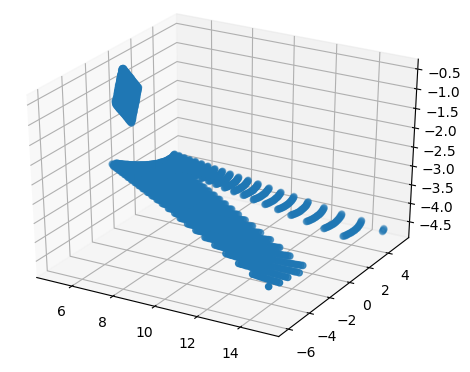

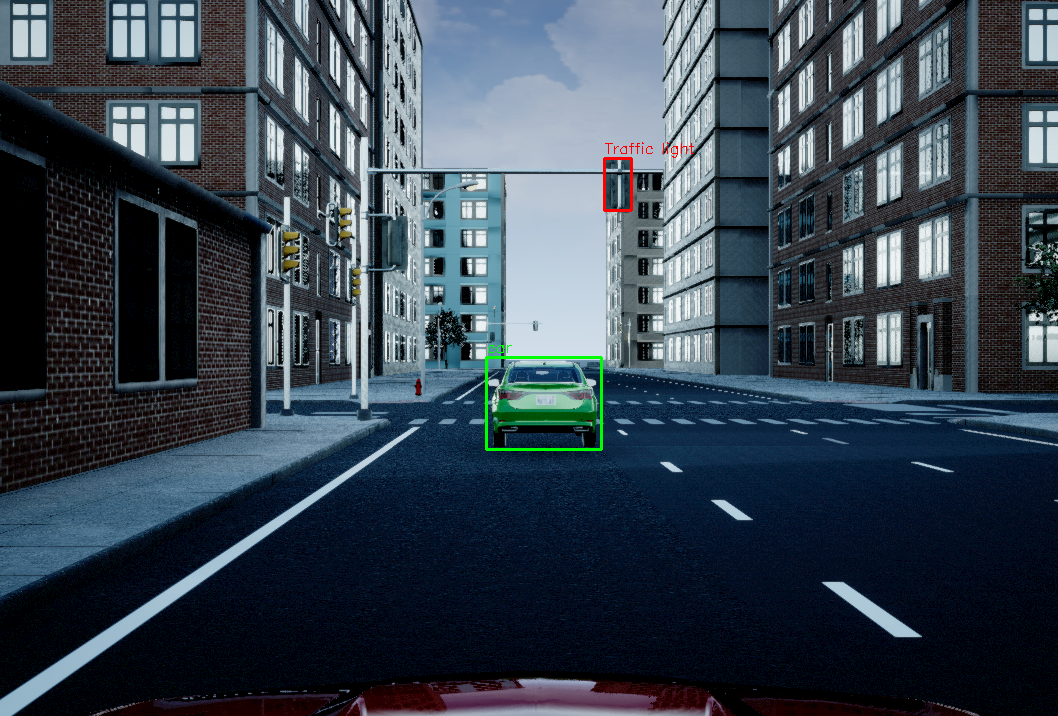

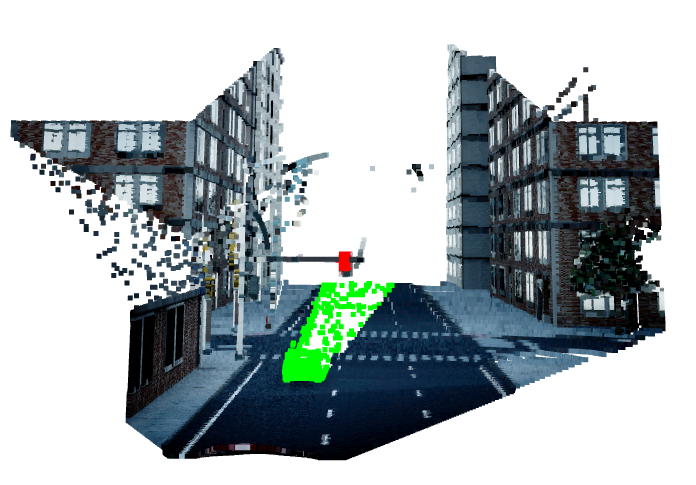

To demonstrate the limitation of this camera-lidar fusion method, I have visualized the objects detected in the image in the point cloud domain.
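Mapping an image detection into the point cloud can be sketched as selecting every lidar point whose projection falls inside the detection's image region. This minimal sketch assumes the detector outputs axis-aligned boxes `(u_min, v_min, u_max, v_max)` in pixels and that `uv`/`idx` come from a lidar-to-image projection; the name `points_in_box` is hypothetical.

```python
import numpy as np


def points_in_box(uv, idx, box):
    """Return indices of lidar points whose projection lies inside a 2D box.

    box = (u_min, v_min, u_max, v_max) in pixels (hypothetical detector output).
    Depth is ignored, so every point in the box's viewing frustum is selected,
    including background points behind the detected object.
    """
    uv = np.asarray(uv, dtype=float)
    u_min, v_min, u_max, v_max = box
    inside = (
        (uv[:, 0] >= u_min) & (uv[:, 0] <= u_max)
        & (uv[:, 1] >= v_min) & (uv[:, 1] <= v_max)
    )
    return np.asarray(idx)[inside]
```

Because the test ignores depth, a point on the car and a point on the road several meters behind it are both returned if they project into the same box, which is the failure mode discussed next.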

The traffic light is properly detected; however, the detection of the car is very poor. Every lidar point that projects into the car's detected image region is attributed to the car, including points on whatever lies behind it. There is no object behind the traffic light, but there is an object (the road surface) behind the car, so those road points are misclassified as part of the car. This is an intrinsic drawback of this method. Even though its performance is not ideal, it is still useful, for example to add RGB information to a 3D global lidar map built with SLAM.

Dependencies:

- Open3d

- python-pcl (can be installed with `pip install python-pcl`)