For a more in-depth best practices guide, go to the solution posted here.

This guide will take you through the steps necessary to continuously deliver your software to end users by leveraging Google Container Engine and Jenkins to orchestrate the software delivery pipeline. If you are not familiar with basic Kubernetes concepts, have a look at Kubernetes 101.

In order to accomplish this goal you will use the following Jenkins plugins:

- Jenkins Kubernetes Plugin - start Jenkins build executor containers in the Kubernetes cluster when builds are requested, terminate those containers when builds complete, freeing resources up for the rest of the cluster

- Jenkins Pipelines - define our build pipeline declaratively and keep it checked into source code management alongside our application code

- Google OAuth Plugin - allows you to add your Google OAuth credentials to Jenkins

In order to deploy the application with Kubernetes you will use the following resources:

- Deployments - replicates our application across our Kubernetes nodes and allows us to do a controlled rolling update of our software across the fleet of application instances

- Services - load balancing and service discovery for our internal services

- Ingress - external load balancing and SSL termination for our external service

- Secrets - secure storage of non-public configuration information, SSL certs specifically in our case

- A Google Cloud Platform Account

- Enable the Compute Engine, Container Engine, and Container Builder APIs

In this section you will start your Google Cloud Shell and clone the lab code repository to it.

-

Create a new Google Cloud Platform project: https://console.developers.google.com/project

-

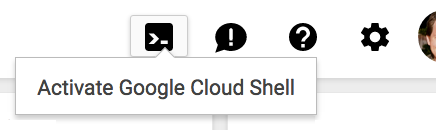

Click the Activate Cloud Shell icon in the top-right and wait for your shell to open.

If you are prompted with a Learn more message, click Continue to finish opening the Cloud Shell.

-

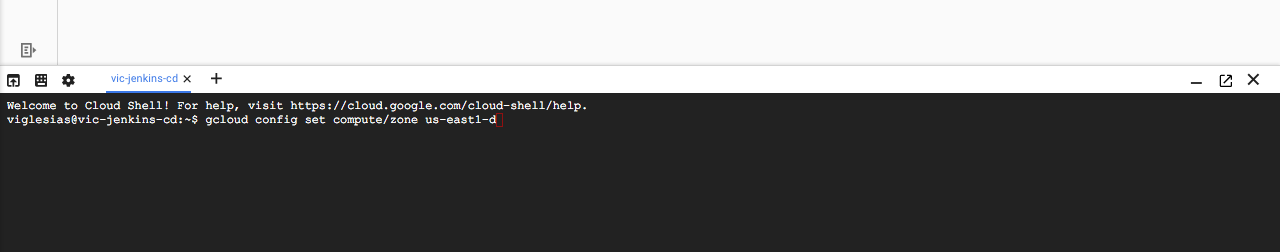

When the shell is open, use the gcloud command line interface tool to set your default compute zone:

gcloud config set compute/zone us-east1-d

Output (do not copy):

Updated property [compute/zone].

-

Set an environment variable with your project:

export GOOGLE_CLOUD_PROJECT=$(gcloud config get-value project)

Output (do not copy):

Your active configuration is: [cloudshell-...]

-

Clone the lab repository in your Cloud Shell, then `cd` into that directory:

git clone https://github.com/GoogleCloudPlatform/continuous-deployment-on-kubernetes.git

Output (do not copy):

Cloning into 'continuous-deployment-on-kubernetes'...
...

cd continuous-deployment-on-kubernetes

-

Create a service account on Google Cloud Platform (GCP).

Create a new service account because it's the recommended way to avoid using extra permissions in Jenkins and the cluster.

gcloud iam service-accounts create jenkins-sa \
    --display-name "jenkins-sa"

Output (do not copy):

Created service account [jenkins-sa].

-

Add required permissions to the service account, using predefined roles.

Most of these permissions are related to Jenkins' use of Cloud Build, and storing/retrieving build artifacts in Cloud Storage. Also, the service account needs to enable the Jenkins agent to read from a repo you will create in Cloud Source Repositories (CSR).

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
    --member "serviceAccount:jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com" \
    --role "roles/viewer"

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
    --member "serviceAccount:jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com" \
    --role "roles/source.reader"

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
    --member "serviceAccount:jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com" \
    --role "roles/storage.admin"

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
    --member "serviceAccount:jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com" \
    --role "roles/storage.objectAdmin"

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
    --member "serviceAccount:jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com" \
    --role "roles/cloudbuild.builds.editor"

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
    --member "serviceAccount:jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com" \
    --role "roles/container.developer"

You can check the permissions added using IAM & admin in Cloud Console.

-

Export the service account credentials to a JSON key file in Cloud Shell:

gcloud iam service-accounts keys create ~/jenkins-sa-key.json \
    --iam-account "jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com"

Output (do not copy):

created key [...] of type [json] as [/home/.../jenkins-sa-key.json] for [jenkins-sa@myproject.iam.gserviceaccount.com]

-

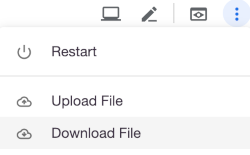

Download the JSON key file to your local machine.

Click Download File from More on the Cloud Shell toolbar:

-

Enter the File path as `jenkins-sa-key.json` and click Download.

The file will be downloaded to your local machine, for use later.
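The six role bindings above differ only in the role name, so they could also be expressed as a loop. The following is a minimal sketch, not part of the lab: the helper names `jenkins_sa_email` and `bind_jenkins_roles` and the `GCLOUD` override variable are assumptions introduced for illustration. The role list is copied from the commands above.

```shell
# Roles copied from the add-iam-policy-binding commands in this lab.
JENKINS_SA_ROLES="roles/viewer roles/source.reader roles/storage.admin roles/storage.objectAdmin roles/cloudbuild.builds.editor roles/container.developer"

# Hypothetical helper: print the jenkins-sa email for a given project ID.
jenkins_sa_email() {
  printf 'jenkins-sa@%s.iam.gserviceaccount.com\n' "$1"
}

# Hypothetical helper: bind every role in JENKINS_SA_ROLES to the account.
# GCLOUD (an assumption, not a gcloud feature) defaults to the real CLI;
# set GCLOUD=echo to print the arguments instead of running gcloud.
bind_jenkins_roles() (
  project="$1"
  member="serviceAccount:$(jenkins_sa_email "$project")"
  for role in $JENKINS_SA_ROLES; do
    "${GCLOUD:-gcloud}" projects add-iam-policy-binding "$project" \
      --member "$member" --role "$role" || exit 1
  done
)

# Dry run:  GCLOUD=echo bind_jenkins_roles "$GOOGLE_CLOUD_PROJECT"
# Real run: bind_jenkins_roles "$GOOGLE_CLOUD_PROJECT"
```

Running the dry run first makes it easy to compare the generated arguments with the commands listed in the lab before anything is changed in the project.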

-

Provision the cluster with `gcloud`:

Use Google Kubernetes Engine (GKE) to create and manage your Kubernetes cluster, named `jenkins-cd`. Use the service account created earlier.

gcloud container clusters create jenkins-cd \
    --num-nodes 2 \
    --machine-type n1-standard-2 \
    --cluster-version 1.13 \
    --service-account "jenkins-sa@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com"

Output (do not copy):

NAME        LOCATION    MASTER_VERSION  MASTER_IP     MACHINE_TYPE   NODE_VERSION   NUM_NODES  STATUS
jenkins-cd  us-east1-d  1.13.10-gke.7   35.229.29.69  n1-standard-2  1.13.10-gke.7  2          RUNNING

-

Once that operation completes, retrieve the credentials for your cluster.

gcloud container clusters get-credentials jenkins-cd

Output (do not copy):

Fetching cluster endpoint and auth data.
kubeconfig entry generated for jenkins-cd.

-

Confirm that the cluster is running and `kubectl` is working by listing pods:

kubectl get pods

Output (do not copy):

No resources found.

You would see an error if the cluster was not created, or you did not have permissions.

-

Add yourself as a cluster administrator in the cluster's RBAC so that you can give Jenkins permissions in the cluster:

kubectl create clusterrolebinding cluster-admin-binding --clusterrole=cluster-admin --user=$(gcloud config get-value account)

Output (do not copy):

Your active configuration is: [cloudshell-...]
clusterrolebinding.rbac.authorization.k8s.io/cluster-admin-binding created

In this lab, you will use Helm to install Jenkins with a stable chart. Helm is a package manager that makes it easy to configure and deploy Kubernetes applications. Once you have Jenkins installed, you'll be able to set up your CI/CD pipeline.

-

Download and install the helm binary

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.14.3-linux-amd64.tar.gz

-

Unzip the file to your local system:

tar zxfv helm-v2.14.3-linux-amd64.tar.gz
cp linux-amd64/helm .

-

Grant Tiller, the server side of Helm, the cluster-admin role in your cluster:

kubectl create serviceaccount tiller --namespace kube-system
kubectl create clusterrolebinding tiller-admin-binding --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

Output (do not copy):

serviceaccount/tiller created
clusterrolebinding.rbac.authorization.k8s.io/tiller-admin-binding created

-

Initialize Helm. This ensures that the server side of Helm (Tiller) is properly installed in your cluster.

./helm init --service-account=tiller

Output (do not copy):

...
Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.
...

-

Update your local repo with the latest charts.

./helm repo update

Output (do not copy):

Hang tight while we grab the latest from your chart repositories...
...Skip local chart repository
...Successfully got an update from the "stable" chart repository
Update Complete.

-

Ensure Helm is properly installed by running the following command. You should see version `v2.14.3` appear for both the server and the client:

./helm version

Output (do not copy):

Client: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}
Server: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}

If you don't see the Server version immediately, wait a few seconds and try again.
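The client/server comparison described above can also be checked mechanically rather than by eye. This is a rough sketch that assumes the Helm 2 output format shown in this lab, with the client and server reported on separate lines; the function names `helm_semvers` and `helm_versions_match` are hypothetical.

```shell
# Print each SemVer value found in `helm version` output read from stdin.
helm_semvers() {
  sed -n 's/.*SemVer:"\([^"]*\)".*/\1/p'
}

# Succeed only if stdin holds exactly two SemVer values and they are equal
# (one for the client, one for the server).
helm_versions_match() (
  versions=$(helm_semvers)
  count=$(printf '%s\n' "$versions" | awk 'NF { n++ } END { print n + 0 }')
  unique=$(printf '%s\n' "$versions" | sort -u | awk 'NF { n++ } END { print n + 0 }')
  [ "$count" -eq 2 ] && [ "$unique" -eq 1 ]
)

# Usage: ./helm version | helm_versions_match && echo "client and server agree"
```

If the server line is missing because Tiller is still starting, only one version is found and the check fails, which matches the advice to wait a few seconds and retry.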

You will use a custom values file to add the GCP-specific plugin necessary to use service account credentials to reach your Cloud Source Repository.

-

Use the Helm CLI to deploy the chart with your configuration set.

./helm install -n cd stable/jenkins -f jenkins/values.yaml --version 1.7.3 --wait

Output (do not copy):

...
For more information on running Jenkins on Kubernetes, visit:
https://cloud.google.com/solutions/jenkins-on-container-engine

-

The Jenkins pod STATUS should change to `Running` when it's ready:

kubectl get pods

Output (do not copy):

NAME                         READY  STATUS   RESTARTS  AGE
cd-jenkins-7c786475dd-vbhg4  1/1    Running  0         1m

-

Configure the Jenkins service account to be able to deploy to the cluster.

kubectl create clusterrolebinding jenkins-deploy --clusterrole=cluster-admin --serviceaccount=default:cd-jenkins

Output (do not copy):

clusterrolebinding.rbac.authorization.k8s.io/jenkins-deploy created

-

Set up port forwarding to the Jenkins UI, from Cloud Shell:

export JENKINS_POD_NAME=$(kubectl get pods -l "app.kubernetes.io/component=jenkins-master" -o jsonpath="{.items[0].metadata.name}")
kubectl port-forward $JENKINS_POD_NAME 8080:8080 >> /dev/null &

-

Now, check that the Jenkins Service was created properly:

kubectl get svc

Output (do not copy):

NAME              CLUSTER-IP    EXTERNAL-IP  PORT(S)    AGE
cd-jenkins        10.35.249.67  <none>       8080/TCP   3h
cd-jenkins-agent  10.35.248.1   <none>       50000/TCP  3h
kubernetes        10.35.240.1   <none>       443/TCP    9h

This Jenkins configuration is using the Kubernetes Plugin, so that builder nodes will be automatically launched as necessary when the Jenkins master requests them. Upon completion of the work, the builder nodes will be automatically turned down, and their resources added back to the cluster's resource pool.

Notice that these services expose ports `8080` and `50000` for any pods that match the `selector`. This will expose the Jenkins web UI and builder/agent registration ports within the Kubernetes cluster. Additionally, the `cd-jenkins` service is exposed using a ClusterIP so that it is not accessible from outside the cluster.

-

The Jenkins chart will automatically create an admin password for you. To retrieve it, run:

printf '%s\n' "$(kubectl get secret cd-jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode)"

-

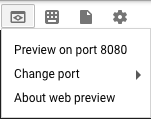

To get to the Jenkins user interface, click on the Web Preview button in Cloud Shell, then click Preview on port 8080.

You should now be able to log in with username admin and your auto-generated password.
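The password command earlier in this section pipes the secret through `base64 --decode` because Kubernetes stores Secret values base64-encoded. The sketch below shows only that decoding step on a made-up value; `decode_secret_field` is a hypothetical helper name and `c2VjcmV0` is not a real password.

```shell
# Hypothetical helper: decode one base64-encoded Secret field read from stdin.
decode_secret_field() {
  base64 --decode
}

# Made-up sample value: "c2VjcmV0" is the base64 encoding of "secret".
printf '%s' 'c2VjcmV0' | decode_secret_field
echo
```

In the lab itself, the input to the decoder would be the `jenkins-admin-password` field returned by `kubectl get secret cd-jenkins`.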

You've got a Kubernetes cluster managed by GKE. You've deployed:

- a Jenkins Deployment

- a (non-public) service that exposes Jenkins to its agent containers

You have the tools to build a continuous deployment pipeline. Now you need a sample app to deploy continuously.

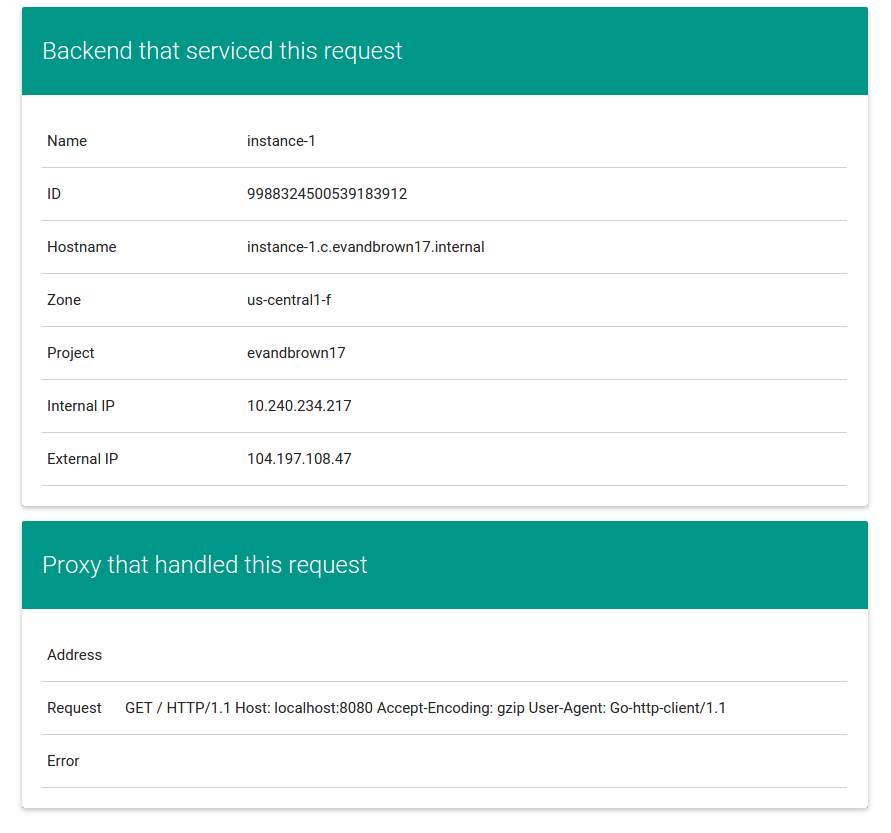

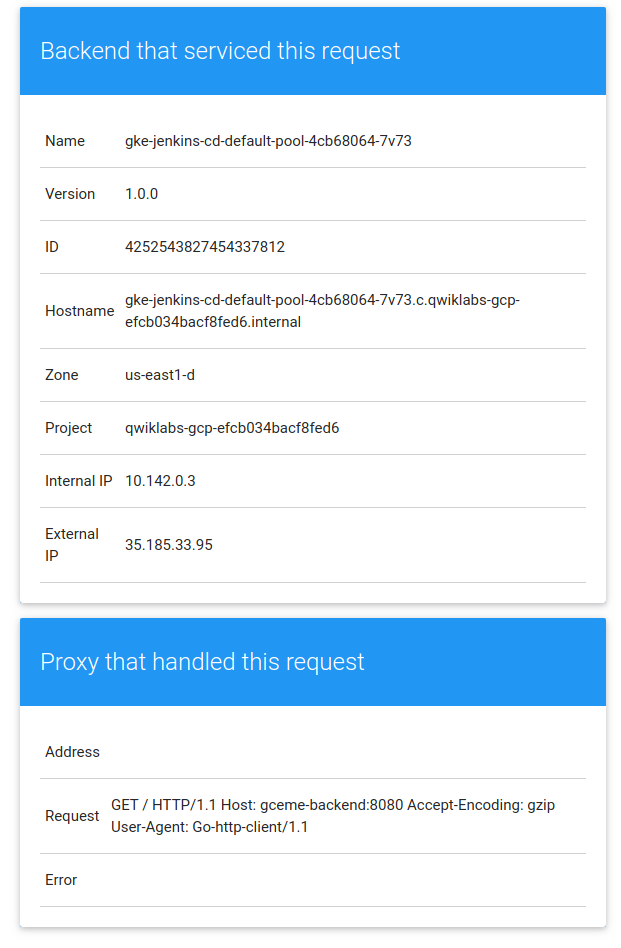

You'll use a very simple sample application - gceme - as the basis for your CD pipeline. gceme is written in Go and is located in the sample-app directory in this repo. When you run the gceme binary on a GCE instance, it displays the instance's metadata in a pretty card:

The binary supports two modes of operation, designed to mimic a microservice. In backend mode, gceme will listen on a port (8080 by default) and return GCE instance metadata as JSON, with content-type=application/json. In frontend mode, gceme will query a backend gceme service and render that JSON in the UI you saw above. It looks roughly like this:

    -----------      ------------      ~~~~~~~~~~~~        -----------
    |         |      |          |      |          |        |         |
    |  user   | ---> |   gceme  | ---> | lb/proxy | -----> |  gceme  |
    |(browser)|      |(frontend)|      |(optional)|   |    |(backend)|
    |         |      |          |      |          |   |    |         |
    -----------      ------------      ~~~~~~~~~~~~   |    -----------
                                                      |    -----------
                                                      |    |         |
                                                      |--> |  gceme  |
                                                           |(backend)|
                                                           |         |
                                                           -----------

Both the frontend and backend modes of the application support two additional URLs:

- `/version` prints the version of the binary (declared as a const in `main.go`)

- `/healthz` reports the health of the application. In frontend mode, health will be OK if the backend is reachable.

In this section you will deploy the gceme frontend and backend to Kubernetes using Kubernetes manifest files (included in this repo) that describe the environment that the gceme binary/Docker image will be deployed to. They use a default gceme Docker image that you will be updating with your own in a later section.

You'll have two primary environments - canary and production - and use Kubernetes to manage them.

Note: The manifest files for this section of the tutorial are in `sample-app/k8s`. You are encouraged to open and read each one before creating it per the instructions.

-

First change directories to the sample-app, back in Cloud Shell:

cd sample-app

-

Create the namespace for production:

kubectl create ns production

Output (do not copy):

namespace/production created

-

Create the production Deployments for frontend and backend:

kubectl --namespace=production apply -f k8s/production

Output (do not copy):

deployment.extensions/gceme-backend-production created
deployment.extensions/gceme-frontend-production created

-

Create the canary Deployments for frontend and backend:

kubectl --namespace=production apply -f k8s/canary

Output (do not copy):

deployment.extensions/gceme-backend-canary created
deployment.extensions/gceme-frontend-canary created

-

Create the Services for frontend and backend:

kubectl --namespace=production apply -f k8s/services

Output (do not copy):

service/gceme-backend created
service/gceme-frontend created

-

Scale the production frontend Deployment:

kubectl --namespace=production scale deployment gceme-frontend-production --replicas=4

Output (do not copy):

deployment.extensions/gceme-frontend-production scaled

-

Retrieve the External IP for the production services:

This field may take a few minutes to appear as the load balancer is being provisioned.

kubectl --namespace=production get service gceme-frontend

Output (do not copy):

NAME            TYPE          CLUSTER-IP    EXTERNAL-IP   PORT(S)       AGE
gceme-frontend  LoadBalancer  10.35.254.91  35.196.48.78  80:31088/TCP  1m

-

Confirm that both services are working by opening the frontend `EXTERNAL-IP` in your browser.

-

Poll the production endpoint's `/version` URL.

Open a new Cloud Shell terminal by clicking the `+` button to the right of the current terminal's tab.

export FRONTEND_SERVICE_IP=$(kubectl get -o jsonpath="{.status.loadBalancer.ingress[0].ip}" --namespace=production services gceme-frontend)
while true; do curl http://$FRONTEND_SERVICE_IP/version; sleep 3; done

Output (do not copy):

1.0.0
1.0.0
1.0.0

You should see that all requests are serviced by v1.0.0 of the application.

Leave this running in the second terminal so you can easily observe rolling updates in the next section.

-

Return to the first terminal/tab in Cloud Shell.

Here you'll create your own copy of the gceme sample app in Cloud Source Repositories.

-

Initialize the git repository.

Make sure to work from the `sample-app` directory of the repo you cloned previously.

git init
git config credential.helper gcloud.sh
gcloud source repos create gceme

-

Add a git remote for the new repo in Cloud Source Repositories.

git remote add origin https://source.developers.google.com/p/$GOOGLE_CLOUD_PROJECT/r/gceme

-

Ensure git is able to identify you:

git config --global user.email "YOUR-EMAIL-ADDRESS"
git config --global user.name "YOUR-NAME"

-

Add, commit, and push all the files:

git add .
git commit -m "Initial commit"
git push origin master

Output (do not copy):

To https://source.developers.google.com/p/myproject/r/gceme
 * [new branch]      master -> master

You'll now use Jenkins to define and run a pipeline that will test, build, and deploy your copy of gceme to your Kubernetes cluster. You'll approach this in phases. Let's get started with the first.

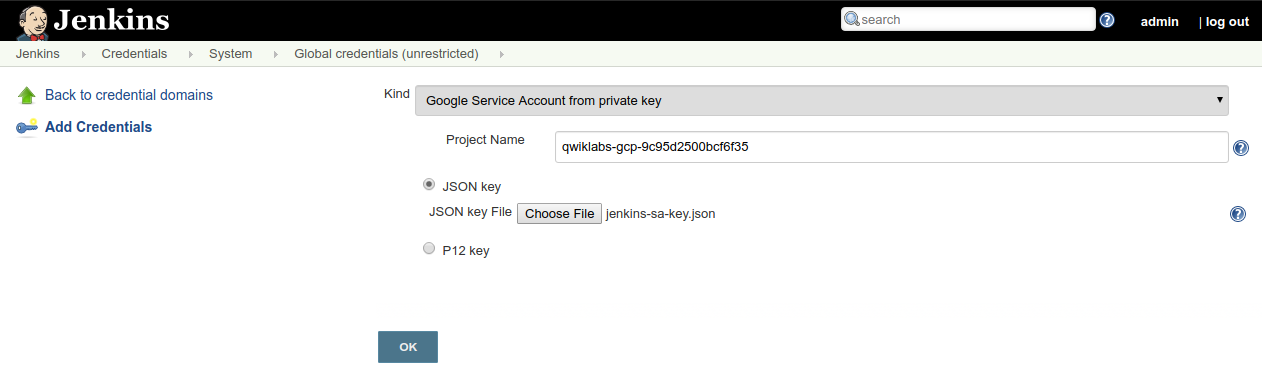

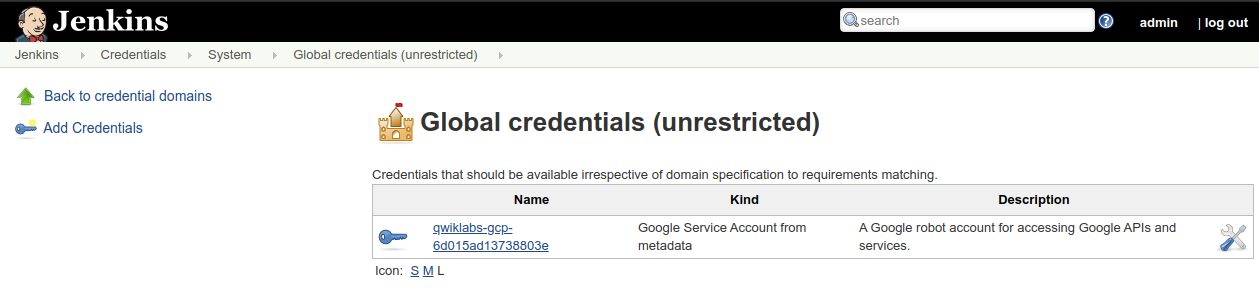

First, you will need to configure GCP credentials in order for Jenkins to be able to access the code repository:

-

In the Jenkins UI, click Credentials on the left

-

Click the (global) link

-

Click Add Credentials on the left

-

From the Kind dropdown, select `Google Service Account from private key`

-

Enter the Project Name from your project

-

Leave JSON key selected, and click Choose File.

-

Select the `jenkins-sa-key.json` file downloaded earlier, then click Open.

-

Click OK

You should now see 1 global credential. Make a note of the name of the credential, as you will reference this in Phase 2.

This lab uses Jenkins Pipeline to define builds as Groovy scripts.

Navigate to your Jenkins UI and follow these steps to configure a Pipeline job (in this lab the UI is reached through the Cloud Shell Web Preview on port 8080, set up earlier):

-

Click the Jenkins link in the top left toolbar of the UI

-

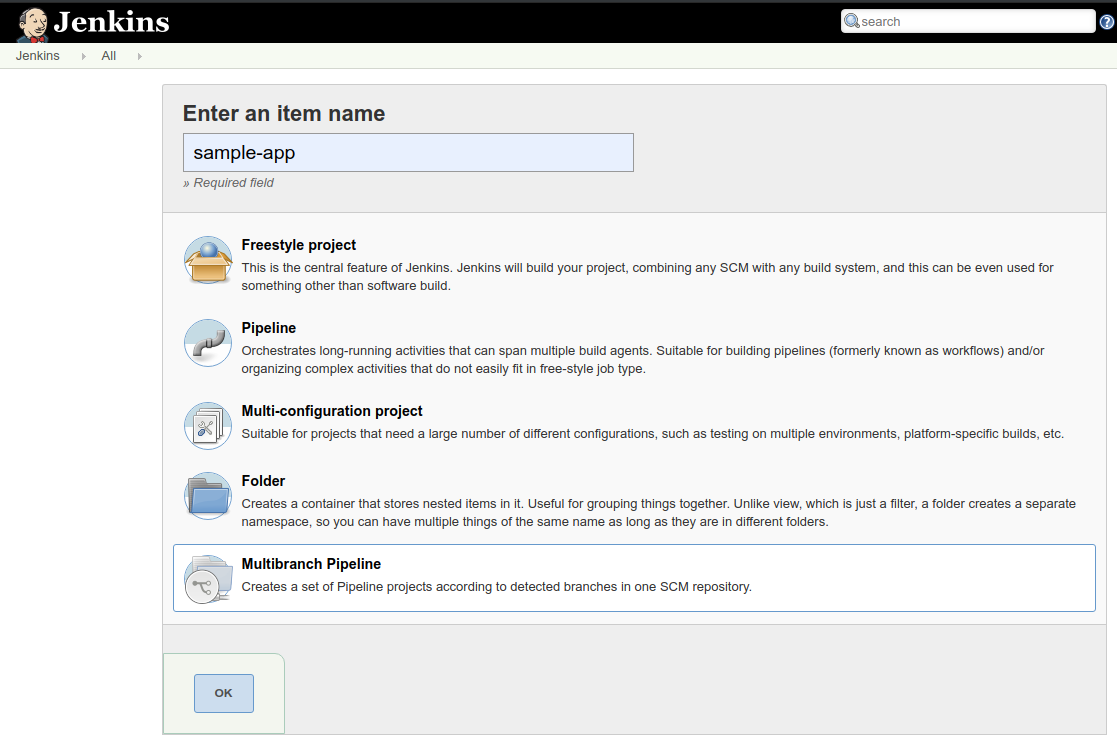

Click the New Item link in the left nav

-

For item name use `sample-app`, choose the Multibranch Pipeline option, then click OK

-

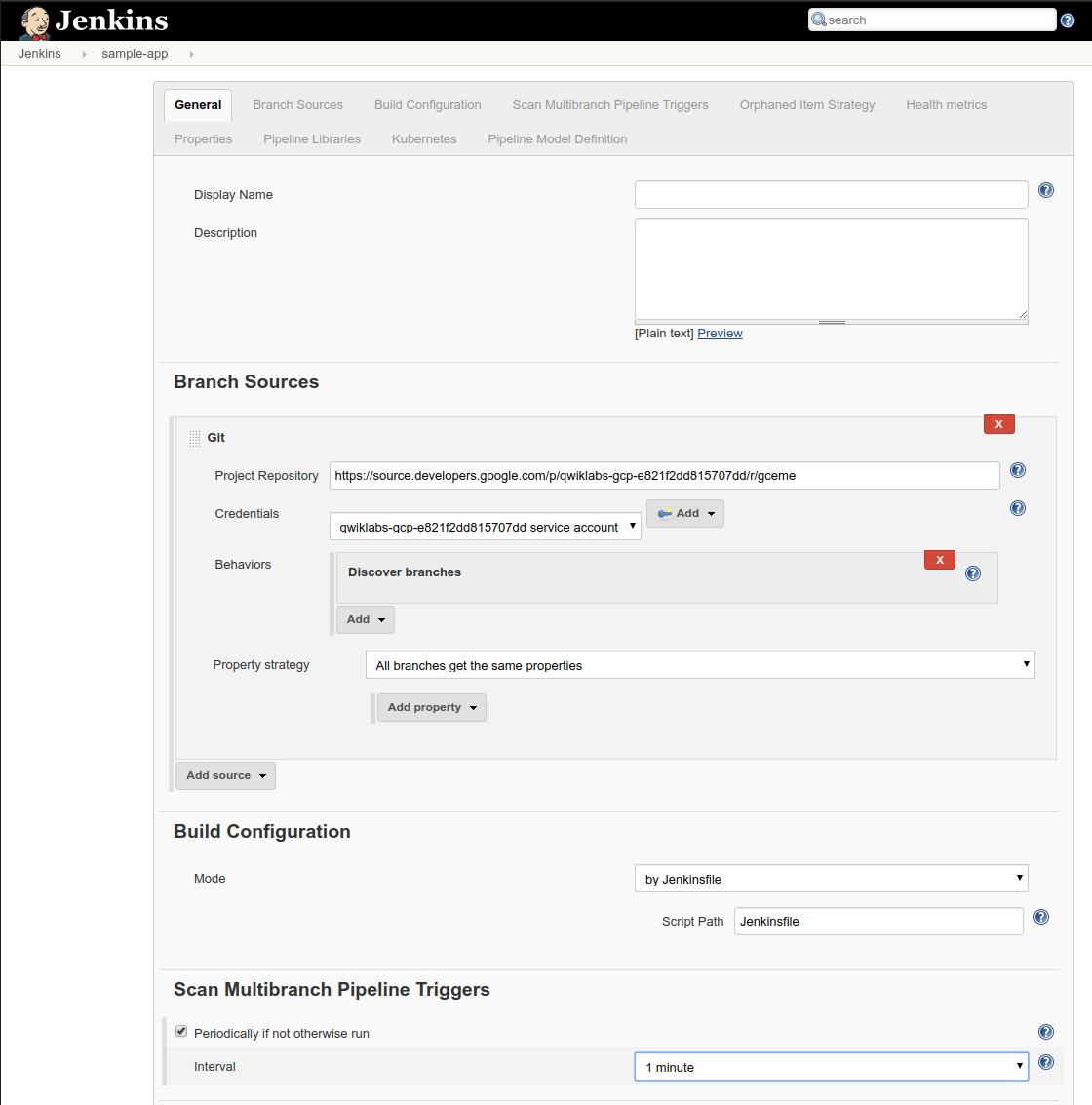

Click Add source and choose git

-

Paste the HTTPS clone URL of your `gceme` repo on Cloud Source Repositories into the Project Repository field. It will look like: https://source.developers.google.com/p/[REPLACE_WITH_YOUR_PROJECT_ID]/r/gceme

-

From the Credentials dropdown, select the name of the credential from Phase 1. It should have the format `PROJECT_ID service account`.

-

Under the Scan Multibranch Pipeline Triggers section, check the Periodically if not otherwise run box, then set the Interval value to `1 minute`.

-

Click Save, leaving all other options with default values.

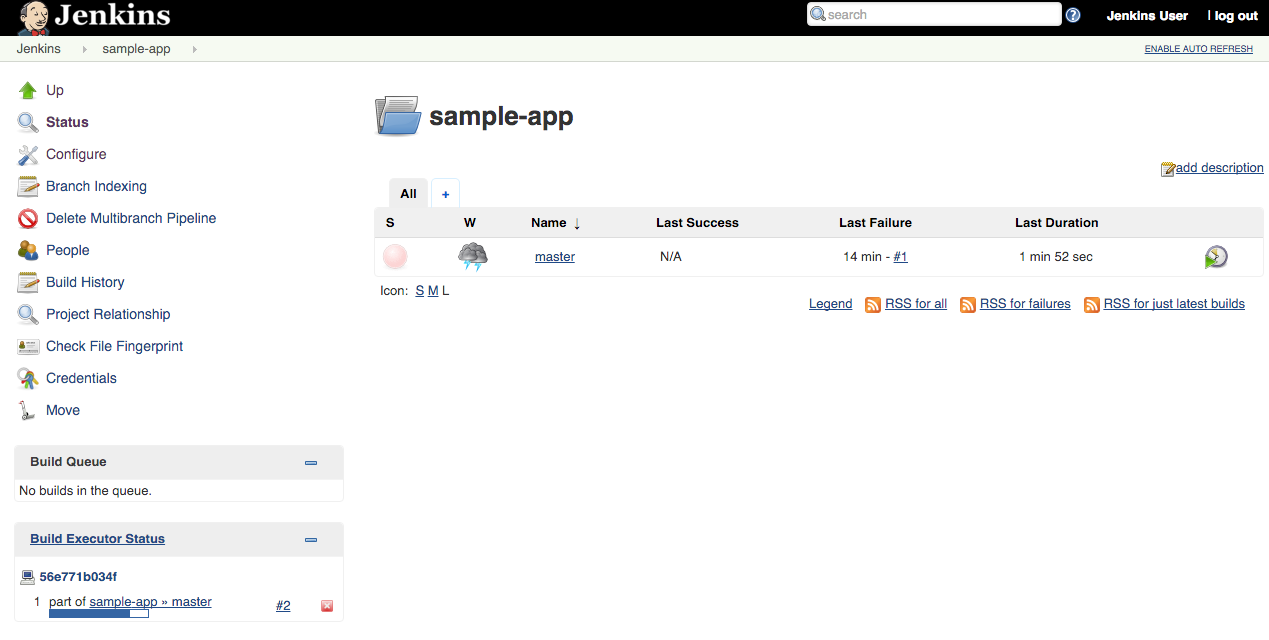

A Branch indexing job was kicked off to identify any branches in your repository.

-

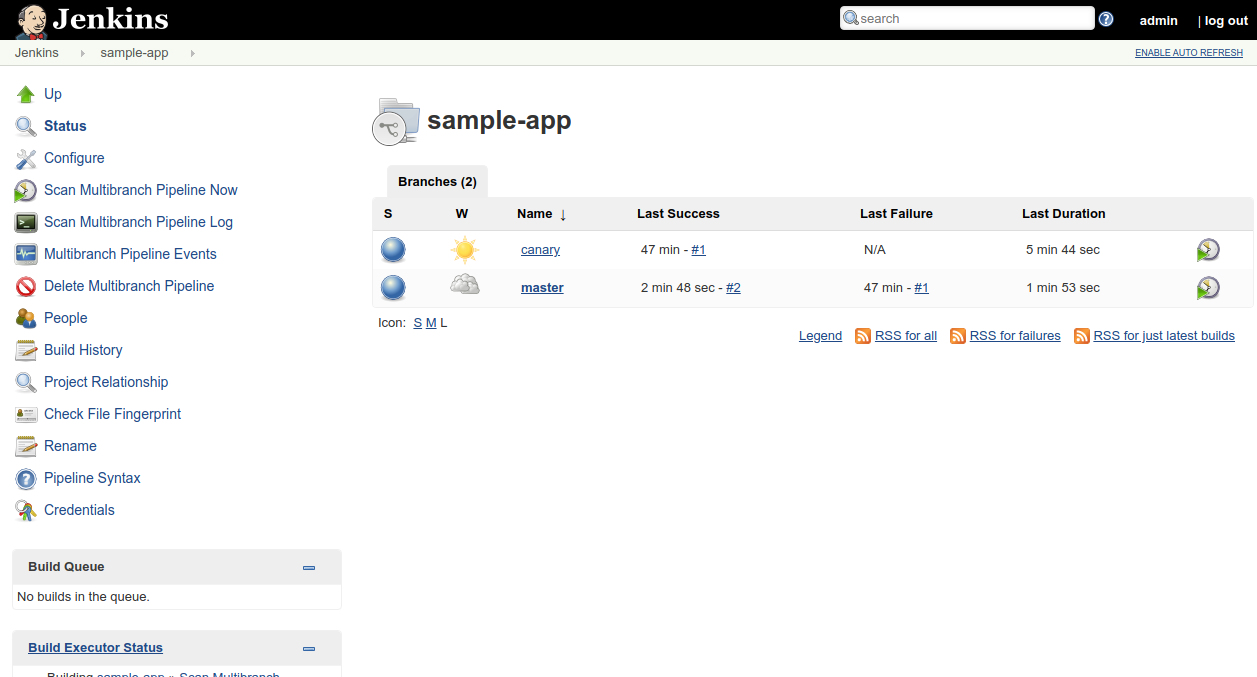

Click Jenkins > sample-app, in the top menu.

You should see the `master` branch now has a job created for it.

The first run of the job will fail, until the project name is set properly in the `Jenkinsfile` in the next step.

-

Create a branch for the canary environment called `canary`

git checkout -b canary

Output (do not copy):

Switched to a new branch 'canary'

The `Jenkinsfile` is written using the Jenkins Workflow DSL, which is Groovy-based. It allows an entire build pipeline to be expressed in a single script that lives alongside your source code and supports powerful features like parallelization, stages, and user input.

-

Update your `Jenkinsfile` script with the correct PROJECT environment value.

Be sure to replace `REPLACE_WITH_YOUR_PROJECT_ID` with your project name.

Save your changes, but don't commit the new `Jenkinsfile` change just yet. You'll make one more change in the next section, then commit and push them together.
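The placeholder replacement above can be done in an editor, or scripted. The following is a minimal sketch, assuming the project ID contains only lowercase letters, digits, and hyphens (so it is safe inside a `sed` expression); `fill_project_id` is a hypothetical helper name, not something provided by the lab.

```shell
# Hypothetical helper: print FILE with the placeholder replaced by PROJECT_ID.
# Usage: fill_project_id PROJECT_ID FILE
# Writes to stdout, so the original file is left untouched.
fill_project_id() {
  sed "s/REPLACE_WITH_YOUR_PROJECT_ID/$1/g" "$2"
}

# Possible usage from the sample-app directory:
#   fill_project_id "$GOOGLE_CLOUD_PROJECT" Jenkinsfile > Jenkinsfile.new
#   mv Jenkinsfile.new Jenkinsfile
```

Writing to a new file first, rather than editing in place, leaves a chance to inspect the result before it replaces the `Jenkinsfile`.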

Phase 4: Deploy a canary release to canary

Now that your pipeline is working, it's time to make a change to the gceme app and let your pipeline test, package, and deploy it.

The canary environment is rolled out as a percentage of the pods behind the production load balancer. In this case we have 1 out of 5 of our frontends running the canary code and the other 4 running the production code. This allows you to ensure that the canary code is not negatively affecting users before rolling out to your full fleet. You can use the labels env: production and env: canary in Google Cloud Monitoring in order to monitor the performance of each version individually.
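As a quick arithmetic check of the 1-in-5 split described above, the expected canary share can be computed from the replica counts. This is only a sketch: `canary_percent` is a hypothetical helper, and the result is the share of pods, which approximates the share of requests only if the load balancer spreads traffic evenly across pods.

```shell
# Hypothetical helper: integer percentage of frontend pods running canary code.
# Usage: canary_percent CANARY_REPLICAS PRODUCTION_REPLICAS
canary_percent() {
  echo $(( 100 * $1 / ($1 + $2) ))
}

canary_percent 1 4   # 1 canary pod, 4 production pods; prints 20
```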

- In the `sample-app` repository on your workstation open `html.go` and replace the word `blue` with `orange` (there should be exactly two occurrences):

//snip
<div class="card orange">
<div class="card-content white-text">
<div class="card-title">Backend that serviced this request</div>
//snip

-

In the same repository, open `main.go` and change the version number from `1.0.0` to `2.0.0`:

//snip
const version string = "2.0.0"
//snip

-

Push the version 2 changes to the repo:

git add Jenkinsfile html.go main.go
git commit -m "Version 2"
git push origin canary

-

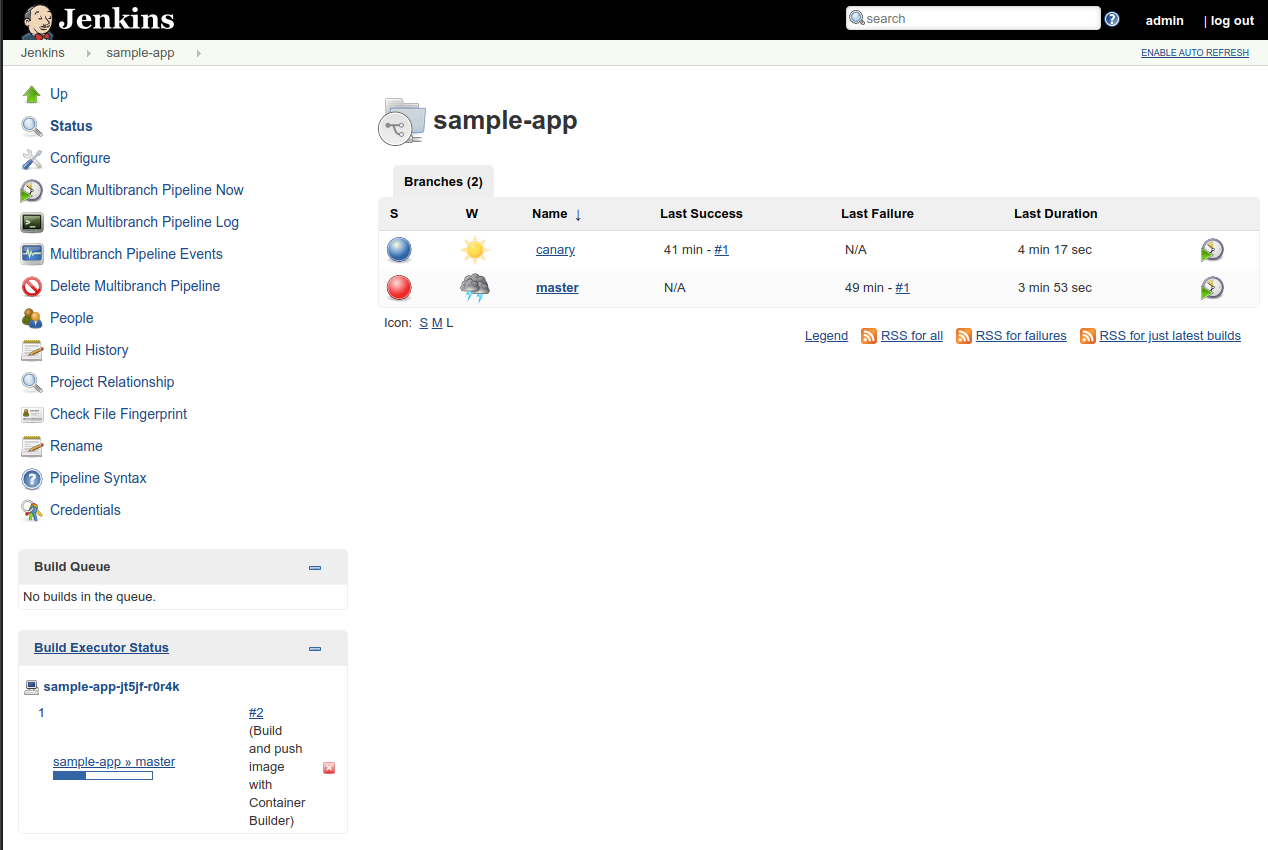

Revisit your sample-app in the Jenkins UI.

Navigate back to your Jenkins `sample-app` job. Notice a canary pipeline job has been created.

-

Follow the canary build output.

- Click the Canary link.

- Click the #1 link in the Build History box, on the lower left.

- Click Console Output from the left-side menu.

- Scroll down to follow.

-

Track the output for a few minutes.

When you see `Finished: SUCCESS`, open the Cloud Shell terminal that you left polling `/version`. Observe that some requests are now handled by the canary `2.0.0` version.

1.0.0
1.0.0
1.0.0
1.0.0
2.0.0
2.0.0
1.0.0
1.0.0
1.0.0
1.0.0

You have now rolled out that change, version 2.0.0, to a subset of users.

-

Continue the rollout to the rest of your users.

Back in the other Cloud Shell terminal, merge the `canary` branch into `master`, then push it to the Git server.

git checkout master
git merge canary
git push origin master

-

Watch the pipelines in the Jenkins UI handle the change.

Within a minute or so, you should see a new job in the Build Queue and Build Executor.

-

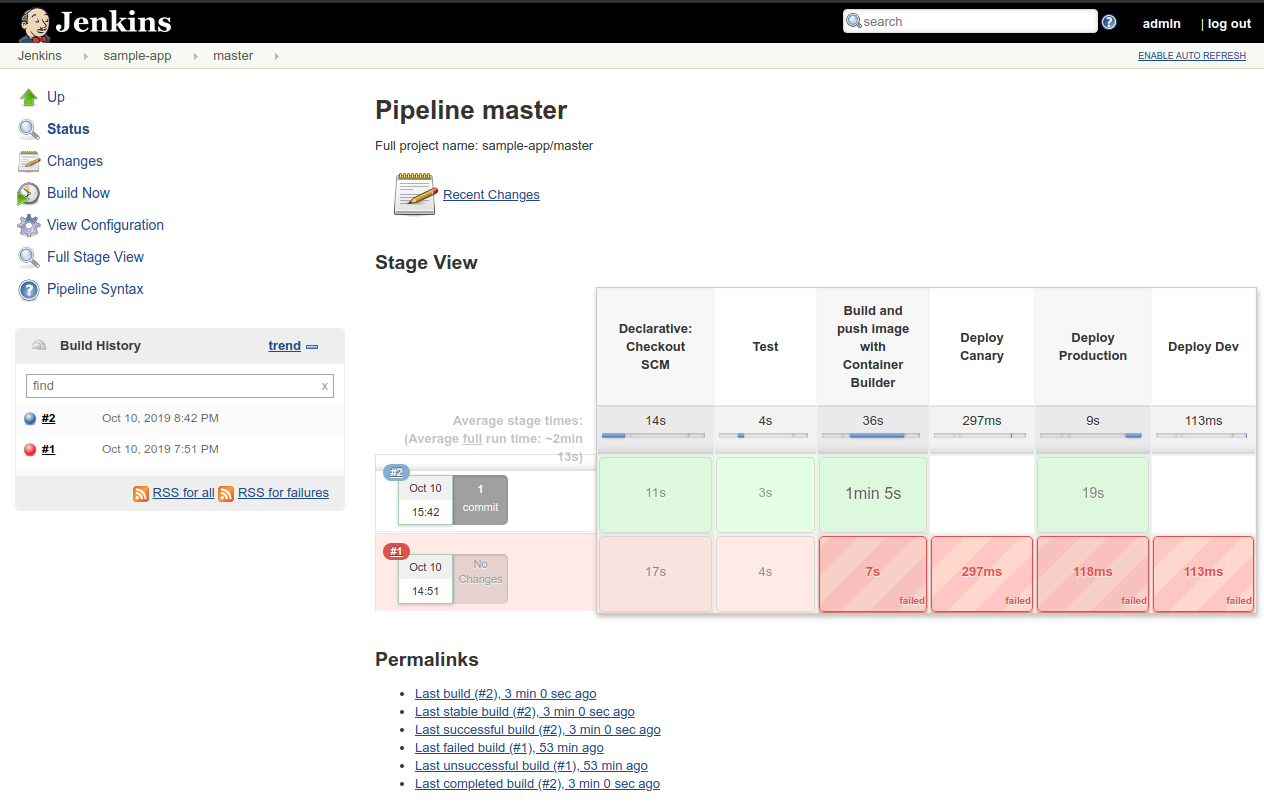

Clicking on the `master` link will show you the stages of your pipeline as well as pass/fail and timing characteristics.

You can see the failed master job #1, and the successful master job #2.

-

Check the Cloud Shell terminal responses again.

In Cloud Shell, open the terminal polling the `/version` URL and observe that the new version, `2.0.0`, has been rolled out and is serving all requests.

2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
2.0.0
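When watching the polling terminal, it can be easier to judge the rollout from counts than from a scrolling list. A small sketch, assuming one version string per line as in the polling output; `tally_versions` is a hypothetical helper name, and the sample input is the ten responses shown during the canary stage.

```shell
# Hypothetical helper: count how many responses reported each version.
# Reads one version string per line on stdin; prints "VERSION COUNT" lines.
tally_versions() {
  sort | uniq -c | awk '{ print $2, $1 }'
}

# The ten responses shown during the canary stage of this lab.
printf '%s\n' 1.0.0 1.0.0 1.0.0 1.0.0 2.0.0 2.0.0 1.0.0 1.0.0 1.0.0 1.0.0 | tally_versions
# prints:
# 1.0.0 8
# 2.0.0 2
```

Two of ten responses coming from `2.0.0` is consistent with one canary pod out of five frontends; after the merge to `master`, the same tally should show only `2.0.0`.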

If you want to understand the pipeline stages in greater detail, you can look through the Jenkinsfile in the sample-app project directory.

Oftentimes changes will not be so trivial that they can be pushed directly to the canary environment. In order to create a development environment from a long-lived feature branch, all you need to do is push it up to the Git server. Jenkins will automatically deploy your development environment.

In this case you will not use a load balancer, so you'll have to access your application using kubectl proxy. This proxy authenticates itself with the Kubernetes API and proxies requests from your local machine to the service in the cluster without exposing your service to the internet.

-

Create another branch and push it up to the Git server

git checkout -b new-feature
git push origin new-feature

-

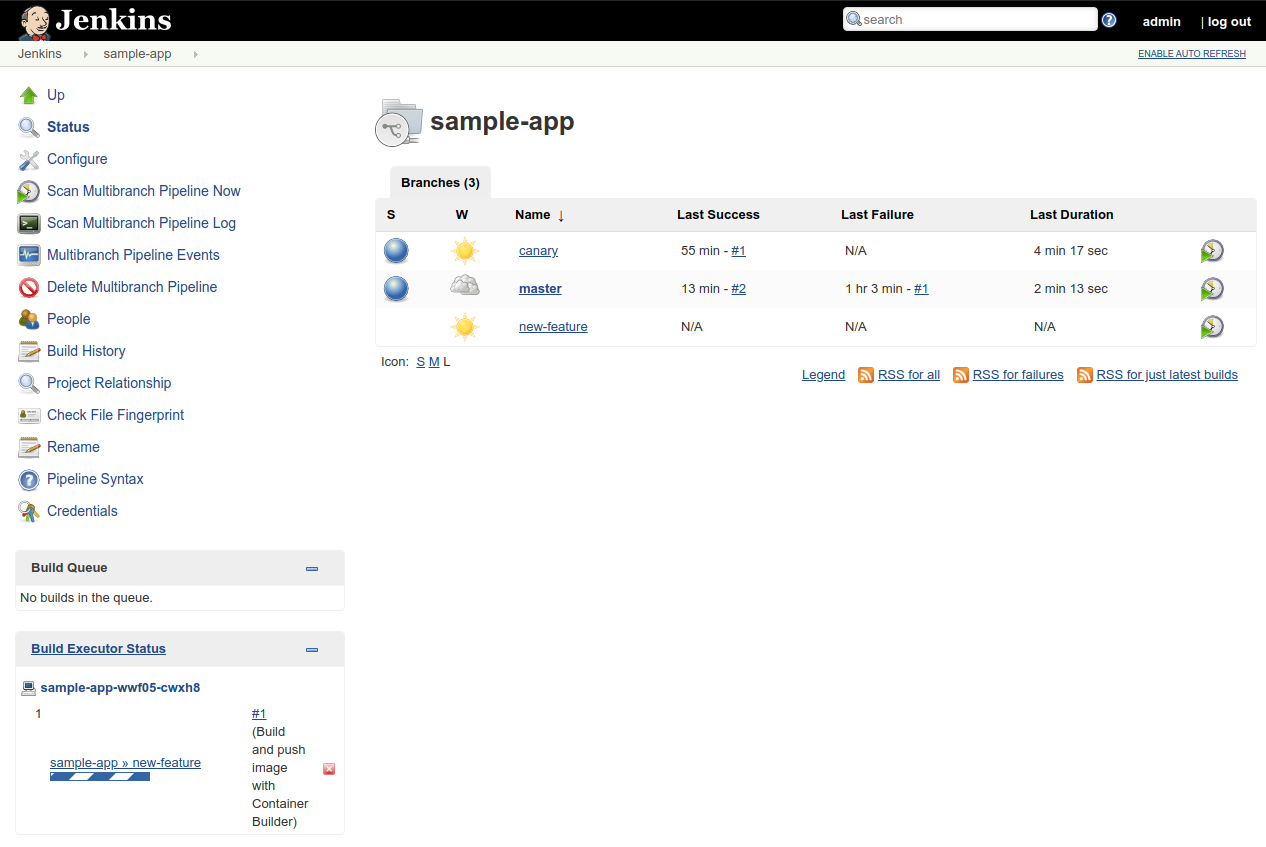

Open Jenkins in your web browser and navigate back to sample-app.

You should see that a new job called `new-feature` has been created, and this job is creating your new environment.

-

Navigate to the console output of the first build of this new job by:

- Click the new-feature link in the job list.

- Click the #1 link in the Build History list on the left of the page.

- Finally click the Console Output link in the left menu.

-

Scroll to the bottom of the console output of the job to see instructions for accessing your environment:

Successfully verified extensions/v1beta1/Deployment: gceme-frontend-dev
AvailableReplicas = 1, MinimumReplicas = 1

[Pipeline] echo
To access your environment run `kubectl proxy`
[Pipeline] echo
Then access your service via http://localhost:8001/api/v1/proxy/namespaces/new-feature/services/gceme-frontend:80/
[Pipeline] }

-

Set up port forwarding to the dev frontend, from Cloud Shell:

export DEV_POD_NAME=$(kubectl get pods -n new-feature -l "app=gceme,env=dev,role=frontend" -o jsonpath="{.items[0].metadata.name}")
kubectl port-forward -n new-feature $DEV_POD_NAME 8001:80 >> /dev/null &

-

Access your application via localhost:

curl http://localhost:8001/api/v1/proxy/namespaces/new-feature/services/gceme-frontend:80/

Output (do not copy):

<!doctype html>
<html>
...
</div>
<div class="col s2"> </div>
</div>
</div>
</html>

Look through the response output for `"card orange"` that was changed earlier.

-

You can now push code changes to the `new-feature` branch in order to update your development environment.

-

Once you are done, merge your `new-feature` branch back into the `canary` branch to deploy that code to the canary environment:

git checkout canary
git merge new-feature
git push origin canary

-

When you are confident that your code won't wreak havoc in production, merge from the `canary` branch to the `master` branch. Your code will be automatically rolled out in the production environment:

git checkout master
git merge canary
git push origin master

-

When you are done with your development branch, delete it from Cloud Source Repositories, then delete the environment in Kubernetes:

git push origin :new-feature
kubectl delete ns new-feature

Make a breaking change to the gceme source, push it, and deploy it through the pipeline to production. Then pretend latency spiked after the deployment and you want to roll back. Do it! Faster!

Things to consider:

- What is the Docker image you want to deploy for roll back?

- How can you interact directly with Kubernetes to trigger the deployment?

- Is SRE really what you want to do with your life?

Clean up is really easy, but also super important: if you don't follow these instructions, you will continue to be billed for the GKE cluster you created.

To clean up, navigate to the Google Developers Console Project List, choose the project you created for this lab, and delete it. That's it.