Stereo Anywhere: Robust Zero-Shot Deep Stereo Matching Even Where Either Stereo or Mono Fail (ArXiv)

🚨 This repository will contain download links to the code and trained deep stereo models for our work "Stereo Anywhere: Robust Zero-Shot Deep Stereo Matching Even Where Either Stereo or Mono Fail", ArXiv

by Luca Bartolomei (1,2), Fabio Tosi (2), Matteo Poggi (1,2), and Stefano Mattoccia (1,2)

(1) Advanced Research Center on Electronic System (ARCES), (2) University of Bologna

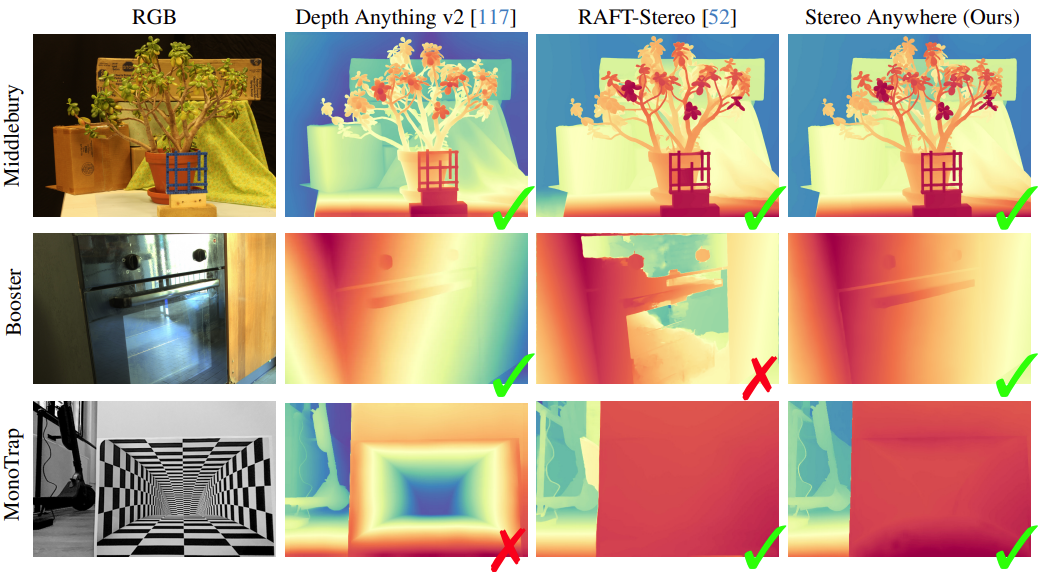

Stereo Anywhere: Combining Monocular and Stereo Strengths for Robust Depth Estimation. Our model achieves accurate results under standard conditions (on Middlebury), while effectively handling non-Lambertian surfaces where stereo networks fail (on Booster) and perspective illusions that deceive monocular depth foundation models (on MonoTrap, our novel dataset).

Note: 🚧 This repository is currently under development. We are actively working to add and refine features and documentation. We apologize for any inconvenience caused by incomplete or missing elements and appreciate your patience as we work towards completion.

We introduce Stereo Anywhere, a novel stereo-matching framework that combines geometric constraints with robust priors from monocular depth Vision Foundation Models (VFMs). By elegantly coupling these complementary worlds through a dual-branch architecture, we seamlessly integrate stereo matching with learned contextual cues. Following this design, our framework introduces novel cost volume fusion mechanisms that effectively handle critical challenges such as textureless regions, occlusions, and non-Lambertian surfaces. Through our novel optical illusion dataset, MonoTrap, and extensive evaluation across multiple benchmarks, we demonstrate that our synthetic-only trained model achieves state-of-the-art results in zero-shot generalization, significantly outperforming existing solutions while showing remarkable robustness to challenging cases such as mirrors and transparencies.
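To make the term "cost volume" concrete, here is a minimal NumPy sketch of an all-pairs correlation volume computed along image rows, in the style popularized by RAFT-Stereo. It is not the Stereo Anywhere architecture or its fusion mechanism, which are described in the paper; the function names `correlation_volume` and `winner_takes_all_disparity` are hypothetical and chosen only for illustration. The sketch assumes a rectified stereo pair, so matches lie on the same row and disparity is `x_left - x_right`.

```python
import numpy as np


def correlation_volume(feat_left: np.ndarray, feat_right: np.ndarray) -> np.ndarray:
    """All-pairs correlation along each row of a rectified stereo pair.

    feat_left, feat_right: feature maps of shape (H, W, C).
    Returns an array of shape (H, W, W) where out[y, x_l, x_r] is the scaled
    dot product between the left feature at (y, x_l) and the right feature
    at (y, x_r).
    """
    if feat_left.shape != feat_right.shape:
        raise ValueError("left and right feature maps must share the same shape")
    channels = feat_left.shape[-1]
    corr = np.einsum("ylc,yrc->ylr", feat_left, feat_right)
    return corr / np.sqrt(channels)


def winner_takes_all_disparity(corr: np.ndarray) -> np.ndarray:
    """Pick the best-matching right column per left pixel and return disparity.

    Candidates with x_right > x_left would give a negative disparity, so they
    are masked out before taking the argmax.
    """
    _, width, _ = corr.shape
    x_left = np.arange(width)[:, None]
    x_right = np.arange(width)[None, :]
    valid = x_right <= x_left  # shape (W, W)
    masked = np.where(valid[None], corr, -np.inf)
    best_right = masked.argmax(axis=-1)  # shape (H, W)
    return np.arange(width)[None, :] - best_right
```

Winner-takes-all matching of this kind is exactly what breaks down on textureless or reflective regions, where many candidates along the row score alike. That failure mode is the motivation for bringing in monocular priors.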

Contributions:

- A novel deep stereo architecture leveraging monocular depth VFMs to achieve strong generalization capabilities and robustness to challenging conditions.

- Novel data augmentation strategies designed to enhance the robustness of our model to textureless regions and non-Lambertian surfaces.

- A challenging dataset of optical illusions, which are particularly difficult for monocular depth estimation with VFMs.

- Extensive experiments showing Stereo Anywhere's superior generalization and robustness to conditions critical for either stereo or monocular approaches.

🖋️ If you find this code useful in your research, please cite:

@article{bartolomei2024stereo,
  title={Stereo Anywhere: Robust Zero-Shot Deep Stereo Matching Even Where Either Stereo or Mono Fail},
  author={Bartolomei, Luca and Tosi, Fabio and Poggi, Matteo and Mattoccia, Stefano},
  journal={arXiv preprint arXiv:2412.04472},
  year={2024},
}

Here, you will be able to download the weights of our proposal trained on SceneFlow.

The download link will be released soon.

Details about training and testing scripts will be released soon.

We used the SceneFlow dataset for training and eight datasets for evaluation.

Specifically, we evaluate our proposal and competitors using:

- five indoor/outdoor datasets: Middlebury 2014, Middlebury 2021, ETH3D, KITTI 2012, KITTI 2015;

- two datasets containing non-Lambertian surfaces: Booster and LayeredFlow;

- and finally MonoTrap, our novel stereo dataset specifically designed to challenge monocular depth estimation.

Details about datasets will be released soon.

We will provide further information on training Stereo Anywhere soon.

We will provide further information on testing Stereo Anywhere soon.

In this section, we present illustrative examples that demonstrate the effectiveness of our proposal.

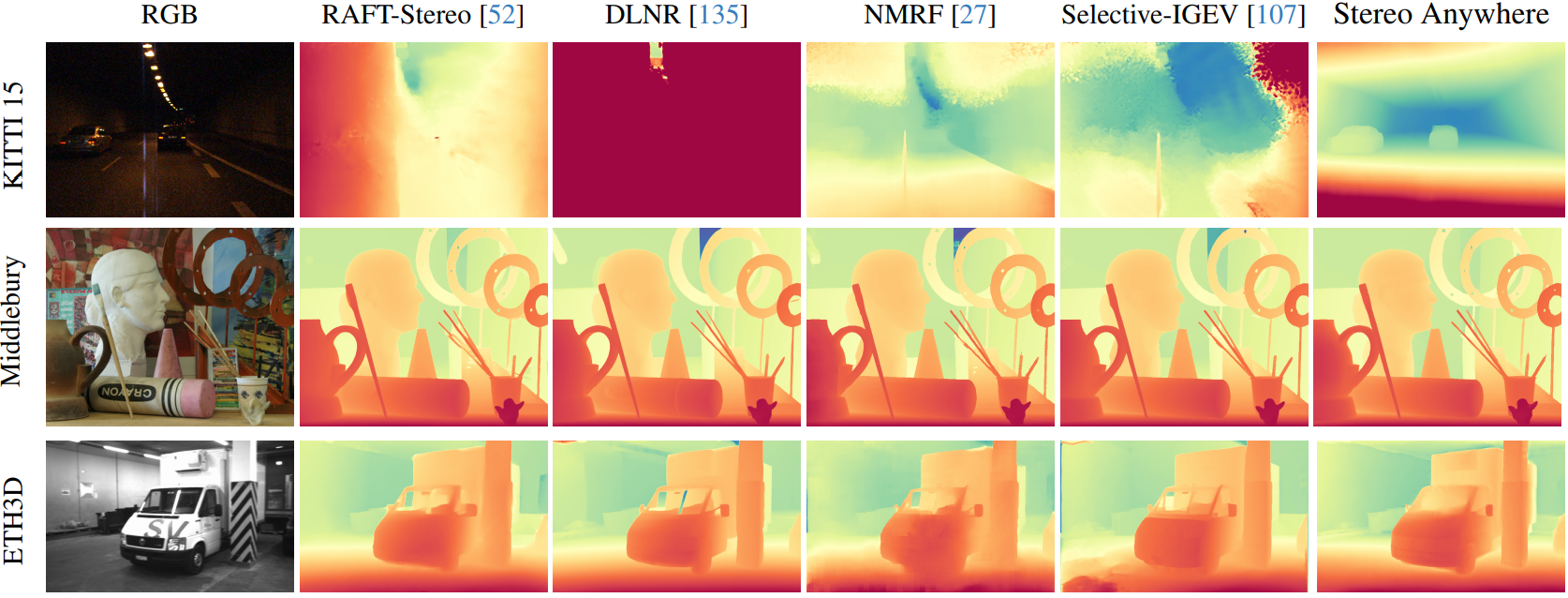

Qualitative results -- Zero-Shot Generalization. Predictions by state-of-the-art models and Stereo Anywhere. In particular, the first row shows an extremely challenging case for SceneFlow-trained models, where Stereo Anywhere achieves accurate disparity maps thanks to VFM priors.

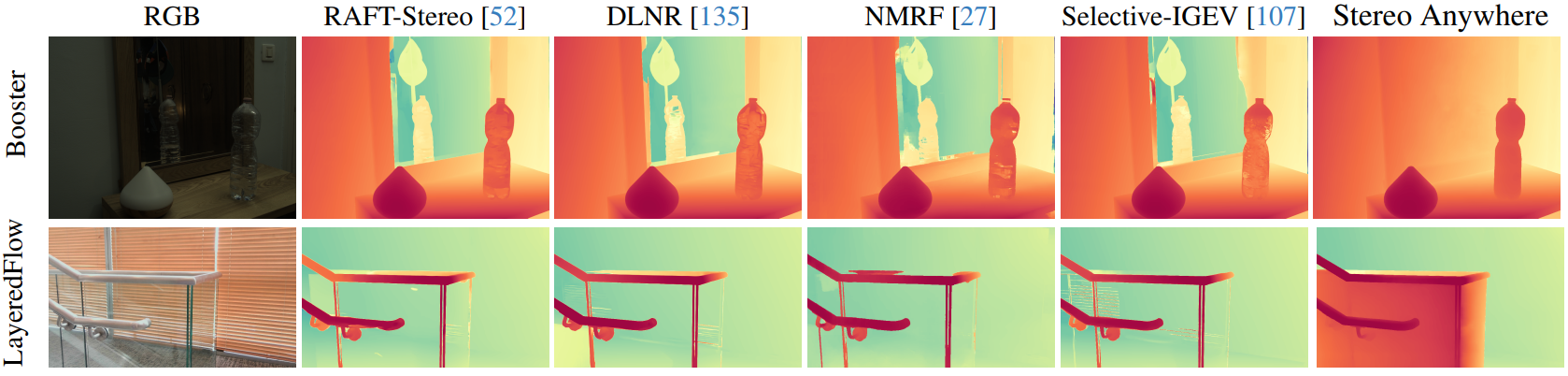

Qualitative results -- Zero-Shot non-Lambertian Generalization. Predictions by state-of-the-art models and Stereo Anywhere. Our proposal is the only stereo model that correctly perceives the mirror and the transparent railing.

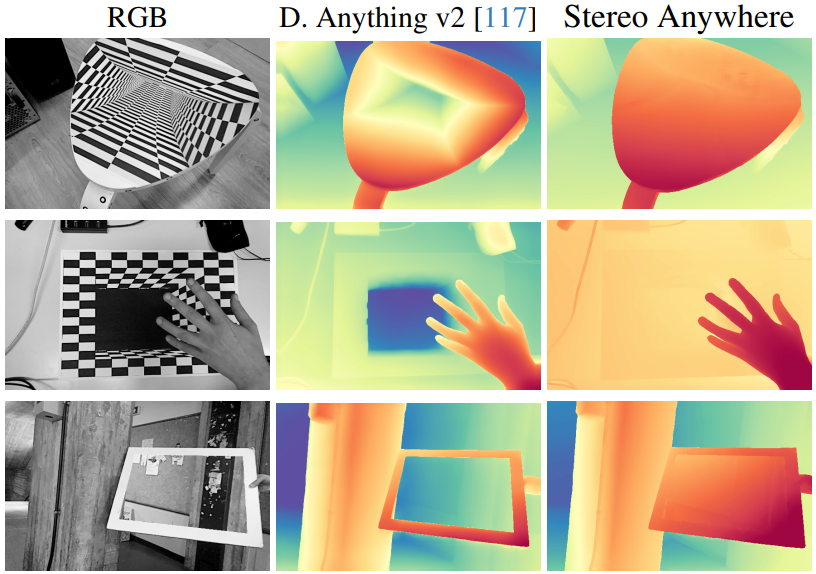

Qualitative results -- MonoTrap. The figure shows three samples where Depth Anything V2 fails while Stereo Anywhere does not.

For questions, please send an email to luca.bartolomei5@unibo.it

We would like to extend our sincere appreciation to the authors of the following projects for making their code available, which we have utilized in our work:

- We thank the authors of RAFT-Stereo for providing their code, which inspired our stereo matching architecture.

- We also thank the authors of Depth Anything V2 for providing their monocular depth estimation network, which powers Stereo Anywhere.