[Request/Suggestion] Visualization of alignments

Opened this issue · 5 comments

I would like to request a feature.

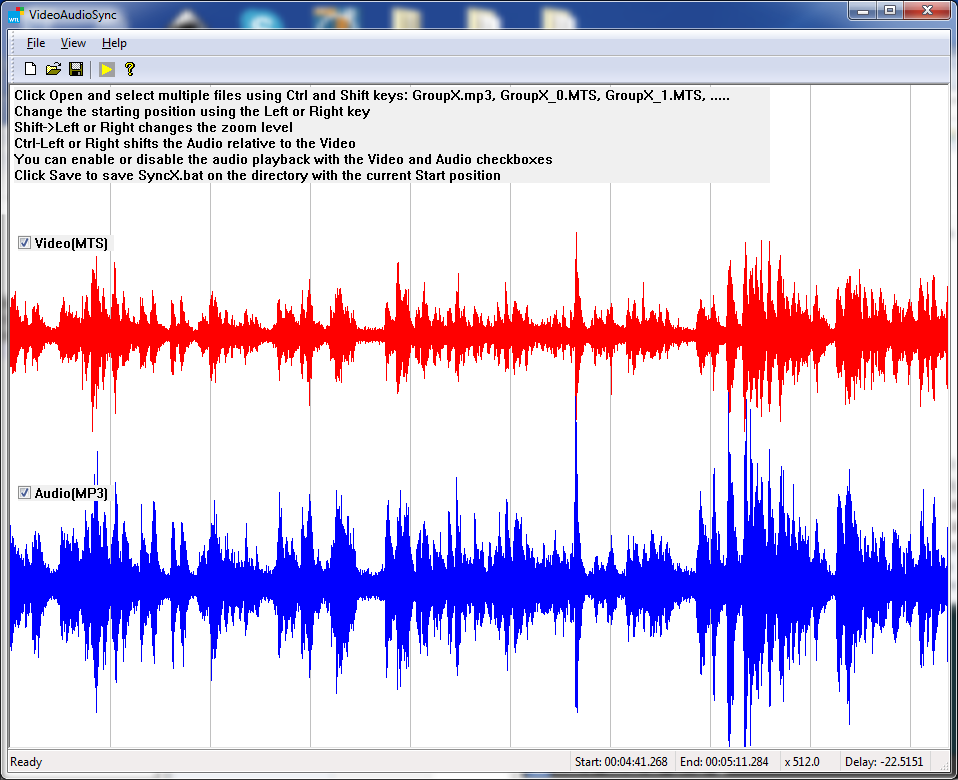

It's nice to be able to easily align various audio files with audalign, but it would also be nice to see how the files differ. When you explained the structure of the match dictionary to me, I posted a screenshot of an offset graph. Something like that, or a graph of waveforms with the matching parts colored, would be nice. I hope you get what I mean :D

Could you please incorporate something like this into audalign?

That's an interesting idea! I'm not entirely sure how that would work, though. Would this feature be included when plot=true is set in the config or the audalign.plot() function is called? Or, would it be a separate function?

Can you share the code you used for the offset graphs or make a PR?

A graph of color-coded waveforms seems neat, too, but it seems like it would be tough to set defaults. Maybe that would be a lot easier to accomplish in a GUI application?

Sorry, I have not really thought about where or how to incorporate this into audalign 😅. I think a separate function would be OK but having this as an extra graph in audalign.plot() would work too I guess.

Here is the code I wrote back then. I used this as a way to learn how to code a GUI application in Python but I'm not feeling very confident about the outcome.

I fingerprint two files and sort the results by x_val (time) in the fingerprint function. The plot is created in the '-FINGERPRINTING DONE-' branch of the event loop (line 135 in my script). It uses a cursor (mplcursors) to show the actual time and offset.

```python
import os
import threading

import audalign
import matplotlib
import matplotlib.dates as mdates
import matplotlib.pyplot as plt
import matplotlib.ticker
import mplcursors
import pandas as pd
import PySimpleGUI as sg

# initialize audalign
ada = audalign.Audalign()


def fingerprint(orignal_filename, new_filename, window):
    # fingerprint files
    ada.fingerprint_file(orignal_filename)
    ada.fingerprint_file(new_filename)

    # recognize the original file against the stored fingerprints
    result = ada.recognize(orignal_filename, filter_matches=50, locality=5, locality_filter_prop=0.1)
    _, recognisefilename = os.path.split(new_filename)

    if result is not None:
        match = result['match_info'][recognisefilename]
        x_val = []
        y_val = []
        # add up each offset found and sort by x_val (time)
        for offset, localities in zip(match['offset_seconds'], match['locality_seconds']):
            for locality_seconds in localities:
                x_val.append(locality_seconds[0])
                y_val.append(offset)
        temp = sorted(zip(x_val, y_val))
        x_val, y_val = zip(*temp)
        # convert x_val to datetime
        x_val = pd.to_datetime(x_val, unit='s')
        window.write_event_value('-FINGERPRINTING DONE-', (x_val, y_val))
    else:
        window.write_event_value('-FINGERPRINTING DONE-', (None, None))


def convert_to_audio(filename, threadname, window):
    name, extension = os.path.splitext(filename)
    ada.convert_audio_file(filename, name + ".mp3")
    window.write_event_value('-CONVERTING DONE-', threadname)


if __name__ == '__main__':
    # set window theme
    sg.theme("Default1")

    # create ui layout
    layout = [[sg.Text('Choose original file:')],
              [sg.In(size=(102, 1), key='-ORIGFILE-', visible=True, enable_events=True),
               sg.FileBrowse('Browse', file_types=(('video file', '*.mp4 *.avi *.mkv *.flv *.mov'), ('audio file', '*.mp3 *.wav'), ('all files', '*.*')), key='-ORIGFILE_BTN-')],
              [sg.Text('Choose new file:')],
              [sg.In(size=(102, 1), key='-NEWFILE-', visible=True, enable_events=True),
               sg.FileBrowse('Browse', file_types=(('video file', '*.mp4 *.avi *.mkv *.flv *.mov'), ('audio file', '*.mp3 *.wav'), ('all files', '*.*')), key='-NEWFILE_BTN-')],
              [sg.Button('Go'), sg.Button('Clear')],
              [sg.Text('⚫', text_color='grey', key="ORIGFILECONVERTTHREAD", pad=(0, 0), font='Default 14'),
               sg.Text('⚫', text_color='grey', key="NEWFILECONVERTTHREAD", pad=(0, 0), font='Default 14'),
               sg.Text('⚫', text_color='grey', key="FINGERPRINTTHREAD", pad=(0, 0), font='Default 14')]
              ]

    # window = sg.Window('Offset Graph', layout, finalize=True)
    window = sg.Window('Offset Graph', layout)

    orignal_fingerprint_file_converted = False
    new_fingerprint_file_converted = False
    orignal_fingerprint_file = ""
    new_fingerprint_file = ""

    # --------------------- EVENT LOOP ---------------------
    while True:
        event, values = window.read()
        if event == sg.WIN_CLOSED or event == 'Exit':
            break

        if event == 'Go':
            if values["-ORIGFILE-"] != "" and values["-NEWFILE-"] != "" and values["-ORIGFILE-"] != values["-NEWFILE-"]:
                window['-ORIGFILE-'].update(disabled=True)
                window['-ORIGFILE_BTN-'].update(disabled=True)
                window['-NEWFILE-'].update(disabled=True)
                window['-NEWFILE_BTN-'].update(disabled=True)

                orignal_fingerprint_file = values["-ORIGFILE-"]
                # convert original file if needed
                original_filename, original_file_extension = os.path.splitext(values["-ORIGFILE-"])
                if original_file_extension not in (".wav", ".mp3"):
                    print("Original file is not an audio file. Converting to mp3...")
                    window["ORIGFILECONVERTTHREAD"].update(text_color='red')
                    thread_file1 = threading.Thread(target=convert_to_audio, args=(orignal_fingerprint_file, "ORIGFILECONVERTTHREAD", window,), daemon=True)
                    thread_file1.start()
                    orignal_fingerprint_file = original_filename + ".mp3"
                else:
                    window["ORIGFILECONVERTTHREAD"].update(text_color='green')
                    orignal_fingerprint_file_converted = True
                print("Original file to fingerprint: " + orignal_fingerprint_file)

                new_fingerprint_file = values["-NEWFILE-"]
                # convert new file if needed
                newfile_filename, newfile_file_extension = os.path.splitext(values["-NEWFILE-"])
                if newfile_file_extension not in (".wav", ".mp3"):
                    print("New file is not an audio file. Converting to mp3...")
                    window["NEWFILECONVERTTHREAD"].update(text_color='red')
                    thread_file2 = threading.Thread(target=convert_to_audio, args=(new_fingerprint_file, "NEWFILECONVERTTHREAD", window,), daemon=True)
                    thread_file2.start()
                    new_fingerprint_file = newfile_filename + ".mp3"
                else:
                    window["NEWFILECONVERTTHREAD"].update(text_color='green')
                    new_fingerprint_file_converted = True
                print("New file to fingerprint: " + new_fingerprint_file)

                # start fingerprinting if files are audio files
                if orignal_fingerprint_file_converted and new_fingerprint_file_converted:
                    print("No conversion necessary")
                    # fingerprint and recognize file
                    window["FINGERPRINTTHREAD"].update(text_color='red')
                    thread_fingerprint = threading.Thread(target=fingerprint, args=(orignal_fingerprint_file, new_fingerprint_file, window,), daemon=True)
                    thread_fingerprint.start()
            else:
                sg.popup('Choose (different) files first!')

        elif event == '-CONVERTING DONE-':
            threadname = values[event]
            window[threadname].update(text_color='green')
            if threadname == "ORIGFILECONVERTTHREAD":
                orignal_fingerprint_file_converted = True
            if threadname == "NEWFILECONVERTTHREAD":
                new_fingerprint_file_converted = True
            if orignal_fingerprint_file_converted and new_fingerprint_file_converted:
                # fingerprint and recognize file
                window["FINGERPRINTTHREAD"].update(text_color='red')
                thread_fingerprint = threading.Thread(target=fingerprint, args=(orignal_fingerprint_file, new_fingerprint_file, window,), daemon=True)
                thread_fingerprint.start()

        elif event == '-FINGERPRINTING DONE-':
            x_val, y_val = values[event]
            window["FINGERPRINTTHREAD"].update(text_color='green')
            print("Fingerprinting done")
            # plot the results if there are any x/y values
            # (x_val is None when recognize found nothing)
            if x_val is not None and len(x_val) > 0:
                plt.style.use('bmh')
                fig = plt.figure()
                ax = fig.gca()
                ax.set_title("Offsets graph")
                ax.xaxis.set_major_formatter(mdates.DateFormatter("%H:%M:%S"))
                ax.yaxis.set_major_formatter(matplotlib.ticker.FormatStrFormatter('%.3f'))

                # group consecutive points that share the same offset
                groupedlist = []
                templist = []
                last_offset = None
                for timestamp, offset in zip(x_val, y_val):
                    if templist and offset != last_offset:
                        groupedlist.append(templist)
                        templist = []
                    templist.append((timestamp, offset))
                    last_offset = offset
                if templist:
                    # do not drop the last group
                    groupedlist.append(templist)

                # draw one line per group; the marker keeps single-point groups visible
                lines = []
                for group in groupedlist:
                    group_x, group_y = zip(*group)
                    lines.extend(ax.plot(list(group_x), list(group_y), marker='.'))
                fig.autofmt_xdate()

                # add cursor to plot
                cursor = mplcursors.cursor(lines, hover=True)

                def show_annotation(sel):
                    # sel.target holds the data coordinates; x is a matplotlib date number
                    xi, yi = sel.target
                    timestamp = mdates.num2date(xi)
                    sel.annotation.set_text("Time: " + timestamp.strftime("%H:%M:%S") + "\n" + "Offset: " + str('%.3f' % yi))

                cursor.connect('add', show_annotation)

                plt.ylabel("Offset [s]")
                plt.xlabel("Time")
                fig.canvas.manager.set_window_title('')
                plt.tight_layout()
                plt.show(block=False)
            else:
                sg.popup('No matches found!')

        elif event == 'Clear':
            # clear paths
            window['-ORIGFILE-'].update(value='')
            window['-NEWFILE-'].update(value='')
            # enable gui elements again
            window['-ORIGFILE-'].update(disabled=False)
            window['-ORIGFILE_BTN-'].update(disabled=False)
            window['-NEWFILE-'].update(disabled=False)
            window['-NEWFILE_BTN-'].update(disabled=False)
            # reset state so the next run waits for its own conversions
            orignal_fingerprint_file_converted = False
            new_fingerprint_file_converted = False
```

All this is probably not efficient and looks complicated. I was just trying to get it to work and I'm not really into programming.
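The grouping step in the script above (collecting consecutive points that share the same offset) can probably be written more compactly with `itertools.groupby`. This is only a minimal sketch, assuming the points are already sorted by time and come as `(time, offset)` tuples; the function name `group_by_offset` and the sample values are made up for illustration and are not part of audalign.

```python
from itertools import groupby


def group_by_offset(points):
    """Split time-sorted (time, offset) pairs into runs of equal offset."""
    # groupby only merges neighbouring items, so an offset that shows up
    # again later starts a new run instead of joining the earlier one.
    return [list(run) for _, run in groupby(points, key=lambda p: p[1])]


# Illustrative values only, not real audalign output.
points = [(0.0, 1.5), (1.0, 1.5), (2.0, 3.0), (3.0, 3.0), (4.0, 1.5)]
groups = group_by_offset(points)
for group in groups:
    print(group)
```

Each run could then be passed to `ax.plot` separately, so every stretch with a constant offset becomes its own line.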

About the color-coded waveforms: I'm always in awe when I see such nice-looking plots in an application, like the one in your readme GIF, but I think matplotlib alone could also work, even though it's not that simple to handle. It seems like one can color parts of a line or add bars to a plot. An image search for "matplotlib waveforms colored parts" shows some nice plots 😁

Something like this or this, but with two or more waveforms whose similar parts are marked with semitransparent bars, would be ideal, I think.
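To make the idea a bit more concrete, here is a rough matplotlib-only sketch of stacked waveforms with semitransparent bars over the matching parts, drawn with `Axes.axvspan`. Everything in it is an assumption for illustration: the function name `plot_matched_waveforms`, the synthetic signals, and the hand-written match ranges. It does not read anything from audalign; in practice the ranges would have to be derived from the alignment results.

```python
import matplotlib.pyplot as plt
import numpy as np


def plot_matched_waveforms(waveforms, sample_rate, matches):
    """Plot one waveform per row and shade the matched time ranges.

    waveforms:   list of 1-D numpy arrays (mono samples)
    sample_rate: samples per second, shared by all waveforms
    matches:     one list of (start_s, end_s) tuples per waveform
    """
    fig, axes = plt.subplots(len(waveforms), 1, sharex=True, squeeze=False)
    for ax, samples, spans in zip(axes[:, 0], waveforms, matches):
        times = np.arange(len(samples)) / sample_rate
        ax.plot(times, samples, linewidth=0.5)
        for start, end in spans:
            # semitransparent bar over the part that matches
            ax.axvspan(start, end, color="tab:green", alpha=0.3)
        ax.set_ylabel("Amplitude")
    axes[-1, 0].set_xlabel("Time [s]")
    fig.tight_layout()
    return fig


# Synthetic example: the second signal is the first one delayed by 0.5 s.
rate = 1000
t = np.arange(2 * rate) / rate
first = np.sin(2 * np.pi * 5 * t) * np.exp(-t)
second = np.concatenate([np.zeros(rate // 2), first])[: len(first)]
fig = plot_matched_waveforms([first, second], rate, [[(0.0, 1.5)], [(0.5, 2.0)]])
# Call plt.show() or fig.savefig("matched_waveforms.png") to view the result.
```

The shaded ranges here are shifted by the same 0.5 s as the signals, so the bars line up with the same audio content in both rows.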

I don't think I'm able to code such features so I just made this post hoping for the best. Thank you for having an open ear.

Thanks for the references and code! It looks pretty solid.

That seems like a really neat feature, so I'll try to get it added in.

Hi everyone, just asked @jhpark16 to evaluate possible collaborations...

Here's a screenshot of his Video Audio Sync for FFMPEG tool:

Hope that inspires.

OT

@stephenjolly of @bbc added AudAlign to the Similar Projects section of their audio-offset-finder repo.