Key insight: As water is poured, the fundamental frequency that we hear changes predictably over time as a function of physical properties (e.g., container dimensions).

TL;DR: We present a method to infer physical properties of liquids from just the sound of pouring. We show in theory how pitch can be used to derive various physical properties such as container height, flow rate, etc. Then, we train a pitch detection network (wav2vec2) using simulated and real data. The resulting model can predict the physical properties of pouring liquids with high accuracy. The latent representations learned also encode information about liquid mass and container shape.
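To make the link between pitch and physical properties concrete, here is a minimal sketch of a forward model. It assumes the textbook quarter-wavelength resonator approximation for an air column that is closed at the liquid surface and open at the top, a cylindrical container, and a constant flow rate. The function name `pouring_pitch`, the constants, and the example dimensions are illustrative assumptions, not taken from the paper or this repository.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed)
END_CORRECTION = 0.62   # textbook open-end correction factor (assumed)


def pouring_pitch(t, height, radius, flow_rate):
    """Fundamental frequency (Hz) of the air column at time t (s).

    Assumes an initially empty cylinder (height, radius in metres)
    filled at a constant volumetric flow rate (m^3/s).
    """
    t = np.asarray(t, dtype=float)
    # Liquid level rises linearly in a cylinder under constant flow.
    liquid_level = flow_rate * t / (np.pi * radius**2)
    # Remaining air column, clipped at zero once the container is full.
    air_column = np.clip(height - liquid_level, 0.0, None)
    # Quarter-wavelength resonator with an end correction at the opening.
    return SPEED_OF_SOUND / (4.0 * (air_column + END_CORRECTION * radius))


# Hypothetical container: 15 cm tall, 3 cm radius, filled at 20 mL/s.
height, radius, flow_rate = 0.15, 0.03, 2e-5
fill_time = np.pi * radius**2 * height / flow_rate
times = np.linspace(0.0, fill_time, 5)
pitches = pouring_pitch(times, height, radius, flow_rate)
print(np.round(pitches, 1))
```

Under these assumptions the pitch rises monotonically as the air column shortens, which is the predictable change the method exploits.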

- We train a wav2vec2 model to estimate the pitch of pouring water. We use supervision from simulated data and fine-tune on real data using visual co-supervision.

- We show physical property estimation from pitch. For example, we achieve a mean absolute error of 2.2 cm in estimating the height of the container, 1.6 cm in estimating its radius, and 0.6 cm in estimating the length of the air column.

- We show strong generalisation to other datasets (e.g., Wilson et al.) and some videos from YouTube.

- We also show that the learned representations can be regressed to estimate the mass of the liquid and the shape of the container.

- We release a clean dataset of 805 videos of water pouring with annotations for physical properties.
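As a rough illustration of how an estimated pitch could be turned into an air-column length and scored with mean absolute error, the sketch below inverts the textbook quarter-wavelength resonator formula. This is an assumption made for illustration; the paper's actual estimation procedure may differ, and the helper names, constants, and the 2% pitch error are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed)
END_CORRECTION = 0.62   # textbook open-end correction factor (assumed)


def air_column_length(pitch_hz, radius):
    """Invert f = c / (4 (l + beta * R)) to recover the air-column length l (m)."""
    pitch_hz = np.asarray(pitch_hz, dtype=float)
    return SPEED_OF_SOUND / (4.0 * pitch_hz) - END_CORRECTION * radius


def mean_absolute_error(pred, target):
    """Mean absolute difference between two equally shaped arrays."""
    return float(np.mean(np.abs(np.asarray(pred) - np.asarray(target))))


# Hypothetical ground-truth air-column lengths (m) for a 3 cm radius cylinder.
radius = 0.03
true_lengths = np.array([0.12, 0.08, 0.04])
true_pitch = SPEED_OF_SOUND / (4.0 * (true_lengths + END_CORRECTION * radius))

# Pretend the pitch detector over-estimates every frame by 2%.
predicted_pitch = 1.02 * true_pitch
estimated_lengths = air_column_length(predicted_pitch, radius)

mae_cm = 100.0 * mean_absolute_error(estimated_lengths, true_lengths)
print(f"MAE of air-column length: {mae_cm:.2f} cm")
```

The example only shows how pitch errors propagate to length errors under this simple model; it does not reproduce the numbers reported above.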

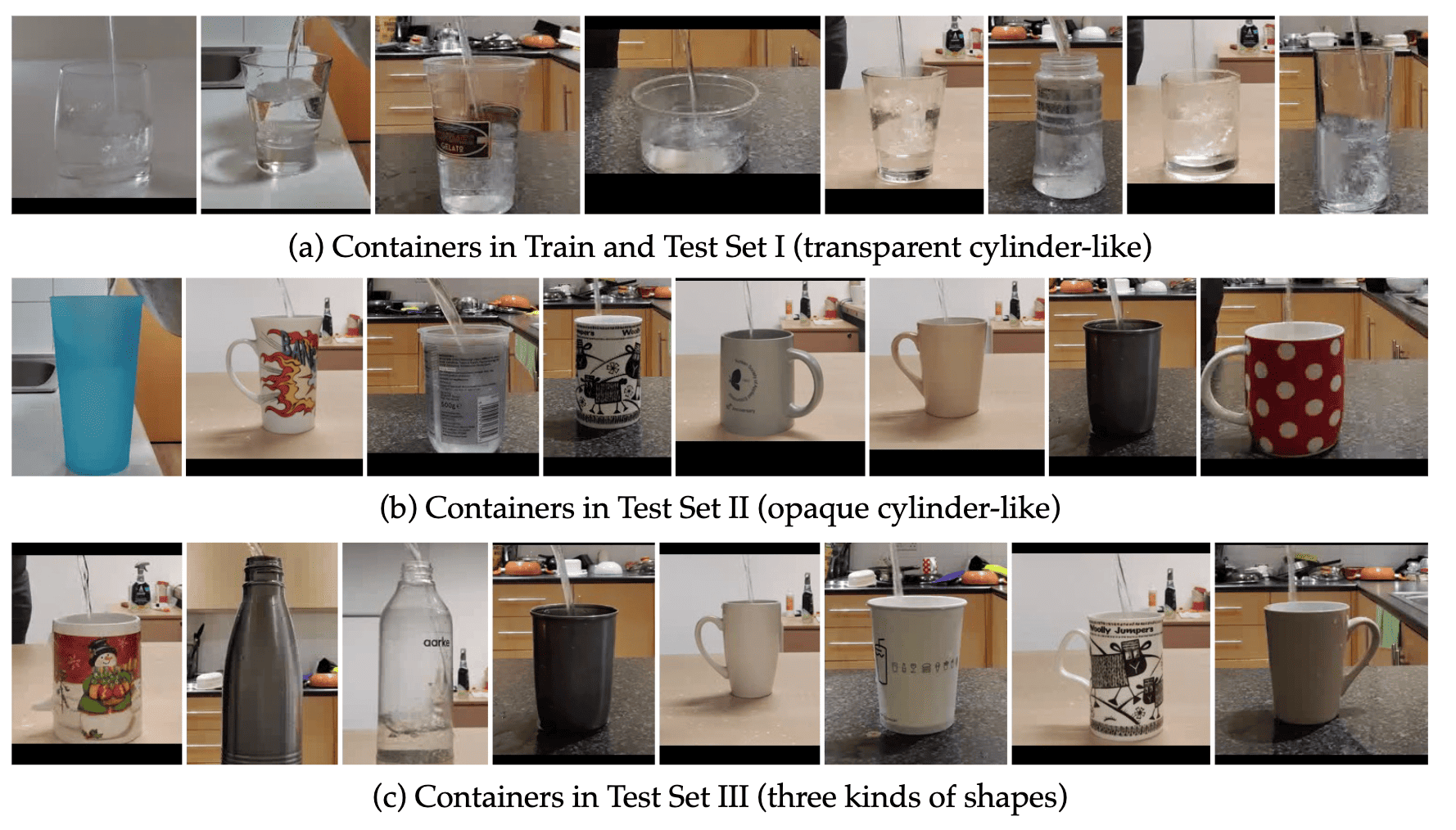

We collect a dataset of 805 clean videos that show the action of pouring water into a container. Our dataset spans over 50 unique containers made of 5 different materials and in 4 different shapes, filled with both hot and cold water. Some example containers are shown below.

The dataset is available to download here.

Option 1: Download from Hugging Face

    # Note: this should take 5-10 minutes.
    import os

    # Optionally, disable progress bars (environment values must be strings)
    # os.environ["HF_HUB_DISABLE_PROGRESS_BARS"] = "1"

    from huggingface_hub import snapshot_download

    snapshot_download(
        repo_id="bpiyush/sound-of-water",
        repo_type="dataset",
        local_dir="/path/to/dataset/SoundOfWater",
    )

The total size of the dataset is 1.4 GB.

Option 2: Download from VGG servers

Coming soon!
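Once the download has finished, a quick inventory of the files can confirm that it completed. The sketch below only counts files by extension and makes no assumption about the dataset's internal layout; `summarise_files` is a hypothetical helper and the path is the placeholder used in the download snippet.

```python
from collections import Counter
from pathlib import Path


def summarise_files(root):
    """Count files under `root` (recursively) by lowercase extension."""
    root = Path(root)
    counts = Counter(
        p.suffix.lower() or "<no extension>"
        for p in root.rglob("*")
        if p.is_file()
    )
    return dict(counts)


if __name__ == "__main__":
    # Placeholder path: replace with the local_dir used for the download.
    dataset_dir = Path("/path/to/dataset/SoundOfWater")
    if dataset_dir.is_dir():
        for ext, n in sorted(summarise_files(dataset_dir).items()):
            print(f"{ext}: {n}")
    else:
        print(f"{dataset_dir} not found; download the dataset first.")
```

Comparing the number of video files against the 805 videos stated above is a simple sanity check.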

We provide trained models for pitch estimation.

| File link | Description | Size |
|---|---|---|
| synthetic_pretrained.pth | Pre-trained on synthetic data | 361M |
| real_finetuned_visual_cosupervision.pth | Trained with visual co-supervision | 361M |

The models are available to download here.

Option 1: Download from Hugging Face. Use this snippet to download the models:

    from huggingface_hub import snapshot_download

    snapshot_download(
        repo_id="bpiyush/sound-of-water-models",
        local_dir="/path/to/download/",
    )

Option 2: Download from VGG servers

Coming soon!

We provide a single notebook to run the model and visualise results. We walk you through the following steps:

- Load data

- Demo the physics behind pouring water

- Load and run the model

- Visualise the results

Before running the notebook, be sure to install the required dependencies:

    conda create -n sow python=3.8
    conda activate sow

    # Install desired torch version
    # NOTE: change the version if you are using a different CUDA version
    pip install torch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 --index-url https://download.pytorch.org/whl/cu121

    # Additional packages
    pip install lightning==2.1.2
    pip install timm==0.9.10
    pip install pandas
    pip install decord==0.6.0
    pip install librosa==0.10.1
    pip install einops==0.7.0
    pip install ipywidgets jupyterlab seaborn

    # If you find a package is missing, please install it with pip

Remember to download the models as described in the previous step. Then, run the notebook.
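Before opening the notebook, it can help to confirm that the pinned packages are actually installed in the active environment. The following is a minimal sketch using only the standard library; the `PINNED` dictionary mirrors the versions in the install commands, and `check_versions` is a hypothetical helper, not part of this repository.

```python
from importlib import metadata

# Versions copied from the install commands in this README.
PINNED = {
    "torch": "2.1.0",
    "torchvision": "0.16.0",
    "torchaudio": "2.1.0",
    "lightning": "2.1.2",
    "timm": "0.9.10",
    "decord": "0.6.0",
    "librosa": "0.10.1",
    "einops": "0.7.0",
}


def check_versions(pinned):
    """Return {package: (expected, installed_or_None)} for every mismatch."""
    problems = {}
    for name, expected in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        # Ignore local build tags such as "+cu121" when comparing.
        if installed is None or installed.split("+")[0] != expected:
            problems[name] = (expected, installed)
    return problems


if __name__ == "__main__":
    for name, (expected, installed) in check_versions(PINNED).items():
        print(f"{name}: expected {expected}, found {installed or 'not installed'}")
```

An empty output means every pinned package matches. A mismatch is not necessarily a problem, for example if you deliberately installed a different torch build for your CUDA version.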

You can check out the demo here.

We show key results in this section. Please refer to the paper for more details.

If you find this repository useful, please consider giving it a star ⭐ and citing our work:

    @article{sound_of_water_bagad,
        title={The Sound of Water: Inferring Physical Properties from Pouring Liquids},
        author={Bagad, Piyush and Tapaswi, Makarand and Snoek, Cees G. M. and Zisserman, Andrew},
        journal={arXiv},
        year={2024}
    }

- We thank Ashish Thandavan for support with infrastructure and Sindhu Hegde, Ragav Sachdeva, Jaesung Huh, Vladimir Iashin, Prajwal KR, and Aditya Singh for useful discussions.

- This research is funded by EPSRC Programme Grant VisualAI EP/T028572/1, and a Royal Society Research Professorship RP / R1 / 191132.

We also want to highlight closely related work that could be of interest:

- Analyzing Liquid Pouring Sequences via Audio-Visual Neural Networks. IROS (2019).

- Human sensitivity to acoustic information from vessel filling. Journal of Experimental Psychology (2020).

- See the Glass Half Full: Reasoning About Liquid Containers, Their Volume and Content. ICCV (2017).

- CREPE: A Convolutional Representation for Pitch Estimation. ICASSP (2018).