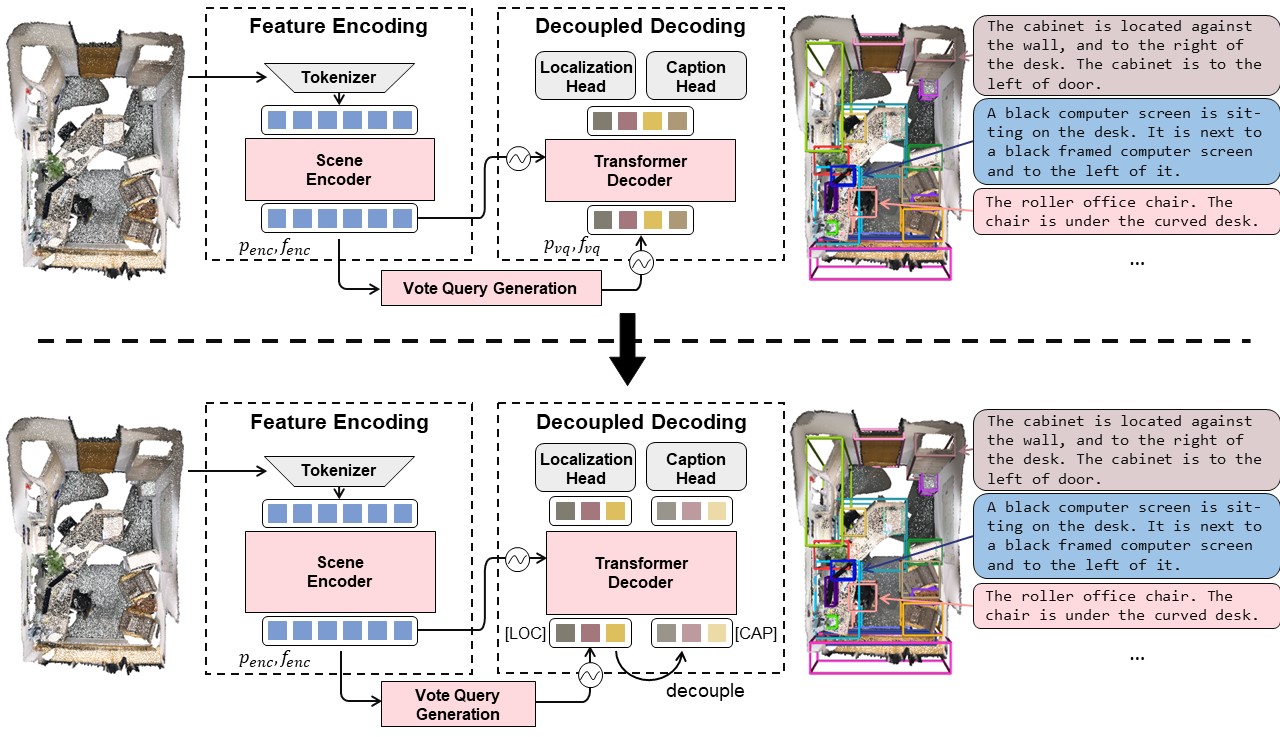

Official implementation of "End-to-End 3D Dense Captioning with Vote2Cap-DETR" (CVPR 2023) and "Vote2Cap-DETR++: Decoupling Localization and Describing for End-to-End 3D Dense Captioning" (T-PAMI 2024).

Thanks to the implementations of 3DETR, Scan2Cap, and VoteNet.

-

2024-04-07. 💥 Our state-of-the-art 3D dense captioning method Vote2Cap-DETR++ is accepted to T-PAMI 2024!

-

2024-02-21. 💥 Code for Vote2Cap-DETR++ is released!

-

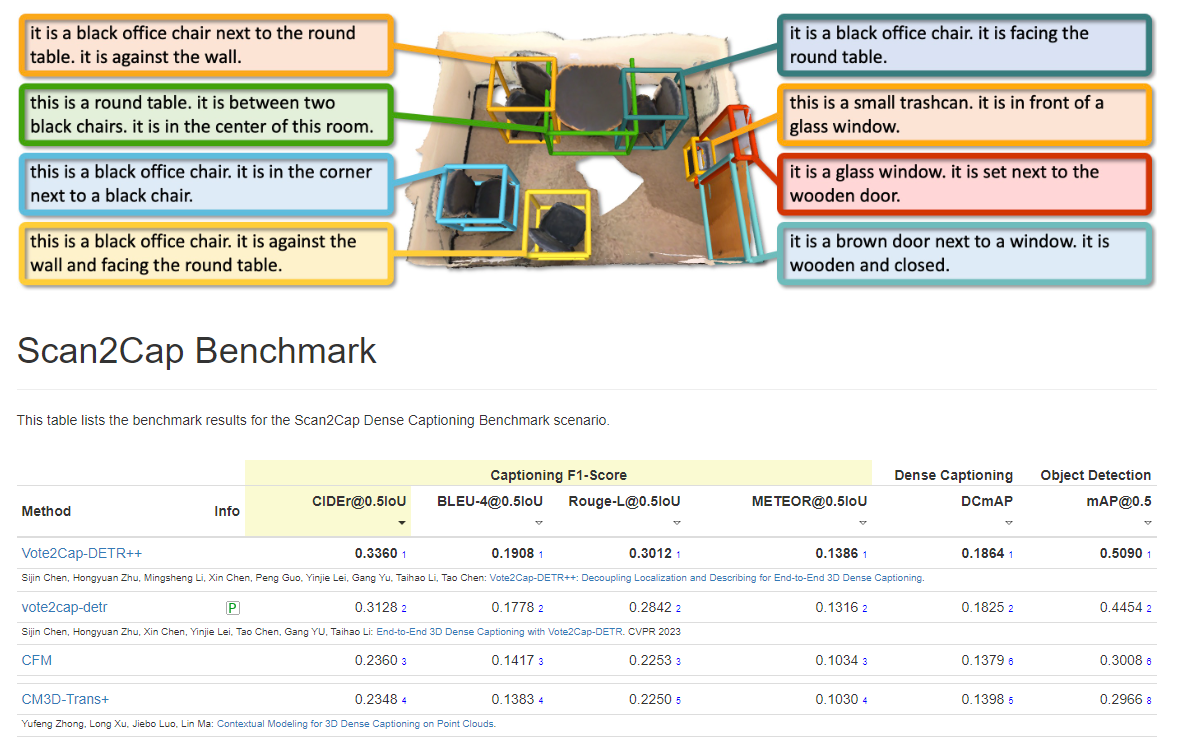

2024-02-20. 🚩 Vote2Cap-DETR++ reaches 1st place on the Scan2Cap online test benchmark.

-

2023-10-06. 🚩 Vote2Cap-DETR wins the Scan2Cap Challenge in the 3rd Language for 3D Scene Workshop at ICCV 2023.

-

2023-09-07. 📃 We further propose an advanced model, Vote2Cap-DETR++, which decouples feature extraction for object localization and caption generation.

-

2022-11-17. 🚩 Our model sets a new state-of-the-art on the Scan2Cap online test benchmark.

Our code is tested with PyTorch 1.7.1, CUDA 11.0 and Python 3.8.13.

Besides PyTorch, this repo also requires the following Python dependencies:

matplotlib

opencv-python

plyfile

'trimesh>=2.35.39,<2.35.40'

'networkx>=2.2,<2.3'

scipy

cython

transformers

If you wish to use the multi-view features extracted by Scan2Cap, you should also install h5py:

pip install h5py
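Before compiling the extensions, it can help to confirm that the packages listed above are importable. The following is a minimal sketch and not part of this repo: the helper name `missing_packages` is hypothetical, and the mapping from pip names to import names (for example, `opencv-python` imports as `cv2`) is an assumption you may need to adjust. It does not check version constraints.

```python
import importlib.util

# pip package name -> import name (assumed mapping; adjust for your setup).
REQUIRED = {
    "torch": "torch",
    "matplotlib": "matplotlib",
    "opencv-python": "cv2",
    "plyfile": "plyfile",
    "trimesh": "trimesh",
    "networkx": "networkx",
    "scipy": "scipy",
    "cython": "Cython",
    "transformers": "transformers",
}


def missing_packages(required=REQUIRED):
    """Return the pip names whose import name cannot be located."""
    return [
        pip_name
        for pip_name, import_name in required.items()
        if importlib.util.find_spec(import_name) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    print("missing:", ", ".join(missing) if missing else "none")
```

To include the optional multi-view dependency, pass a mapping that also contains `"h5py": "h5py"`.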

You are also REQUIRED to compile the CUDA-accelerated PointNet++ and the gIoU support for fast training. Run each pair of commands below from the repository root:

cd third_party/pointnet2

python setup.py install

cd utils

python cython_compile.py build_ext --inplace

Evaluating captioning performance with the METEOR metric also requires Java, so make sure it is installed.
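A quick way to check whether a Java runtime is reachable is sketched below. This is an illustration rather than part of the repo: the helper name `java_version` is hypothetical, and it only assumes that the evaluation code looks for `java` on your `PATH`.

```python
import shutil
import subprocess


def java_version():
    """Return the `java -version` banner, or None if java is not on PATH."""
    java = shutil.which("java")
    if java is None:
        return None
    # Most JDKs print the version banner to stderr rather than stdout.
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    return (result.stderr or result.stdout).strip()


if __name__ == "__main__":
    print(java_version() or "java not found; METEOR evaluation may fail")
```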

We follow Scan2Cap's procedure to prepare datasets under the ./data folder (Scan2CAD NOT required).

Preparing 3D point clouds from ScanNet.

Download the ScanNetV2 dataset, set SCANNET_DIR in data/scannet/batch_load_scannet_data.py to the path of the scans folder, and run the following commands.

cd data/scannet/

python batch_load_scannet_data.py
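If the script finds no data, the usual cause is a SCANNET_DIR that points to the wrong folder. The sketch below lists the scenes found under a given directory. It assumes the standard ScanNet layout, with one sub-directory per scene named like `scene0000_00`; the helper name `list_scenes` is hypothetical and not part of this repo.

```python
import os
import re
import tempfile
from pathlib import Path

# ScanNet scene ids look like scene0000_00 (4-digit scene, 2-digit scan).
SCENE_PATTERN = re.compile(r"^scene\d{4}_\d{2}$")


def list_scenes(scans_dir):
    """Return the sorted scene ids found as sub-directories of `scans_dir`."""
    root = Path(scans_dir)
    return sorted(
        p.name for p in root.iterdir() if p.is_dir() and SCENE_PATTERN.match(p.name)
    )


if __name__ == "__main__":
    # Demo on a throwaway directory; replace with your own scans folder.
    with tempfile.TemporaryDirectory() as demo_dir:
        for name in ("scene0000_00", "scene0001_00", "not_a_scene"):
            os.makedirs(os.path.join(demo_dir, name))
        print(list_scenes(demo_dir))
```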

Preparing Language Annotations.

Please follow the ScanRefer instructions to download the ScanRefer dataset, and put it under ./data.

[Optional] To prepare Nr3D, download the Nr3D dataset and put it under ./data as well.

Since it is distributed in .csv format, run the following command to process the data.

cd data; python parse_nr3d.py
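To give a rough idea of what such a conversion involves, here is a minimal sketch that turns CSV rows into ScanRefer-style records. It is not the repo's `parse_nr3d.py`, which remains the authoritative script. The column names (`scan_id`, `target_id`, `instance_type`, `utterance`), the output keys, and the helper name `nr3d_rows_to_records` are all assumptions made for illustration, and the running `ann_id` is a simplification.

```python
import csv
import io
import json


def nr3d_rows_to_records(csv_file):
    """Convert Nr3D-style CSV rows into a list of ScanRefer-style dicts."""
    records = []
    for ann_id, row in enumerate(csv.DictReader(csv_file)):
        description = row["utterance"].strip().lower()
        records.append(
            {
                "scene_id": row["scan_id"],
                "object_id": str(row["target_id"]),
                "object_name": row["instance_type"],
                "ann_id": str(ann_id),  # simplified: a running index over rows
                "description": description,
                "token": description.split(),  # naive whitespace tokenisation
            }
        )
    return records


if __name__ == "__main__":
    sample = io.StringIO(
        "scan_id,target_id,instance_type,utterance\n"
        "scene0000_00,5,chair,The chair next to the door.\n"
    )
    print(json.dumps(nr3d_rows_to_records(sample), indent=2))
```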

You can download all the ready-to-use weights from Hugging Face.

| Model | SCST | rgb | multi-view | normal | checkpoint |

|---|---|---|---|---|---|

| Vote2Cap-DETR | - | - | [checkpoint] | ||

| Vote2Cap-DETR | - | - | [checkpoint] | ||

| Vote2Cap-DETR | - | [checkpoint] | |||

| Vote2Cap-DETR | - | [checkpoint] | |||

| Vote2Cap-DETR++ | - | - | [checkpoint] | ||

| Vote2Cap-DETR++ | - | - | [checkpoint] | ||

| Vote2Cap-DETR++ | - | [checkpoint] | |||

| Vote2Cap-DETR++ | - | [checkpoint] |

Although we provide commands for training from scratch, you can also start from the pretrained parameters provided under the ./pretrained folder and skip certain steps.

[optional] 4.0 Pre-Training for Detection

You are free to SKIP the following procedures, as they only generate the pre-trained weights in the ./pretrained folder.

To train the Vote2Cap-DETR's detection branch for point cloud input without additional 2D features (aka [xyz + rgb + normal + height]):

bash scripts/vote2cap-detr/train_scannet.sh

# Please also try our updated Vote2Cap-DETR++ model:

bash scripts/vote2cap-detr++/train_scannet.sh

To evaluate the pre-trained detection branch on ScanNet:

bash scripts/vote2cap-detr/eval_scannet.sh

# Our updated Vote2Cap-DETR++:

bash scripts/vote2cap-detr++/eval_scannet.sh

To train with additional 2D features (aka [xyz + multiview + normal + height]) rather than RGB inputs, you can manually replace --use_color with --use_multiview.
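The two input configurations differ only in how many channels each point carries. The sketch below counts them for the bracketed layouts mentioned above. It assumes 128-dimensional multi-view features, as we believe Scan2Cap provides, and 3 channels each for xyz, RGB, and normals plus 1 for height; the helper name `point_channels` is hypothetical, and whether the repo counts xyz as part of its feature dimension is not stated here.

```python
def point_channels(use_color=False, use_multiview=False, multiview_dim=128):
    """Count per-point channels for [xyz + (rgb | multiview) + normal + height]."""
    channels = 3  # xyz coordinates
    channels += 3 if use_color else 0  # RGB
    channels += multiview_dim if use_multiview else 0  # 2D multi-view features (assumed size)
    channels += 3  # surface normal
    channels += 1  # height above the floor
    return channels


if __name__ == "__main__":
    print("rgb input:       ", point_channels(use_color=True))
    print("multi-view input:", point_channels(use_multiview=True))
```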

4.1 MLE Training for 3D Dense Captioning

Please make sure there are pretrained checkpoints under the ./pretrained directory. To train the model for 3D dense captioning with MLE training on ScanRefer:

bash scripts/vote2cap-detr/train_mle_scanrefer.sh

# Our updated Vote2Cap-DETR++:

bash scripts/vote2cap-detr++/train_mle_scanrefer.sh

And on Nr3D:

bash scripts/vote2cap-detr/train_mle_nr3d.sh

# Our updated Vote2Cap-DETR++:

bash scripts/vote2cap-detr++/train_mle_nr3d.sh
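For readers unfamiliar with the term, MLE training here refers to the standard captioning objective: maximise the likelihood of each ground-truth token given the previous ones. The following is a minimal, framework-free sketch of that generic objective for a single caption. It is not taken from the training scripts, and the helper name `mle_caption_loss` is hypothetical.

```python
import math


def mle_caption_loss(token_probs):
    """Average negative log-likelihood of the ground-truth tokens.

    `token_probs[t]` is the probability the model assigns to the t-th
    ground-truth token given the previous ground-truth tokens
    (teacher forcing).
    """
    if not token_probs:
        raise ValueError("caption must contain at least one token")
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


if __name__ == "__main__":
    # A confident model (high probabilities) yields a lower loss.
    print(mle_caption_loss([0.9, 0.8, 0.95]))
    print(mle_caption_loss([0.3, 0.2, 0.25]))
```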

4.2 Self-Critical Sequence Training for 3D Dense Captioning

To train the model with Self-Critical Sequence Training (SCST), you can use the following command:

bash scripts/vote2cap-detr/train_scst_scanrefer.sh

# Our updated Vote2Cap-DETR++:

bash scripts/vote2cap-detr++/train_scst_scanrefer.sh

And on Nr3D:

bash scripts/vote2cap-detr/train_scst_nr3d.sh

# Our updated Vote2Cap-DETR++:

bash scripts/vote2cap-detr++/train_scst_nr3d.sh
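As a reminder of what SCST optimises, the sketch below shows the generic self-critical loss for one sampled caption: the sampled caption's reward is compared against a baseline reward, and the difference weights the caption's log-likelihood. This is the textbook formulation under our own assumptions (a caption metric such as CIDEr as reward, a greedy-decoded baseline, and length-normalised log-probabilities); the repo's scripts may differ in these details, and the helper name `scst_loss` is hypothetical.

```python
def scst_loss(sample_log_probs, sample_reward, baseline_reward):
    """Self-critical loss for one sampled caption.

    sample_log_probs: log-probabilities of the sampled tokens.
    sample_reward:    caption metric (e.g. CIDEr) of the sampled caption.
    baseline_reward:  the same metric for the baseline (e.g. greedy) caption.
    """
    advantage = sample_reward - baseline_reward
    # Length-normalised log-likelihood of the sampled caption.
    mean_log_prob = sum(sample_log_probs) / len(sample_log_probs)
    # Minimising this raises the likelihood of captions that beat the baseline
    # and lowers the likelihood of captions that fall below it.
    return -advantage * mean_log_prob


if __name__ == "__main__":
    print(scst_loss([-1.0, -3.0], sample_reward=0.8, baseline_reward=0.5))
```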

4.3 Evaluating the Weights

You can evaluate any trained model by specifying the model and checkpoint. Change --dataset scene_scanrefer to --dataset scene_nr3d to evaluate the model for the Nr3D dataset.

bash scripts/eval_3d_dense_caption.sh

Run the following command to store the object predictions and captions for each scene.

bash scripts/demo.sh

We also provide inference code for the ScanRefer online test benchmark.

The following command will generate a .json file under the folder defined by --checkpoint_dir.

bash submit.sh
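To inspect the output before uploading, you could load whatever .json files the run produced. The sketch below is an illustration only: the output file name and its schema are not specified here, so it collects every `*.json` file in the directory you pass in. The helper name `load_submission_files` is hypothetical.

```python
import json
import os
import tempfile
from pathlib import Path


def load_submission_files(checkpoint_dir):
    """Map each .json file name in `checkpoint_dir` to its parsed content."""
    results = {}
    for path in sorted(Path(checkpoint_dir).glob("*.json")):
        with path.open("r", encoding="utf-8") as f:
            results[path.name] = json.load(f)
    return results


if __name__ == "__main__":
    # Demo on a throwaway directory; pass your own --checkpoint_dir instead.
    with tempfile.TemporaryDirectory() as demo_dir:
        with open(os.path.join(demo_dir, "example.json"), "w") as f:
            json.dump({"placeholder": True}, f)
        for name, content in load_submission_files(demo_dir).items():
            print(name, type(content).__name__, len(content))
```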

If you find our work helpful, please consider citing our papers:

@inproceedings{chen2023end,

title={End-to-end 3d dense captioning with vote2cap-detr},

author={Chen, Sijin and Zhu, Hongyuan and Chen, Xin and Lei, Yinjie and Yu, Gang and Chen, Tao},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={11124--11133},

year={2023}

}

@article{chen2024vote2cap,

title={Vote2cap-detr++: Decoupling localization and describing for end-to-end 3d dense captioning},

author={Chen, Sijin and Zhu, Hongyuan and Li, Mingsheng and Chen, Xin and Guo, Peng and Lei, Yinjie and Yu, Gang and Li, Taihao and Chen, Tao},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

year={2024},

publisher={IEEE}

}

Vote2Cap-DETR and Vote2Cap-DETR++ are both licensed under the MIT License.

If you have any questions or suggestions regarding this repo, please feel free to open an issue!