Evaluation code for the paper *Densely Connected Search Space for More Flexible Neural Architecture Search*.

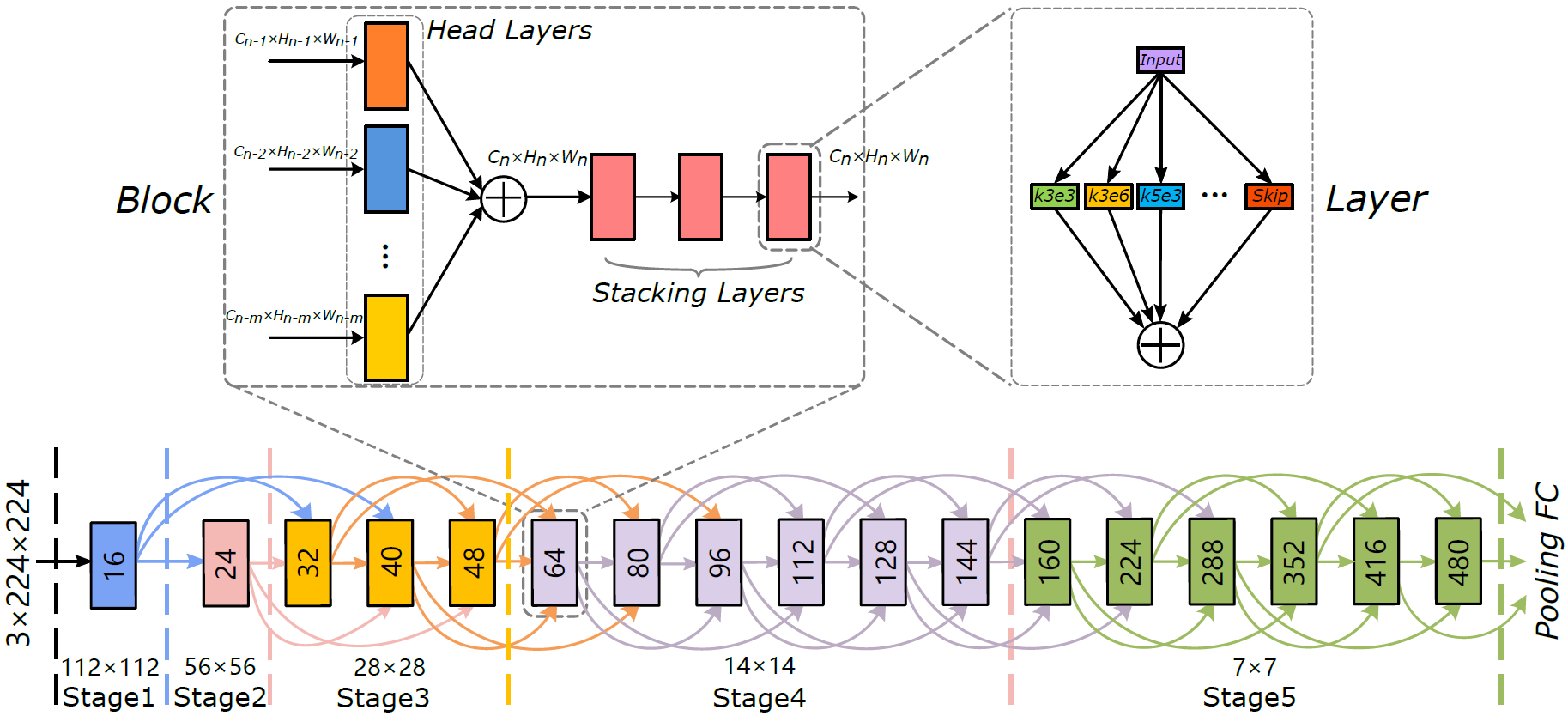

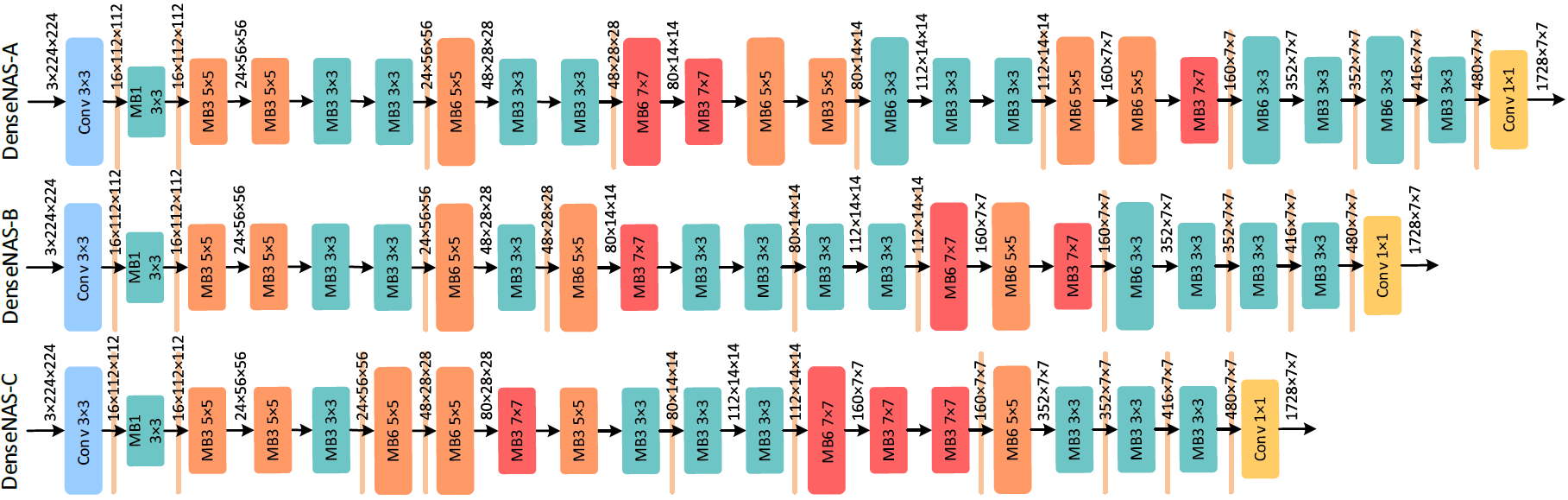

We propose a novel differentiable NAS method that searches for the width and the spatial resolution of each block simultaneously. We achieve this by constructing a densely connected search space, and we name the method DenseNAS. Blocks with different combinations of width and spatial resolution are densely connected to each other, and the best path through the super network is selected by optimizing the transition probabilities between blocks. As a result, the overall depth distribution of the network is optimized globally.
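To make the path-selection idea concrete, the sketch below picks the most probable path through a small densely connected graph of blocks with a Viterbi-style dynamic program. It is an illustration only, not the code in this repository: the function names, the dictionary layout of the logits, and the choice to normalize the probabilities over each block's outgoing connections are all assumptions made for the example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def best_path(out_logits, num_blocks):
    """Return (path, probability) of the most probable path from block 0
    to block num_blocks - 1.

    out_logits[i] maps each successor block j (with j > i) to a learned
    logit. This layout is hypothetical and chosen for illustration.
    """
    # Turn each block's outgoing logits into transition probabilities.
    probs = {}
    for i, succ in out_logits.items():
        targets = sorted(succ)
        p = softmax([succ[j] for j in targets])
        probs[i] = dict(zip(targets, p))

    # Dynamic programming over blocks in topological (index) order.
    best_logp = [-math.inf] * num_blocks
    prev = [None] * num_blocks
    best_logp[0] = 0.0
    for i in range(num_blocks):
        if best_logp[i] == -math.inf:
            continue  # block i is unreachable from block 0
        for j, p in probs.get(i, {}).items():
            cand = best_logp[i] + math.log(p)
            if cand > best_logp[j]:
                best_logp[j] = cand
                prev[j] = i

    if best_logp[-1] == -math.inf:
        raise ValueError("last block is not reachable from block 0")

    # Backtrack from the last block to recover the path.
    path = [num_blocks - 1]
    while path[-1] != 0:
        path.append(prev[path[-1]])
    return path[::-1], math.exp(best_logp[-1])


# Toy super network with 4 blocks; block 0 prefers skipping to block 2.
toy_logits = {
    0: {1: 0.0, 2: 1.0},
    1: {2: 0.0, 3: 0.0},
    2: {3: 0.0},
}
path, prob = best_path(toy_logits, num_blocks=4)
print(path, round(prob, 4))
```

Working in log space keeps the product of many small probabilities from underflowing, and because blocks only connect forward, a single pass in index order is enough.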

- PyTorch 1.0.1
- Python 3.6+

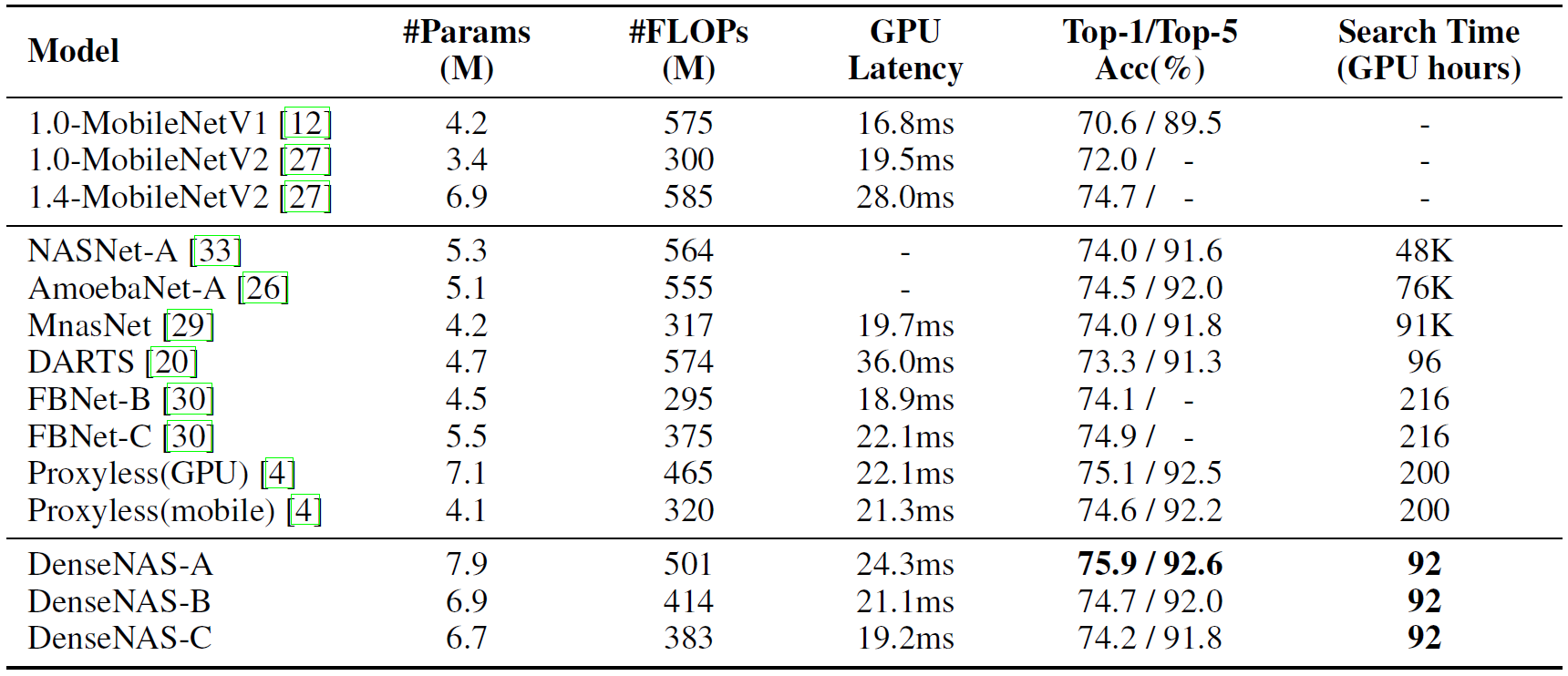

DenseNAS obtains an architecture with 75.9% top-1 accuracy on ImageNet at a latency as low as 24.3 ms on a single TITAN-XP. The total search time is only 23 hours on 4 GPUs. For GPU latency, we measure all models with the same setup: one TITAN-XP with a batch size of 32.
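For readers who want to reproduce a comparable latency number, the following is a minimal timing sketch. It is not the measurement script used for the results above; the helper name `measure_latency_ms`, the warm-up count, and the iteration count are assumptions chosen for illustration.

```python
import time


def measure_latency_ms(run_batch, warmup=10, iters=50, sync=None):
    """Average wall-clock time of run_batch() in milliseconds.

    run_batch: zero-argument callable that performs one forward pass.
    sync: optional zero-argument callable that blocks until queued device
          work has finished (needed for asynchronous GPU execution).
    """
    # Warm-up runs are excluded from timing (caches, lazy initialization).
    for _ in range(warmup):
        run_batch()
    if sync is not None:
        sync()

    start = time.perf_counter()
    for _ in range(iters):
        run_batch()
    if sync is not None:
        sync()  # make sure all timed work has actually completed
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / iters


# CPU-only toy usage; a real measurement would run the network instead.
latency = measure_latency_ms(lambda: sum(range(1000)), warmup=2, iters=5)
print(f"{latency:.4f} ms per call")
```

On a GPU, `run_batch` would typically call the model on a fixed batch of 32 images inside `torch.no_grad()`, and `sync` would be `torch.cuda.synchronize`, since CUDA kernels are launched asynchronously and the timer would otherwise stop too early.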

Our results on ImageNet are shown below.

Our pretrained models can be downloaded from the following:

- Download the related files of the pretrained model and put `net_config` and `weights.pt` into the `model_path`.
- Run the evaluation: `python validation.py --data_path 'The path to ImageNet data' --load_path 'The path you put the pretrained model'`